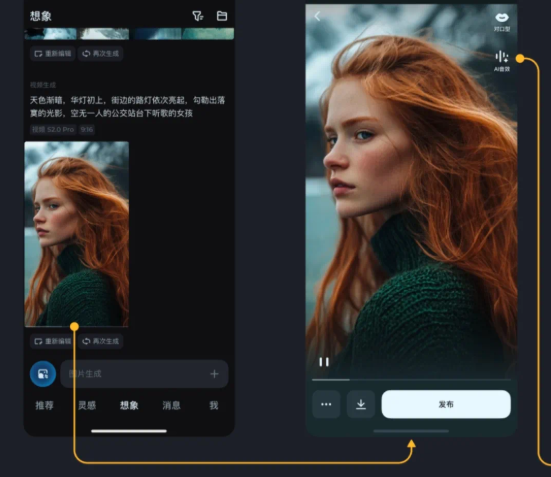

Still struggling to add sound to your short videos? Can never find the right background music? ByteDance has now launched the SeedFoley sound effect generation model, an AI technology aimed squarely at the sound effect problem in video creation. With one simple operation, SeedFoley intelligently matches professional-grade sound effects to your video, turning a silent clip into a full audio production. The technology is already live on Jimeng, ByteDance's video creation platform, so every user can try one-click sound effect generation.

SeedFoley's core is an end-to-end architecture that combines the spatiotemporal features of video with a diffusion generation model to keep sound effects tightly synchronized with the video content. First, SeedFoley extracts frames from the video and analyzes the key information in each one. A video encoder then interprets the content to understand the actions and scenes it contains. This information is projected into a conditioning space that guides sound effect generation. During generation, SeedFoley uses an improved diffusion model framework to produce sound effects that match the video content.

To help the model better understand sound, SeedFoley was trained with a large number of speech and music-related labels, which lets it distinguish sound effects from non-sound-effect audio and generate sound effects more accurately. SeedFoley also handles video inputs of varying length, from short clips of a few seconds to videos several minutes long, and it reaches an industry-leading level in sound effect accuracy, synchronization, and fit with the video content.

SeedFoley's video encoder combines fast and slow features: it captures subtle motion at a high frame rate and extracts the video's semantic information at a low frame rate. This combination retains key motion features while reducing computing cost, balancing low resource use against high performance. With this design, SeedFoley can extract frame-level video features at 8 fps on limited computing resources and precisely locate each action in the video.
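The fast/slow idea can be illustrated with a minimal frame-sampling sketch. This is not SeedFoley's encoder: the helper `sample_indices` and the 2 fps slow rate are assumptions made for illustration, and only the 8 fps figure comes from the article.

```python
def sample_indices(num_frames: int, source_fps: float, target_fps: float) -> list[int]:
    """Return indices of source frames that approximate sampling at target_fps."""
    if source_fps <= 0 or target_fps <= 0:
        raise ValueError("frame rates must be positive")
    step = source_fps / target_fps   # source frames per sampled frame
    count = int(num_frames / step)   # how many samples fit in the clip
    return [min(num_frames - 1, int(i * step)) for i in range(count)]


FAST_FPS = 8.0  # from the article: frame-level features at 8 fps
SLOW_FPS = 2.0  # hypothetical value, chosen only for illustration

# A 2-second clip recorded at 24 fps has 48 frames.
fast_indices = sample_indices(48, 24.0, FAST_FPS)  # dense path: motion detail
slow_indices = sample_indices(48, 24.0, SLOW_FPS)  # sparse path: scene semantics

print(len(fast_indices), len(slow_indices))  # prints: 16 4
```

The dense path sees four times as many frames as the sparse one here, so the heavier semantic processing can run on far fewer frames while motion cues are still sampled often.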

For its audio representation model, SeedFoley takes the raw waveform as input and encodes it into a 1D audio representation. Compared with the traditional mel-spectrogram approach, this method has advantages for audio reconstruction and generative modeling. To preserve high-frequency information, SeedFoley uses an audio sampling rate of 32 kHz and extracts 32 latent representations per second of audio, which improves the temporal resolution of the audio and makes the generated sound effects more detailed and realistic.
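The figures in this paragraph imply some simple arithmetic, worked through below. The 32 kHz sampling rate and 32 representations per second come from the article, and the 8 fps video feature rate is the one the article gives for the video encoder. The function names are illustrative only.

```python
SAMPLE_RATE_HZ = 32_000      # from the article
LATENTS_PER_SECOND = 32      # from the article
VIDEO_FEATURE_FPS = 8        # from the article's video encoder description


def samples_per_latent() -> int:
    """Raw waveform samples summarized by one audio representation."""
    return SAMPLE_RATE_HZ // LATENTS_PER_SECOND


def latent_count(duration_s: float) -> int:
    """Number of audio representations for a clip of the given length."""
    return int(duration_s * LATENTS_PER_SECOND)


def latents_per_video_frame() -> int:
    """Audio representations that fall within one 8 fps video feature."""
    return LATENTS_PER_SECOND // VIDEO_FEATURE_FPS


print(samples_per_latent())        # prints: 1000
print(1000 / LATENTS_PER_SECOND)   # prints: 31.25 (milliseconds per representation)
print(latent_count(5.0))           # prints: 160
print(latents_per_video_frame())   # prints: 4
print(SAMPLE_RATE_HZ // 2)         # prints: 16000 (Nyquist limit in Hz)
```

Each representation covers 1,000 samples, or 31.25 ms of audio, and a 32 kHz sampling rate can represent frequencies up to 16 kHz.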

SeedFoley's audio representation model also uses a two-stage joint training strategy. In the first stage, a masking strategy strips the phase information from the audio representation, and the resulting dephased latent representation serves as the optimization target for the diffusion model. In the second stage, an audio decoder reconstructs the phase information from the dephased representation to restore realistic sound. This staged approach makes the representation easier for the diffusion model to predict and ultimately enables high-quality generation and reconstruction of audio latent representations.

For the diffusion model, SeedFoley uses the Diffusion Transformer framework. By optimizing a continuous mapping along the probability path, it matches the Gaussian noise distribution to the target audio representation space. Compared with traditional diffusion models that rely on Markov chain sampling, constructing a continuous transformation path reduces the number of inference steps and lowers inference cost, making sound effect generation faster and more efficient.
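The article does not name the exact formulation. One common way to realize a continuous path from noise to data is a flow-matching-style ordinary differential equation, and the toy sketch below assumes that setup. The velocity function here is an oracle that is handed the target directly; a real model would have to predict it from the current state, the time, and the video condition.

```python
import random


def toy_velocity(x: list[float], t: float, target: list[float]) -> list[float]:
    """Oracle velocity for the straight path x_t = (1 - t) * x0 + t * target.

    Along that path dx/dt = target - x0 = (target - x_t) / (1 - t).
    Passing the target in directly is only possible in a toy setting.
    """
    return [(b - a) / (1.0 - t) for a, b in zip(x, target)]


def euler_sample(x0: list[float], target: list[float], num_steps: int) -> list[float]:
    """Integrate the path from t = 0 to t = 1 with fixed-size Euler steps."""
    x = list(x0)
    dt = 1.0 / num_steps
    for k in range(num_steps):
        t = k * dt  # t stays below 1, so the division in toy_velocity is safe
        v = toy_velocity(x, t, target)
        x = [a + dt * b for a, b in zip(x, v)]
    return x


random.seed(0)
noise = [random.gauss(0.0, 1.0) for _ in range(4)]  # Gaussian starting point
target = [0.5, -1.0, 2.0, 0.0]                      # stand-in for an audio latent

result = euler_sample(noise, target, num_steps=4)
print(max(abs(a - b) for a, b in zip(result, target)) < 1e-9)  # prints: True
```

Because the path in this toy case is a straight line, four Euler steps already land on the target. That is the intuition behind needing fewer inference steps than a long Markov chain, although a learned velocity field is only approximately straight.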

The arrival of SeedFoley marks a deep integration of video content and audio generation. It extracts frame-level visual information from the video and, by analyzing multiple frames together, identifies the sound-producing subjects and action scenes. Whether the video is a strongly rhythmic musical moment or a tense scene from a film, SeedFoley can place sounds precisely on cue and create an immersive, realistic experience. SeedFoley can also distinguish action sound effects from ambient sound effects, which strengthens the video's narrative tension and emotional impact.

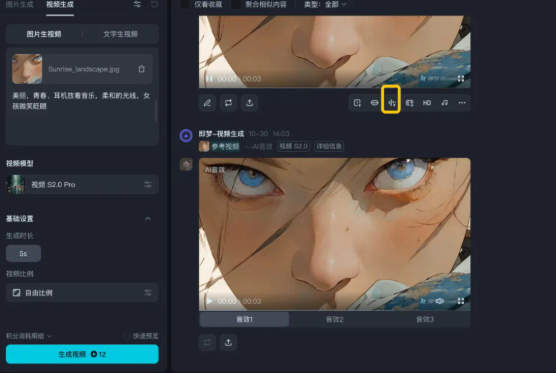

The AI sound effect feature is now officially available on the Jimeng platform. Users only need to generate a video with Jimeng and select the AI sound effect feature to produce three professional sound effect options in one click. Whether you are making AI videos, lifestyle vlogs, short films, or game content, SeedFoley can help you create high-quality videos with professional sound effects, so your work is no longer silent.