In multimodal artificial intelligence, Zhiyuan Research Institute (BAAI) has worked with several universities to release BGE-VL, a new multimodal embedding model. The release is a significant step forward for multimodal retrieval. Since their debut, the BGE series models have been widely praised for their strong performance, and BGE-VL extends this ecosystem further. The model performs particularly well on key tasks such as image-text retrieval and composed image retrieval, placing it among the leading models in multimodal retrieval.

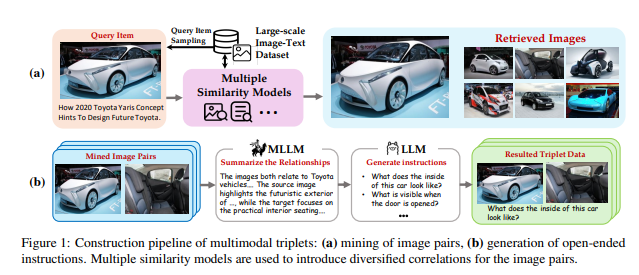

BGE-VL's success rests largely on MegaPairs, the data synthesis technique behind it. MegaPairs mines existing large-scale image-text corpora to produce new training data, which makes data generation both more scalable and higher in quality. It can generate diverse data at very low cost, and the resulting dataset of more than 26 million samples provides a solid foundation for training multimodal retrieval models. Trained on this data, BGE-VL achieves leading results on several mainstream multimodal retrieval benchmarks, further consolidating its position in the field.
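The article does not describe the MegaPairs pipeline in detail. As a rough illustration of the idea of mining an existing corpus, the sketch below finds related image pairs by embedding similarity. It is a minimal, hypothetical sketch, not the authors' implementation: it assumes image embeddings have already been computed by some off-the-shelf image encoder, and the function name `mine_pairs` and its `k` and `min_sim` parameters are illustrative only. Turning each mined pair into a training sample would additionally require a model that writes a text instruction linking the two images, which is not shown here.

```python
import numpy as np


def mine_pairs(embeddings: np.ndarray, k: int = 3, min_sim: float = 0.5):
    """Hypothetical helper: for each image, return up to k most similar other images.

    Returns a list of (query_index, target_index, cosine_similarity) tuples.
    """
    # L2-normalise rows so that the dot product equals cosine similarity
    normed = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    sims = normed @ normed.T
    # Exclude each image from matching itself
    np.fill_diagonal(sims, -np.inf)

    pairs = []
    for i in range(sims.shape[0]):
        # Indices of the k highest-similarity candidates for image i
        top = np.argsort(-sims[i])[:k]
        for j in top:
            # Keep only pairs that are similar enough to be plausibly related
            if sims[i, j] >= min_sim:
                pairs.append((i, int(j), float(sims[i, j])))
    return pairs


# Toy "embeddings": two clusters of two images each
toy = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]])
for q, t, s in mine_pairs(toy, k=1):
    print(f"image {q} -> image {t} (similarity {s:.3f})")
```

Using a similarity threshold alongside top-k keeps isolated images from being forced into weak pairs; a real pipeline would likely combine several similarity signals and filter far more aggressively.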

As multimodal retrieval technology advances, users' information needs are becoming increasingly diverse. Earlier retrieval models were mostly trained on single image-text pairs and could not handle complex composed inputs effectively, for example a reference image combined with a text instruction describing how the desired result should differ. By training on MegaPairs data, BGE-VL overcomes this limitation: it can understand and process multimodal queries more comprehensively and therefore return more accurate results.
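To make the idea of a composed query concrete, the sketch below ranks candidate images against a query built from a reference image plus a text instruction. It is a simplified, hypothetical illustration under stated assumptions: the embeddings are toy vectors rather than real model outputs, and the query is formed by a naive additive fusion of the image and text embeddings (`composed_query` is an illustrative helper, not part of any BGE-VL API). An MLLM-based model such as BGE-VL would instead encode the image and text together in a single forward pass.

```python
import numpy as np


def normalize(x: np.ndarray) -> np.ndarray:
    # Scale vectors to unit length along the last axis
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def composed_query(image_emb: np.ndarray, text_emb: np.ndarray) -> np.ndarray:
    # Hypothetical late fusion: sum of unit-normalised image and text embeddings
    return normalize(normalize(image_emb) + normalize(text_emb))


def rank_candidates(query: np.ndarray, candidates: np.ndarray, top_k: int = 5) -> list[int]:
    # Cosine similarity between the (unit-length) query and every candidate
    scores = normalize(candidates) @ query
    # Indices of candidates, best match first
    return [int(i) for i in np.argsort(-scores)[:top_k]]


reference_image = np.array([1.0, 0.0, 0.0])  # toy embedding of the reference image
instruction = np.array([0.0, 1.0, 0.0])      # toy embedding of the text instruction
gallery = np.array([
    [1.0, 0.0, 0.0],  # matches the image only
    [0.0, 1.0, 0.0],  # matches the text only
    [0.7, 0.7, 0.0],  # matches both: the intended target
    [0.0, 0.0, 1.0],  # unrelated
])

query = composed_query(reference_image, instruction)
print(rank_candidates(query, gallery, top_k=4))
```

The toy gallery shows why composed queries matter: a model that only looks at the image or only at the text ranks a partial match first, while the combined query prefers the candidate that satisfies both.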

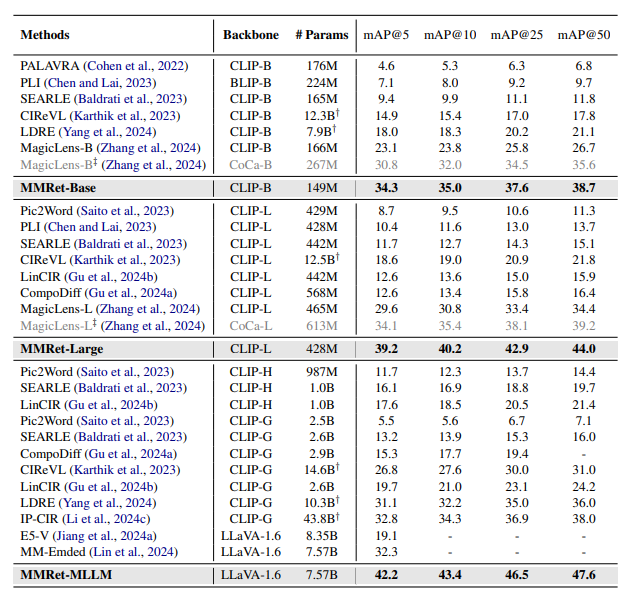

In multi-task evaluations, the Zhiyuan team found that BGE-VL performs particularly well on the Massive Multimodal Embedding Benchmark (MMEB). Although MegaPairs data does not cover most of the tasks in MMEB, the model still generalizes well across them. In composed image retrieval evaluations, BGE-VL also performs strongly, clearly outperforming several well-known models, including Google's MagicLens and NVIDIA's MM-Embed.

Looking ahead, Zhiyuan Research Institute plans to develop MegaPairs further, extend it to a wider range of multimodal retrieval scenarios, and build a more comprehensive and efficient multimodal retriever that delivers more accurate results to users. As multimodal technology continues to evolve, BGE-VL is likely to encourage further exploration and innovation in related fields and to contribute to the broader progress of artificial intelligence.

Paper: https://arxiv.org/abs/2412.14475

Project homepage: https://github.com/VectorSpaceLab/MegaPairs

Model: https://huggingface.co/BAAI/BGE-VL-MLLM-S1