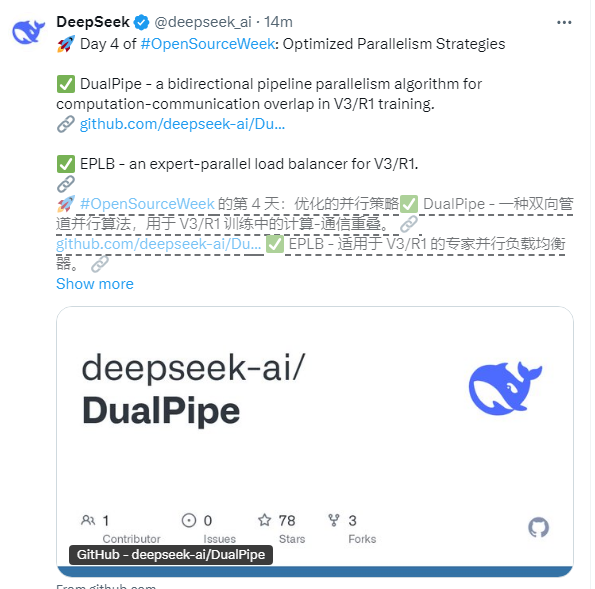

Today, DeepSeek, a leading Chinese artificial intelligence company, officially announced the fourth day of its open-source week, themed "Optimized Parallelism Strategies". The core technologies released include DualPipe, a bidirectional pipeline parallelism algorithm; EPLB, an expert-parallel load balancer; and an in-depth optimization of computation-communication overlap. These releases target key bottlenecks in large language model training and offer a new approach to running clusters of ten thousand GPUs or more efficiently.

DualPipe, one of the core components of this release, was designed specifically for the V3/R1 architecture. Its bidirectional pipeline feeds micro-batches from both ends at once, which lets computation and communication overlap to a high degree. Compared with traditional unidirectional pipelines, it significantly raises computational throughput and is especially well suited to training models with hundreds of billions of parameters. According to the GitHub repository, DualPipe's scheduler overlaps forward computation with the backward pass, which raises hardware utilization by about 30%.
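The payoff of bidirectional scheduling is easiest to see in pipeline-bubble (idle time) estimates. The DeepSeek-V3 technical report and the DualPipe repository compare 1F1B, ZB1P, and DualPipe using these formulas, where PP is the number of pipeline stages, F is a forward chunk's time, B a full backward chunk's time, W a "backward for weights" chunk's time, and F&B the time of a forward and a backward chunk executed overlapped. The sketch below only evaluates those formulas; the `ChunkTimes` class, function names, and timing values are hypothetical placeholders, not measurements.

```python
from dataclasses import dataclass


@dataclass
class ChunkTimes:
    """Per-chunk execution times in arbitrary units (hypothetical values)."""
    f: float        # forward chunk
    b: float        # full backward chunk (input + weight gradients)
    w: float        # "backward for weights" chunk
    f_and_b: float  # a forward and a backward chunk executed overlapped


def bubble_1f1b(pp: int, t: ChunkTimes) -> float:
    # Classic one-forward-one-backward schedule: (PP - 1)(F + B)
    return (pp - 1) * (t.f + t.b)


def bubble_zb1p(pp: int, t: ChunkTimes) -> float:
    # Zero-bubble variant that defers weight-gradient work: (PP - 1)(F + B - 2W)
    return (pp - 1) * (t.f + t.b - 2 * t.w)


def bubble_dualpipe(pp: int, t: ChunkTimes) -> float:
    # DualPipe: (PP/2 - 1)(F&B + B - 3W); this sketch assumes an even PP
    # because the formula halves the number of stages.
    if pp % 2:
        raise ValueError("this sketch assumes an even number of pipeline stages")
    return (pp // 2 - 1) * (t.f_and_b + t.b - 3 * t.w)


if __name__ == "__main__":
    times = ChunkTimes(f=1.0, b=2.0, w=1.0, f_and_b=2.5)
    for name, fn in [("1F1B", bubble_1f1b), ("ZB1P", bubble_zb1p), ("DualPipe", bubble_dualpipe)]:
        print(f"{name:8s} bubble with PP=8: {fn(8, times)}")
```

With these illustrative numbers the bubble shrinks from 21 units (1F1B) to 4.5 units (DualPipe). The same published comparison notes that DualPipe keeps two copies of the model parameters, so the smaller bubble is paid for with extra memory.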

EPLB targets the "hot expert" problem in mixture-of-experts (MoE) model training and, for the first time, brings dynamic load balancing to expert parallelism. With traditional methods, expert workloads are distributed unevenly, so some GPUs become overloaded while others wait. By monitoring expert load in real time and reallocating it adaptively, EPLB raises the overall utilization of a ten-thousand-GPU cluster to more than 92%, effectively avoiding idle resources.
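EPLB's repository describes a "redundant experts" strategy: heavily loaded experts are duplicated, and the replicas are then packed onto GPUs heuristically so that per-GPU load evens out. The sketch below illustrates that idea with a simple greedy scheme. It is not EPLB's actual implementation: it assumes per-expert loads are already known (for example, averaged token counts), that every GPU holds the same number of expert slots, and `replicate_experts` and `pack_replicas` are hypothetical names rather than EPLB's API.

```python
import heapq
from typing import List


def replicate_experts(loads: List[float], num_slots: int) -> List[int]:
    """Hypothetical helper: give each spare slot to the expert whose
    per-replica load is currently highest. Returns replicas per expert."""
    n = len(loads)
    if num_slots < n:
        raise ValueError("need at least one slot per expert")
    replicas = [1] * n
    # heapq is a min-heap, so store negated per-replica load.
    heap = [(-load, e) for e, load in enumerate(loads)]
    heapq.heapify(heap)
    for _ in range(num_slots - n):
        _, e = heapq.heappop(heap)
        replicas[e] += 1
        heapq.heappush(heap, (-loads[e] / replicas[e], e))
    return replicas


def pack_replicas(loads: List[float], replicas: List[int], num_gpus: int) -> List[List[int]]:
    """Hypothetical helper: greedy longest-processing-time packing. Each replica,
    heaviest first, goes to the least-loaded GPU that still has a free slot."""
    total = sum(replicas)
    if total % num_gpus:
        raise ValueError("slots must divide evenly across GPUs")
    per_gpu = total // num_gpus
    # Each replica carries an equal share of its expert's load.
    items = sorted(
        ((loads[e] / replicas[e], e) for e in range(len(loads)) for _ in range(replicas[e])),
        reverse=True,
    )
    gpu_load = [0.0] * num_gpus
    placement: List[List[int]] = [[] for _ in range(num_gpus)]
    for load, e in items:
        g = min((g for g in range(num_gpus) if len(placement[g]) < per_gpu),
                key=lambda g: gpu_load[g])
        placement[g].append(e)
        gpu_load[g] += load
    return placement


if __name__ == "__main__":
    expert_loads = [90, 10, 10, 10, 20, 20, 20, 20]  # hypothetical; expert 0 is "hot"
    reps = replicate_experts(expert_loads, num_slots=10)
    print(reps)
    print(pack_replicas(expert_loads, reps, num_gpus=2))
```

Here the hot expert 0 receives three replicas, and the two GPUs each end up with a load of 100, whereas expert 0 alone would otherwise carry 90 of the 200 total units on one GPU. A production balancer would also avoid co-locating replicas of the same expert and would account for node topology, which this sketch ignores.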

In addition, building on its communication-overlap analysis tooling for the V3/R1 architecture, DeepSeek has for the first time constructed a spatio-temporal efficiency model of 3D parallelism (data, pipeline, and tensor parallelism). Using the open-sourced profiling data, developers can pinpoint exactly where computation and communication conflict, giving them a tuning baseline for training very large models. According to tests, this optimization cuts end-to-end training time by about 15%.
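One way to locate computation-communication conflicts in a profiling trace is to measure how much communication time is *not* hidden behind concurrent compute. The sketch below does this for a single GPU's trace. It assumes the trace uses the Chrome Trace Event JSON format (complete events with `ph == "X"`, `ts`, `dur`, `name`), as PyTorch Profiler exports, and that GPU kernels carry `cat == "kernel"`; both are assumptions, and the name-based `is_comm` classifier is a hypothetical heuristic you would adapt to the kernel names actually present.

```python
import json
from typing import Callable, Dict, List, Tuple

Interval = Tuple[float, float]


def merge_intervals(intervals: List[Interval]) -> List[Interval]:
    """Sort intervals and merge any that overlap or touch."""
    merged: List[Interval] = []
    for start, end in sorted(intervals):
        if merged and start <= merged[-1][1]:
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


def overlap_length(a: List[Interval], b: List[Interval]) -> float:
    """Total length of the intersection of two sorted, disjoint interval lists."""
    i = j = 0
    total = 0.0
    while i < len(a) and j < len(b):
        lo, hi = max(a[i][0], b[j][0]), min(a[i][1], b[j][1])
        if hi > lo:
            total += hi - lo
        # Advance whichever interval ends first.
        if a[i][1] < b[j][1]:
            i += 1
        else:
            j += 1
    return total


def exposed_comm_time(events: List[Dict], is_comm: Callable[[str], bool],
                      category: str = "kernel") -> Tuple[float, float]:
    """Return (total communication time, communication time not overlapped by compute)."""
    comm: List[Interval] = []
    compute: List[Interval] = []
    for ev in events:
        # Keep only complete events of the assumed GPU-kernel category.
        if ev.get("ph") != "X" or ev.get("cat") != category:
            continue
        start = float(ev["ts"])
        interval = (start, start + float(ev.get("dur", 0)))
        (comm if is_comm(ev.get("name", "")) else compute).append(interval)
    comm_m, compute_m = merge_intervals(comm), merge_intervals(compute)
    total = sum(end - start for start, end in comm_m)
    return total, total - overlap_length(comm_m, compute_m)


def load_trace_events(path: str) -> List[Dict]:
    """Read events from a Chrome-trace JSON file (object with "traceEvents", or a bare array)."""
    with open(path) as f:
        data = json.load(f)
    return data["traceEvents"] if isinstance(data, dict) else data
```

For example, `exposed_comm_time(load_trace_events("trace.json"), lambda name: "all_to_all" in name)` (file name and kernel-name pattern hypothetical) returns the total communication time and the portion left exposed; a large exposed share marks the spans where scheduling changes such as DualPipe's overlap have the most to gain.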

The release has drawn strong attention across the industry. Experts note that DualPipe and EPLB together address the two major challenges of large-scale training today: first, as model scale grows exponentially, the scalability limits of traditional parallelism strategies are becoming increasingly apparent; second, the spread of mixture-of-experts models has made dynamic load balancing a basic requirement. The technical director of a cloud computing vendor commented: "These tools will significantly lower the hardware barrier to training models with hundreds of billions of parameters and are expected to cut training costs by 20%-30%."

DeepSeek's CTO emphasized in the technical documentation that the open-sourced techniques have been validated in the company's internal training of several models with hundreds of billions of parameters and will continue to be iterated on and optimized. All three technologies are now open source on GitHub, and developers can customize them for different hardware environments.

As the global AI race enters its "scaling victory" stage, DeepSeek has open-sourced key technologies for four consecutive days, which not only demonstrates the technical strength of Chinese AI companies but also gives the industry reusable infrastructure. Innovation of this kind, driven by "open collaboration", may reshape the industrial ecosystem of large-model training.