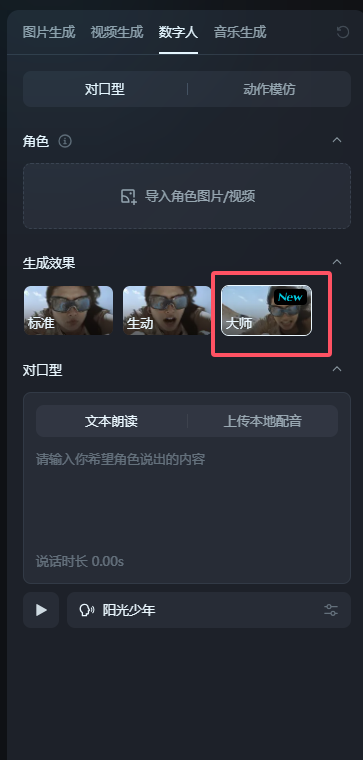

ByteDance's OmniHuman-1 is now officially available on the Jimeng platform. Jimeng AI recently announced the full launch of its Digital Human Master Mode. The feature lets users automatically generate advanced digital humans with natural movements and realistic expressions by uploading just a photo and an audio clip. Unlike the traditional mode, Master Mode does not require complex prompts, which greatly lowers the barrier to digital human creation and lets more users get started easily.

The launch not only makes digital humans much easier to create but also gives creators significantly more freedom. The animation of this new generation of digital humans is particularly impressive: it sheds the stiffness of earlier models and gives users a smoother, more natural visual experience. Both facial expressions and body movements reach an unprecedented level of realism.

The biggest highlight of Master Mode is that it breaks through the limitation of traditional digital humans, which could only drive head movements, and generates natural whole-body motion. Digital humans created by users are therefore no longer just "big-headed dolls" but have complete body language. Judging from current community feedback, the feature performs particularly well in complex scenarios such as speeches, singing, and dancing, showing strong application potential.

To test the feature, the editor uploaded a photo of Nezha and added an audio clip. The results were surprising: smooth, natural movements and vivid expressions, well suited to lip-sync animations or digital lecturers. Master Mode currently supports audio uploads of up to 15 seconds, which is enough for most short-video creation.

This feature is a great boon for short-video creators, virtual streamers, and advertisers. It not only greatly reduces production costs but also helps users produce more expressive and engaging content. Whether producing live content for virtual streamers or adding vivid digital human characters to advertisements, Master Mode can significantly improve efficiency and quality.

Furthermore, the potential of Master Mode goes well beyond these uses. As the technology continues to advance, it is likely to become a powerful engine for bringing AI digital human technology into broader fields such as film, television, and games. In the future, we may see movies starring AI digital humans, or play in immersive game worlds dominated by digital human NPCs. All of this is something to look forward to, and the future of digital human technology looks even more exciting.