Google has released PaliGemma2Mix, a new vision-language model (VLM) and a notable advance in combined image and text processing. PaliGemma2Mix takes an image and a text prompt together as input and generates text output. Because the task is specified in the prompt, a single model can handle many different tasks.

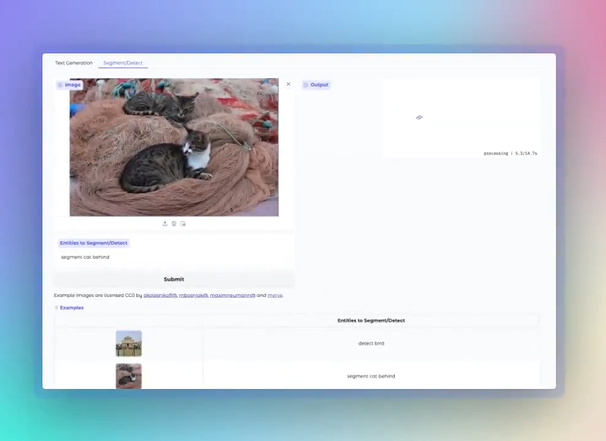

PaliGemma2Mix covers a wide range of vision-language tasks, including image captioning, optical character recognition (OCR), visual question answering, object detection, and image segmentation. Developers and researchers can use the released checkpoints as they are, or fine-tune them for the needs of a specific application.
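In PaliGemma-family models the task is selected by a short text prefix in the prompt. The sketch below builds such prompts. It assumes the prefix convention described on the PaliGemma model cards (`caption en`, `describe en`, `ocr`, `answer en <question>`, `detect <object>`, `segment <object>`). The helper `build_prompt` is hypothetical, and you should check the exact prefixes against the card for the checkpoint you use.

```python
def build_prompt(task: str, arg: str = "", lang: str = "en") -> str:
    """Return a task prompt in the (assumed) PaliGemma prefix style.

    Hypothetical helper: the prefixes follow the convention described on the
    PaliGemma model cards; verify them for your checkpoint.
    """
    if task == "caption":
        # Short caption in the requested language.
        return f"caption {lang}"
    if task == "describe":
        # Longer, more detailed description (assumed prefix for mix checkpoints).
        return f"describe {lang}"
    if task == "ocr":
        # Read the text visible in the image.
        return "ocr"
    if task in ("answer", "detect", "segment"):
        if not arg:
            raise ValueError(f"task {task!r} needs an argument")
        if task == "answer":
            # Visual question answering: the question follows the language code.
            return f"answer {lang} {arg}"
        # detect/segment take object names; several names may be joined with " ; ".
        return f"{task} {arg}"
    raise ValueError(f"unknown task: {task!r}")
```

For example, `build_prompt("answer", "what animal is on the beach?")` yields a visual question answering prompt, while `build_prompt("detect", "cow ; dog")` asks for boxes around two object classes.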

PaliGemma2Mix is a version of PaliGemma2 fine-tuned on a mixture of tasks, so developers can explore many capabilities with a single checkpoint. It comes in three sizes, 3B (3 billion parameters), 10B (10 billion parameters), and 28B (28 billion parameters), and each size is available at two input resolutions, 224px and 448px. This lets users match the model to their compute budget and task requirements.
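The three sizes and two resolutions give six checkpoints in total. The sketch below enumerates them and validates a choice. It assumes the Hugging Face repository naming pattern `google/paligemma2-<size>-mix-<resolution>`; the functions `mix_checkpoint_id` and `all_mix_checkpoints` are hypothetical, and the repository names should be confirmed on Hugging Face before use.

```python
VALID_SIZES = ("3b", "10b", "28b")   # parameter scales named in the article
VALID_RESOLUTIONS = (224, 448)       # input resolutions in pixels


def mix_checkpoint_id(size: str, resolution: int) -> str:
    """Return an (assumed) Hugging Face repo id for a mix checkpoint."""
    size = size.lower()
    if size not in VALID_SIZES:
        raise ValueError(f"size must be one of {VALID_SIZES}, got {size!r}")
    if resolution not in VALID_RESOLUTIONS:
        raise ValueError(f"resolution must be one of {VALID_RESOLUTIONS}")
    # Assumed naming pattern: google/paligemma2-<size>-mix-<resolution>
    return f"google/paligemma2-{size}-mix-{resolution}"


def all_mix_checkpoints() -> list:
    """List every size/resolution combination (3 sizes x 2 resolutions)."""
    return [mix_checkpoint_id(s, r) for s in VALID_SIZES for r in VALID_RESOLUTIONS]
```

A smaller model at 224px is the cheapest starting point. The 448px variants are typically the better fit for inputs with fine detail, such as text in documents.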

The headline capabilities of PaliGemma2Mix are image captioning, OCR, visual question answering, and object detection. For captioning, the model can generate either a short or a detailed long description; for example, given a photo of a cow standing on a beach, it can describe the scene in detail. For OCR, it extracts text from images, such as logos, labels, and document content, which simplifies information extraction. Users can also upload an image and ask a question about it, and the model answers based on the image content. Finally, it can locate specific objects in an image, such as animals or vehicles.
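Object detection results come back as text rather than as structured boxes, so they need to be parsed. The sketch below assumes the output format documented for PaliGemma-family models. Each detection is four `<locNNNN>` tokens followed by a label, and detections are separated by ` ; `. The four values are `y_min, x_min, y_max, x_max`, each quantized to 1024 bins. The `Box` type and `parse_detections` function are hypothetical helpers.

```python
import re
from typing import List, NamedTuple


class Box(NamedTuple):
    """A detected object with pixel coordinates."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float


# Four location tokens, then a label running up to the next ";" or "<".
_DETECTION = re.compile(r"((?:<loc\d{4}>){4})\s*([^;<]+)")
_LOC = re.compile(r"<loc(\d{4})>")


def parse_detections(text: str, width: int, height: int) -> List[Box]:
    """Convert assumed PaliGemma detection output into pixel-space boxes."""
    boxes = []
    for locs, label in _DETECTION.findall(text):
        # Assumed token order: y_min, x_min, y_max, x_max, normalized by 1024.
        y0, x0, y1, x1 = (int(v) / 1024 for v in _LOC.findall(locs))
        boxes.append(Box(label.strip(), x0 * width, y0 * height,
                         x1 * width, y1 * height))
    return boxes
```

For a 640x480 image, the string `<loc0256><loc0128><loc0768><loc0896> cow` maps to a box from (80, 120) to (560, 360) in pixels.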

Developers can download the PaliGemma2Mix checkpoints from Kaggle and Hugging Face for further experiments and development. If you want to try the model first, the interactive demo on Hugging Face lets you explore its capabilities without setting anything up.
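Once a checkpoint is available, inference with the Hugging Face `transformers` library follows the standard generate-and-decode pattern. The sketch below assumes `torch`, `Pillow`, and a `transformers` release with PaliGemma 2 support are installed. It also assumes that you have accepted the model license on Hugging Face and logged in, since the checkpoints may be gated. The wrapper `run_paligemma` is a hypothetical helper, and the imports are inside the function so the module can be loaded without those packages.

```python
def run_paligemma(model_id: str, image_path: str, prompt: str,
                  max_new_tokens: int = 100) -> str:
    """Generate text for one image and one task prompt (hypothetical helper)."""
    # Lazy imports: the heavy dependencies load only when the function runs.
    import torch
    from PIL import Image
    from transformers import AutoProcessor, PaliGemmaForConditionalGeneration

    device = "cuda" if torch.cuda.is_available() else "cpu"
    # bfloat16 saves memory on GPU; use float32 on CPU for broad support.
    dtype = torch.bfloat16 if device == "cuda" else torch.float32

    processor = AutoProcessor.from_pretrained(model_id)
    model = PaliGemmaForConditionalGeneration.from_pretrained(
        model_id, torch_dtype=dtype).to(device).eval()

    image = Image.open(image_path).convert("RGB")
    inputs = processor(text=prompt, images=image, return_tensors="pt").to(device)
    # Match the image tensor's dtype to the model weights.
    inputs["pixel_values"] = inputs["pixel_values"].to(dtype)
    input_len = inputs["input_ids"].shape[-1]

    with torch.inference_mode():
        output = model.generate(**inputs, max_new_tokens=max_new_tokens,
                                do_sample=False)

    # Decode only the newly generated tokens, not the echoed prompt.
    return processor.decode(output[0][input_len:], skip_special_tokens=True)
```

A call such as `run_paligemma("google/paligemma2-3b-mix-224", "cow.jpg", "caption en")` would caption a local image. Here the file name is a placeholder, and the repository id follows the assumed naming pattern. Greedy decoding (`do_sample=False`) keeps outputs deterministic, which suits OCR and detection.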

With the launch of PaliGemma2Mix, Google has taken another step forward in vision-language model research. The model shows what a single multitask VLM can do and opens up more possibilities for practical applications. We look forward to seeing this technology prove its value in more fields and drive further progress in artificial intelligence.

Technical report: https://arxiv.org/abs/2412.03555