In artificial intelligence, speech understanding language models (SULMs) have developed rapidly and attracted widespread attention. The ASLP Laboratory at Northwestern Polytechnical University recently released OSUM, an open speech understanding model. Its aim is to explore how such models can be trained and used effectively when academic resources are limited, and so to promote research and innovation in the academic community.

The OSUM model combines the Whisper encoder with the Qwen2 language model and supports 8 speech tasks: speech recognition (ASR), speech recognition with timestamps (SRWT), vocal event detection (VED), speech emotion recognition (SER), speaking style recognition (SSR), speaker gender classification (SGC), speaker age prediction (SAP), and speech-to-text chat (STTC). With its ASR+X training strategy, the model optimizes speech recognition alongside each target task, which makes multi-task training more efficient and stable.
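As a rough illustration of the ASR+X idea, the sketch below builds a training target in which the transcript comes first and the label for the target task X follows. This is only one plausible reading of the strategy, not OSUM's actual data format: the `Sample` class, the `build_asr_x_target` function, and the tags in `TASK_TAGS` are all hypothetical names made up for this example.

```python
from dataclasses import dataclass

# Hypothetical task tags for illustration only; the real OSUM prompts
# and label vocabulary may look quite different.
TASK_TAGS = {"SER": "<EMOTION>", "SGC": "<GENDER>", "SAP": "<AGE>"}


@dataclass
class Sample:
    transcript: str  # reference text for the ASR part
    task: str        # task abbreviation, e.g. "SER"
    label: str       # target label for task X (unused for plain ASR)


def build_asr_x_target(sample: Sample) -> str:
    """Return a target string: the transcript first, then the task-X label."""
    if sample.task == "ASR":
        # Plain ASR has no extra label, so the target is just the transcript.
        return sample.transcript
    tag = TASK_TAGS[sample.task]
    return f"{sample.transcript} {tag} {sample.label}"


example = Sample(transcript="nice to meet you", task="SER", label="happy")
print(build_asr_x_target(example))
```

Under these assumptions, every non-ASR sample also supervises the transcript, so the recognition objective is trained together with the target task rather than in a separate stage.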

The OSUM release emphasizes transparency as well as performance. Its training methods and data preparation process have been made public to give the academic community a useful reference. According to technical report v2.0, the training data for OSUM has grown to 50.5K hours, up from the previous 44.1K hours. This total includes 3,000 hours of speaker gender classification data and 6,800 hours of speaker age prediction data. The expanded data helps the model perform better across its tasks.

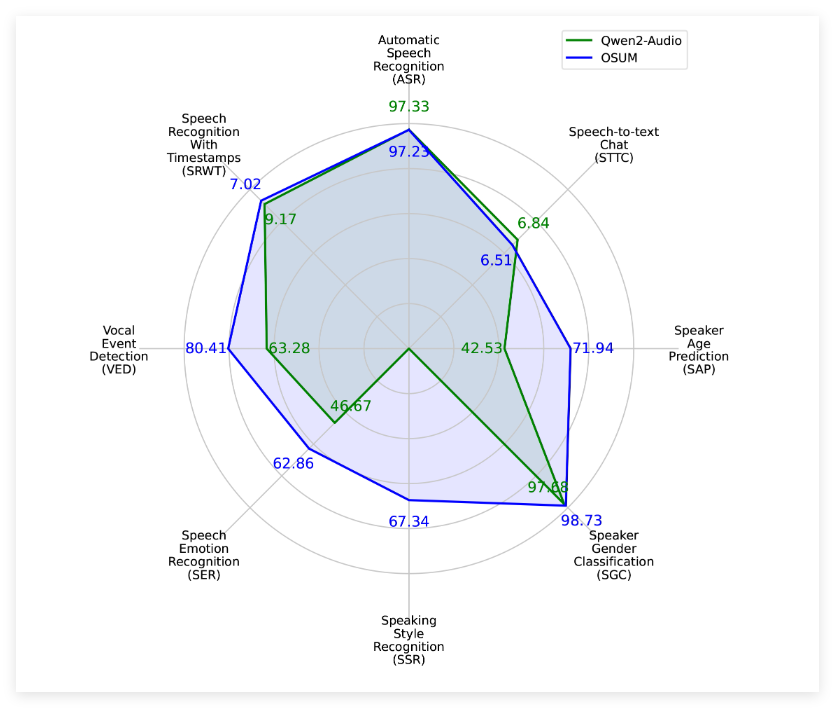

According to the evaluation results, OSUM outperforms the Qwen2-Audio model on multiple tasks while using significantly less computing resources and training data. The evaluation covers both public and internal test sets, and shows that OSUM performs well on speech understanding tasks.

The ASLP Laboratory of Northwestern Polytechnical University said that OSUM's goal is to promote the development of advanced speech understanding technologies through an open research platform. Researchers and developers can freely use the model's code and weights, including for commercial purposes, which should accelerate the application and adoption of the technology.

Project page: https://github.com/ASLP-lab/OSUM?tab=readme-ov-file

The OSUM model combines the Whisper encoder and the Qwen2 language model to support multiple speech tasks through multi-task learning.

In technical report v2.0, OSUM's training data volume increased to 50.5K hours, improving the model's performance.

The model's code and weights are released under the Apache 2.0 license, encouraging widespread use in academia and industry.