According to the latest reports, iPhone 15 Pro users are about to receive a major update: Apple's Visual Intelligence feature will officially come to the Pro models released in 2023. The news will excite many users, because it gives iPhone 15 Pro owners another reason to keep their current devices rather than upgrade to the iPhone 16 series.

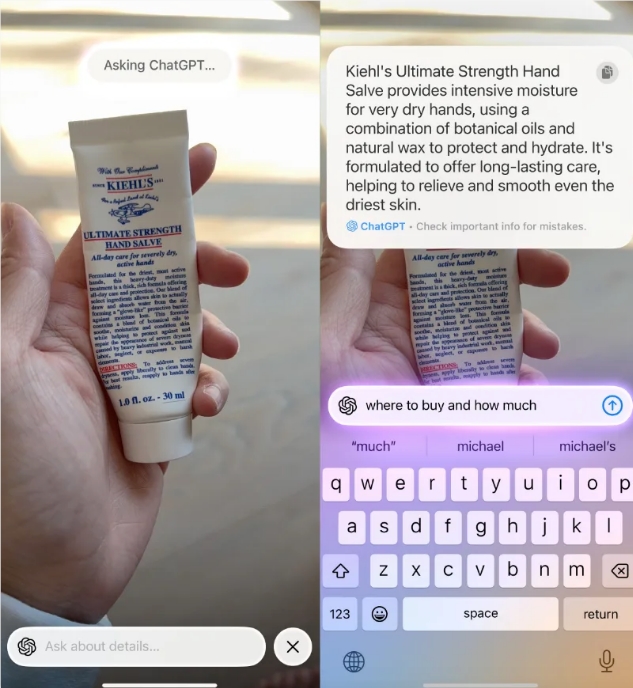

Visual Intelligence is similar to Google Lens and is an important part of the Apple Intelligence AI feature suite. Users simply point the camera at an object, and AI analyzes it in real time. Although the feature can handle some tasks on its own, its most useful results come from its on-screen shortcuts to ChatGPT and Google Image Search.

On the iPhone 16 and iPhone 16 Pro, Visual Intelligence is triggered by long-pressing the dedicated Camera Control button. The iPhone 15 Pro, iPhone 15 Pro Max, and the recently released iPhone 16e lack this physical button, so users of these three models will need to assign the feature to the Action button or open it through a Control Center shortcut. The feature is reportedly coming in an upcoming software update.

Cherlynn Low of Engadget offers an example of how practical Visual Intelligence can be. Suppose a user finds a set of uniquely patterned towels in the closet and wants to buy more but has forgotten where they came from. The user can activate Visual Intelligence, choose the Google search shortcut, and check whether the same product appears in the results. The user can also ask ChatGPT for details about the product and where to order it, which makes everyday tasks much easier.

Notably, Visual Intelligence does not depend entirely on Google or OpenAI; it can also work on its own. Users can interact directly with text in the camera view to translate it, have it read aloud, or summarize it. Pointing the phone at a business also brings up information such as opening hours, menus, and services, and can even let users buy products directly, further broadening the feature's uses.

Apple has not yet said which iOS version will bring Visual Intelligence to the iPhone 15 Pro series. However, John Gruber of Daring Fireball speculates that it may arrive in iOS 18.4 and could show up in a beta "at any time." iPhone 15 Pro and Pro Max users can therefore expect to try the feature soon, and those willing to install beta software may get it even earlier.

Overall, Visual Intelligence brings iPhone 15 Pro users more convenience and new possibilities. Whether for everyday shopping or quickly looking up information about a business, it promises to become a handy assistant. As the iOS update approaches, users can look forward to the feature's official launch.