The FunAudioLLM project, recently launched by Alibaba's Tongyi Lab, is an open-source effort to make voice interaction between humans and machines more natural. It is built around two core models: SenseVoice for speech recognition and CosyVoice for speech generation. FunAudioLLM reflects Alibaba's long investment in artificial intelligence and indicates a direction for the future development of intelligent voice technology.

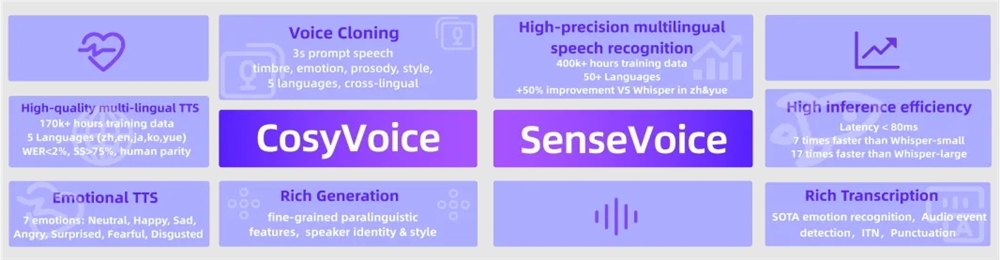

CosyVoice is the project's speech generation engine. Trained on 150,000 hours of multilingual data, the model generates fluent speech in five languages (Chinese, English, Japanese, Cantonese and Korean) and offers finer control over timbre and emotion. Its zero-shot voice generation capability lets the model adapt quickly to a new speaker's voice, which opens the door to personalized voice services. CosyVoice also adapts well to cross-lingual speech synthesis, paving the way for voice interaction applications aimed at a global audience.

SenseVoice sets a new benchmark in speech recognition. Trained on 400,000 hours of multilingual data, it recognizes speech more accurately than the existing Whisper model across more than 50 languages. For Chinese and Cantonese, the reported improvement exceeds 50%, a significant advance for intelligent voice applications in the Chinese market. SenseVoice also integrates emotion recognition and audio event detection, so the machine understands not only the words but also the speaker's emotions and the surrounding scene.

FunAudioLLM has a wide range of application scenarios, including multilingual real-time translation, emotional voice conversations, interactive podcasts and smart audiobooks, each with considerable commercial value. By combining SenseVoice's precise recognition, the strong understanding of LLMs and CosyVoice's natural generation, the project delivers an end-to-end voice interaction experience. This seamless speech-to-speech translation capability could change the way people communicate across languages and bring new possibilities to global business and cultural exchange.
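The recognition, understanding and generation stages described above can be sketched as a simple chain of three steps. This is only an illustration of the data flow: the `Recognition` type, the `voice_turn` function and the three stand-in functions are hypothetical names invented for this sketch, and they do not call SenseVoice, an LLM or CosyVoice.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Recognition:
    """Hypothetical container for what a recognizer might return."""
    text: str
    language: str
    emotion: str


def voice_turn(
    audio: bytes,
    recognize: Callable[[bytes], Recognition],
    respond: Callable[[Recognition], str],
    synthesize: Callable[[str, str], bytes],
) -> bytes:
    """Run one turn: speech in, speech out."""
    heard = recognize(audio)                  # recognition stage (SenseVoice's role)
    reply = respond(heard)                    # understanding stage (the LLM's role)
    return synthesize(reply, heard.emotion)   # generation stage (CosyVoice's role)


# Stand-in stages so the sketch runs without any model (assumptions, not real APIs).
def fake_recognize(audio: bytes) -> Recognition:
    return Recognition(text=audio.decode("utf-8"), language="en", emotion="neutral")


def fake_respond(heard: Recognition) -> str:
    return f"You said: {heard.text}"


def fake_synthesize(text: str, emotion: str) -> bytes:
    return f"[{emotion}] {text}".encode("utf-8")


result = voice_turn(b"hello", fake_recognize, fake_respond, fake_synthesize)
```

Passing the stages in as callables keeps the chain independent of any particular model, so each stand-in could be replaced by a real component once its actual interface is known.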

In terms of technical implementation, CosyVoice uses speech quantization and coding techniques to keep the generated speech natural and fluent. SenseVoice uses a multi-task learning framework to integrate automatic speech recognition, language identification, emotion recognition and audio event detection into a single unified model, which improves the system's efficiency and accuracy. This architecture reduces computing costs and also provides a solid foundation for later model optimization and feature expansion.
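The article does not say which quantization scheme CosyVoice uses. As a generic illustration of what quantizing speech into discrete codes means, the sketch below maps each feature frame to the index of its nearest codebook vector, which is plain vector quantization. The `quantize` function, the two-dimensional frames and the three-entry codebook are all made up for this example and are not taken from the project.

```python
from typing import List, Sequence


def quantize(
    frames: Sequence[Sequence[float]],
    codebook: Sequence[Sequence[float]],
) -> List[int]:
    """Replace each frame with the index of the closest codebook entry."""
    tokens = []
    for frame in frames:
        # Squared Euclidean distance from this frame to every codebook vector.
        distances = [
            sum((a - b) ** 2 for a, b in zip(frame, code))
            for code in codebook
        ]
        tokens.append(distances.index(min(distances)))
    return tokens


# Toy data (assumed for illustration): three codebook vectors, three frames.
codebook = [(0.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
frames = [(0.1, 0.1), (0.9, 1.2), (0.2, 0.8)]

tokens = quantize(frames, codebook)
print(tokens)  # [0, 1, 2]
```

A generation model can then work with the short sequence of integer tokens instead of raw continuous features, which is the general appeal of quantized speech representations.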

The openness of Alibaba's Tongyi Lab is also commendable. The project team has released the complete models and code on ModelScope and Hugging Face, and provides detailed training, inference and fine-tuning guides on GitHub. This open-source approach should accelerate research and application development in voice technology and benefit the industry as a whole.

Project address: https://github.com/FunAudioLLM