The VITA-MLLM team recently released its latest research result, VITA-1.5. This version is a comprehensive upgrade of VITA-1.0, particularly in the responsiveness and accuracy of multimodal interaction. VITA-1.5 supports both English and Chinese and improves substantially on several performance indicators, giving users a smoother and more efficient interactive experience.

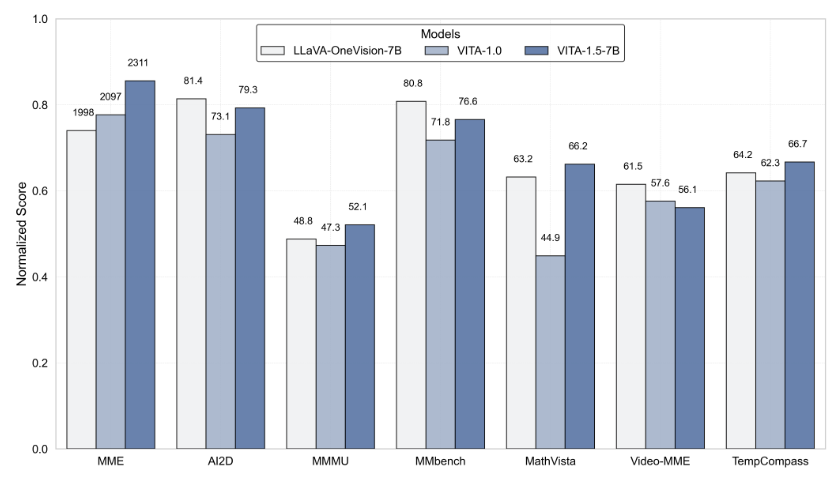

VITA-1.5 makes a major improvement in interaction latency, cutting the original delay of about 4 seconds to 1.5 seconds. As a result, users experience almost no perceptible delay during voice interaction. VITA-1.5 also improves significantly in multimodal performance: across benchmarks such as MME, MMBench and MathVista, its average score rose from 59.8 to 70.8.

Voice processing has also been heavily optimized. The error rate of the automatic speech recognition (ASR) system dropped from 18.4 to 7.5, which makes the model markedly better at understanding and responding to voice commands. VITA-1.5 also introduces a new end-to-end text-to-speech (TTS) module that takes embeddings from the large language model (LLM) directly as input, improving the naturalness and coherence of the synthesized speech.

To keep its multimodal capabilities balanced, VITA-1.5 adopts a progressive training strategy that minimizes the impact of the newly added speech modules on vision-language performance: image comprehension performance decreased only slightly, from 71.3 to 70.8. With these changes, VITA-1.5 pushes the boundaries of real-time visual and voice interaction and lays a foundation for future intelligent interaction applications.
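To put the figures quoted above on a common scale, the short Python sketch below converts each before/after pair into a relative change. The helper name `relative_change` and the metric labels are illustrative choices, not part of the VITA codebase; the numbers are the ones reported in this article.

```python
def relative_change(before: float, after: float) -> float:
    """Return the change from `before` to `after` as a percentage of `before`."""
    return (after - before) / before * 100.0


# Before/after pairs as reported in the article (VITA-1.0 -> VITA-1.5,
# except image comprehension, which is before/after adding the speech modules).
metrics = {
    "interaction latency (s)": (4.0, 1.5),
    "average benchmark score": (59.8, 70.8),
    "ASR error rate": (18.4, 7.5),
    "image comprehension score": (71.3, 70.8),
}

for name, (before, after) in metrics.items():
    change = relative_change(before, after)
    print(f"{name}: {before} -> {after} ({change:+.1f}%)")
```

Read this way, latency falls by about 62.5%, the average benchmark score rises by about 18.4% in relative terms, the ASR error rate falls by about 59.2%, and image comprehension gives up only about 0.7%.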

For developers, VITA-1.5 is straightforward to use. They can get started with a few simple command-line operations, and the team provides both a basic demo and a real-time interactive demo to help users understand and use the system. The real-time interactive demo requires some additional modules, such as a Voice Activity Detection (VAD) module. In addition, the code of VITA-1.5 will be fully open source, so developers can participate, contribute, and jointly advance the technology.
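For readers unfamiliar with the term, a VAD module decides which stretches of an audio stream contain speech, so that the system only processes input when someone is actually talking. The sketch below is a deliberately simple energy-threshold detector written for illustration only. It is not the VAD module used by VITA-1.5, and production systems typically rely on trained models; the function names, frame length, and threshold are all assumptions.

```python
import math


def frame_energies(samples, frame_len):
    """Split samples into non-overlapping frames and return each frame's RMS energy."""
    energies = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        energies.append(math.sqrt(sum(x * x for x in frame) / frame_len))
    return energies


def detect_speech(samples, frame_len=160, threshold=0.1):
    """Return one boolean per frame: True where the RMS energy exceeds the threshold.

    frame_len=160 corresponds to 10 ms at a 16 kHz sample rate (an assumption).
    """
    return [energy > threshold for energy in frame_energies(samples, frame_len)]


# Synthetic test signal: 0.1 s of silence, 0.1 s of a 440 Hz tone standing in
# for speech, then 0.1 s of silence again.
sample_rate = 16000
silence = [0.0] * 1600
tone = [0.5 * math.sin(2 * math.pi * 440 * n / sample_rate) for n in range(1600)]

flags = detect_speech(silence + tone + silence)
print("".join("#" if active else "." for active in flags))
```

The printed line marks active frames with `#` and inactive frames with `.`, so the tone shows up as a block of `#` between two runs of `.`. A real-time pipeline would apply the same idea to incoming audio chunks, usually with smoothing so that brief pauses do not cut an utterance short.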

The launch of VITA-1.5 marks another milestone in the field of interactive multimodal large language models and reflects the VITA-MLLM team's continued focus on technical innovation and user experience. The release brings users a more capable interactive experience and points to a direction for the future development of multimodal technology.

Project page: https://github.com/VITA-MLLM/VITA?tab=readme-ov-file

Key points:

VITA-1.5 cuts interaction latency from 4 seconds to 1.5 seconds, significantly improving the user experience.

Multimodal performance has improved, with the average score across multiple benchmarks rising from 59.8 to 70.8.

Voice processing is stronger: the ASR error rate has dropped from 18.4 to 7.5, making speech recognition more accurate.