Hugging Face has launched SmolLM2, a new family of compact language models. SmolLM2 comes in three parameter sizes and performs well even on resource-constrained devices, which makes it well suited to edge computing and mobile applications. It outperforms comparable models on multiple benchmarks and is especially strong in scientific reasoning and commonsense tasks. Because SmolLM2 is open source under the Apache 2.0 license, it is also easy to access and build on.

Hugging Face today released SmolLM2, a new set of compact language models that perform impressively while requiring far fewer computing resources than larger models. The models are released under the Apache 2.0 license and come in three sizes (135M, 360M, and 1.7B parameters). This makes them suitable for smartphones and other edge devices with limited processing power and memory.

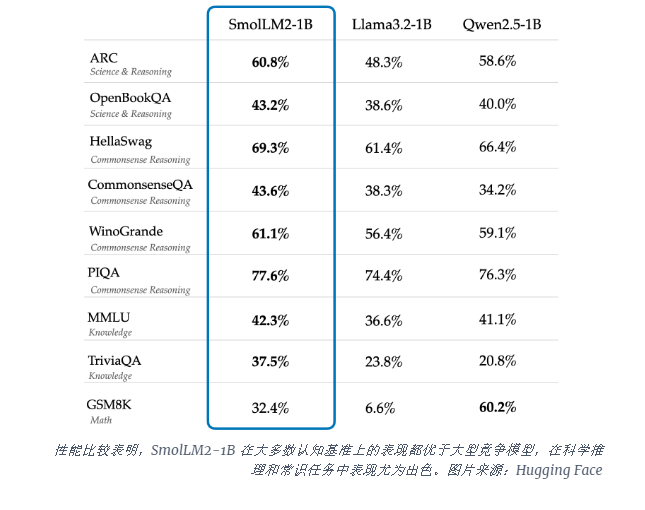

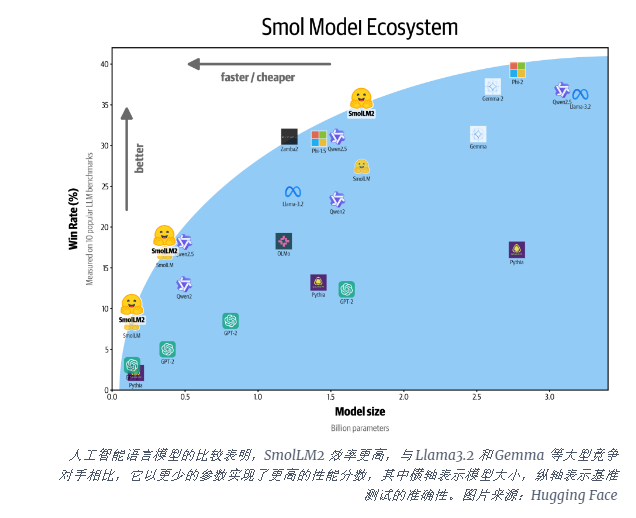

The SmolLM2-1.7B model outperforms Meta's Llama 1B model on several key benchmarks, especially in scientific reasoning and commonsense tasks. It also beats larger competing models on most cognitive benchmarks. Hugging Face credits this to training on a diverse mix of datasets, including FineWeb-Edu and specialized mathematics and coding datasets.

The launch of SmolLM2 comes at a critical moment, as the AI industry struggles with the computational demands of running large language models (LLMs). Companies such as OpenAI and Anthropic continue to push the boundaries of model size. At the same time, there is growing recognition of the need for efficient, lightweight AI that can run locally on devices.

SmolLM2 takes a different approach by bringing powerful AI capabilities directly to personal devices. This points to a future in which more users and companies can use advanced AI tools, not just tech giants with huge data centers. The models support a range of applications, including text rewriting, summarization, and function calling. They suit scenarios where privacy, latency, or connectivity constraints make cloud-based AI impractical.

While these smaller models still have limitations, they are part of a broader trend toward more efficient AI models. The release of SmolLM2 suggests that the future of artificial intelligence may belong not only to ever-larger models but also to more efficient architectures that deliver strong performance with fewer resources.

SmolLM2 opens up new possibilities for lightweight AI applications. It suggests that AI will spread to a wider range of devices and scenarios, giving users faster and more convenient experiences. Its open-source release should also encourage further development and innovation in the AI community. More compact, efficient models are likely to follow, helping to make advanced AI more widely accessible.