This paper introduces L4GM (Large 4D Gaussian Reconstruction Model), a model that efficiently generates high-quality animated objects from single-view videos. It is trained on a large dataset of multi-view videos, and its simple design lets it produce a result in a single feed-forward pass that takes only about one second. L4GM can reconstruct long videos and high-frame-rate videos, and it supports 4D interpolation to increase the frame rate substantially. The model also generalizes well, producing satisfactory results on real-world videos.

Recently, a research team proposed L4GM, a large 4D Gaussian reconstruction model that generates animated objects from single-view video input with impressive results.

The keys to this model are its new dataset and its simplified design, which allow a single feed-forward pass to complete in about one second while keeping the output animated objects high quality.

Video-to-4D synthesis

L4GM can generate 4D objects from videos within seconds. The following video example shows the target object in the original video alongside the corresponding 4D Gaussian reconstruction.

Reconstructing long, high-fps videos

L4GM can also reconstruct a 10-second video at 30 fps, as shown in the following video example.

4D interpolation

The team also trained a 4D interpolation model that increases the frame rate by 3x, as shown in the following video example.

Left: before interpolation. Right: after interpolation
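
A 3x frame-rate increase means two new frames between every pair of reconstructed time steps. Assuming the interpolation model's input is made by linearly blending multi-view renders of neighboring reconstructions (an assumption on our part; the function name and the (V, H, W, C) array layout are hypothetical), preparing that input could be sketched as follows. The learned model that maps these blended views to Gaussians is not shown.

```python
import numpy as np


def blend_multiview_frames(views_t0: np.ndarray, views_t1: np.ndarray, factor: int = 3) -> np.ndarray:
    """Linearly blend two multi-view renders into a factor-times denser sequence.

    views_t0, views_t1: arrays of shape (V, H, W, C) holding V rendered views
    at two consecutive reconstructed time steps (hypothetical layout).
    Returns an array of shape (factor + 1, V, H, W, C): both endpoints plus
    factor - 1 blended in-between frames.
    """
    if views_t0.shape != views_t1.shape:
        raise ValueError("renders at both time steps must have the same shape")
    weights = np.linspace(0.0, 1.0, factor + 1)  # 0, 1/3, 2/3, 1 for factor=3
    w = weights[:, None, None, None, None]        # broadcast over (V, H, W, C)
    return (1.0 - w) * views_t0[None] + w * views_t1[None]
```

Image-space blending produces blurry in-between views on its own; in this reading, the point of a learned interpolator is to turn such rough inputs into coherent intermediate Gaussians.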

Building a multi-view video dataset

The research team built a dataset of multi-view videos of carefully curated, rendered animated objects from Objaverse. The dataset depicts 44,000 diverse objects with 110,000 animations rendered from 48 viewpoints, resulting in 12 million videos with a total of 300 million frames. Trained on this dataset, L4GM builds directly on LGM, a pretrained large 3D reconstruction model that outputs 3D Gaussian ellipsoids from multi-view image input.
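
Each 3D Gaussian ellipsoid is described by a handful of parameters. A common parameterization in 3D Gaussian splatting is a center, an opacity, a per-axis scale, a rotation quaternion and an RGB color, 14 numbers in total. The sketch below assumes this layout (the channel order and both helper names are our assumptions, not taken from the L4GM code) and treats a 4D output as one such Gaussian set per time step.

```python
import numpy as np

# Assumed channel layout for one Gaussian (14 values in total).
# The order is illustrative, not taken from the L4GM or LGM code.
LAYOUT = {
    "xyz": slice(0, 3),        # center position
    "opacity": slice(3, 4),    # scalar opacity in (0, 1)
    "scale": slice(4, 7),      # per-axis extent of the ellipsoid
    "rotation": slice(7, 11),  # unit quaternion
    "rgb": slice(11, 14),      # color
}
NUM_CHANNELS = 14


def split_gaussians(params: np.ndarray) -> dict[str, np.ndarray]:
    """Split an (..., 14) array into named Gaussian attributes."""
    if params.shape[-1] != NUM_CHANNELS:
        raise ValueError(f"expected last dim {NUM_CHANNELS}, got {params.shape[-1]}")
    return {name: params[..., sl] for name, sl in LAYOUT.items()}


def random_gaussians(num_frames: int, num_gaussians: int, seed: int = 0) -> np.ndarray:
    """Hypothetical 4D Gaussian sequence: one 3D Gaussian set per time step."""
    rng = np.random.default_rng(seed)
    g = rng.normal(size=(num_frames, num_gaussians, NUM_CHANNELS))
    op, sc, rot = LAYOUT["opacity"], LAYOUT["scale"], LAYOUT["rotation"]
    g[..., op] = 1.0 / (1.0 + np.exp(-g[..., op]))  # squash opacity into (0, 1)
    g[..., sc] = np.exp(g[..., sc])                 # keep scales positive
    q = g[..., rot]
    g[..., rot] = q / np.linalg.norm(q, axis=-1, keepdims=True)  # unit quaternions
    return g
```

Usage: `split_gaussians(random_gaussians(4, 100))["xyz"]` has shape `(4, 100, 3)`, i.e. one center per Gaussian per time step.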

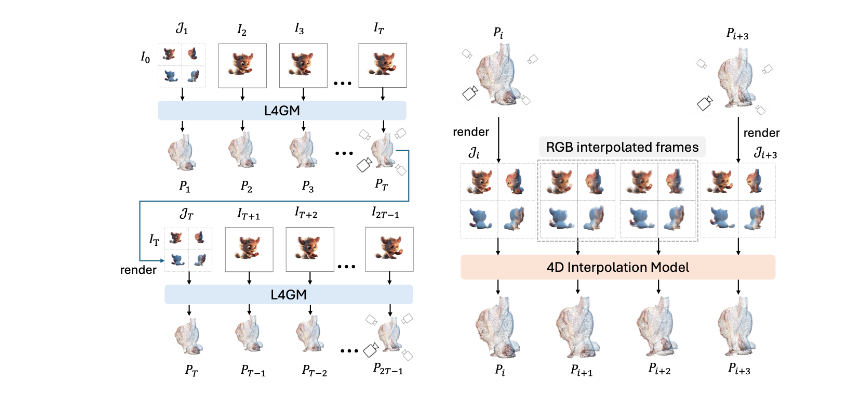

L4GM produces a per-frame 3D Gaussian splatting representation from video frames sampled at a low fps, then upsamples the representation to a higher fps to achieve temporal smoothness.
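
The low-fps-then-upsample schedule can be illustrated with simple frame arithmetic. This sketch assumes keyframes are taken every `stride`-th input frame and that upsampling inserts `factor - 1` frames between consecutive keyframes, using the 3x factor mentioned for the interpolation model; both function names are hypothetical.

```python
def keyframe_indices(num_input_frames: int, stride: int) -> list[int]:
    """Indices of input frames kept when sampling at 1/stride of the input fps."""
    if stride < 1:
        raise ValueError("stride must be >= 1")
    return list(range(0, num_input_frames, stride))


def upsampled_schedule(num_keyframes: int, keyframe_fps: float, factor: int) -> list[tuple[float, bool]]:
    """(timestamp in seconds, is_keyframe) pairs after inserting factor - 1
    frames between each pair of consecutive keyframes."""
    if num_keyframes < 1 or factor < 1:
        raise ValueError("need at least one keyframe and factor >= 1")
    step = 1.0 / (keyframe_fps * factor)       # spacing of the upsampled frames
    count = factor * (num_keyframes - 1) + 1   # keyframes plus in-between frames
    return [(i * step, i % factor == 0) for i in range(count)]
```

For a 10-frame clip at 30 fps with stride 3, the keyframes are input frames 0, 3, 6 and 9 (10 fps); upsampling by 3 gives 10 timestamps spaced 1/30 s apart, with the keyframes landing back on their original positions.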

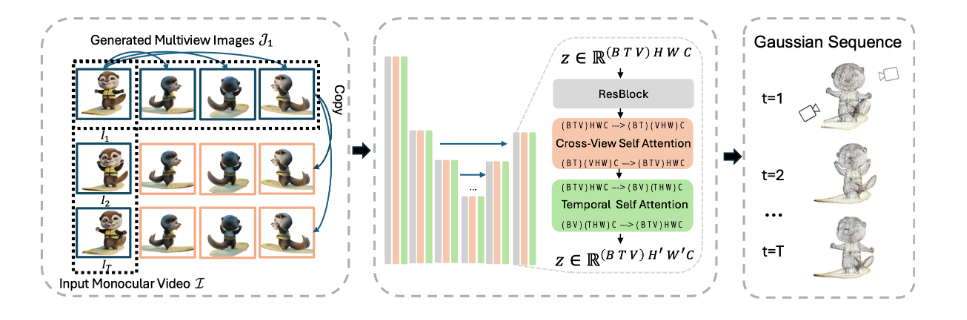

To help the model learn temporal consistency, the research team added temporal self-attention layers to the base LGM and trained the model with a multi-view rendering loss at each time step. The representation is then upsampled to a higher frame rate by a separately trained interpolation model, which produces intermediate 3D Gaussian representations.
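
A per-time-step multi-view rendering loss supervises every frame's Gaussians from several cameras at once. The minimal sketch below uses plain mean squared error only; the actual training objective may combine other terms, and differentiable rendering is replaced by precomputed arrays (both are assumptions for illustration, and the function name is hypothetical).

```python
import numpy as np


def per_timestep_multiview_loss(pred: np.ndarray, target: np.ndarray) -> tuple[float, np.ndarray]:
    """Image-space MSE averaged over views and pixels, separately per time step.

    pred, target: arrays of shape (T, V, H, W, C). pred[t, v] stands in for the
    Gaussians of time step t rendered from camera v (the renderer is not shown).
    Returns the overall loss and the per-time-step losses of shape (T,).
    """
    if pred.shape != target.shape or pred.ndim != 5:
        raise ValueError("expected matching (T, V, H, W, C) arrays")
    per_pixel = (pred - target) ** 2
    per_timestep = per_pixel.mean(axis=(1, 2, 3, 4))  # average over views and pixels
    return float(per_timestep.mean()), per_timestep
```

Keeping the per-time-step values separate makes it easy to see whether errors concentrate on particular frames, for example frames far from the one with multi-view input.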

After training only on synthetic data, L4GM generalizes well to in-the-wild videos, producing high-quality animated 3D objects.

Technical framework

The model takes a single-view video and multi-view images of a single time step as input, and outputs a set of 4D Gaussians. It adopts a U-Net architecture, using cross-view self-attention for view consistency and temporal (cross-time) self-attention for temporal consistency.
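
The two attention types differ mainly in which axis the tokens attend along. The NumPy sketch below shows the reshapes, assuming features of shape (T, V, N, C) for T time steps, V views, N tokens per view and C channels; it uses single-head attention without learned projections, so it illustrates the mixing pattern rather than reproducing L4GM's layers, and all names are hypothetical.

```python
import numpy as np


def self_attention(x: np.ndarray) -> np.ndarray:
    """Single-head scaled dot-product self-attention over axis -2 of (..., L, C).
    Queries, keys and values are x itself (no learned projections)."""
    scores = x @ np.swapaxes(x, -1, -2) / np.sqrt(x.shape[-1])  # (..., L, L)
    scores -= scores.max(axis=-1, keepdims=True)                 # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)               # softmax over keys
    return weights @ x


def cross_view_attention(feats: np.ndarray) -> np.ndarray:
    """feats: (T, V, N, C). Tokens of all views at the same time step attend to each other."""
    T, V, N, C = feats.shape
    out = self_attention(feats.reshape(T, V * N, C))
    return out.reshape(T, V, N, C)


def temporal_attention(feats: np.ndarray) -> np.ndarray:
    """feats: (T, V, N, C). Each (view, token) position attends across the T time steps."""
    T, V, N, C = feats.shape
    x = feats.transpose(1, 2, 0, 3).reshape(V * N, T, C)  # one sequence along time per position
    out = self_attention(x)
    return out.reshape(V, N, T, C).transpose(2, 0, 1, 3)
```

Cross-view attention never mixes different time steps, and temporal attention never mixes different views or token positions; stacking both lets information flow across views and time.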

L4GM supports autoregressive reconstruction: multi-view renderings of the last Gaussians from one reconstruction serve as input to the next, and two consecutive reconstructions overlap by one frame. The research team also trained a 4D interpolation model, which takes interpolated multi-view videos rendered from the reconstruction results and outputs interpolated Gaussians.
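
The autoregressive scheme can be sketched as a sliding window with one frame of overlap. In this sketch, `reconstruct` and `render_multiview` are hypothetical stand-ins for the model and the renderer, and the window length is an arbitrary parameter rather than a value from L4GM.

```python
from typing import Any, Callable, Sequence


def chunk_windows(num_frames: int, window: int) -> list[tuple[int, int]]:
    """[start, end) frame ranges where consecutive windows share exactly one frame."""
    if num_frames < 1 or window < 2:
        raise ValueError("need at least one frame and window >= 2")
    windows = []
    start = 0
    while True:
        end = min(start + window, num_frames)
        windows.append((start, end))
        if end == num_frames:
            return windows
        start = end - 1  # one frame of overlap with the previous chunk


def reconstruct_autoregressive(
    frames: Sequence[Any],
    window: int,
    reconstruct: Callable[[Sequence[Any], Any], list],  # hypothetical model call
    render_multiview: Callable[[Any], Any],             # hypothetical renderer
    initial_views: Any,
) -> list:
    """Reconstruct a long video chunk by chunk, conditioning each chunk on
    multi-view renders of the previous chunk's last Gaussians."""
    views = initial_views
    result = []
    for i, (start, end) in enumerate(chunk_windows(len(frames), window)):
        gaussians = reconstruct(frames[start:end], views)
        # The first frame of every later chunk duplicates the previous chunk's last frame.
        result.extend(gaussians if i == 0 else gaussians[1:])
        views = render_multiview(gaussians[-1])  # multi-view input for the next chunk
    return result
```

Keeping the earlier chunk's Gaussians for the shared frame and discarding the duplicate is one reasonable choice; blending the two estimates would be another.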

Potential applications of L4GM include:

Video content generation: L4GM can generate 4D models of animated objects from single-view video input, which is useful in visual effects production, game development, and other fields, for example to create effects animations or build virtual scenes.

Video reconstruction and repair: L4GM can reconstruct long, high-frame-rate videos, which may make it useful for video repair and restoration to improve quality and clarity, with possible uses in film restoration, video compression, and video processing.

Video interpolation: with the trained 4D interpolation model, L4GM can increase the frame rate and make motion smoother, with potential applications in video editing and in producing slow-motion or fast-motion effects.

3D asset generation: L4GM can generate high-quality animated 3D assets, which are useful for virtual reality (VR) and augmented reality (AR) applications and for game development.

Product page: https://top.aibase.com/tool/l4gm

All in all, L4GM marks significant progress in 4D Gaussian reconstruction. Its efficiency, high-quality output, and broad application prospects make it an important research result that is likely to advance areas such as video processing and 3D asset generation.