Arcee Spark, a large language model based on Qwen2, was recently released. With a 128k-token context length and fine-tuning on 1.8 million samples, it has made waves in the field of artificial intelligence. Arcee Spark performs strongly across multiple benchmarks, in some cases surpassing GPT-3.5, which sets a new reference point for the development of large language models. At 7B parameters, it is also presented as an improvement in both speed and performance.

Arcee Spark is built on Qwen2, fine-tuned on 1.8 million samples, and supports a 128k-token context. Its release has attracted widespread attention, especially among practitioners in the field of artificial intelligence.

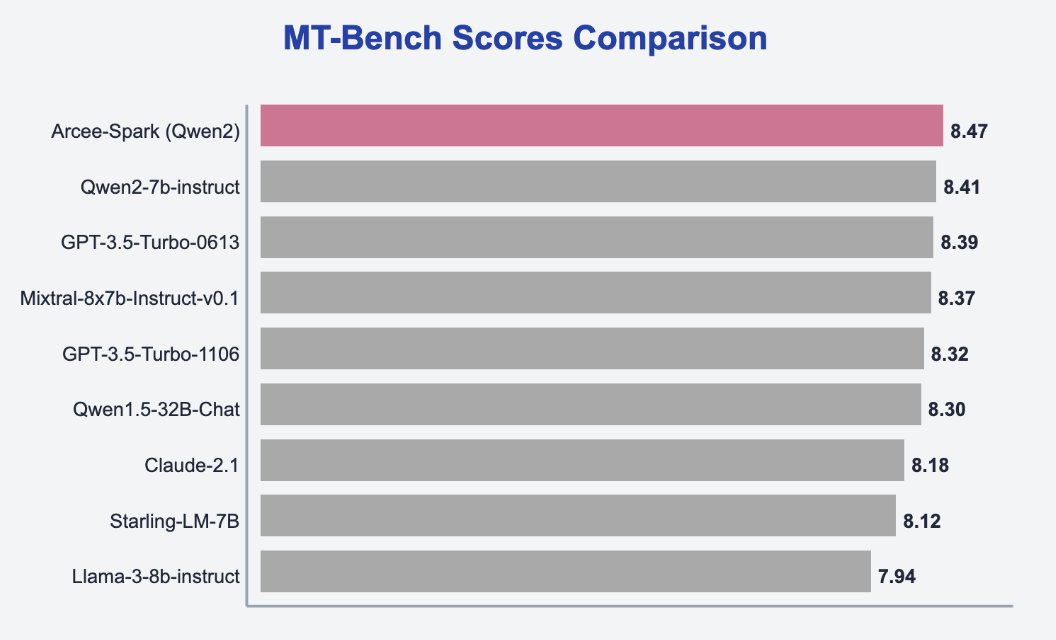

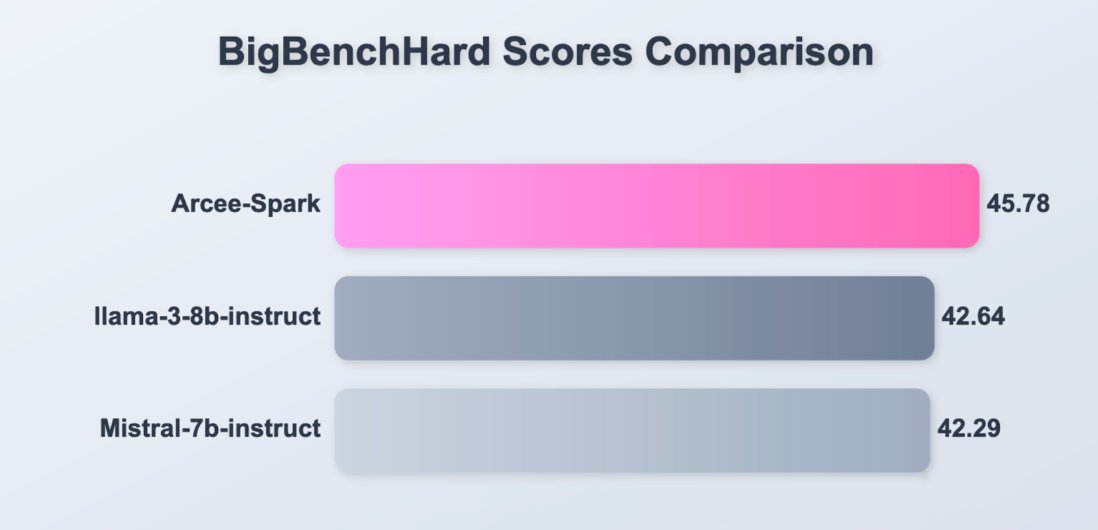

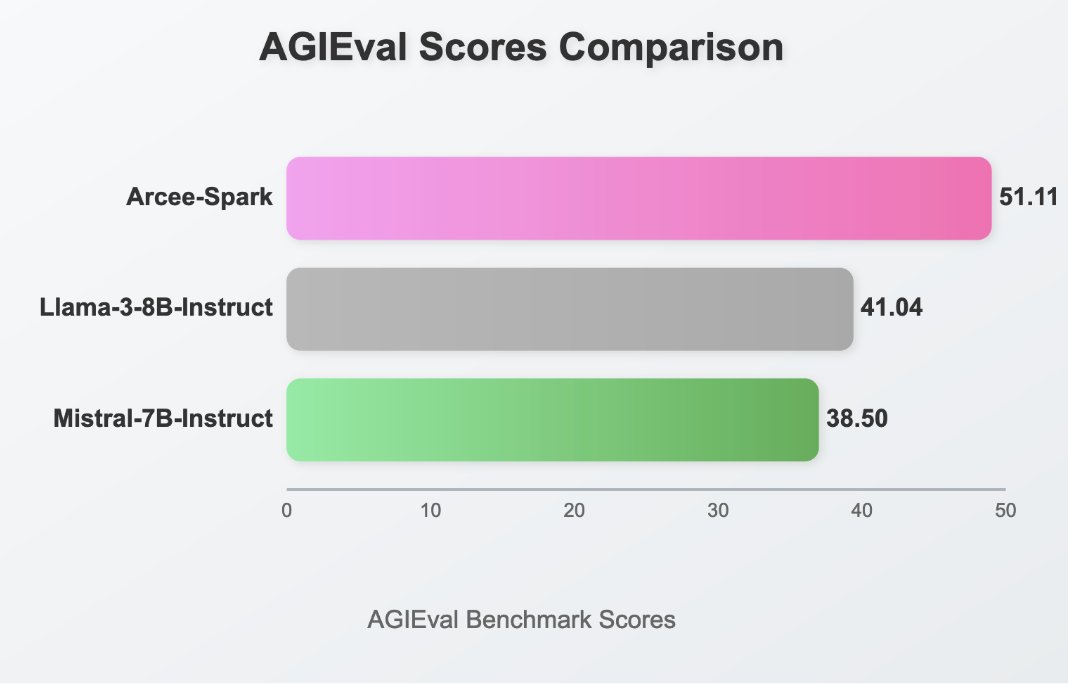

Arcee Spark performs well on benchmarks such as MT-Bench, achieving the highest scores among similar models and surpassing GPT-3.5 on multiple tasks. It is reportedly a 7B-parameter model, described as a faster and more powerful version of Qwen2.

The arrival of Arcee Spark represents a notable advance in large language model technology and points to one possible direction for the future development of AI. Its strong performance and fast operation could open up applications across many industries, and further research and real-world use are worth watching.