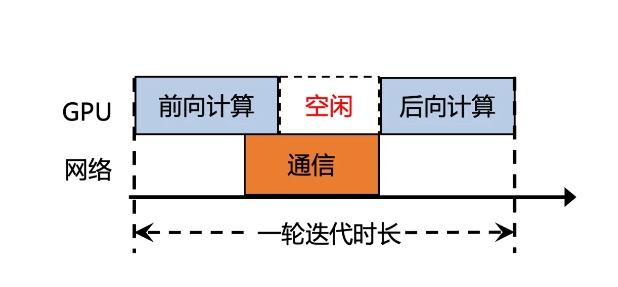

Tencent Cloud has released Xingmai Network 2.0, an upgraded version of its network for large model training. The upgrade targets the low communication efficiency that slows large model training. In the previous version of the Xingmai network, synchronizing computation results accounted for more than 50% of training time, which seriously limited training efficiency. Through multiple technical upgrades, the new version significantly improves network performance and reliability and provides stronger support for large-scale model training.

1. A single cluster now supports networking of 100,000 GPU cards, double the previous scale. Network communication efficiency increases by 60%, large model training efficiency increases by 20%, and the time to locate faults drops from days to minutes.

2. Self-developed switches, optical modules, network cards and other network equipment have been upgraded, making the infrastructure more reliable and supporting single clusters of more than 100,000 GPU cards.

3. The new communication protocol TiTa2.0 is deployed on the network card, and its congestion control is upgraded to an active congestion control algorithm. This raises communication efficiency by 30% and large model training efficiency by 10%.

4. The high-performance collective communication library TCCL2.0 uses NVLink+NET heterogeneous parallel communication, transmitting data over NVLink and the network in parallel. It also includes the Auto-Tune Network Expert adaptive algorithm. Together these improve communication performance by 30% and large model training efficiency by 10%.

5. The newly added Lingjing simulation platform, a proprietary Tencent technology, monitors the entire cluster network, accurately locates problematic GPU nodes, and cuts the time to locate faults in 10,000-card-scale training from days to minutes.
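The announcement does not describe how TiTa2.0's active congestion control works, so the following is only a hypothetical toy model of the general idea. It contrasts a reactive AIMD-style scheme, where senders back off only after a switch queue has already built up, with a proactive scheme that allocates each sender's rate before it sends. The single-bottleneck model, the function names and all parameters are illustrative assumptions, not TiTa2.0's algorithm.

```python
# Toy model of one bottleneck link shared by several senders.
# Hypothetical illustration only: this is NOT TiTa2.0's algorithm.


def simulate_reactive(n_senders: int, capacity: float, ticks: int, threshold: float) -> float:
    """Reactive AIMD: senders keep speeding up until the switch queue passes
    a marking threshold (congestion has already happened), then halve their
    rate. Returns the peak queue length observed."""
    rates = [1.0] * n_senders
    queue = peak = 0.0
    for _ in range(ticks):
        # The queue grows by however much the offered load exceeds capacity,
        # and drains when the load falls below capacity.
        queue = max(0.0, queue + sum(rates) - capacity)
        peak = max(peak, queue)
        if queue > threshold:
            rates = [r / 2 for r in rates]  # multiplicative decrease after the signal
        else:
            rates = [r + 1.0 for r in rates]  # additive increase while no signal
    return peak


def simulate_active(n_senders: int, capacity: float, ticks: int) -> float:
    """Active (proactive): each sender's rate is allocated before it sends,
    as an equal share of the bottleneck, so load never exceeds capacity.
    Returns the peak queue length observed."""
    rates = [capacity / n_senders] * n_senders
    queue = peak = 0.0
    for _ in range(ticks):
        queue = max(0.0, queue + sum(rates) - capacity)
        peak = max(peak, queue)
    return peak


if __name__ == "__main__":
    # Hypothetical parameters: 4 senders sharing a link of 100 units per tick.
    print("reactive peak queue:", simulate_reactive(4, 100.0, 200, 10.0))
    print("active peak queue:  ", simulate_active(4, 100.0, 200))
```

In this toy model the reactive senders always overshoot the link before they slow down, so a queue (and the latency it adds) appears; the proactive allocation keeps the queue empty. Real protocols must also learn capacity and handle flows that start and stop, which this sketch ignores.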
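One way to picture "parallel transmission of data" over NVLink and the network is to split a single transfer across both paths in proportion to their bandwidth, so that both finish at the same time. The sketch below is a hypothetical illustration of that idea, not TCCL2.0's scheduler. The function names, the path labels and the bandwidth figures are all assumptions.

```python
from typing import Dict

# Hypothetical sketch: split one transfer across heterogeneous paths in
# proportion to bandwidth. Not TCCL2.0's actual scheduling logic.


def split_by_bandwidth(total_mb: int, bandwidths: Dict[str, int]) -> Dict[str, int]:
    """Give each path a share proportional to its bandwidth so that all
    paths finish at roughly the same time. Any rounding remainder goes to
    the last path."""
    total_bw = sum(bandwidths.values())
    names = list(bandwidths)
    shares = {name: total_mb * bandwidths[name] // total_bw for name in names[:-1]}
    shares[names[-1]] = total_mb - sum(shares.values())
    return shares


def completion_time(shares: Dict[str, int], bandwidths: Dict[str, int]) -> float:
    """Paths transmit in parallel, so the slowest one sets the finish time."""
    return max(shares[n] / bandwidths[n] for n in shares)


if __name__ == "__main__":
    # Hypothetical bandwidths in MB/ms; real NVLink and NIC figures vary by hardware.
    bw = {"nvlink": 300, "net": 50}
    shares = split_by_bandwidth(1400, bw)
    print(shares)                       # {'nvlink': 1200, 'net': 200}
    print(completion_time(shares, bw))  # 4.0
    # For comparison: sending everything over NVLink alone takes about 4.67 ms.
    print(completion_time({"nvlink": 1400}, {"nvlink": 300}))
```

Proportional splitting is the simplest rule that balances finish times. A real library would also account for per-path latency, chunking and contention, which is the kind of tuning an adaptive component such as Auto-Tune Network Expert presumably addresses.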

Through these upgrades, the Xingmai network's communication efficiency has increased by 60%, large model training efficiency by 20%, and fault location has become more accurate. These improvements make large model training faster and let expensive GPU resources be used more fully.
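The link between the 60% communication figure and the 20% training figure can be roughly checked with Amdahl's law, since only the communication share of each training step gets faster. This back-of-the-envelope sketch rests on assumptions the announcement does not state: communication takes 50% of step time (the article says "more than 50%" for the old network), it is not overlapped with compute, and "60% higher efficiency" means the communication phase runs 1.6x faster.

```python
def step_speedup(comm_fraction: float, comm_speedup: float) -> float:
    """Amdahl's law: only the communication share of each training step
    gets faster; the compute share is unchanged."""
    return 1.0 / ((1.0 - comm_fraction) + comm_fraction / comm_speedup)


if __name__ == "__main__":
    # Assumptions: communication is 50% of step time, not overlapped with
    # compute, and "60% higher efficiency" means it runs 1.6x faster.
    print(f"{step_speedup(0.5, 1.6):.3f}")  # 1.231
```

Under these assumptions a step becomes about 1.23x faster, in the same range as the reported 20%. Overlap between communication and compute, or a different communication share, would change the estimate, so this is a sanity check rather than a derivation of Tencent's figure.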

Xingmai Network 2.0 brings significant efficiency gains and stronger reliability to large model training. Its improvements in network equipment, communication protocols and fault location will advance large model technology, benefit users, and deliver a more cost-effective AI training experience.