On September 26, 2024, OpenAI released its latest multimodal content moderation model, "omni-moderation-latest". The model is built on GPT-4o and identifies harmful text and image content more accurately than its predecessor, with particularly strong gains on non-English content. The upgrade should improve both the efficiency and the accuracy of content moderation systems, giving developers a more capable tool for building safer user environments. The editor of Downcodes walks you through the advantages and features of the new model.

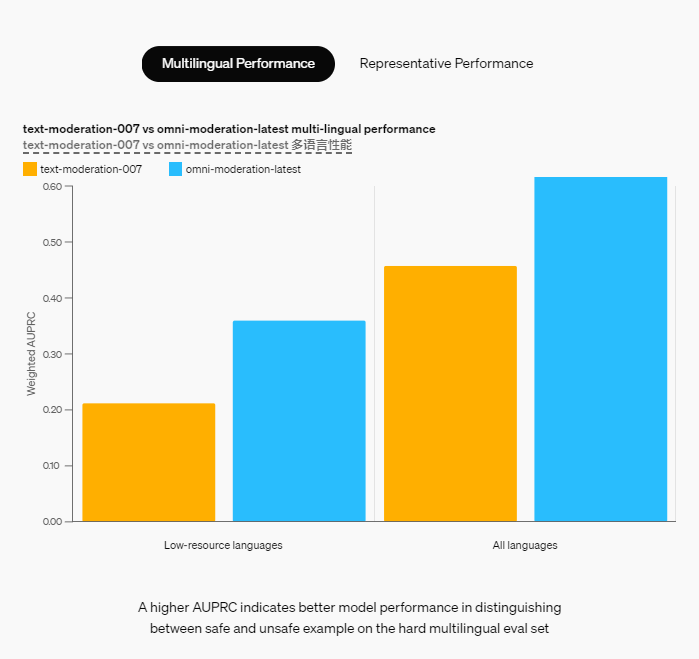

Content safety is a growing problem in the digital age, and OpenAI's "omni-moderation-latest" model is an important step toward addressing it. The model supports multimodal moderation of text and images, is more accurate than the previous model, and adds detection for two new categories of content: illicit and illicit/violent. On non-English content, OpenAI reports a 42% improvement, with the largest gains in low-resource languages. The model is also free to use and easy to access, so developers can integrate it into their own applications with little effort.

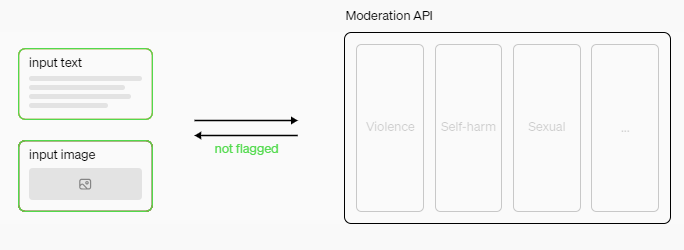

The highlight of the new model is that it moderates both text and image input, and that it performs especially well on non-English content.

Compared with the previous moderation model, "omni-moderation-latest" not only identifies harmful content more accurately but also detects more kinds of it. It scores input against multiple categories, such as violence, self-harm, and sexual content, helping users communicate in a safer space.

Since OpenAI launched the Moderation API in 2022, the volume and variety of content that automated moderation systems must handle has kept growing, especially as more and more AI applications move into production. Today many companies, including Grammarly and ElevenLabs, use the API to protect their users from inappropriate content.

The advantages of the new model are reflected in many aspects:

First, it enables multimodal classification of harmful content, assessing combinations of images and text to identify risks related to violence and sexual abuse.
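To make the combined image-and-text assessment concrete, here is a minimal sketch of a request body that sends a caption and an image together. It assumes the request shape described in OpenAI's Moderation API documentation, where `input` is a list of typed parts; the helper name `build_moderation_request` and the example URL are hypothetical placeholders, not part of the API.

```python
import json


def build_moderation_request(text, image_url):
    """Build the JSON body for one combined text + image moderation request.

    Hypothetical helper: only the dictionary layout follows the API docs.
    """
    return {
        "model": "omni-moderation-latest",
        "input": [
            # Text part of the content to moderate.
            {"type": "text", "text": text},
            # Image part, referenced by URL (placeholder address below).
            {"type": "image_url", "image_url": {"url": image_url}},
        ],
    }


body = build_moderation_request(
    "caption to check", "https://example.com/photo.png"
)
print(json.dumps(body, indent=2))
```

Sending both parts in one request lets the model judge the image and its caption as a single piece of content rather than scoring each in isolation.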

Second, the model adds two new text-only categories, illicit and illicit/violent, which further extend what it can detect.

Third, detection of non-English content is much more accurate. In a test covering 40 languages, OpenAI reports a 42% improvement over the previous model, with the largest gains in low-resource languages.

For developers, the new model remains free to use through the Moderation API and is easy to access. OpenAI hopes the upgrade will let more developers take advantage of its latest research and safety systems to create a safer online experience.
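As a rough illustration of how little code the integration takes, the sketch below posts text to the Moderation API endpoint using only the Python standard library and lists the categories that were flagged. It assumes the `/v1/moderations` endpoint and the `results[0].categories` response layout from OpenAI's API reference; the function names `moderate` and `flagged_categories` are hypothetical, and `sample` is a hand-written stand-in for a response, not real API output.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/moderations"


def moderate(text, api_key):
    """POST text to the Moderation API and return the parsed JSON response."""
    payload = json.dumps(
        {"model": "omni-moderation-latest", "input": text}
    ).encode("utf-8")
    request = urllib.request.Request(
        API_URL,
        data=payload,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def flagged_categories(response):
    """Return the sorted names of categories marked true in the first result."""
    result = response["results"][0]
    return sorted(name for name, hit in result["categories"].items() if hit)


# Hand-written sample in the assumed response shape (not real API output).
sample = {
    "results": [
        {
            "flagged": True,
            "categories": {"violence": True, "self-harm": False, "illicit": False},
        }
    ]
}
print(flagged_categories(sample))
```

With a valid key, `flagged_categories(moderate("some user text", api_key))` would apply the same parsing to a live response; the network call is left out of the runnable part so the sketch works offline.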

Official blog: https://openai.com/index/upgrading-the-moderation-api-with-our-new-multimodal-moderation-model/

**Highlights:**

- The new model "omni-moderation-latest" is based on GPT-4o and supports multimodal moderation of text and images.

- OpenAI reports a 42% improvement in a test covering 40 languages, with the largest gains in low-resource languages.

- Two new text-only categories, illicit and illicit/violent, improve the model's ability to identify such content.

All in all, OpenAI's "omni-moderation-latest" model marks significant progress in content moderation. Its multimodal capabilities, higher accuracy, and multilingual support should raise the level of online content safety, which is good news for developers and users alike. The editor of Downcodes looks forward to OpenAI bringing more innovative technologies in the future and helping to build a safer, healthier, and friendlier Internet.