Downcodes has learned that Tencent Youtu Lab and a research team at Shanghai Jiao Tong University have jointly developed a knowledge enhancement method for optimizing large models. The method does not require traditional model fine-tuning. Instead, it extracts knowledge directly from open source data, which simplifies the optimization process considerably, and it is reported to surpass state-of-the-art (SOTA) methods on multiple tasks. By reducing the dependence of traditional fine-tuning on large amounts of annotated data and computing resources, it opens up new possibilities for deploying large models in practical applications.

The joint work by Tencent Youtu Lab and Shanghai Jiao Tong University offers a new path for large model optimization: it moves past the constraints of traditional fine-tuning by drawing its knowledge from open source data, and its reported results exceed the state of the art (SOTA) on multiple tasks.

In recent years, large language models (LLMs) have made significant progress in many fields, but they still face challenges in practical applications. Traditional fine-tuning requires large amounts of annotated data and computing resources, which many real-world businesses cannot provide. The open source community offers a wealth of fine-tuned models and instruction datasets, but how to use these resources effectively to improve a model's task capability and generalization with only a few labeled samples has remained an open problem for the industry.

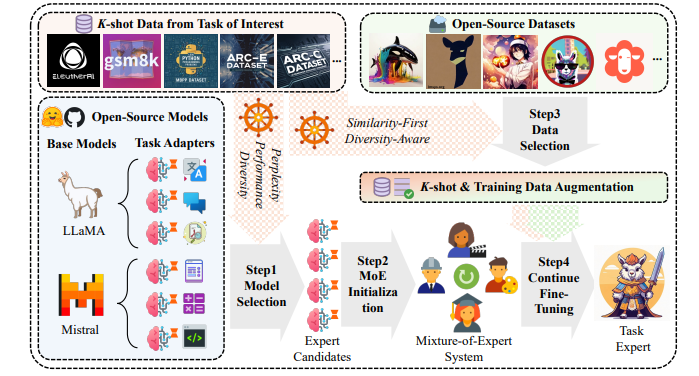

In response to this problem, the research team proposed a new experimental framework that uses open source knowledge to enhance model capabilities when only K-shot labeled data from the real business scenario is available. The framework is designed to get the most value out of these few samples and to improve the performance of large language models on targeted tasks.

The core innovations of this research include:

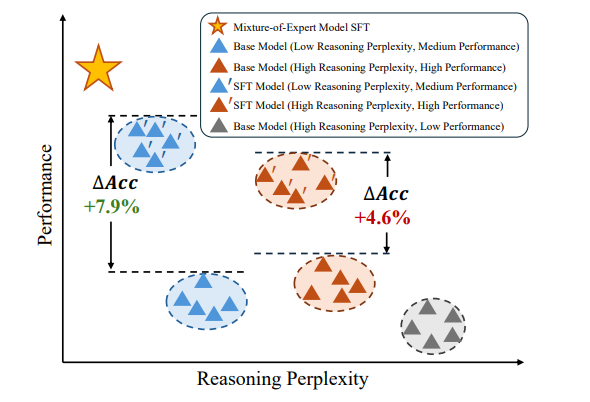

Efficient model selection: The framework makes the most of existing models under limited data conditions by jointly evaluating inference perplexity, model performance, and knowledge richness.
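A minimal sketch of how candidate models could be ranked on the K-shot samples is shown below. It is an illustration under assumptions, not the paper's procedure: the field names (`token_logprobs`, `kshot_accuracy`), the weights, and the way the two signals are combined are hypothetical, and the knowledge richness signal is left out because the article does not define how it is measured.

```python
import math

def perplexity(token_logprobs):
    """Perplexity from per-token natural-log probabilities."""
    return math.exp(-sum(token_logprobs) / len(token_logprobs))

def rank_models(candidates, w_ppl=1.0, w_acc=1.0):
    """Rank candidates: higher K-shot accuracy and lower perplexity score better.

    The combined score is an assumed heuristic: accuracy minus
    log-perplexity (the mean negative log-likelihood per token).
    """
    def score(c):
        return w_acc * c["kshot_accuracy"] - w_ppl * math.log(perplexity(c["token_logprobs"]))
    return sorted(candidates, key=score, reverse=True)

# Hypothetical candidates evaluated on the same K-shot samples.
candidates = [
    {"name": "model_a", "token_logprobs": [-0.1, -0.2], "kshot_accuracy": 0.6},
    {"name": "model_b", "token_logprobs": [-1.0, -1.2], "kshot_accuracy": 0.7},
]
ranked = rank_models(candidates)
```

Here `model_a` ranks first: its slightly lower accuracy is outweighed by a much lower perplexity on the K-shot data.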

Knowledge extraction optimization: The team designed a method for extracting relevant knowledge from open source data. Its data screening strategy balances similarity and diversity, so that the selected data supplements what the model knows while reducing the risk of overfitting.
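One common way to trade similarity off against diversity is a greedy, maximal-marginal-relevance style selection, sketched below. This is a stand-in technique chosen for illustration, not necessarily the screening strategy used in the paper; the function names, the embedding vectors, and the `trade_off` parameter are all assumptions.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def select_examples(kshot_vecs, pool_vecs, budget, trade_off=0.5):
    """Greedily pick pool indices that are similar to the K-shot samples
    but not redundant with examples that were already picked."""
    relevance = [max(cosine(p, q) for q in kshot_vecs) for p in pool_vecs]
    chosen = []
    while len(chosen) < min(budget, len(pool_vecs)):
        best, best_score = None, -math.inf
        for i, p in enumerate(pool_vecs):
            if i in chosen:
                continue
            # Redundancy: highest similarity to anything already selected.
            redundancy = max((cosine(p, pool_vecs[j]) for j in chosen), default=0.0)
            score = trade_off * relevance[i] - (1 - trade_off) * redundancy
            if score > best_score:
                best, best_score = i, score
        chosen.append(best)
    return chosen

# Toy 2-D embeddings: pool items 0 and 1 are near-duplicates of each other.
kshot = [[1.0, 0.0]]
pool = [[1.0, 0.1], [1.0, 0.12], [1.0, -0.5]]
balanced = select_examples(kshot, pool, budget=2, trade_off=0.5)
similarity_only = select_examples(kshot, pool, budget=2, trade_off=1.0)
```

With `trade_off=1.0` the selection keeps both near-duplicates, while the balanced setting swaps the second one for the less similar but more distinct item.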

Adaptive model system: The team built an adaptive system based on a mixture-of-experts (MoE) structure, which lets multiple effective models complement one another's knowledge and improves overall performance.
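The general idea of a mixture-of-experts layer can be sketched as a gate that weights the outputs of several experts. The code below is a generic soft-gating illustration under assumptions, not the architecture from the paper: `mix_experts`, the gate logits, and the optional `top_k` routing are hypothetical names and choices.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def mix_experts(gate_logits, expert_outputs, top_k=None):
    """Combine expert output vectors using gate weights.

    If top_k is given, only the top_k highest-weighted experts are kept
    and their weights are renormalized to sum to 1.
    """
    weights = softmax(gate_logits)
    if top_k is not None:
        keep = sorted(range(len(weights)), key=lambda i: weights[i], reverse=True)[:top_k]
        norm = sum(weights[i] for i in keep)
        weights = [w / norm if i in keep else 0.0 for i, w in enumerate(weights)]
    dim = len(expert_outputs[0])
    mixed = [sum(w * out[d] for w, out in zip(weights, expert_outputs)) for d in range(dim)]
    return mixed, weights

# Two experts with equal gate scores contribute equally.
mixed, weights = mix_experts([0.0, 0.0], [[1.0, 0.0], [0.0, 1.0]])
# With top_k=1, only the highest-scoring expert is used.
routed, routed_weights = mix_experts([2.0, 0.0, 1.0], [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]], top_k=1)
```

Inspecting the returned weights per input is one simple way to see how much each expert is being used.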

In the experiments, the research team ran a comprehensive evaluation on six open source datasets. The results show that the new method outperforms the baselines and other state-of-the-art methods across the tasks tested. By visualizing expert activation patterns, the study also found that every expert makes a contribution the model cannot do without, which further supports the effectiveness of the method.

This research demonstrates the potential of open source knowledge for large models and suggests new directions for the development of artificial intelligence technology. It moves beyond the limits of traditional model optimization and gives enterprises and research institutions a feasible way to improve model performance with limited resources.

As the technology is refined and adopted more widely, it can be expected to play an important role in the intelligent upgrading of various industries. The cooperation between Tencent Youtu and Shanghai Jiao Tong University is both a model of collaboration between academia and industry and an important step in advancing artificial intelligence technology.

Paper address: https://www.arxiv.org/pdf/2408.15915

This result offers a new approach and a feasible solution for large model optimization, with clear potential in practical applications, and its further development is worth watching. Downcodes will continue to follow the latest developments in this field and report on them for readers.