YOLOR

1.0.0

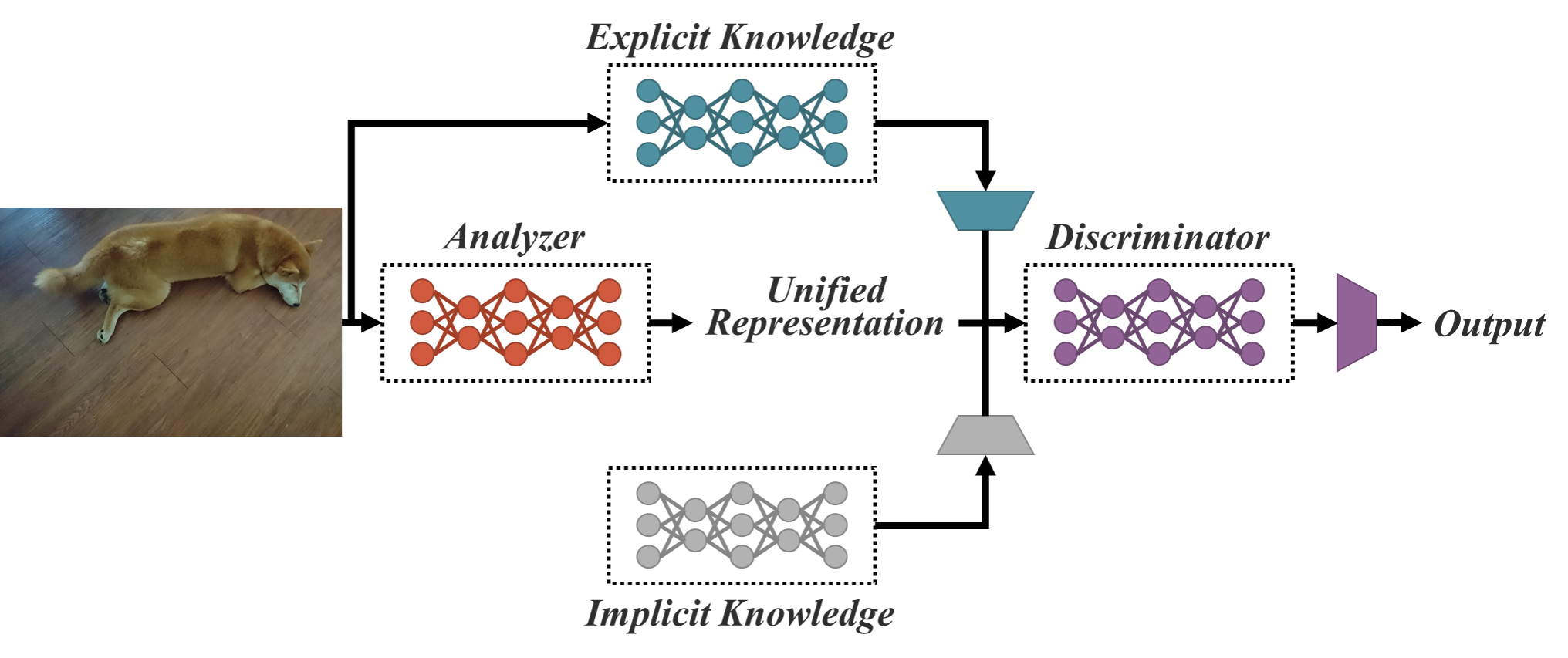

Implementation of the paper "You Only Learn One Representation: Unified Network for Multiple Tasks"

To reproduce the results in the table, please use this branch.

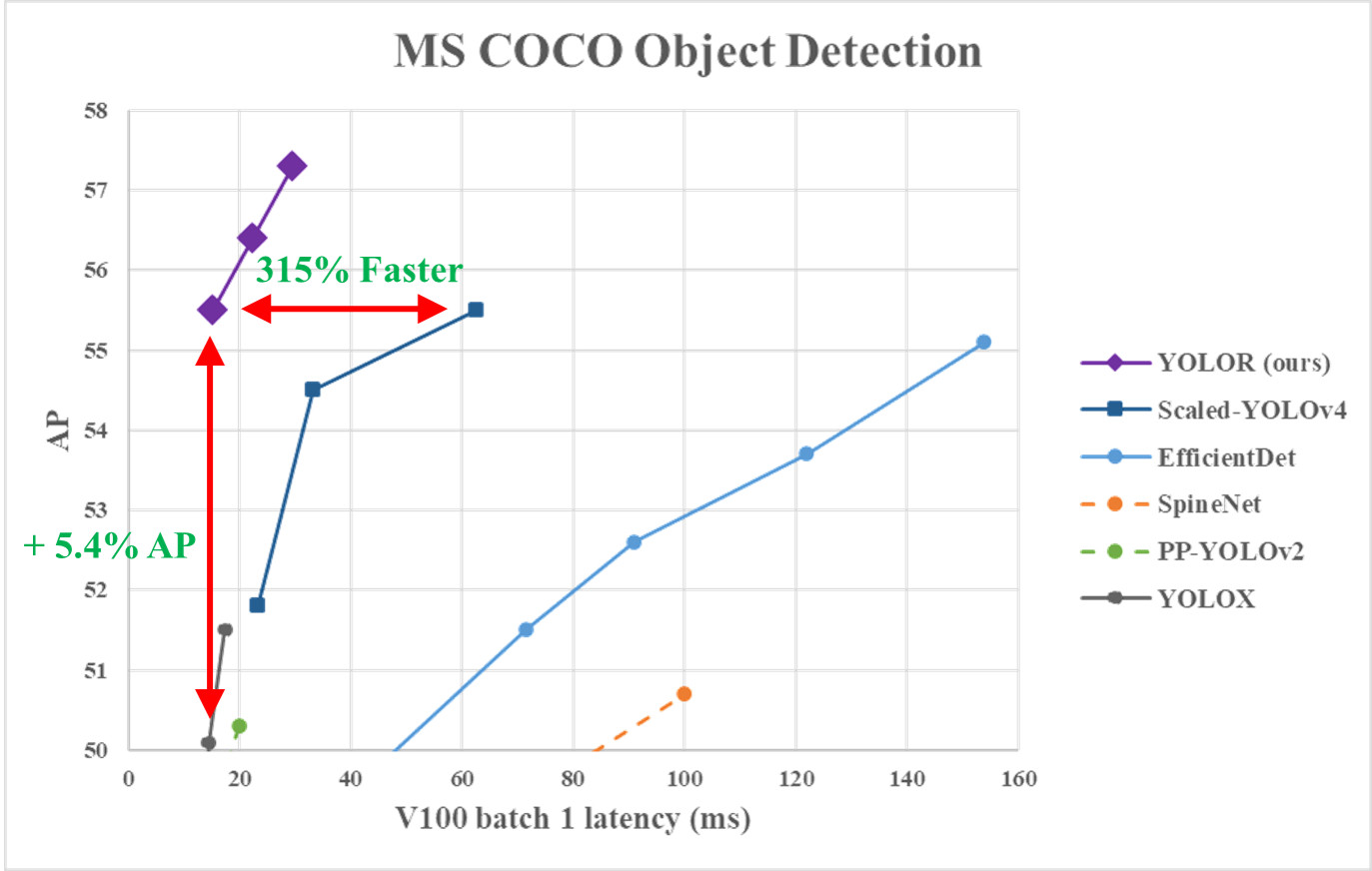

| Model | Test size | AP (test) | AP50 (test) | AP75 (test) | Batch 1 throughput | Batch 32 inference |
|---|---|---|---|---|---|---|
| YOLOR-CSP | 640 | 52.8% | 71.2% | 57.6% | 106 fps | 3.2 ms |
| YOLOR-CSP-X | 640 | 54.8% | 73.1% | 59.7% | 87 fps | 5.5 ms |
| YOLOR-P6 | 1280 | 55.7% | 73.3% | 61.0% | 76 fps | 8.3 ms |
| YOLOR-W6 | 1280 | 56.9% | 74.4% | 62.2% | 66 fps | 10.7 ms |
| YOLOR-E6 | 1280 | 57.6% | 75.2% | 63.0% | 45 fps | 17.1 ms |
| YOLOR-D6 | 1280 | 58.2% | 75.8% | 63.8% | 34 fps | 21.8 ms |
| YOLOv4-P5 | 896 | 51.8% | 70.3% | 56.6% | 41 fps (old) | - |
| YOLOv4-P6 | 1280 | 54.5% | 72.6% | 59.8% | 30 fps (old) | - |
| YOLOv4-P7 | 1536 | 55.5% | 73.4% | 60.8% | 16 fps (old) | - |

| Model | Test size | AP (val) | AP50 (val) | AP75 (val) | APS (val) | APM (val) | APL (val) | Weights |
|---|---|---|---|---|---|---|---|---|
| YOLOv4-CSP | 640 | 49.1% | 67.7% | 53.8% | 32.1% | 54.4% | 63.2% | - |
| YOLOR-CSP | 640 | 49.2% | 67.6% | 53.7% | 32.9% | 54.4% | 63.0% | weights |
| YOLOR-CSP* | 640 | 50.0% | 68.7% | 54.3% | 34.2% | 55.1% | 64.3% | weights |
| YOLOv4-CSP-X | 640 | 50.9% | 69.3% | 55.4% | 35.3% | 55.8% | 64.8% | - |
| YOLOR-CSP-X | 640 | 51.1% | 69.6% | 55.7% | 35.7% | 56.0% | 65.2% | weights |
| YOLOR-CSP-X* | 640 | 51.5% | 69.9% | 56.1% | 35.8% | 56.8% | 66.1% | weights |

Developing...

| Model | Test size | AP (test) | AP50 (test) | AP75 (test) | APS (test) | APM (test) | APL (test) |
|---|---|---|---|---|---|---|---|
| YOLOR-CSP | 640 | 51.1% | 69.6% | 55.7% | 31.7% | 55.3% | 64.7% |
| YOLOR-CSP-X | 640 | 53.0% | 71.4% | 57.9% | 33.7% | 57.1% | 66.8% |

Train from scratch for 300 epochs...

| Model | Info | Test size | AP |
|---|---|---|---|
| YOLOR-CSP | evolution | 640 | 48.0% |
| YOLOR-CSP | strategy | 640 | 50.0% |
| YOLOR-CSP | strategy + SimOTA | 640 | 51.1% |
| YOLOR-CSP-X | strategy | 640 | 51.5% |
| YOLOR-CSP-X | strategy + SimOTA | 640 | 53.0% |

Docker environment (recommended)

# create the docker container; you can increase the shared memory size if you have more available.

nvidia-docker run --name yolor -it -v your_coco_path/:/coco/ -v your_code_path/:/yolor --shm-size=64g nvcr.io/nvidia/pytorch:20.11-py3

# apt install required packages

apt update

apt install -y zip htop screen libgl1-mesa-glx

# pip install required packages

pip install seaborn thop

# install mish-cuda if you want to use mish activation

# https://github.com/thomasbrandon/mish-cuda

# https://github.com/JunnYu/mish-cuda

cd /

git clone https://github.com/JunnYu/mish-cuda

cd mish-cuda

python setup.py build install

# install pytorch_wavelets if you want to use dwt down-sampling module

# https://github.com/fbcotter/pytorch_wavelets

cd /

git clone https://github.com/fbcotter/pytorch_wavelets

cd pytorch_wavelets

pip install .

# go to code folder

cd /yolor

Colab environment

git clone https://github.com/WongKinYiu/yolor

cd yolor

# pip install required packages

pip install -qr requirements.txt

# install mish-cuda if you want to use mish activation

# https://github.com/thomasbrandon/mish-cuda

# https://github.com/JunnYu/mish-cuda

git clone https://github.com/JunnYu/mish-cuda

cd mish-cuda

python setup.py build install

cd ..

# install pytorch_wavelets if you want to use dwt down-sampling module

# https://github.com/fbcotter/pytorch_wavelets

git clone https://github.com/fbcotter/pytorch_wavelets

cd pytorch_wavelets

pip install .

cd ..
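After either setup, it can help to confirm that the packages are importable before starting a long run. The following is a minimal sketch, not part of the repository. The import names (`mish_cuda`, `pytorch_wavelets`, and so on) are assumptions based on each project's usual module name, and `find_missing` is a hypothetical helper.

```python
import importlib.util

# Packages installed by the setup steps. The import names are assumptions
# based on each project's usual module name, not something the README states.
REQUIRED = ["torch", "seaborn", "thop"]
OPTIONAL = ["mish_cuda", "pytorch_wavelets"]  # only needed for Mish / DWT modules


def find_missing(module_names):
    """Return the top-level names in module_names that cannot be located for import."""
    return [name for name in module_names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    print("missing required:", find_missing(REQUIRED) or "none")
    print("missing optional:", find_missing(OPTIONAL) or "none")
```

A missing optional package only matters if the chosen configuration actually uses the Mish activation or the DWT down-sampling module.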

Prepare the COCO dataset

cd /yolor

bash scripts/get_coco.sh

Prepare the pretrained weights

cd /yolor

bash scripts/get_pretrain.sh

yolor_p6.pt

python test.py --data data/coco.yaml --img 1280 --batch 32 --conf 0.001 --iou 0.65 --device 0 --cfg cfg/yolor_p6.cfg --weights yolor_p6.pt --name yolor_p6_val

You will get the results:

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.52510

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.70718

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.57520

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.37058

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.56878

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.66102

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.39181

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.65229

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.71441

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.57755

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.75337

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.84013
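To compare runs, the summary above can be turned into a lookup table. This is a minimal sketch that assumes the output was saved as text in the format shown. `parse_coco_summary` is a hypothetical helper, not a function from this repository.

```python
import re

# Matches one line of the summary shown above, e.g.
# "Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.52510"
SUMMARY_LINE = re.compile(
    r"Average (?:Precision|Recall)\s+\((AP|AR)\)\s+@\[\s*IoU=([\d.:]+)\s*\|"
    r"\s*area=\s*(\w+)\s*\|\s*maxDets=\s*(\d+)\s*\]\s*=\s*(-?[\d.]+)"
)


def parse_coco_summary(text):
    """Map (metric, iou, area, max_dets) -> score for every summary line in text."""
    scores = {}
    for match in SUMMARY_LINE.finditer(text):
        metric, iou, area, max_dets, value = match.groups()
        scores[(metric, iou, area, int(max_dets))] = float(value)
    return scores


if __name__ == "__main__":
    log = """
    Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.52510
    Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.70718
    Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.39181
    """
    scores = parse_coco_summary(log)
    # Headline COCO metric: AP averaged over IoU 0.50:0.95, all object sizes.
    print(scores[("AP", "0.50:0.95", "all", 100)])
```

Keying on the full tuple keeps the twelve lines distinct, since several of them differ only in area or `maxDets`.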

Single GPU training:

python train.py --batch-size 8 --img 1280 1280 --data coco.yaml --cfg cfg/yolor_p6.cfg --weights '' --device 0 --name yolor_p6 --hyp hyp.scratch.1280.yaml --epochs 300

Multiple GPU training:

python -m torch.distributed.launch --nproc_per_node 2 --master_port 9527 train.py --batch-size 16 --img 1280 1280 --data coco.yaml --cfg cfg/yolor_p6.cfg --weights '' --device 0,1 --sync-bn --name yolor_p6 --hyp hyp.scratch.1280.yaml --epochs 300
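The two commands above differ only in the launcher, the batch size, the device list, and `--sync-bn`. The sketch below generates the same argument list for any GPU count. It assumes the 8-images-per-GPU ratio seen in both commands should be kept, which the README does not state as a rule. `build_train_command` is a hypothetical helper.

```python
import shlex


def build_train_command(num_gpus, per_gpu_batch=8, master_port=9527):
    """Return the train.py argument list for num_gpus GPUs.

    per_gpu_batch=8 mirrors the ratio in the README commands
    (8 on one GPU, 16 on two); it is an assumption, not a documented rule.
    """
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")

    devices = ",".join(str(i) for i in range(num_gpus))
    train_args = [
        "train.py",
        "--batch-size", str(per_gpu_batch * num_gpus),
        "--img", "1280", "1280",
        "--data", "coco.yaml",
        "--cfg", "cfg/yolor_p6.cfg",
        "--weights", "",  # empty string: train from scratch
        "--device", devices,
    ]
    if num_gpus > 1:
        # The multi-GPU command in the README adds synchronized batch norm.
        train_args.append("--sync-bn")
    train_args += ["--name", "yolor_p6", "--hyp", "hyp.scratch.1280.yaml", "--epochs", "300"]

    if num_gpus == 1:
        return ["python"] + train_args
    launcher = [
        "python", "-m", "torch.distributed.launch",
        "--nproc_per_node", str(num_gpus),
        "--master_port", str(master_port),
    ]
    return launcher + train_args


if __name__ == "__main__":
    # shlex.join quotes the empty --weights value so the line can be pasted into a shell.
    print(shlex.join(build_train_command(2)))
```

Returning a list rather than a string means the result can also be passed directly to `subprocess.run` without shell quoting issues.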

Training schedule in the paper:

python -m torch.distributed.launch --nproc_per_node 8 --master_port 9527 train.py --batch-size 64 --img 1280 1280 --data data/coco.yaml --cfg cfg/yolor_p6.cfg --weights '' --device 0,1,2,3,4,5,6,7 --sync-bn --name yolor_p6 --hyp hyp.scratch.1280.yaml --epochs 300

python -m torch.distributed.launch --nproc_per_node 8 --master_port 9527 tune.py --batch-size 64 --img 1280 1280 --data data/coco.yaml --cfg cfg/yolor_p6.cfg --weights 'runs/train/yolor_p6/weights/last_298.pt' --device 0,1,2,3,4,5,6,7 --sync-bn --name yolor_p6-tune --hyp hyp.finetune.1280.yaml --epochs 450

python -m torch.distributed.launch --nproc_per_node 8 --master_port 9527 train.py --batch-size 64 --img 1280 1280 --data data/coco.yaml --cfg cfg/yolor_p6.cfg --weights 'runs/train/yolor_p6-tune/weights/epoch_424.pt' --device 0,1,2,3,4,5,6,7 --sync-bn --name yolor_p6-fine --hyp hyp.finetune.1280.yaml --epochs 450
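The three commands form a chain: each stage after the first loads a checkpoint written by the stage before it. The sketch below records the stages as data and checks that chaining. The `runs/train/<name>/weights/` layout is inferred from the `--weights` paths in the commands, and `Stage` and `check_chain` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    script: str   # entry point passed to torch.distributed.launch
    name: str     # value of --name
    weights: str  # value of --weights ('' means train from scratch)
    hyp: str      # value of --hyp
    epochs: int   # value of --epochs


# Values copied from the three commands above.
SCHEDULE = [
    Stage("train.py", "yolor_p6", "", "hyp.scratch.1280.yaml", 300),
    Stage("tune.py", "yolor_p6-tune",
          "runs/train/yolor_p6/weights/last_298.pt", "hyp.finetune.1280.yaml", 450),
    Stage("train.py", "yolor_p6-fine",
          "runs/train/yolor_p6-tune/weights/epoch_424.pt", "hyp.finetune.1280.yaml", 450),
]


def check_chain(stages):
    """Verify that every stage after the first loads weights saved by the stage before it."""
    for previous, current in zip(stages, stages[1:]):
        # Assumed layout, inferred from the --weights paths in the commands.
        expected_prefix = f"runs/train/{previous.name}/weights/"
        if not current.weights.startswith(expected_prefix):
            raise ValueError(f"{current.name} does not start from {previous.name}")
    return True


if __name__ == "__main__":
    print(check_chain(SCHEDULE))
```

A check like this catches a mistyped run name before an eight-GPU job is launched against a checkpoint that does not exist.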

yolor_p6.pt

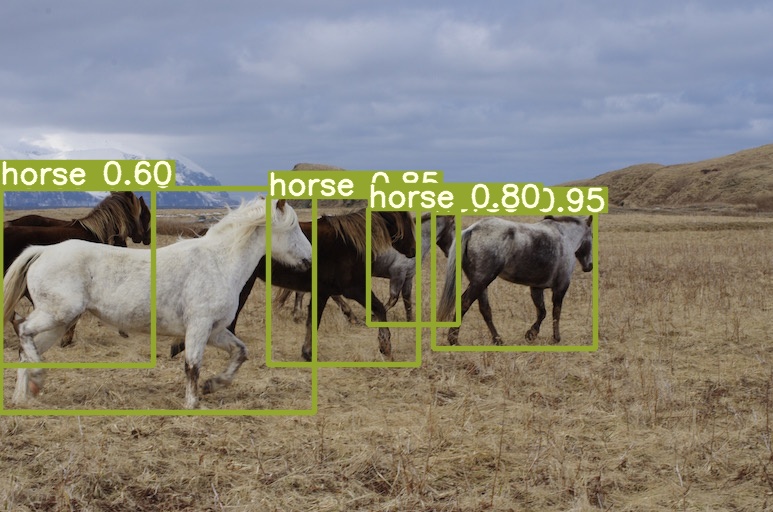

python detect.py --source inference/images/horses.jpg --cfg cfg/yolor_p6.cfg --weights yolor_p6.pt --conf 0.25 --img-size 1280 --device 0

You will get the results:

@article{wang2023you,

title={You Only Learn One Representation: Unified Network for Multiple Tasks},

author={Wang, Chien-Yao and Yeh, I-Hau and Liao, Hong-Yuan Mark},

journal={Journal of Information Science and Engineering},

year={2023}

}