TuoTuo is a topic modeling library written in Python. TuoTuo is also a cute boy, my son, who is now 6 months old.

Use the package manager pip to install TuoTuo. The distribution is also available on PyPI.

    pip install TuoTuo --upgrade

Currently, the library only supports topic modeling via Latent Dirichlet Allocation (LDA). LDA can be fit with either Gibbs sampling or variational inference; we choose the latter because it is mathematically more sophisticated.
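For readers new to LDA, the generative story the model assumes can be sketched in a few lines. This is a minimal standard-library illustration, not TuoTuo's implementation: the helper names `sample_dirichlet` and `generate_document` and the toy topic-word table are hypothetical and exist only for this example.

```python
import random


def sample_dirichlet(alpha, rng):
    """Draw one sample from Dirichlet(alpha) by normalizing Gamma draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [d / total for d in draws]


def generate_document(topic_prior, topic_word_probs, length, rng):
    """Generate one document (a list of word ids) under the LDA generative story."""
    # Per-document topic mixture, drawn from the Dirichlet topic prior.
    theta = sample_dirichlet(topic_prior, rng)
    topic_ids = range(len(topic_prior))
    doc = []
    for _ in range(length):
        # Pick a topic for this word position, then a word from that topic.
        z = rng.choices(topic_ids, weights=theta)[0]
        word_ids = range(len(topic_word_probs[z]))
        w = rng.choices(word_ids, weights=topic_word_probs[z])[0]
        doc.append(w)
    return doc


rng = random.Random(0)

# Hypothetical toy setup: 2 topics over a 4-word vocabulary.
toy_topic_word_probs = [
    [0.5, 0.5, 0.0, 0.0],  # topic 0 only emits words 0 and 1
    [0.0, 0.0, 0.5, 0.5],  # topic 1 only emits words 2 and 3
]
toy_docs = [
    generate_document([1.0, 1.0], toy_topic_word_probs, 20, rng)
    for _ in range(100)
]
```

Fitting LDA runs this story in reverse: given only the documents, it infers the per-document topic mixtures and the per-topic word distributions.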

import torch as tr

from tuotuo.generator import doc_generator

gen = doc_generator(

M = 100,

# we sample 100 documents

L = 20,

# each document would contain 20 pre-defined words

topic_prior = tr.tensor([1,1,1,1,1], dtype=tr.double)

# we use a exchangable Dirichlet Distribution as our topic prior,

# that is a uniform distribution on 5 topics

)

train_docs = gen.generate_doc()from tuotuo.lda_model import LDASmoothed

import matplotlib.pyplot as plt

lda = LDASmoothed(

num_topics = 5,

)

perplexes = lda.fit(

train_docs,

sampling= False,

verbose=True,

return_perplexities=True,

)

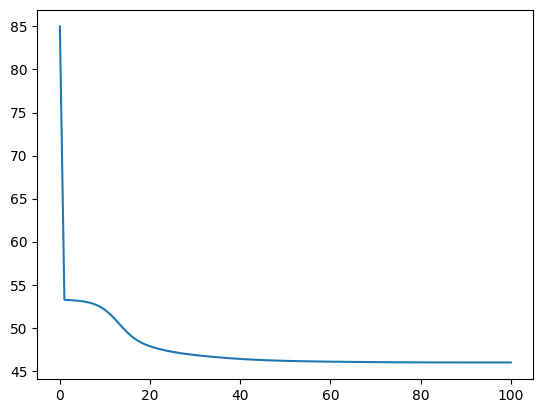

plt.plot(perplexes)

    =>=>=>=>=>=>=>=>
    Topic Dirichlet Prior, Alpha
    1
    Exchangeable Word Dirichlet Prior, Eta
    1
    Var Inf - Word Dirichlet prior, Lambda
    (5, 40)
    Var Inf - Topic Dirichlet prior, Gamma
    (100, 5)
    Init perplexity = 84.99592157507153
    End perplexity = 45.96696541539976
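To help read the perplexity numbers, here is a minimal sketch of the usual definition: the exponential of the negative average per-word log-likelihood. It assumes per-document log-likelihoods (or a variational lower bound on them) are already available; the function name and inputs are hypothetical, and TuoTuo's internal computation may differ.

```python
import math


def perplexity(doc_log_likelihoods, doc_lengths):
    """exp(-(total log-likelihood) / (total number of words))."""
    total_log_lik = sum(doc_log_likelihoods)
    total_words = sum(doc_lengths)
    return math.exp(-total_log_lik / total_words)


# Sanity check: a model that assigns every word probability 1/40
# (uniform over a 40-word vocabulary) has a perplexity of about 40.
uniform_log_liks = [20 * math.log(1 / 40)] * 100  # 100 documents of 20 words
uniform_perplexity = perplexity(uniform_log_liks, [20] * 100)
```

Lower is better: a drop in perplexity during training means the model assigns higher probability to the observed words.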

    import numpy as np

    for topic_index in range(lda._lambda_.shape[0]):
        # argsort is ascending, so the last 5 indices are the 5 highest-weighted words
        top5 = np.argsort(lda._lambda_[topic_index, :])[-5:]
        print(f"Topic {topic_index}")
        for i, idx in enumerate(top5):
            print(f"Top {i+1} -> {lda.train_doc.idx_to_vocab[idx]}")
        print()

    =>=>=>=>=>=>=>=>
    Topic 0
    Top 1 -> physical
    Top 2 -> quantum
    Top 3 -> research
    Top 4 -> scientst
    Top 5 -> astrophysics

    Topic 1
    Top 1 -> divorce
    Top 2 -> attorney
    Top 3 -> court
    Top 4 -> bankrupt
    Top 5 -> contract

    Topic 2
    Top 1 -> content
    Top 2 -> Craftsmanship
    Top 3 -> concert
    Top 4 -> asymmetrical
    Top 5 -> Symmetrical

    Topic 3
    Top 1 -> recreation
    Top 2 -> FIFA
    Top 3 -> football
    Top 4 -> Olympic
    Top 5 -> athletics

    Topic 4
    Top 1 -> fever
    Top 2 -> appetite
    Top 3 -> contagious
    Top 4 -> decongestant
    Top 5 -> injection

From the top 5 words of each topic, we can readily infer the following mapping:

Topic 0 -> science

Topic 1 -> law

Topic 2 -> art

Topic 3 -> sport

Topic 4 -> health
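If word probabilities are wanted in addition to the top words, one option is to normalize each row of Lambda. The sketch below assumes each row holds the parameters of a Dirichlet over the vocabulary, whose mean is the row divided by its sum. The `top_words` helper and the toy data are hypothetical and use plain Python lists instead of the library's tensors.

```python
def top_words(topic_weights, idx_to_vocab, k=5):
    """Return the k highest-weighted words of one topic with their mean probabilities."""
    total = sum(topic_weights)
    # Rank word indices from highest weight down.
    ranked = sorted(
        range(len(topic_weights)),
        key=lambda i: topic_weights[i],
        reverse=True,
    )
    return [(idx_to_vocab[i], topic_weights[i] / total) for i in ranked[:k]]


# Hypothetical toy data: one topic over a 4-word vocabulary.
toy_vocab = {0: "court", 1: "football", 2: "attorney", 3: "fever"}
toy_lambda_row = [6.0, 1.0, 2.0, 1.0]

toy_top2 = top_words(toy_lambda_row, toy_vocab, k=2)
```

Here `toy_top2` lists "court" first and "attorney" second, each paired with its share of the row's total weight.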

Pull requests are welcome. For major changes, please open an issue first to discuss what you would like to change.

As there is no mature topic modeling library available, we are also looking for collaborators who would like to contribute in the following directions:

Most of the work for this part is complete, but we still need to work on:

Extend the library to support neural variational inference, following this ICML paper: Neural Variational Inference for Text Processing

Extend the training to support reinforcement learning, following this ACL paper: Neural Topic Model with Reinforcement Learning

MIT