The images are compressed for loading speed.

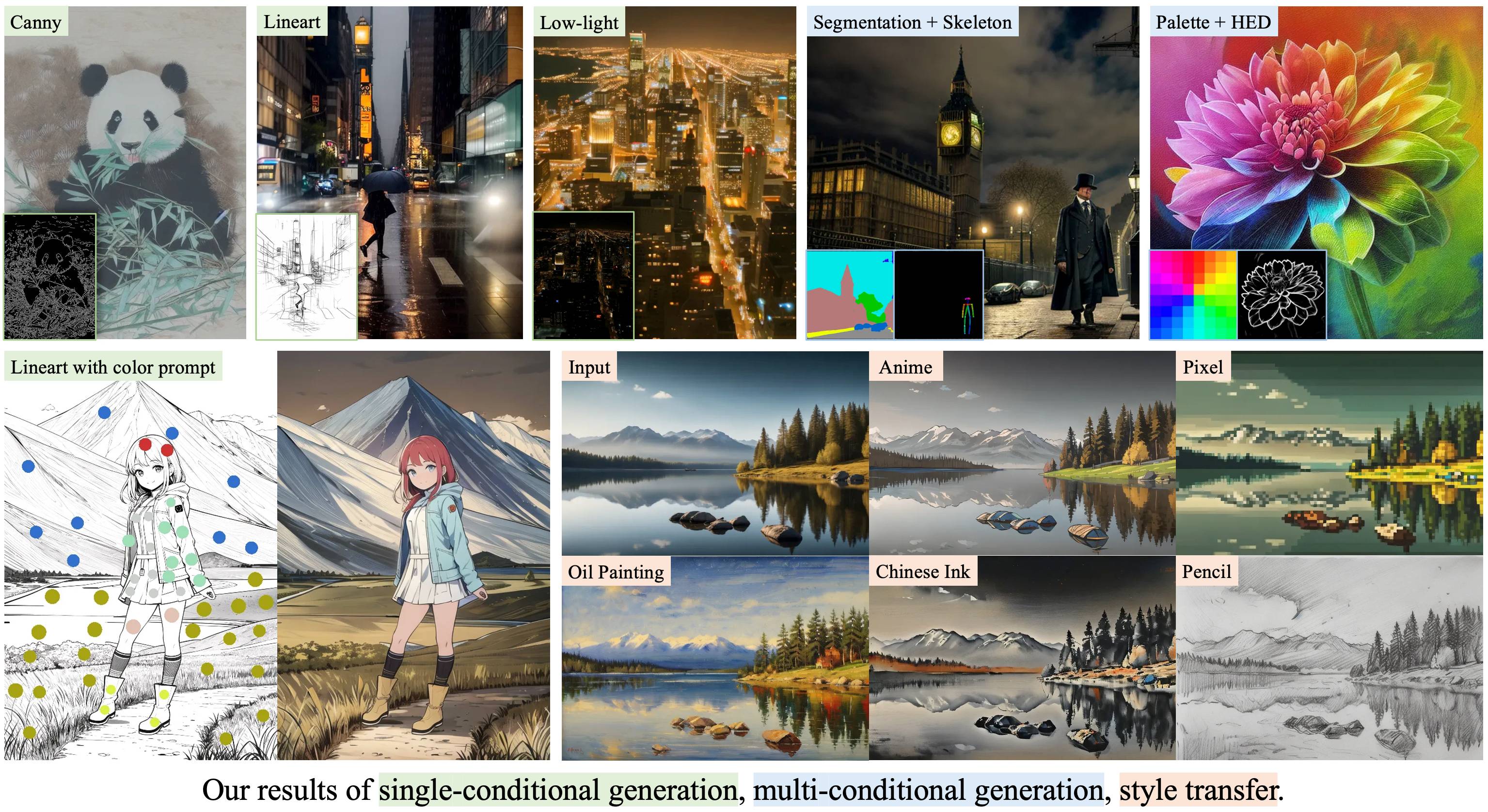

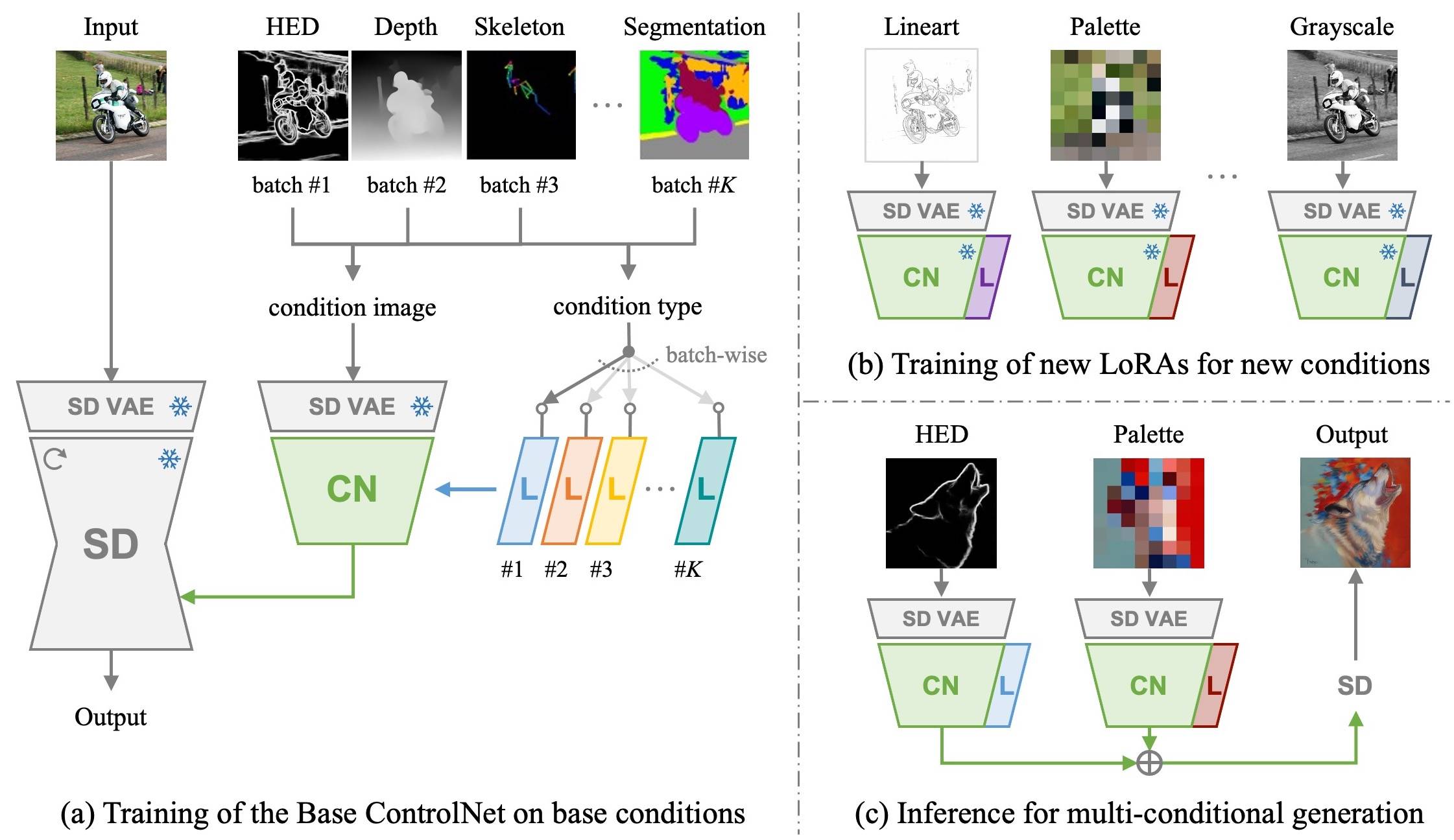

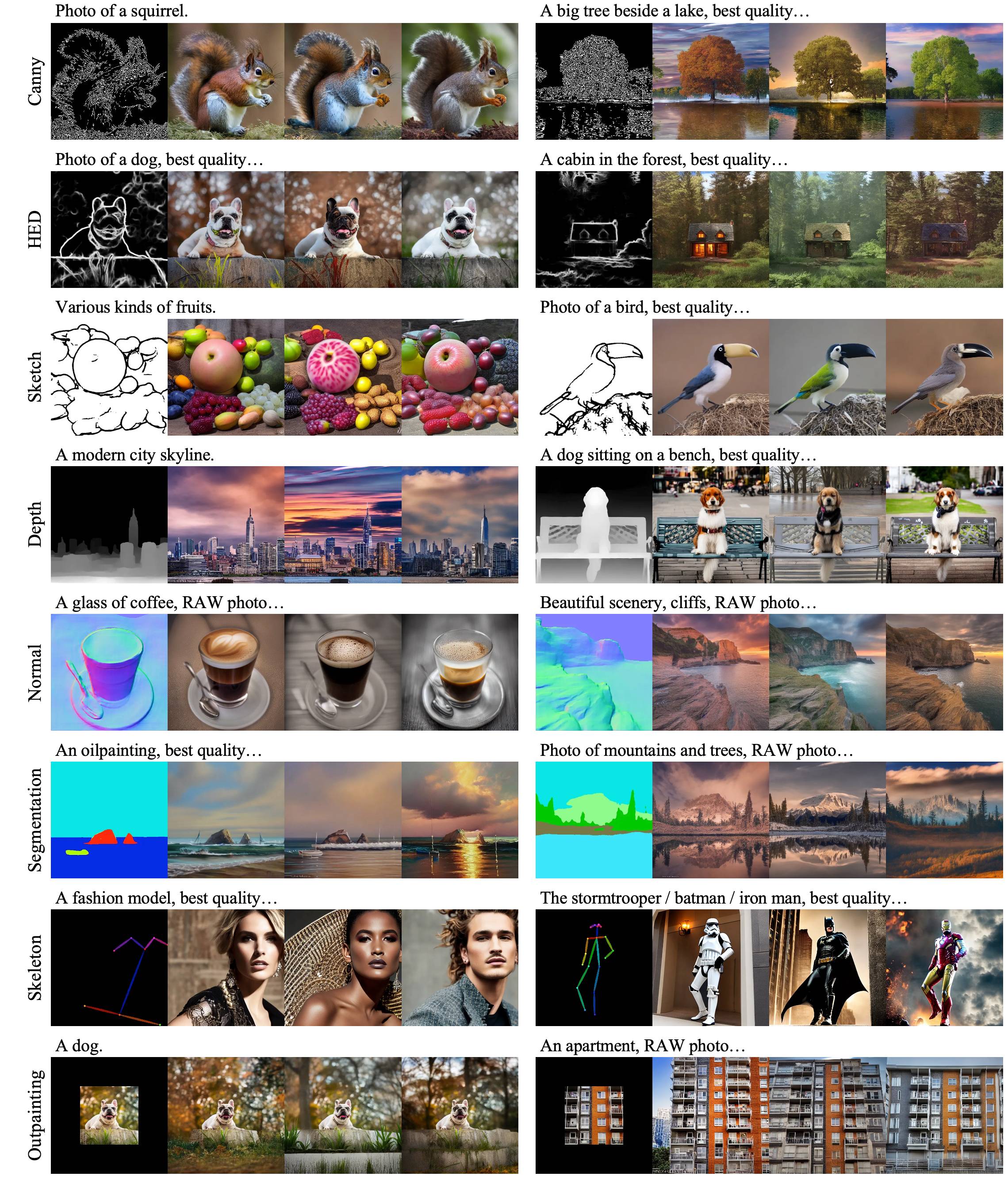

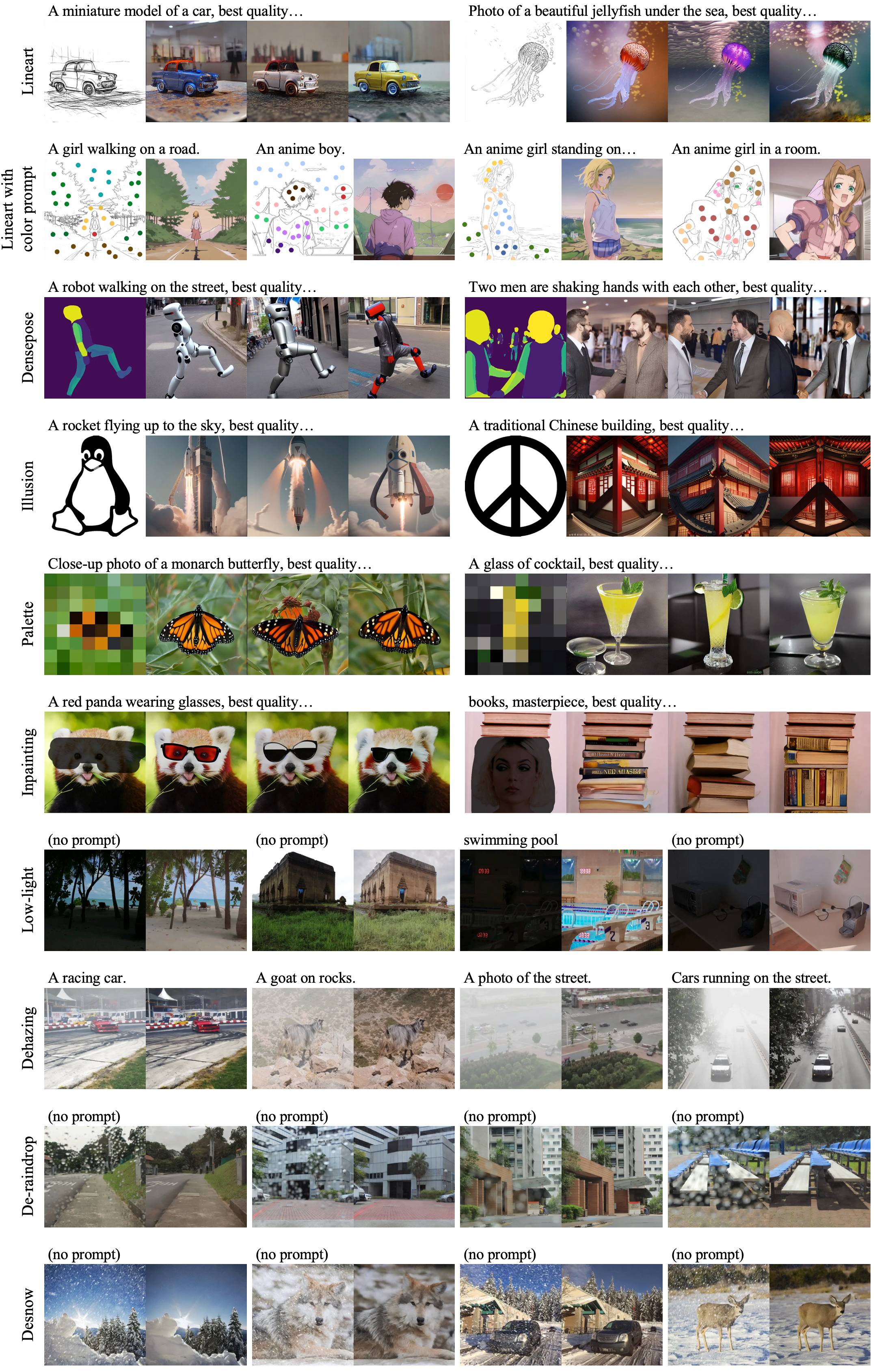

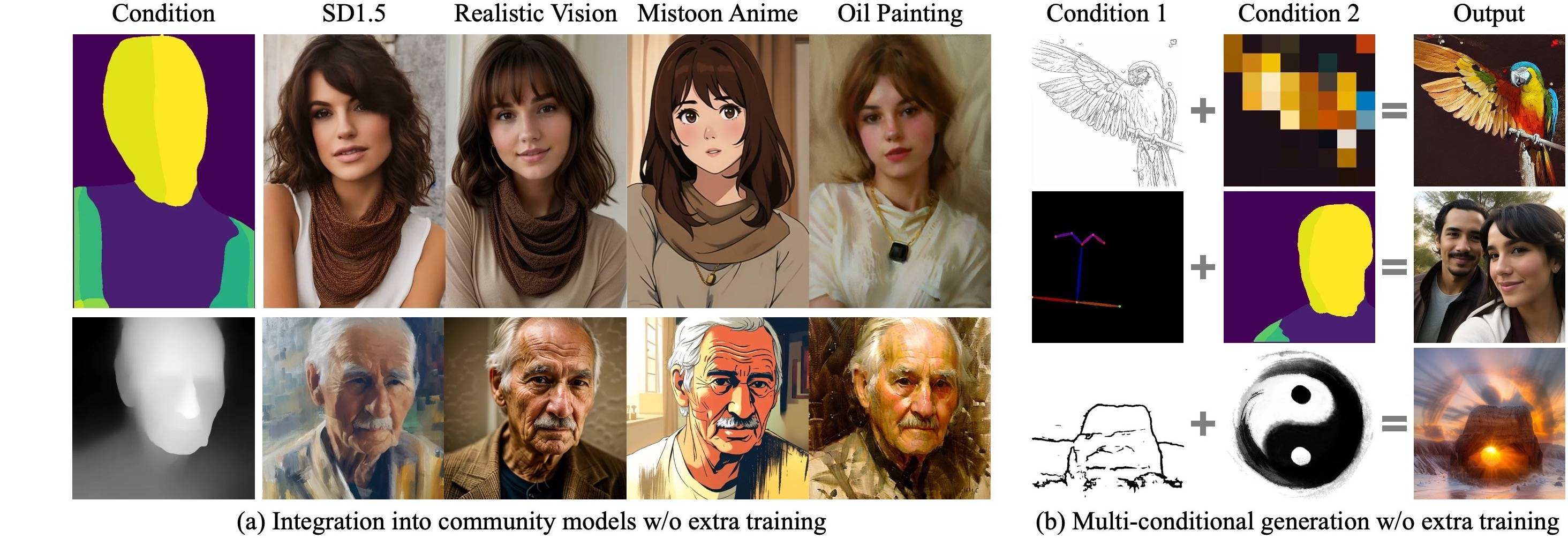

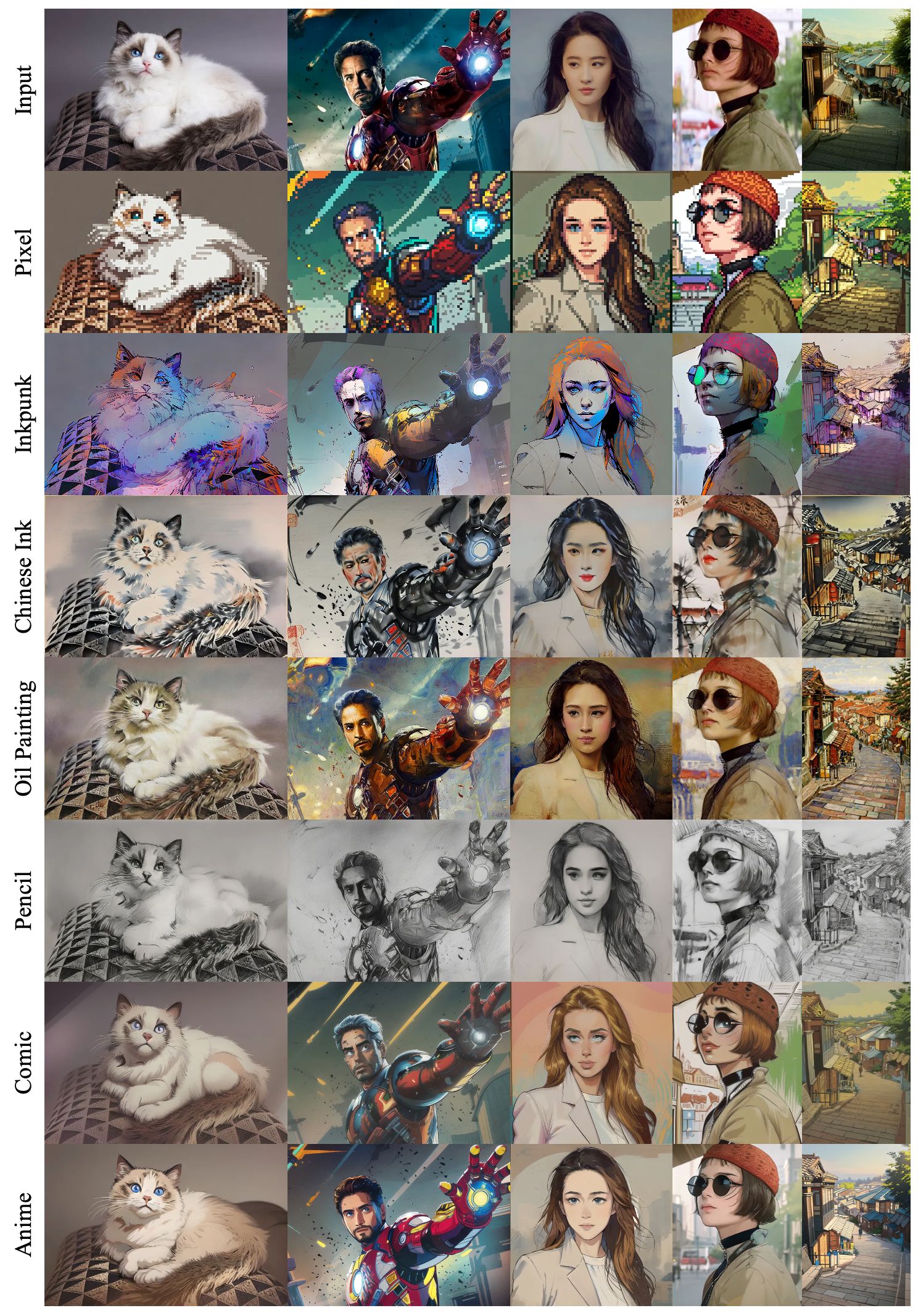

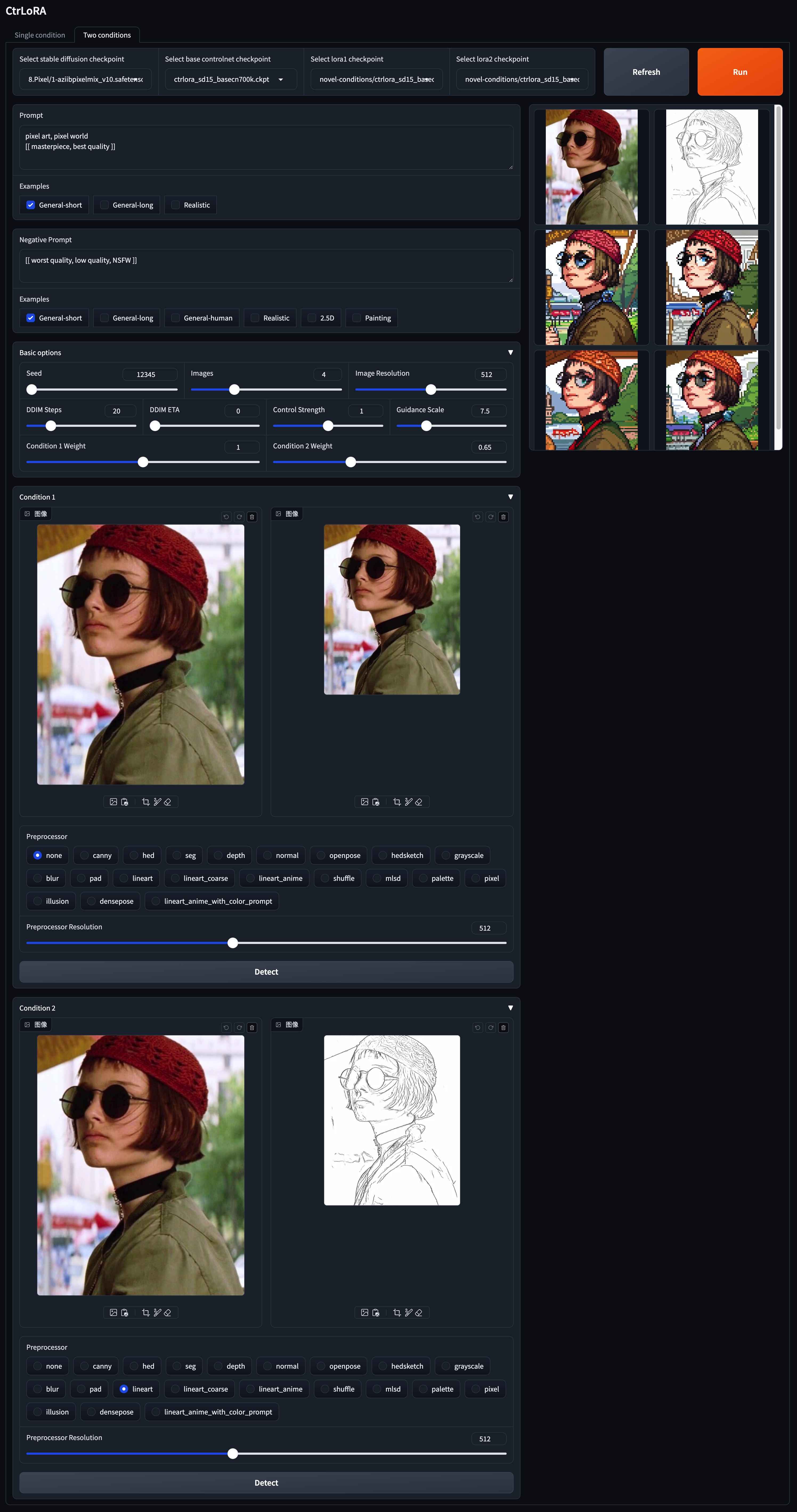

CtrLoRA: An Extensible and Efficient Framework for Controllable Image Generation

Yifeng Xu1,2, Zhenliang He1, Shiguang Shan1,2, Xilin Chen1,2

1Key Lab of AI Safety, Institute of Computing Technology, CAS, China

2University of Chinese Academy of Sciences, China

We first train a Base ControlNet along with condition-specific LoRAs on base conditions with a large-scale dataset. Then, our Base ControlNet can be efficiently adapted to novel conditions by new LoRAs with as few as 1,000 images and less than 1 hour on a single GPU.


Clone this repo:

    git clone --depth 1 https://github.com/xyfJASON/ctrlora.git
    cd ctrlora

Create and activate a new conda environment:

    conda create -n ctrlora python=3.10
    conda activate ctrlora

Install PyTorch and other dependencies:

    pip install torch==1.13.1+cu117 torchvision==0.14.1+cu117 torchaudio==0.13.1 --extra-index-url https://download.pytorch.org/whl/cu117
    pip install -r requirements.txt

We provide our pretrained models here. Please put the Base ControlNet (`ctrlora_sd15_basecn700k.ckpt`) into `./ckpts/ctrlora-basecn` and the LoRAs into `./ckpts/ctrlora-loras`.

The LoRAs are named `ctrlora_sd15_<basecn>_<condition>.ckpt` for base conditions and `ctrlora_sd15_<basecn>_<condition>_<images>_<steps>.ckpt` for novel conditions.
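The naming convention above can be turned into a small parser, for example to list which LoRAs in a folder belong to base or novel conditions. This is a minimal sketch and not part of the repository: `parse_lora_name` is a hypothetical helper, the example file names are made up, and it assumes that no field contains an underscore or a dot.

```python
import re
from typing import Optional

# Pattern derived from the naming convention above.
# Assumption: <basecn>, <condition>, <images>, and <steps> contain
# neither "_" nor ".".
_LORA_NAME = re.compile(
    r"^ctrlora_sd15_(?P<basecn>[^_.]+)_(?P<condition>[^_.]+)"
    r"(?:_(?P<images>[^_.]+)_(?P<steps>[^_.]+))?\.ckpt$"
)


def parse_lora_name(filename: str) -> Optional[dict]:
    """Split a LoRA file name into its fields, or return None if it does not match."""
    match = _LORA_NAME.match(filename)
    if match is None:
        return None
    info = match.groupdict()
    # Only LoRAs for novel conditions carry the <images> and <steps> fields.
    info["novel"] = info["images"] is not None
    return info


if __name__ == "__main__":
    # Hypothetical file names, used only to illustrate the two patterns.
    for name in (
        "ctrlora_sd15_basecn700k_canny.ckpt",
        "ctrlora_sd15_basecn700k_lineart_1000_5000.ckpt",
    ):
        print(name, "->", parse_lora_name(name))
```

If the released condition names do contain underscores, the pattern would need to be adjusted to match them.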

You also need to download the SD1.5-based models and put them into `./ckpts/sd15`. Models used in our work:

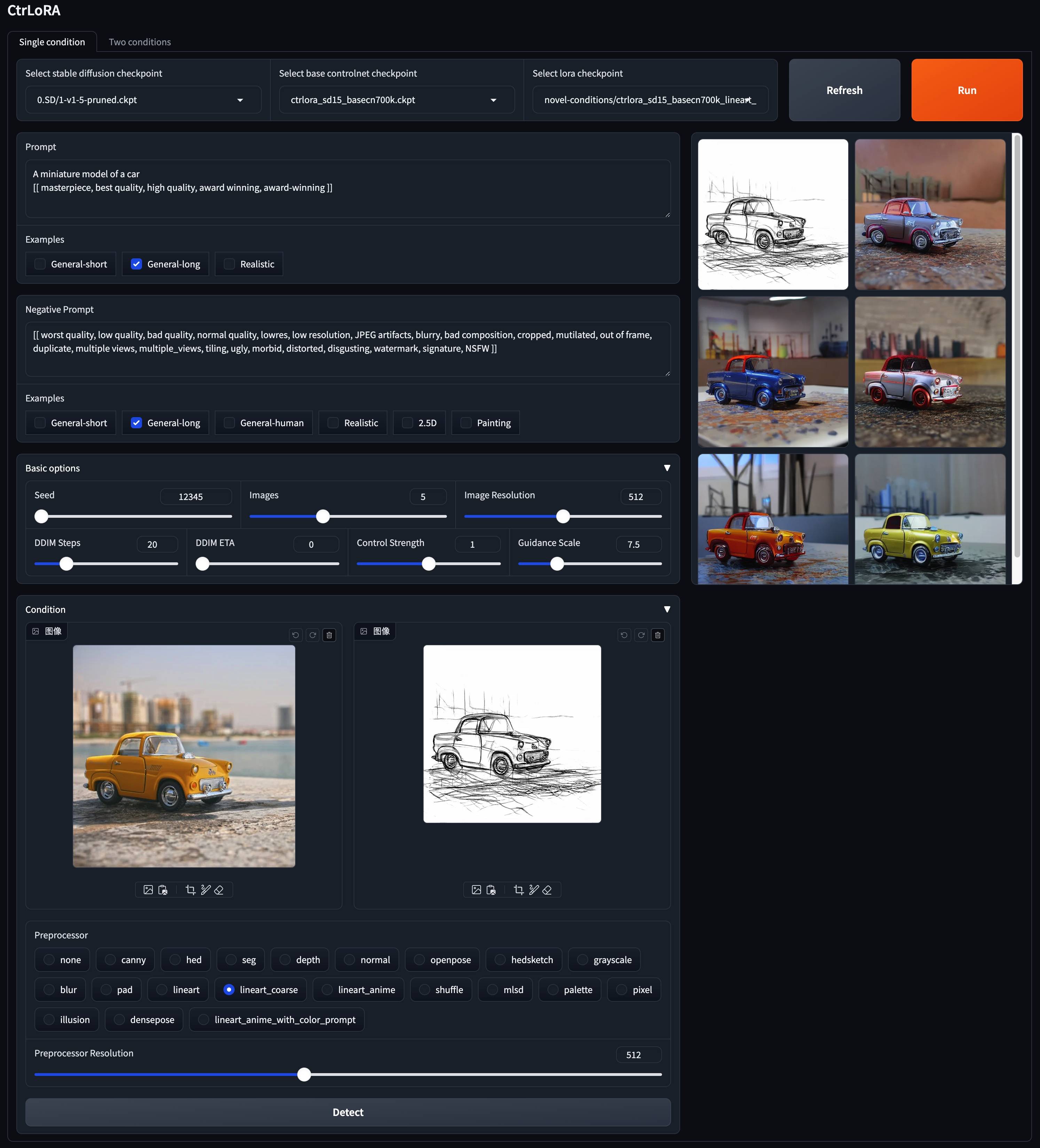

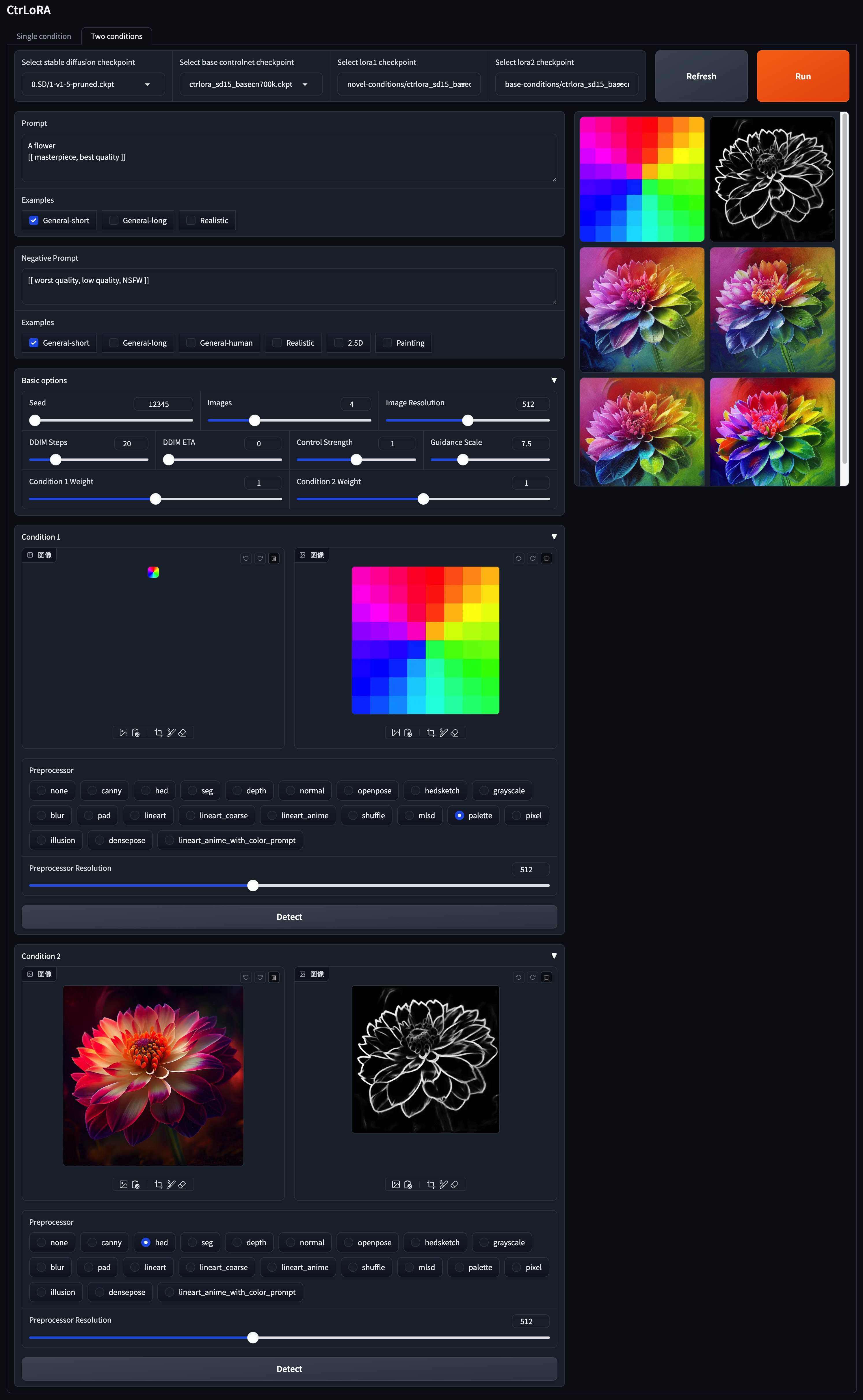

- Stable Diffusion v1.5 (`v1-5-pruned.ckpt`): official / mirror

Run the Gradio demo:

    python app/gradio_ctrlora.py

Generating a batch of one 512x512 image requires at least 9GB of GPU RAM; a batch of four requires at least 21GB.
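If the demo cannot find a model, the checkpoint layout described above can be checked with a short script. This is a minimal sketch, not part of the repository: `missing_checkpoints` is a hypothetical helper, and it assumes that any regular file in `./ckpts/sd15` counts as an SD1.5-based model and that LoRA files keep the `ctrlora_sd15_` prefix.

```python
from pathlib import Path


def missing_checkpoints(root: str = "./ckpts") -> list[str]:
    """Return human-readable problems with the checkpoint layout under root."""
    root_dir = Path(root)
    problems = []

    # The Base ControlNet file name is taken from the instructions above.
    basecn = root_dir / "ctrlora-basecn" / "ctrlora_sd15_basecn700k.ckpt"
    if not basecn.is_file():
        problems.append(f"missing Base ControlNet: {basecn}")

    # Assumption: any regular file in sd15/ counts as an SD1.5-based model.
    sd_dir = root_dir / "sd15"
    if not (sd_dir.is_dir() and any(p.is_file() for p in sd_dir.iterdir())):
        problems.append(f"no SD1.5-based model found in: {sd_dir}")

    # Assumption: LoRA files keep the "ctrlora_sd15_" prefix and ".ckpt" suffix.
    lora_dir = root_dir / "ctrlora-loras"
    if not (lora_dir.is_dir() and any(lora_dir.glob("ctrlora_sd15_*.ckpt"))):
        problems.append(f"no LoRA checkpoint found in: {lora_dir}")

    return problems


if __name__ == "__main__":
    for problem in missing_checkpoints():
        print(problem)
```

An empty result means all three folders contain what the instructions ask for; it does not verify that the files themselves are valid checkpoints.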

Based on our Base ControlNet, you can train a LoRA for your custom condition with as few as 1,000 images and less than 1 hour on a single GPU (20GB).

First, download the Stable Diffusion v1.5 (v1-5-pruned.ckpt) into ./ckpts/sd15 and the Base ControlNet (ctrlora_sd15_basecn700k.ckpt) into ./ckpts/ctrlora-basecn as described above.

Second, put your custom data into `./data/<custom_data_name>` with the following structure:

    data
    └── custom_data_name
        ├── prompt.json
        ├── source
        │   ├── 0000.jpg
        │   ├── 0001.jpg
        │   └── ...
        └── target
            ├── 0000.jpg
            ├── 0001.jpg
            └── ...

- `source` contains condition images, such as canny edges, segmentation maps, depth images, etc.
- `target` contains ground-truth images corresponding to the condition images.
- `prompt.json` should follow a format like `{"source": "source/0000.jpg", "target": "target/0000.jpg", "prompt": "The quick brown fox jumps over the lazy dog."}`.

Third, run the following command to train the LoRA for your custom condition:

    python scripts/train_ctrlora_finetune.py \
        --dataroot ./data/<custom_data_name> \
        --config ./configs/ctrlora_finetune_sd15_rank128.yaml \
        --sd_ckpt ./ckpts/sd15/v1-5-pruned.ckpt \
        --cn_ckpt ./ckpts/ctrlora-basecn/ctrlora_sd15_basecn700k.ckpt \
        [--name NAME] \
        [--max_steps MAX_STEPS]

- `--dataroot`: path to the custom data.
- `--name`: name of the experiment. The logging directory will be `./runs/name`. Default: current time.
- `--max_steps`: maximum number of training steps. Default: 100000.

After training, extract the LoRA weights with the following command:

    python scripts/tool_extract_weights.py -t lora --ckpt CHECKPOINT --save_path SAVE_PATH

- `--ckpt`: path to the checkpoint produced by the above training.
- `--save_path`: path to save the extracted LoRA weights.

Finally, put the extracted LoRA into `./ckpts/ctrlora-loras` and use it in the Gradio demo.
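Before training, it may help to check the custom dataset against the layout from the second step. The sketch below is not part of the repository: `check_dataset` is a hypothetical helper, and it assumes that `prompt.json` holds one JSON object per line, which the example entry suggests but does not confirm.

```python
import json
from pathlib import Path


def check_dataset(dataroot: str) -> list[str]:
    """Return a list of problems found in a custom dataset folder."""
    root = Path(dataroot)
    prompt_file = root / "prompt.json"
    if not prompt_file.is_file():
        return [f"missing {prompt_file}"]

    problems = []
    with prompt_file.open(encoding="utf-8") as f:
        # Assumption: one JSON object per line; blank lines are skipped.
        for lineno, line in enumerate(f, start=1):
            line = line.strip()
            if not line:
                continue
            try:
                entry = json.loads(line)
            except json.JSONDecodeError as exc:
                problems.append(f"line {lineno}: invalid JSON ({exc.msg})")
                continue
            if not isinstance(entry, dict):
                problems.append(f"line {lineno}: entry is not a JSON object")
                continue
            # "source" and "target" are paths relative to the dataset root.
            for key in ("source", "target"):
                rel = entry.get(key)
                if not isinstance(rel, str) or not (root / rel).is_file():
                    problems.append(f"line {lineno}: {key} image not found: {rel!r}")
            if not isinstance(entry.get("prompt"), str):
                problems.append(f"line {lineno}: missing or non-string prompt")
    return problems
```

Calling `check_dataset("./data/<custom_data_name>")` with the real folder name returns an empty list when every entry points at existing images and has a prompt.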

Please refer to the instructions here for more details of training, fine-tuning, and evaluation.

This project is built upon Stable Diffusion, ControlNet, and UniControl. Thanks for their great work!

If you find this project helpful, please consider citing:

    @article{xu2024ctrlora,
      title={CtrLoRA: An Extensible and Efficient Framework for Controllable Image Generation},
      author={Xu, Yifeng and He, Zhenliang and Shan, Shiguang and Chen, Xilin},
      journal={arXiv preprint arXiv:2410.09400},
      year={2024}
    }