
This project is a PyTorch-based speech synthesis project built on VITS (Variational Inference with adversarial learning for end-to-end Text-to-Speech). Because VITS is an end-to-end model, it is simple to use and does not require complex steps such as text alignment. Training and synthesis each take a single command, which greatly lowers the barrier to entry.

You are welcome to scan the QR code to join the Knowledge Planet or the QQ group for discussion. Knowledge Planet provides this project's model files, the model files of the author's other related projects, and other resources.

| Dataset | Language (dialect) | Number of speakers | Speaker names | Download address |
|---|---|---|---|---|
| BZNSYP | Mandarin | 1 | Standard female voice | Click to download |
| Cantonese dataset | Cantonese | 10 | Male voice 1, Female voice 1, ··· | Click to download |

conda install pytorch==1.13.1 torchvision==0.14.1 torchaudio==0.13.1 pytorch-cuda=11.6 -c pytorch -c nvidia

To install the project with pip, run:

python -m pip install mvits -U -i https://pypi.tuna.tsinghua.edu.cn/simple

Installing from source is recommended, since it ensures you are using the latest code:

git clone https://github.com/yeyupiaoling/VITS-Pytorch.git

cd VITS-Pytorch/

pip install .

The project can directly generate data lists for BZNSYP and AiShell3. Taking BZNSYP as an example: download BZNSYP into the `dataset` directory and extract it, then run `create_list.py` to generate a data list in which each line has the format `<audio path>|<speaker name>|<transcript>`. Note that the transcript must be marked with its language; Mandarin text, for example, must be wrapped in `[ZH]`. The other supported tags are Japanese `[JA]`, English `[EN]`, and Korean `[KO]`. A custom dataset can be used by producing a list in the same format.

The project provides two text cleaners, `cjke_cleaners2` and `chinese_dialect_cleaners`, which support different sets of languages. Select one with `dataset_conf.text_cleaner`. `cjke_cleaners2` supports `{"普通话": "[ZH]", "日本語": "[JA]", "English": "[EN]", "한국어": "[KO]"}`, while `chinese_dialect_cleaners` supports `{"普通话": "[ZH]", "日本語": "[JA]", "English": "[EN]", "粤语": "[GD]", "上海话": "[SH]", "苏州话": "[SZ]", "无锡话": "[WX]", "常州话": "[CZ]", "杭州话": "[HZ]", ·····}`. For more languages, see `LANGUAGE_MARKS` in the source code. An example data list for BZNSYP:

dataset/BZNSYP/Wave/000001.wav|标准女声|[ZH]卡尔普陪外孙玩滑梯。[ZH]

dataset/BZNSYP/Wave/000002.wav|标准女声|[ZH]假语村言别再拥抱我。[ZH]

dataset/BZNSYP/Wave/000003.wav|标准女声|[ZH]宝马配挂跛骡鞍,貂蝉怨枕董翁榻。[ZH]
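For a custom dataset, a line in this format could be assembled along the following lines. This is only a minimal sketch: `make_entry` and `LANGUAGE_TAGS` are hypothetical names introduced for illustration (they are not part of the project), and the tag table is simply copied from the `cjke_cleaners2` mapping quoted above.

```python
# Hypothetical helper, not part of the project: builds one data-list line.
# The tag table is copied from the cjke_cleaners2 mapping quoted in this README.
LANGUAGE_TAGS = {"普通话": "[ZH]", "日本語": "[JA]", "English": "[EN]", "한국어": "[KO]"}


def make_entry(audio_path: str, speaker: str, text: str, language: str) -> str:
    """Return '<audio path>|<speaker name>|<tag>text<tag>' for one utterance."""
    tag = LANGUAGE_TAGS[language]  # raises KeyError for an unsupported language
    for field in (audio_path, speaker, text):
        if "|" in field:
            # '|' is the field separator, so it cannot appear inside a field.
            raise ValueError("fields must not contain the '|' separator")
    return f"{audio_path}|{speaker}|{tag}{text}{tag}"


line = make_entry(
    "dataset/BZNSYP/Wave/000001.wav", "标准女声", "卡尔普陪外孙玩滑梯。", "普通话"
)
print(line)
```

Writing one such line per audio file to a text file gives a list with the same shape as the BZNSYP example.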

Once you have the data list, generate the phoneme data list by running `preprocess_data.py --train_data_list=dataset/bznsyp.txt`. After that, the data is ready. The phoneme data list looks like this:

dataset/BZNSYP/Wave/000001.wav|0|kʰa↓↑əɹ`↓↑pʰu↓↑ pʰeɪ↑ waɪ↓swən→ wan↑ xwa↑tʰi→.

dataset/BZNSYP/Wave/000002.wav|0|tʃ⁼ja↓↑ɥ↓↑ tsʰwən→jɛn↑p⁼iɛ↑ ts⁼aɪ↓ jʊŋ→p⁼ɑʊ↓ wo↓↑.

dataset/BZNSYP/Wave/000003.wav|0|p⁼ɑʊ↓↑ma↓↑ pʰeɪ↓k⁼wa↓ p⁼wo↓↑ lwo↑an→, t⁼iɑʊ→ts`ʰan↑ ɥæn↓ ts`⁼ən↓↑ t⁼ʊŋ↓↑ʊŋ→ tʰa↓.
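The phoneme list can be inspected with a few lines of Python. The sketch below assumes, based only on the sample lines above, that the second field is an integer speaker index and the third is the phoneme string; `PhonemeEntry` and `parse_phoneme_line` are hypothetical names, not functions from the project.

```python
from typing import NamedTuple


class PhonemeEntry(NamedTuple):
    audio_path: str
    speaker_id: int  # assumed to be a numeric speaker index, as in the samples
    phonemes: str


def parse_phoneme_line(line: str) -> PhonemeEntry:
    """Split one phoneme-list line into its three '|'-separated fields."""
    # maxsplit=2 keeps the phoneme text intact even if it ever contained '|'.
    audio_path, speaker_id, phonemes = line.rstrip("\n").split("|", 2)
    return PhonemeEntry(audio_path, int(speaker_id), phonemes)


entry = parse_phoneme_line(
    "dataset/BZNSYP/Wave/000001.wav|0|kʰa↓↑əɹ`↓↑pʰu↓↑ pʰeɪ↑ waɪ↓swən→ wan↑ xwa↑tʰi→."
)
print(entry.audio_path, entry.speaker_id)
```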

You can now start training the model. The parameters in the configuration file generally do not need to be changed; the number of speakers and the speaker names are updated by `preprocess_data.py`. The only parameter you may need to change is the batch size, `dataset_conf.batch_size` (the training log below lists `batch_size` under `dataset_conf`). If GPU memory is insufficient, reduce this value.

# Single-GPU training

CUDA_VISIBLE_DEVICES=0 python train.py

# Multi-GPU training

CUDA_VISIBLE_DEVICES=0,1 torchrun --standalone --nnodes=1 --nproc_per_node=2 train.py

Training output log:

[2023-08-28 21:04:42.274452 INFO ] utils:print_arguments:123 - ----------- 额外配置参数 -----------

[2023-08-28 21:04:42.274540 INFO ] utils:print_arguments:125 - config: configs/config.yml

[2023-08-28 21:04:42.274580 INFO ] utils:print_arguments:125 - epochs: 10000

[2023-08-28 21:04:42.274658 INFO ] utils:print_arguments:125 - model_dir: models

[2023-08-28 21:04:42.274702 INFO ] utils:print_arguments:125 - pretrained_model: None

[2023-08-28 21:04:42.274746 INFO ] utils:print_arguments:125 - resume_model: None

[2023-08-28 21:04:42.274788 INFO ] utils:print_arguments:126 - ------------------------------------------------

[2023-08-28 21:04:42.727728 INFO ] utils:print_arguments:128 - ----------- 配置文件参数 -----------

[2023-08-28 21:04:42.727836 INFO ] utils:print_arguments:131 - dataset_conf:

[2023-08-28 21:04:42.727909 INFO ] utils:print_arguments:138 - add_blank: True

[2023-08-28 21:04:42.727975 INFO ] utils:print_arguments:138 - batch_size: 16

[2023-08-28 21:04:42.728037 INFO ] utils:print_arguments:138 - cleaned_text: True

[2023-08-28 21:04:42.728097 INFO ] utils:print_arguments:138 - eval_sum: 2

[2023-08-28 21:04:42.728157 INFO ] utils:print_arguments:138 - filter_length: 1024

[2023-08-28 21:04:42.728204 INFO ] utils:print_arguments:138 - hop_length: 256

[2023-08-28 21:04:42.728235 INFO ] utils:print_arguments:138 - max_wav_value: 32768.0

[2023-08-28 21:04:42.728266 INFO ] utils:print_arguments:138 - mel_fmax: None

[2023-08-28 21:04:42.728298 INFO ] utils:print_arguments:138 - mel_fmin: 0.0

[2023-08-28 21:04:42.728328 INFO ] utils:print_arguments:138 - n_mel_channels: 80

[2023-08-28 21:04:42.728359 INFO ] utils:print_arguments:138 - num_workers: 4

[2023-08-28 21:04:42.728388 INFO ] utils:print_arguments:138 - sampling_rate: 22050

[2023-08-28 21:04:42.728418 INFO ] utils:print_arguments:138 - speakers_file: dataset/speakers.json

[2023-08-28 21:04:42.728448 INFO ] utils:print_arguments:138 - text_cleaner: cjke_cleaners2

[2023-08-28 21:04:42.728483 INFO ] utils:print_arguments:138 - training_file: dataset/train.txt

[2023-08-28 21:04:42.728539 INFO ] utils:print_arguments:138 - validation_file: dataset/val.txt

[2023-08-28 21:04:42.728585 INFO ] utils:print_arguments:138 - win_length: 1024

[2023-08-28 21:04:42.728615 INFO ] utils:print_arguments:131 - model:

[2023-08-28 21:04:42.728648 INFO ] utils:print_arguments:138 - filter_channels: 768

[2023-08-28 21:04:42.728685 INFO ] utils:print_arguments:138 - gin_channels: 256

[2023-08-28 21:04:42.728717 INFO ] utils:print_arguments:138 - hidden_channels: 192

[2023-08-28 21:04:42.728747 INFO ] utils:print_arguments:138 - inter_channels: 192

[2023-08-28 21:04:42.728777 INFO ] utils:print_arguments:138 - kernel_size: 3

[2023-08-28 21:04:42.728808 INFO ] utils:print_arguments:138 - n_heads: 2

[2023-08-28 21:04:42.728839 INFO ] utils:print_arguments:138 - n_layers: 6

[2023-08-28 21:04:42.728870 INFO ] utils:print_arguments:138 - n_layers_q: 3

[2023-08-28 21:04:42.728902 INFO ] utils:print_arguments:138 - p_dropout: 0.1

[2023-08-28 21:04:42.728933 INFO ] utils:print_arguments:138 - resblock: 1

[2023-08-28 21:04:42.728965 INFO ] utils:print_arguments:138 - resblock_dilation_sizes: [[1, 3, 5], [1, 3, 5], [1, 3, 5]]

[2023-08-28 21:04:42.728997 INFO ] utils:print_arguments:138 - resblock_kernel_sizes: [3, 7, 11]

[2023-08-28 21:04:42.729027 INFO ] utils:print_arguments:138 - upsample_initial_channel: 512

[2023-08-28 21:04:42.729058 INFO ] utils:print_arguments:138 - upsample_kernel_sizes: [16, 16, 4, 4]

[2023-08-28 21:04:42.729089 INFO ] utils:print_arguments:138 - upsample_rates: [8, 8, 2, 2]

[2023-08-28 21:04:42.729119 INFO ] utils:print_arguments:138 - use_spectral_norm: False

[2023-08-28 21:04:42.729150 INFO ] utils:print_arguments:131 - optimizer_conf:

[2023-08-28 21:04:42.729184 INFO ] utils:print_arguments:138 - betas: [0.8, 0.99]

[2023-08-28 21:04:42.729217 INFO ] utils:print_arguments:138 - eps: 1e-09

[2023-08-28 21:04:42.729249 INFO ] utils:print_arguments:138 - learning_rate: 0.0002

[2023-08-28 21:04:42.729280 INFO ] utils:print_arguments:138 - optimizer: AdamW

[2023-08-28 21:04:42.729311 INFO ] utils:print_arguments:138 - scheduler: ExponentialLR

[2023-08-28 21:04:42.729341 INFO ] utils:print_arguments:134 - scheduler_args:

[2023-08-28 21:04:42.729373 INFO ] utils:print_arguments:136 - gamma: 0.999875

[2023-08-28 21:04:42.729404 INFO ] utils:print_arguments:131 - train_conf:

[2023-08-28 21:04:42.729437 INFO ] utils:print_arguments:138 - c_kl: 1.0

[2023-08-28 21:04:42.729467 INFO ] utils:print_arguments:138 - c_mel: 45

[2023-08-28 21:04:42.729498 INFO ] utils:print_arguments:138 - enable_amp: True

[2023-08-28 21:04:42.729530 INFO ] utils:print_arguments:138 - log_interval: 200

[2023-08-28 21:04:42.729561 INFO ] utils:print_arguments:138 - seed: 1234

[2023-08-28 21:04:42.729592 INFO ] utils:print_arguments:138 - segment_size: 8192

[2023-08-28 21:04:42.729622 INFO ] utils:print_arguments:141 - ------------------------------------------------

[2023-08-28 21:04:42.729971 INFO ] trainer:__init__:53 - [cjke_cleaners2]支持语言:['日本語', '普通话', 'English', '한국어', "Mix": ""]

[2023-08-28 21:04:42.795955 INFO ] trainer:__setup_dataloader:119 - 训练数据:9984

epoch [1/10000]: 100%|██████████| 619/619 [05:30<00:00, 1.88it/s]

[2023-08-25 16:44:25.205557 INFO ] trainer:train:168 - ======================================================================

epoch [2/10000]: 100%|██████████| 619/619 [05:20<00:00, 1.93it/s]

[2023-08-25 16:49:54.372718 INFO ] trainer:train:168 - ======================================================================

epoch [3/10000]: 100%|██████████| 619/619 [05:19<00:00, 1.94it/s]

[2023-08-25 16:55:21.277194 INFO ] trainer:train:168 - ======================================================================

epoch [4/10000]: 100%|██████████| 619/619 [05:18<00:00, 1.94it/s]

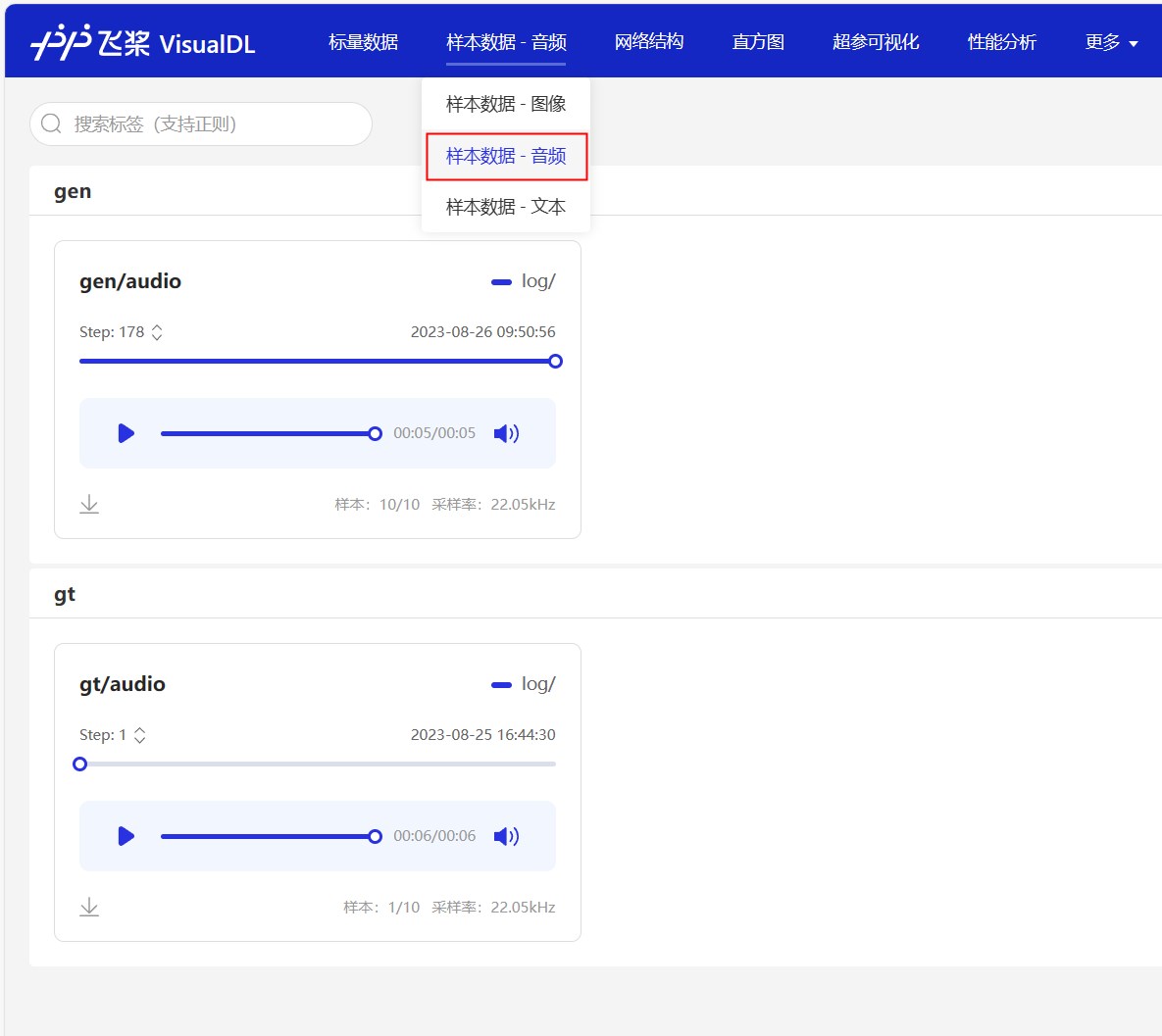

The training logs will also be saved using VisualDL. You can use this tool to view the loss changes and synthesis effects in real time. Just execute visualdl --logdir=log/ --host=0.0.0.0 in the root directory of the project, and visit http://<IP地址>:8040 to open the page. The effect is as follows.

Once the model has been trained long enough, you can use it for speech synthesis with the command below. There are three main parameters: `--text` specifies the text to synthesize; `--language` specifies the language of that text, and if it is set to `Mix`, mixed mode is used and you must wrap the input text in language tags yourself; `--spk` specifies the speaker. Give it a try.

python infer.py --text="你好,我是智能语音助手。" --language=普通话 --spk=标准女声
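For `Mix` mode, the tagged input string can be assembled programmatically rather than typed by hand. The following is a minimal sketch under stated assumptions: `wrap_mixed` is a hypothetical helper (not part of the project), and it only accepts the four `cjke_cleaners2` tags listed earlier in this README.

```python
# Hypothetical helper for building Mix-mode input; not part of the project.
ALLOWED_TAGS = {"[ZH]", "[JA]", "[EN]", "[KO]"}  # tags listed for cjke_cleaners2


def wrap_mixed(segments):
    """Join (tag, text) pairs, wrapping each text segment in its language tag."""
    parts = []
    for tag, text in segments:
        if tag not in ALLOWED_TAGS:
            raise ValueError(f"unsupported language tag: {tag}")
        parts.append(f"{tag}{text}{tag}")
    return "".join(parts)


mixed = wrap_mixed([("[ZH]", "你好。"), ("[EN]", "Hello world.")])
print(mixed)
```

The resulting string would then be passed as the `--text` value together with `--language=Mix`.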