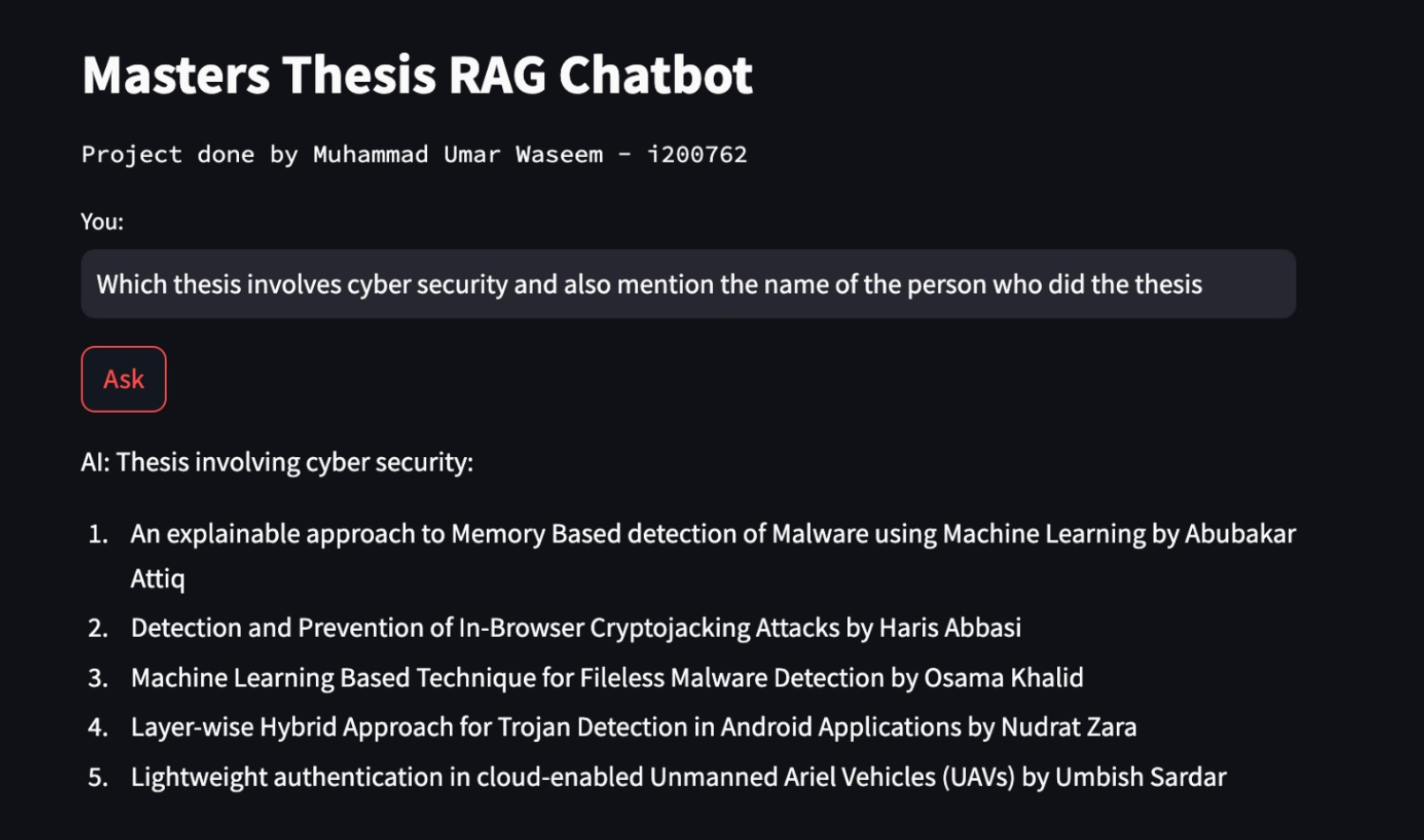

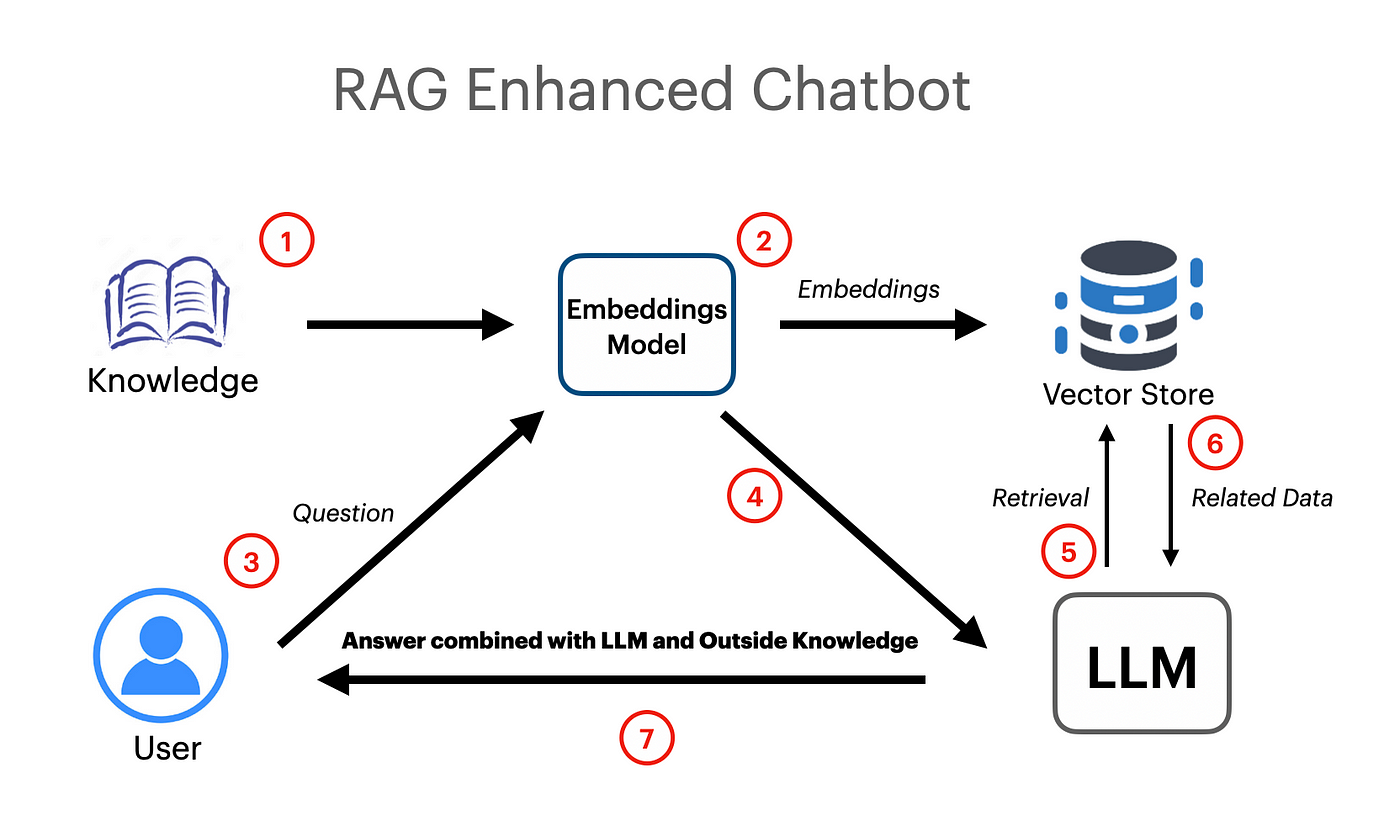

A LangChain-based RAG (Retrieval-Augmented Generation) app that answers questions using vector embeddings and Google's Gemini Pro LLM.

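Set your Google API key as an environment variable before running the app:

```bash
export GOOGLE_API_KEY="your api key"
```

`UnstructuredExcelLoader` from `langchain.document_loaders` (`langchain_community.document_loaders` in newer LangChain releases) is used to load Excel spreadsheet data into LangChain documents.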
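How the spreadsheet is split into documents depends on the loader's settings, so the following is only a simplified stdlib illustration of the idea, not what `UnstructuredExcelLoader` does internally. It assumes the sheet has been exported to CSV with hypothetical columns `name`, `title`, `abstract` and `link`, and turns each row (one person and their thesis) into one text document. `Doc` is a hypothetical stand-in for a LangChain `Document`.

```python
import csv
import io
from dataclasses import dataclass, field


@dataclass
class Doc:
    """Hypothetical stand-in for a LangChain Document: text plus metadata."""
    page_content: str
    metadata: dict = field(default_factory=dict)


def rows_to_docs(csv_text: str) -> list[Doc]:
    """Turn each CSV row (one person and their thesis) into one text document."""
    docs = []
    for i, row in enumerate(csv.DictReader(io.StringIO(csv_text))):
        # Keep the link in the text itself so the model can see it in the context.
        content = (
            f"Name: {row['name']}\n"
            f"Thesis title: {row['title']}\n"
            f"Abstract: {row['abstract']}\n"
            f"Link: {row['link']}"
        )
        docs.append(Doc(page_content=content, metadata={"row": i}))
    return docs


# Hypothetical sample data in the assumed column layout.
sample = (
    "name,title,abstract,link\n"
    "Ada Example,Graph Methods,A study of graph algorithms.,https://example.org/ada\n"
)
docs = rows_to_docs(sample)
print(docs[0].page_content)
```

Putting every field into `page_content` matters because, by default, only the document text (not its metadata) ends up in the prompt's context.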

The Google Gemini Pro model is used for contextual chat completion.

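```python
import os

from langchain_google_genai import ChatGoogleGenerativeAI, GoogleGenerativeAIEmbeddings

# Chat model used to generate the answers
llm = ChatGoogleGenerativeAI(model="gemini-pro", google_api_key=os.environ["GOOGLE_API_KEY"])

# Embedding model used to turn documents and queries into vectors
embeddings = GoogleGenerativeAIEmbeddings(model="models/embedding-001")
```

A FAISS (Facebook AI Similarity Search) vector store is used to create and store semantic embeddings for the loaded documents. The vector store can later be queried with a similarity search to retrieve the most relevant information.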
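To show what "similarity search" means here, the sketch below does an exact, brute-force nearest-neighbour search by Euclidean (L2) distance, which is what a flat FAISS index computes; LangChain's `FAISS.from_documents` builds a flat L2 index by default. The three-dimensional vectors and the `thesis_*` ids are made-up toy values; real Gemini embeddings have many more dimensions, and FAISS performs this search far more efficiently.

```python
import heapq
import math


def l2_distance(a: list[float], b: list[float]) -> float:
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def top_k(query: list[float], vectors: dict[str, list[float]], k: int) -> list[str]:
    """Return the ids of the k stored vectors closest to the query (exact search)."""
    return heapq.nsmallest(k, vectors, key=lambda doc_id: l2_distance(query, vectors[doc_id]))


# Toy "embeddings" for three documents (made-up values).
store = {
    "thesis_a": [0.9, 0.1, 0.0],
    "thesis_b": [0.1, 0.9, 0.0],
    "thesis_c": [0.8, 0.2, 0.1],
}
print(top_k([1.0, 0.0, 0.0], store, k=2))  # ['thesis_a', 'thesis_c']
```

Here `k` plays the same role as `search_kwargs={"k": 5}` when the vector store is turned into a retriever.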

```python
import pickle

from langchain_community.vectorstores import FAISS

# `docs` are the documents loaded with UnstructuredExcelLoader
vectordb = FAISS.from_documents(documents=docs, embedding=embeddings)

# Save the vector store as a pickle file
# (FAISS.save_local / FAISS.load_local are LangChain's built-in alternative)
with open("vectorstore.pkl", "wb") as f:
    pickle.dump(vectordb, f)

# Load the vector store from the pickle file
with open("vectorstore.pkl", "rb") as f:
    my_vector_database = pickle.load(f)

# Retriever that returns the 5 most similar documents
retriever = my_vector_database.as_retriever(search_kwargs={"k": 5})
```

`PromptTemplate` from LangChain is used to build the prompt that is passed to the model. The template contains input variables, `{context}` and `{input}`, which are filled in at run time: `context` with the retrieved documents and `input` with the user's question.
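`PromptTemplate.from_template` works out the input variables from the `{...}` placeholders in the template string (for the default f-string format). A similar result can be obtained with the standard library's `string.Formatter().parse`; the helper name `template_variables` is hypothetical, and this is an approximation of the idea rather than LangChain's own code.

```python
from string import Formatter


def template_variables(template: str) -> list[str]:
    """Collect the named {placeholders} in an f-string-style template, sorted."""
    # Formatter().parse yields (literal_text, field_name, format_spec, conversion);
    # field_name is None for trailing literal text without a placeholder.
    names = {name for _, name, _, _ in Formatter().parse(template) if name}
    return sorted(names)


template = "context: {context}\n\ninput: {input}\n\nanswer:"
print(template_variables(template))  # ['context', 'input']
```

These two names are exactly what the retrieval chain has to supply: the retrieved documents for `context` and the user's question for `input`.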

```python
from langchain_core.prompts import PromptTemplate

template = """
You are a very helpful AI assistant.
You answer every question and apologize politely if you don't know the answer.
The context contains information about a person,
the title of their thesis,
the abstract of their thesis
and a link to their thesis.
Your task is to answer based on that information.

context: {context}

input: {input}

answer:
"""

prompt = PromptTemplate.from_template(template)
```

A retrieval chain is used to pass the retrieved documents to the model as context for Retrieval-Augmented Generation.

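```python
from langchain.chains import create_retrieval_chain
from langchain.chains.combine_documents import create_stuff_documents_chain

# "Stuff" the retrieved documents into the prompt's {context} and call the LLM
combine_docs_chain = create_stuff_documents_chain(llm, prompt)

# Retrieve documents relevant to the user's input, then run the chain above
retrieval_chain = create_retrieval_chain(retriever, combine_docs_chain)
```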
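To make the data flow of the two chains concrete, here is a stdlib-only mock under stated assumptions: a toy keyword retriever stands in for FAISS, the retrieved texts are "stuffed" into the prompt joined by blank lines (LangChain's default document separator for the stuff chain is also a blank line), and a hypothetical `fake_llm` function stands in for Gemini. All names here are illustrative; only the shape of the result mirrors the real chain, whose output dict also has `input`, `context` and `answer` keys (with real `Document` objects in `context`).

```python
import re


def tokens(text: str) -> set[str]:
    """Lower-cased word tokens, ignoring punctuation."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(question: str, docs: list[str], k: int = 5) -> list[str]:
    """Toy retriever (stand-in for FAISS): rank docs by words shared with the question."""
    q = tokens(question)
    return sorted(docs, key=lambda d: len(q & tokens(d)), reverse=True)[:k]


def fake_llm(prompt: str) -> str:
    """Stand-in for Gemini: a real model would generate an answer from the prompt."""
    return f"(answer generated from a {len(prompt)}-character prompt)"


TEMPLATE = "context: {context}\n\ninput: {input}\n\nanswer:"


def run_chain(question: str, docs: list[str], k: int = 2) -> dict:
    """Mimic the retrieval chain: retrieve, stuff the docs into the prompt, call the model."""
    context_docs = retrieve(question, docs, k=k)
    # The stuff step joins the retrieved texts with blank lines into {context}.
    prompt = TEMPLATE.format(context="\n\n".join(context_docs), input=question)
    return {"input": question, "context": context_docs, "answer": fake_llm(prompt)}


corpus = [
    "Ada Example wrote the thesis Graph Methods.",
    "Bo Sample wrote the thesis Protein Folding.",
    "Cy Test wrote the thesis Compiler Design.",
]
result = run_chain("Who wrote Graph Methods?", corpus)
print(result["context"][0])  # Ada Example wrote the thesis Graph Methods.
```

With the real chain, the equivalent call is `response = retrieval_chain.invoke({"input": "Who wrote Graph Methods?"})`, and the generated text is in `response["answer"]`.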