GPT2-Chinese

Description

- Chinese version of GPT2 training code, using either BERT's tokenizer or a SentencePiece BPE model (thanks to kangzhonghua for the contribution; using the BPE model requires slightly modifying train.py). It is based on the Transformers repository from the HuggingFace team. It can write poems, news, and novels, or train general language models. It supports character-level, word-level (word segmentation), and BPE-level tokenization, and it supports large training corpora.

UPDATE 04.11.2024

- Thank you very much for your attention to this project. Since the release of ChatGPT, it has attracted renewed interest. The project is a practice program I wrote to teach myself PyTorch, and I have no plans for long-term maintenance or updates. If you are interested in large language models (LLMs), you can email me ([email protected]) to join the discussion group, or discuss it in an Issue.

UPDATE 02.06.2021

This project has added a general Chinese GPT-2 pre-trained model, a general Chinese GPT-2 pre-trained small model, a Chinese lyrics GPT-2 pre-trained model, and a classical Chinese GPT-2 pre-trained model. The models were trained with the UER-py project, and you are welcome to use them. They have also been uploaded to the Huggingface Model Hub. For more details, see gpt2-chinese-cluecorpussmall, gpt2-distil-chinese-cluecorpussmall, gpt2-chinese-lyric, and gpt2-chinese-ancient.

When generating with any of these models, add the starting symbol [CLS] before the input text. For example, to enter "The most beautiful thing is not the rainy day, it is the eaves that have escaped from you", the correct format is "[CLS]The most beautiful thing is not the rainy day, it is the eaves that have escaped from you".
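The starting-symbol rule above can be applied with a small helper. This is a minimal sketch: the function name `add_start_symbol` is an illustrative assumption and is not part of this project's code, and it adds no space after the symbol, matching the poem and couplet examples in this README.

```python
START_SYMBOL = "[CLS]"  # starting symbol expected before the input text


def add_start_symbol(text: str) -> str:
    """Prepend the starting symbol unless the text already begins with it."""
    if text.startswith(START_SYMBOL):
        return text
    return START_SYMBOL + text


prompt = add_start_symbol("The most beautiful thing is not the rainy day")
print(prompt)
```

The resulting string is what you would pass as the prompt to whichever generation interface you use.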

UPDATE 11.03.2020

This project has added an ancient poem GPT-2 pre-trained model and a couplet GPT-2 pre-trained model. The models were trained with the UER-py project, and you are welcome to use them. They have also been uploaded to the Huggingface Model Hub. For more details, see gpt2-chinese-poem and gpt2-chinese-couplet.

When generating with the ancient poem model, add the starting symbol before the input text. For example, to enter "Meishan is like Jiqing,", the correct format is "[CLS]Meishan is like Jiqing,".

The corpus format used to train the couplet model is "upper couplet-lower couplet". When generating with the couplet model, add the starting symbol before the input text. For example, to enter "Danfeng River Lengren Chu Go-", the correct format is "[CLS]Danfeng River Lengren Chu Go-".
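The couplet conventions above (a "-" between the two lines in the corpus, and a prompt made of the starting symbol, the upper line, and the separator) can be sketched as follows. The helper names are hypothetical and only illustrate the string format; they are not functions from this project.

```python
START_SYMBOL = "[CLS]"
SEPARATOR = "-"  # separates the upper and lower couplet lines


def make_training_line(upper: str, lower: str) -> str:
    """Join two lines in the "upper couplet-lower couplet" corpus format."""
    return f"{upper}{SEPARATOR}{lower}"


def make_couplet_prompt(upper: str) -> str:
    """Build a generation prompt: starting symbol, upper line, then separator."""
    return f"{START_SYMBOL}{upper}{SEPARATOR}"


print(make_training_line("upper line", "lower line"))
print(make_couplet_prompt("upper line"))
```

Ending the prompt with the separator asks the model to continue with the lower line.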

NEWS 08.11.2020

- CDial-GPT (which can be loaded with this code) has been published. It contains a strictly cleaned, large-scale, open-domain Chinese dialogue dataset, along with a GPT dialogue pre-trained model trained on that dataset and generated samples. Everyone is welcome to visit.

NEWS 12.9.2019

- The new project GPT2-chitchat has been released, partly based on this project's code. It contains the code and trained model for a GPT2 dialogue model, as well as generated samples. Everyone is welcome to visit.

NEWS 12.7.2019

- The new project Decoders-Chinese-TF2.0 also supports Chinese GPT2 training. It is simpler to use and less prone to problems. It is still in the testing stage, and feedback is welcome.

NEWS 11.9

- GPT2-ML (not directly associated with this project) has been released and includes a 1.5B Chinese GPT2 model. If you are interested, you can convert it to the PyTorch format supported by this project for further training or generation testing.

UPDATE 10.25

- The first pre-trained model of this project, a prose generation model, has been released. For details, see the Model sharing section of this README.

Project Status

- When this project was first released, there were almost no Chinese GPT2 resources; the situation is different now. In addition, the project's features are basically stable, so development has been paused for the time being. I originally wrote this code to practice using PyTorch. Although I did some further work later, many aspects remain immature, so please bear with me.

How to use

- Create a data folder in the project root directory. Put the training corpus in the data directory under the name train.json. train.json is a JSON list, and each element of the list is the text content of one article to train on (not a file link).

- Run train.py with the --raw flag to preprocess the data automatically.

- After preprocessing is complete, training starts automatically.
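The corpus layout described above can be produced with a few lines of Python. This is a minimal sketch: the helper name `write_train_json` and the sample article strings are assumptions for illustration, while the data folder, the train.json file name, and the list-of-texts structure come from the steps above.

```python
import json
from pathlib import Path


def write_train_json(articles, project_root="."):
    """Write a list of article texts to <project_root>/data/train.json."""
    data_dir = Path(project_root) / "data"
    data_dir.mkdir(parents=True, exist_ok=True)  # create the data folder if missing
    train_path = data_dir / "train.json"
    with train_path.open("w", encoding="utf-8") as f:
        # ensure_ascii=False keeps Chinese characters readable in the file.
        json.dump(list(articles), f, ensure_ascii=False)
    return train_path


# Hypothetical corpus: each element is the full text of one article.
articles = [
    "Full text of the first article.",
    "Full text of the second article.",
]
```

Calling `write_train_json(articles)` from the project root creates data/train.json; running train.py with --raw then preprocesses it as described above.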

Generate text

python ./generate.py --length=50 --nsamples=4 --prefix=xxx --fast_pattern --save_samples --save_samples_path=/mnt/xx

- --fast_pattern (contributed by LeeCP8): If the generated length is relatively small, the speed difference is negligible; in my own test with length=250, it was about 2 seconds faster. If --fast_pattern is not passed, the fast_pattern method is not used.

- --save_samples: By default, output samples are printed directly to the console. If this flag is passed, they are saved to samples.txt in the root directory.

- --save_samples_path: Specifies the directory to save to. Multi-level directories are created recursively by default. Do not pass a file name; the file name is samples.txt by default.
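The saving behavior described for --save_samples and --save_samples_path (recursive directory creation and a fixed samples.txt file name) can be sketched as below. This is not the code from generate.py; the function name `save_samples` and the per-sample header line are assumptions for illustration.

```python
import os


def save_samples(samples, save_dir="."):
    """Write samples to <save_dir>/samples.txt, creating directories as needed."""
    # makedirs creates every missing level of a nested path in one call.
    os.makedirs(save_dir, exist_ok=True)
    path = os.path.join(save_dir, "samples.txt")  # the file name is fixed
    with open(path, "w", encoding="utf-8") as f:
        for index, text in enumerate(samples, start=1):
            # The header layout is an illustrative assumption.
            f.write(f"=== SAMPLE {index} ===\n{text}\n")
    return path
```

Because the function accepts only a directory, a caller cannot change the file name, which mirrors the restriction described above.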

File structure

- generate.py and train.py are scripts for generation and training respectively.

- train_single.py is an extension of train.py that can be used for a list containing a single very large element (such as training on one book, for example Doupo Cangqiong).

- eval.py is used to evaluate the perplexity (ppl) score of the trained model.

- generate_texts.py is an extension of generate.py. It generates several sentences for each starting keyword in a list and writes them to a file.

- train.json is a format example of training samples for reference.

- The cache folder contains several BERT vocabularies. make_vocab.py is a script that helps build a vocabulary from a train.json corpus file. vocab.txt is the original BERT vocabulary, vocab_all.txt adds extra classical Chinese words, and vocab_small.txt is a small vocabulary.

- The tokenizations folder contains three tokenizers to choose from: the default BERT tokenizer, a word-segmentation version of the BERT tokenizer, and a BPE tokenizer.

- The scripts folder contains sample training and generation scripts.
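For readers unfamiliar with the ppl score mentioned for eval.py, perplexity is conventionally the exponential of the mean negative log-likelihood per token. The sketch below shows that standard definition only; how eval.py computes it internally is not shown here, and the function name `perplexity` is an assumption.

```python
import math


def perplexity(token_log_probs):
    """Return exp(mean negative log-likelihood) for natural-log token probabilities."""
    if not token_log_probs:
        raise ValueError("at least one token log-probability is required")
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)


# A model that assigns probability 0.25 to every token is as uncertain as a
# uniform choice among four options.
uniform_score = perplexity([math.log(0.25)] * 3)
```

Lower perplexity means the model assigns higher probability to the evaluated text.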

Notice

- This project uses Bert's tokenizer to process Chinese characters.

- If you do not use the word-segmentation version of the tokenizer, you do not need to segment the text yourself in advance; the tokenizer does it for you.

- If you use the word-segmentation version of the tokenizer, it is best to use make_vocab.py in the cache folder to build a vocabulary for your corpus.

- You need to train the model yourself. If you have completed pre-training, you are welcome to get in touch and share.

- If you have a lot of memory or the corpus is small, you can change the corresponding code in the build files step of train.py to preprocess the corpus directly without splitting it.

- If you use the BPE tokenizer, you need to create your own Chinese vocabulary.

Materials

- The corpus can be downloaded from here and here.

- The Doupo Cangqiong corpus can be downloaded from here.

FP16 and Gradient Accumulation support

- I have added fp16 and gradient accumulation support to train.py. If you have apex installed and know what fp16 is, you can set the variable fp16=True to enable it. Currently, however, training with fp16 may fail to converge, for reasons that are not yet known.
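As background on gradient accumulation: gradients from several small batches are averaged before a single optimizer step, which imitates a larger batch when memory is limited. The sketch below demonstrates the arithmetic in plain Python on a toy one-parameter model. It is not the train.py implementation, it does not cover fp16 or apex, and all names in it are illustrative assumptions.

```python
def mse_grad(w, batch):
    """Gradient of the mean squared error of the model y = w * x with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def accumulated_grad(w, data, micro_batch_size):
    """Average micro-batch gradients, as is done before one optimizer step."""
    micro_batches = [
        data[i:i + micro_batch_size]
        for i in range(0, len(data), micro_batch_size)
    ]
    accum_steps = len(micro_batches)
    grad = 0.0
    for micro_batch in micro_batches:
        # Scaling by 1 / accum_steps turns the running sum into an average.
        grad += mse_grad(w, micro_batch) / accum_steps
    return grad


data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]
w = 0.5
full_grad = mse_grad(w, data)  # gradient on the whole batch at once
accum_grad = accumulated_grad(w, data, micro_batch_size=2)

learning_rate = 0.01
w_after_step = w - learning_rate * accum_grad  # one update after accumulation
```

With equally sized micro-batches, `accum_grad` matches `full_grad`, which is why accumulation can stand in for a larger batch size.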

Contact the author

Citing

@misc{GPT2-Chinese,
  author = {Zeyao Du},
  title = {GPT2-Chinese: Tools for training GPT2 model in Chinese language},
  year = {2019},
  publisher = {GitHub},
  journal = {GitHub repository},
  howpublished = {\url{https://github.com/Morizeyao/GPT2-Chinese}},
}

Model sharing

| Model name | Model introduction | Shared by | Link address 1 | Link address 2 |
|---|---|---|---|---|
| Prose model | Trained on 130MB of famous prose, emotional prose, and prose poetry. | hughqiu | Baidu Netdisk【fpyu】 | GDrive |
| Poetry model | Trained on about 800,000 ancient poems (180MB). | hhou435 | Baidu Netdisk【7fev】 | GDrive |
| Couplet model | Trained on about 700,000 couplets (40MB). | hhou435 | Baidu Netdisk【i5n0】 | GDrive |
| General Chinese model | Trained on the CLUECorpusSmall corpus. | hhou435 | Baidu Netdisk【n3s8】 | GDrive |
| General Chinese small model | Trained on the CLUECorpusSmall corpus. | hhou435 | Baidu Netdisk【rpjk】 | GDrive |
| Chinese lyrics model | Trained on about 150,000 Chinese lyrics (140MB). | hhou435 | Baidu Netdisk【0qnn】 | GDrive |
| Classical Chinese model | Trained on about 3 million classical Chinese texts (1.8GB). | hhou435 | Baidu Netdisk【ek2z】 | GDrive |

These model files were trained and generously shared by community members and are open for everyone to use. You are also welcome to share models you have trained here.

Demo

- A model trained by user JamesHujy with code modified from this repository serves as the backend for regulated verse and quatrains in the new version of the Nine Songs Poetry Generator, which has been launched.

- leemengtaiwan contributed an article that gives an intuitive introduction to GPT-2 and shows how to visualize the self-attention mechanism. A Colab notebook and model are also provided so that anyone can generate new samples with one click.

Generate sample

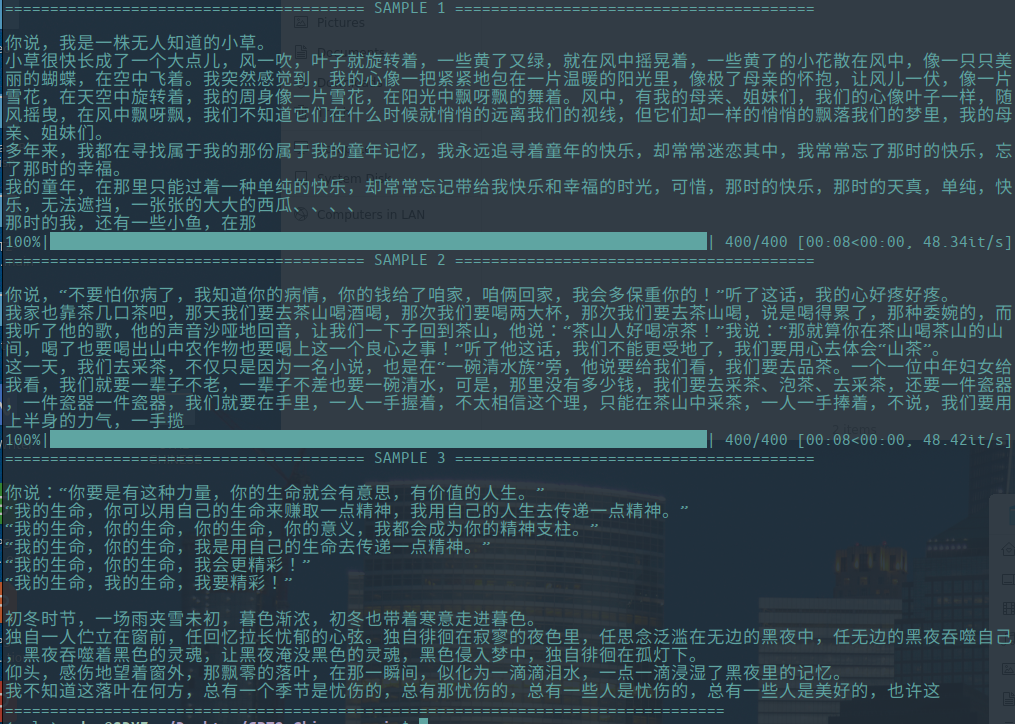

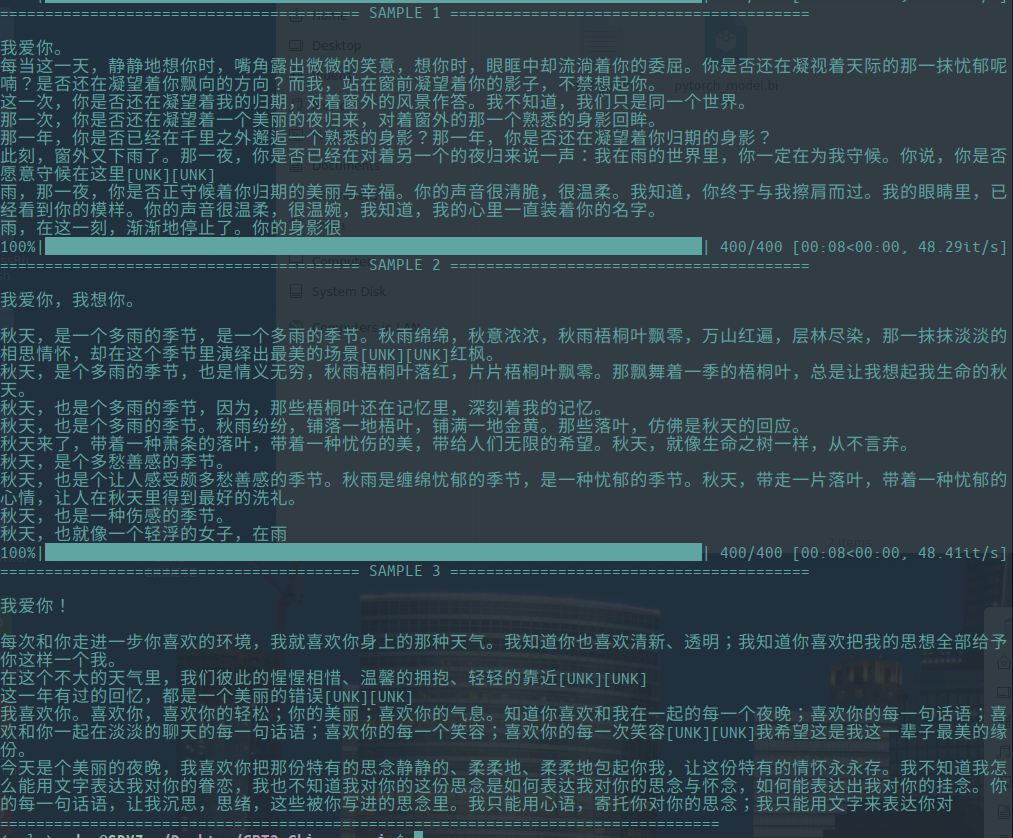

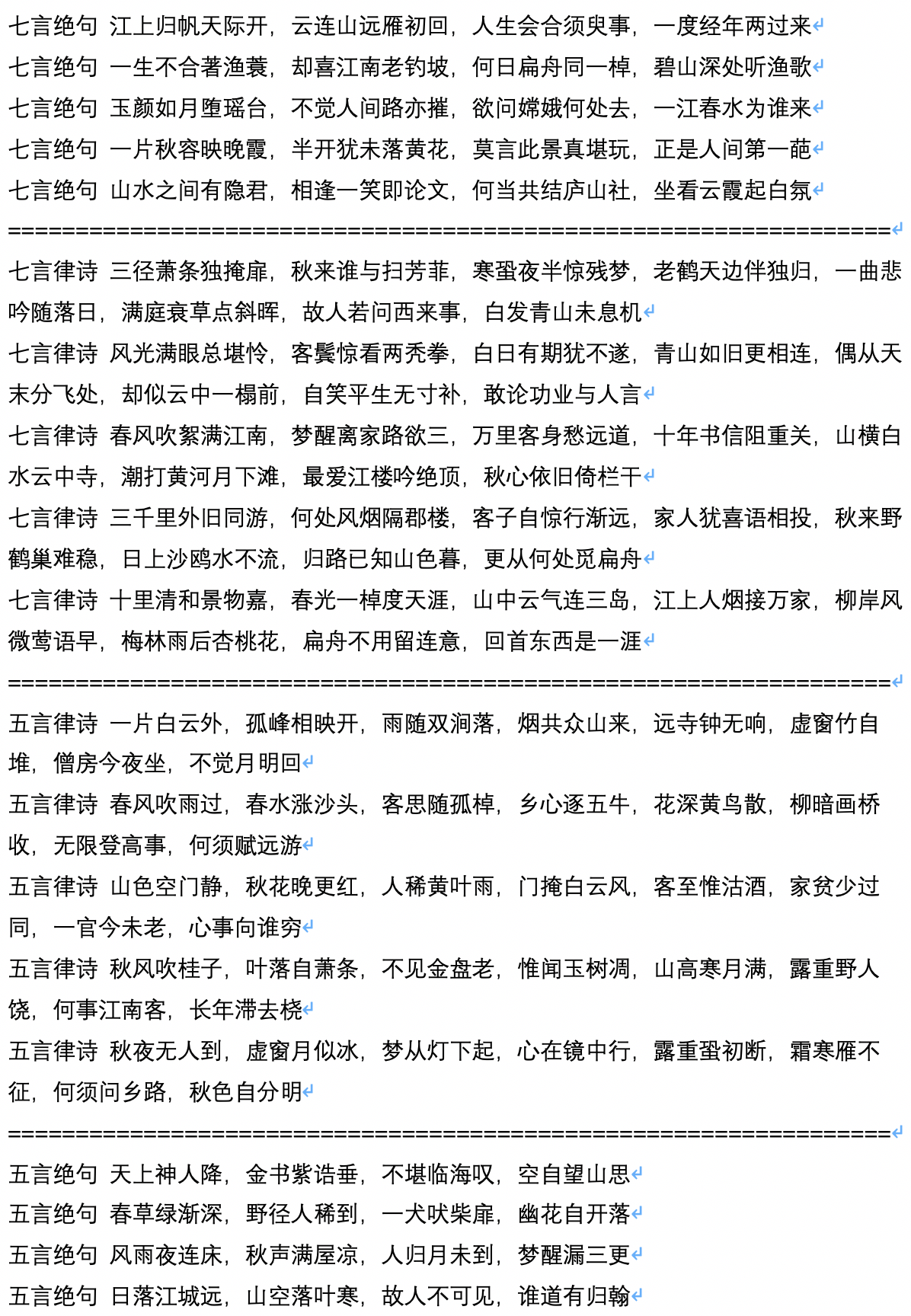

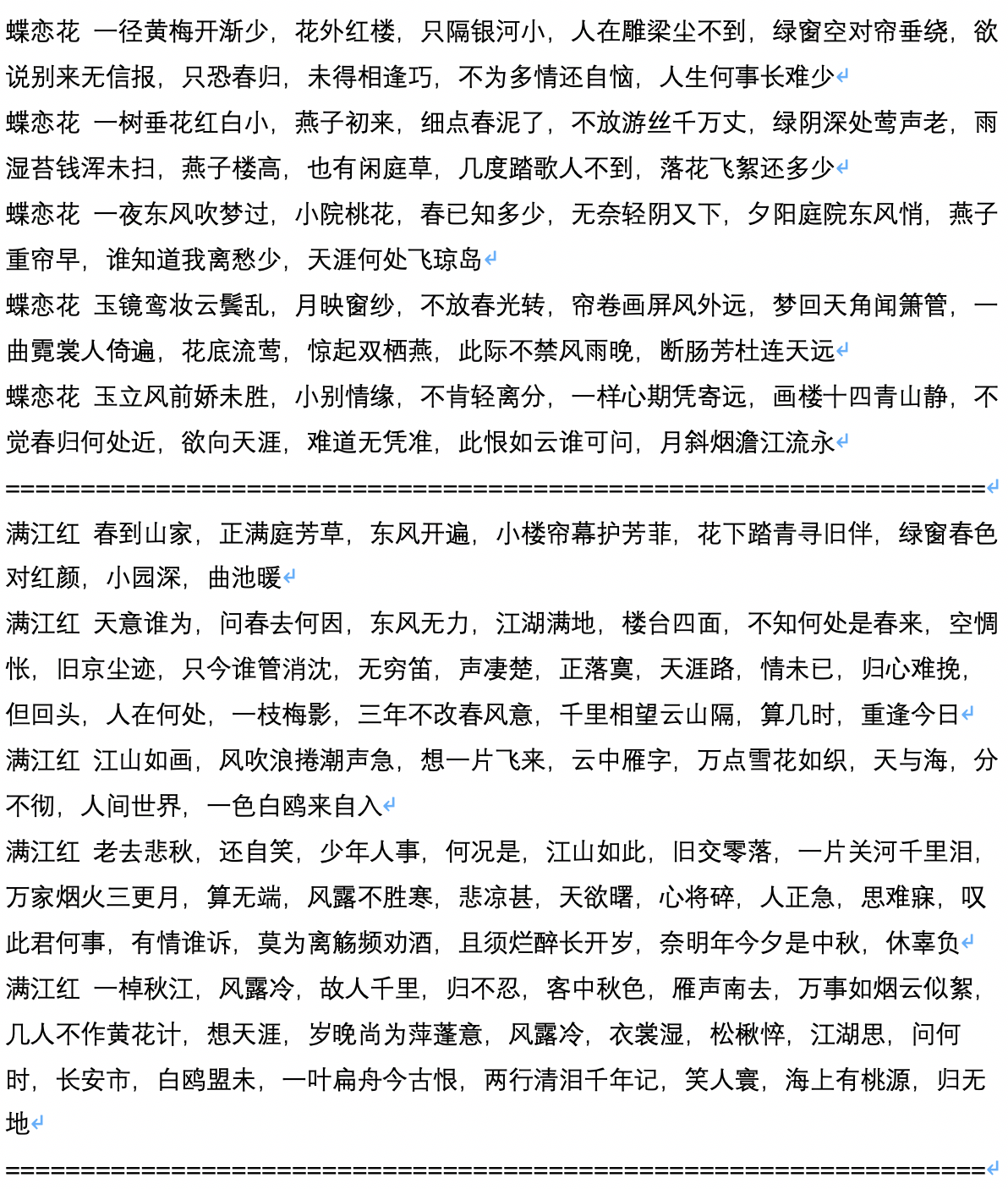

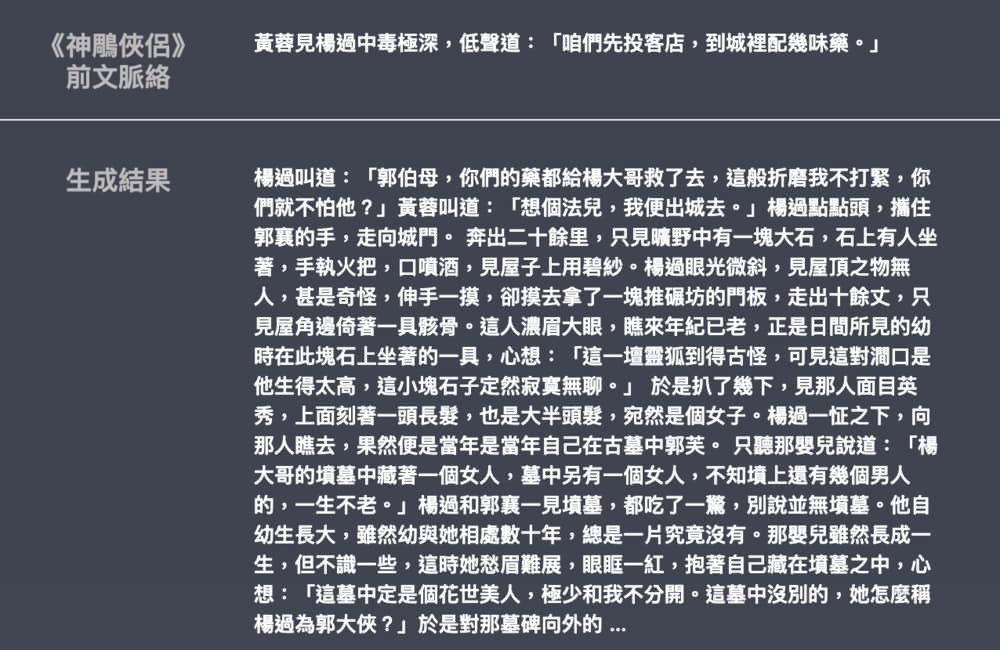

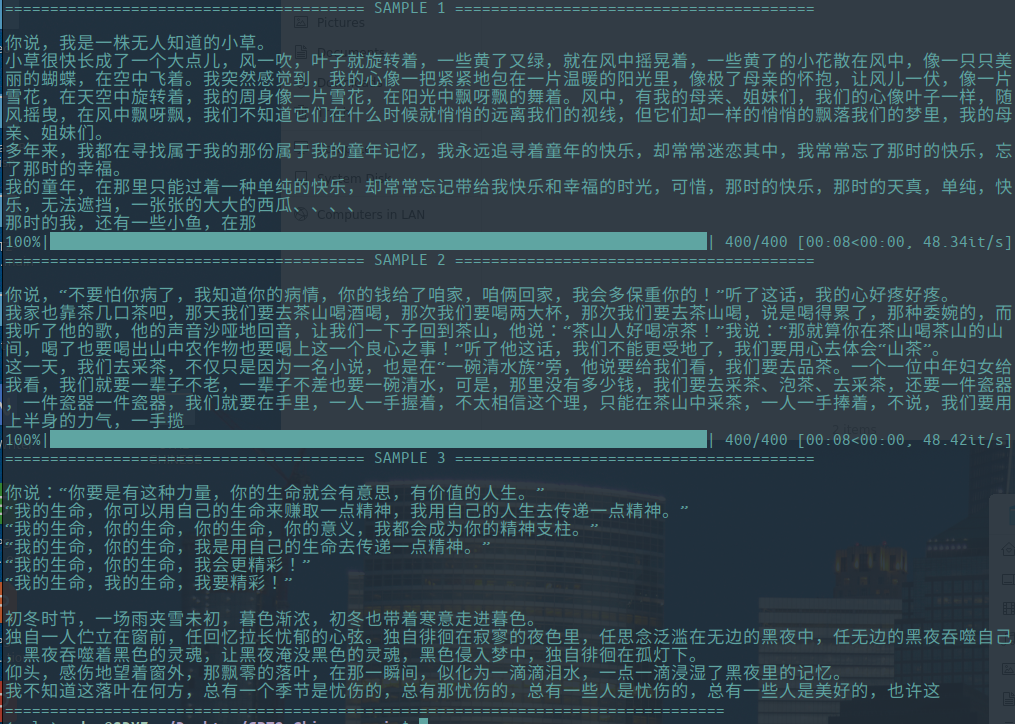

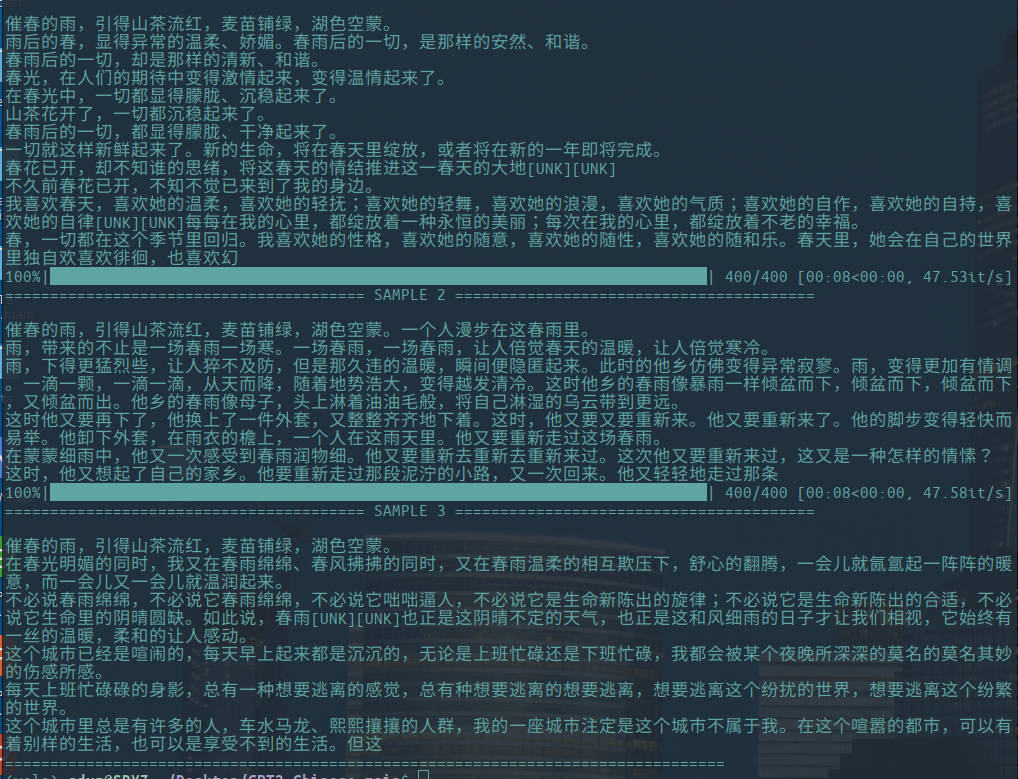

- The following are samples of literary prose generation, contributed by hughqiu; the model is available in the model sharing list. The corpus is 130MB, with a batch size of 16 and 10 epochs of training on a 10-layer model.

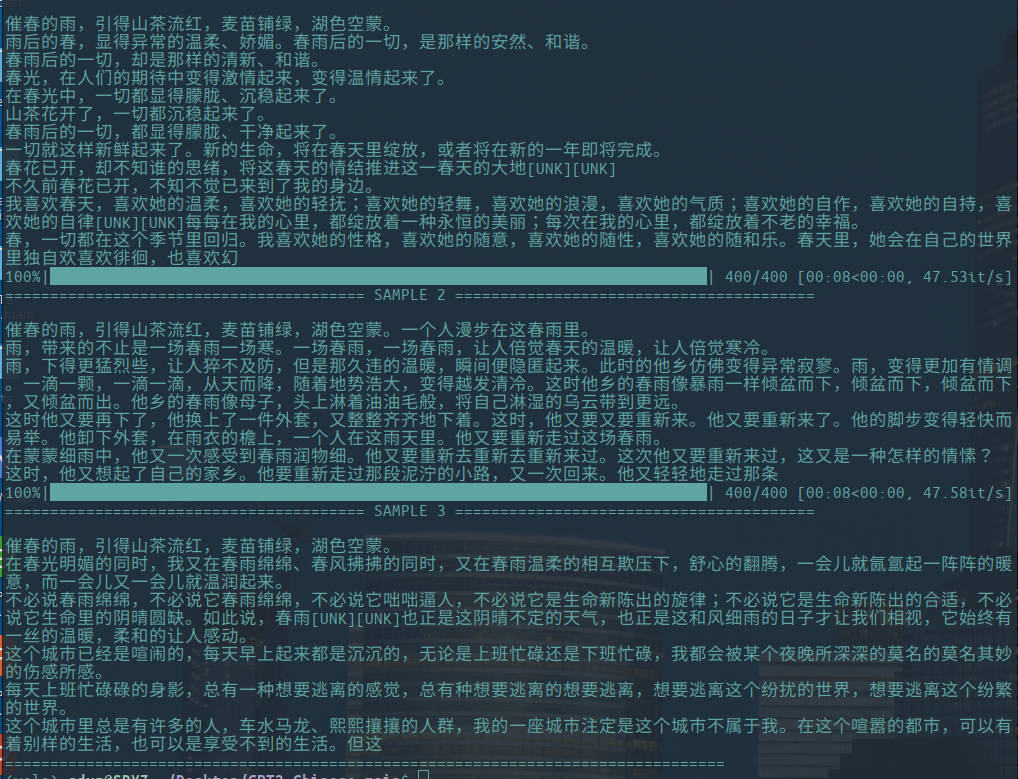

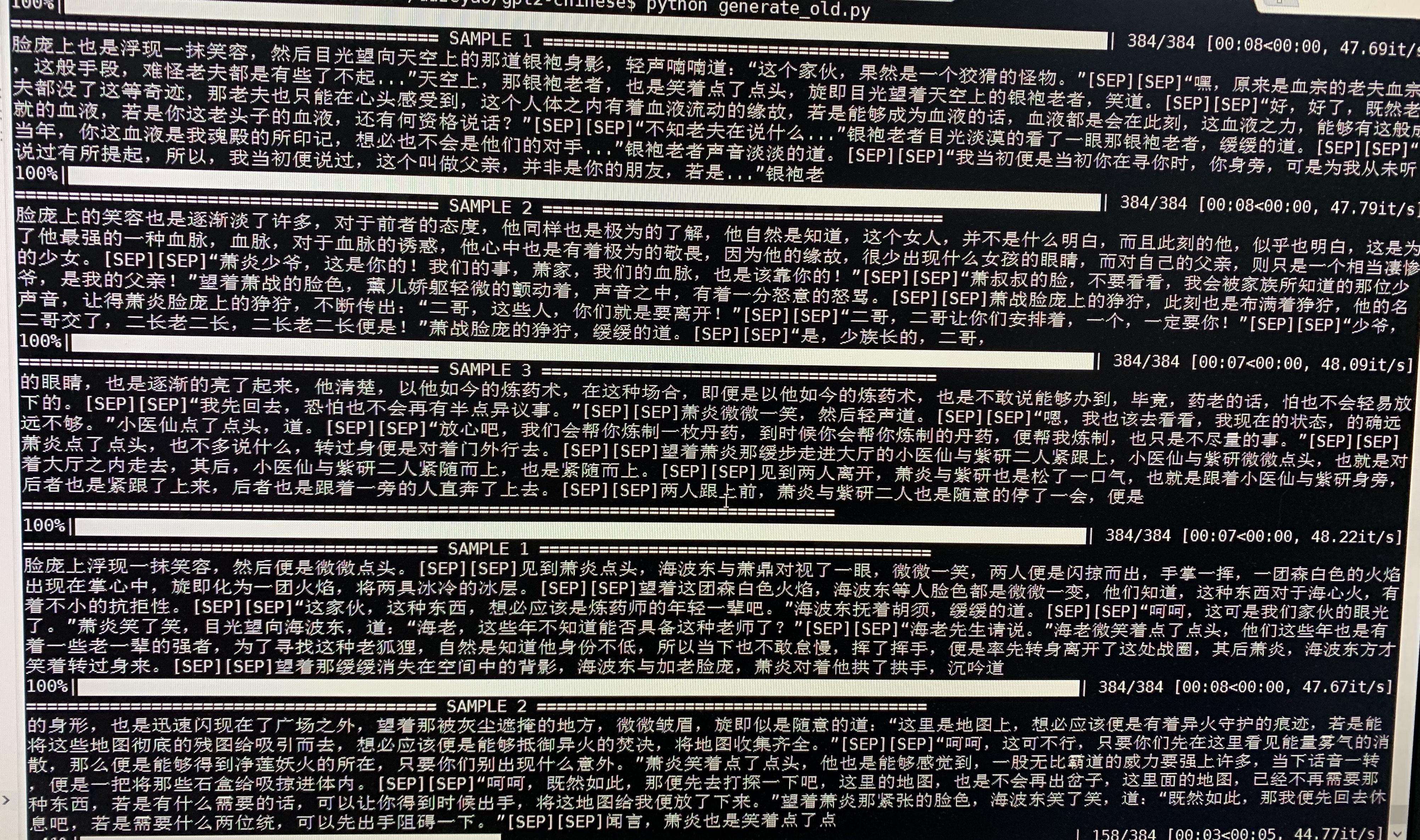

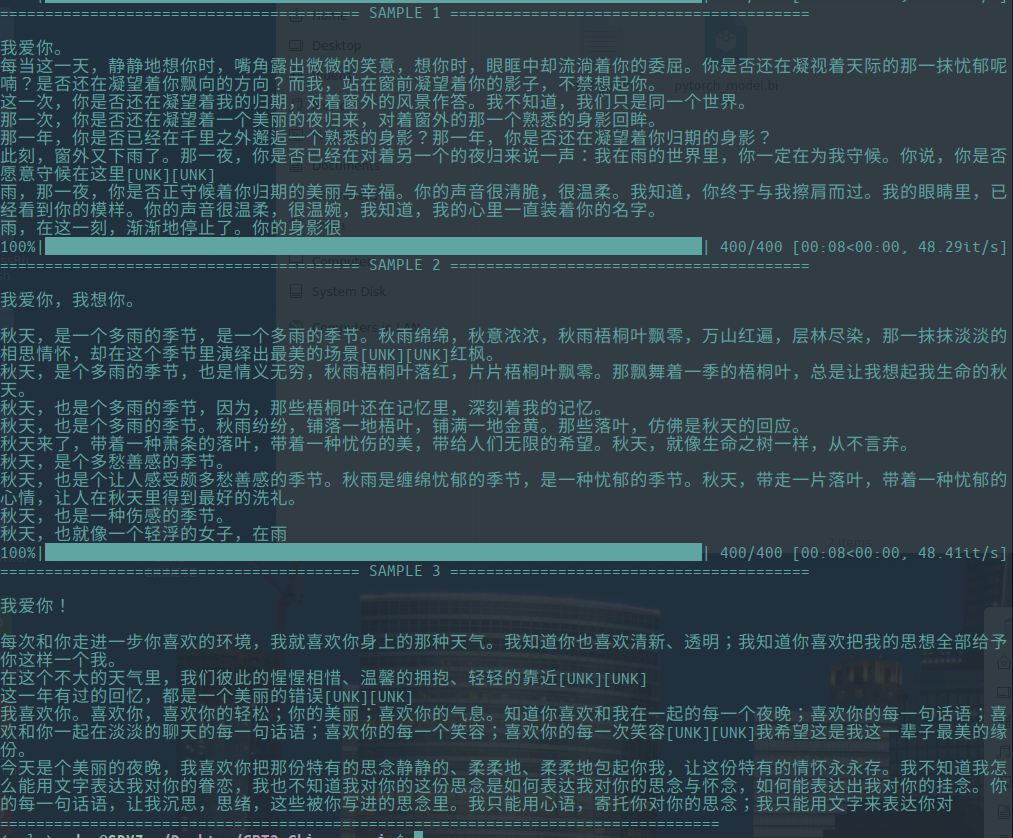

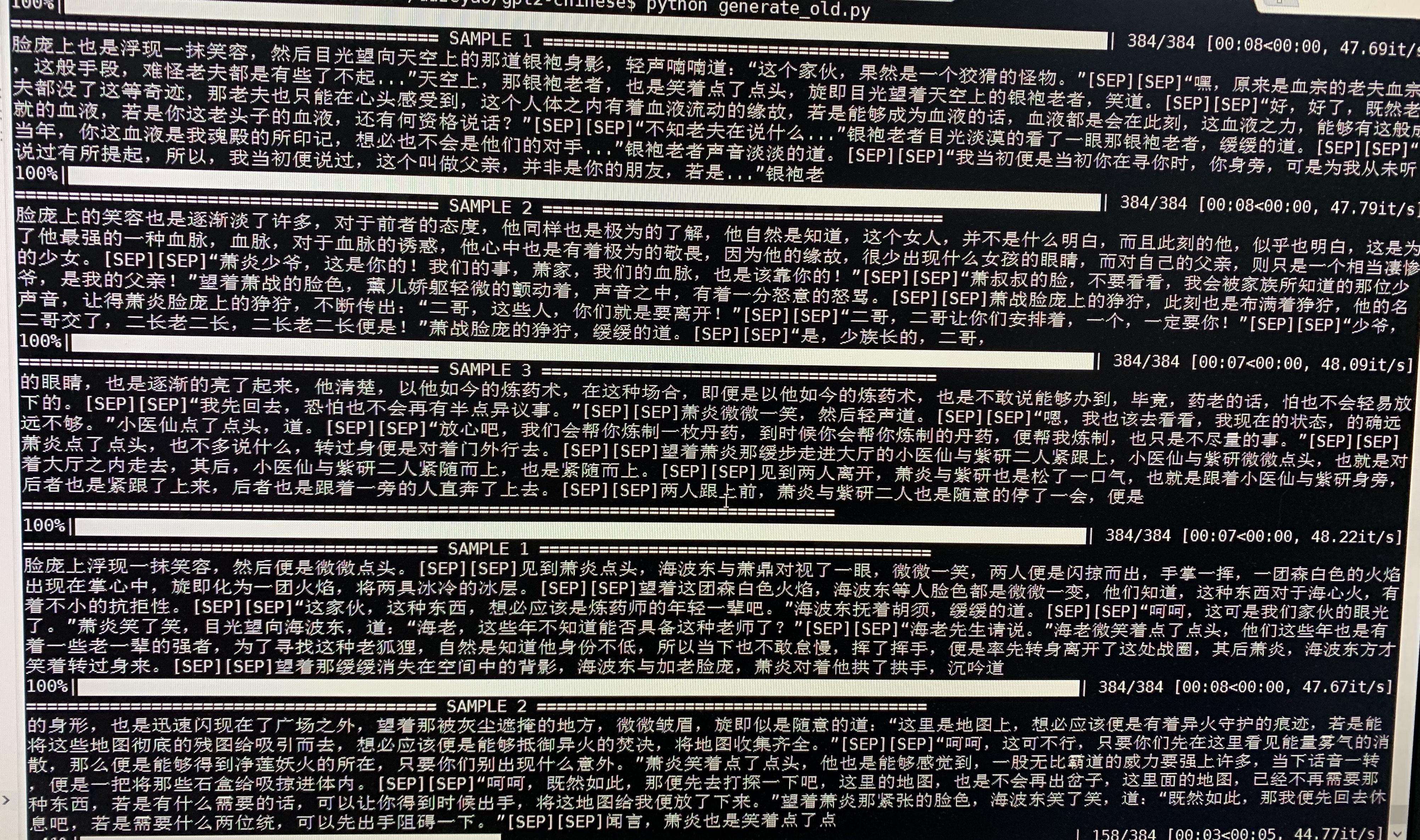

- The following is a sample generated for Doupo Cangqiong. A GPT2 model with about 50M parameters was trained on 16MB of Doupo Cangqiong novel text with a batch size of 32. Here, [SEP] denotes a line break.

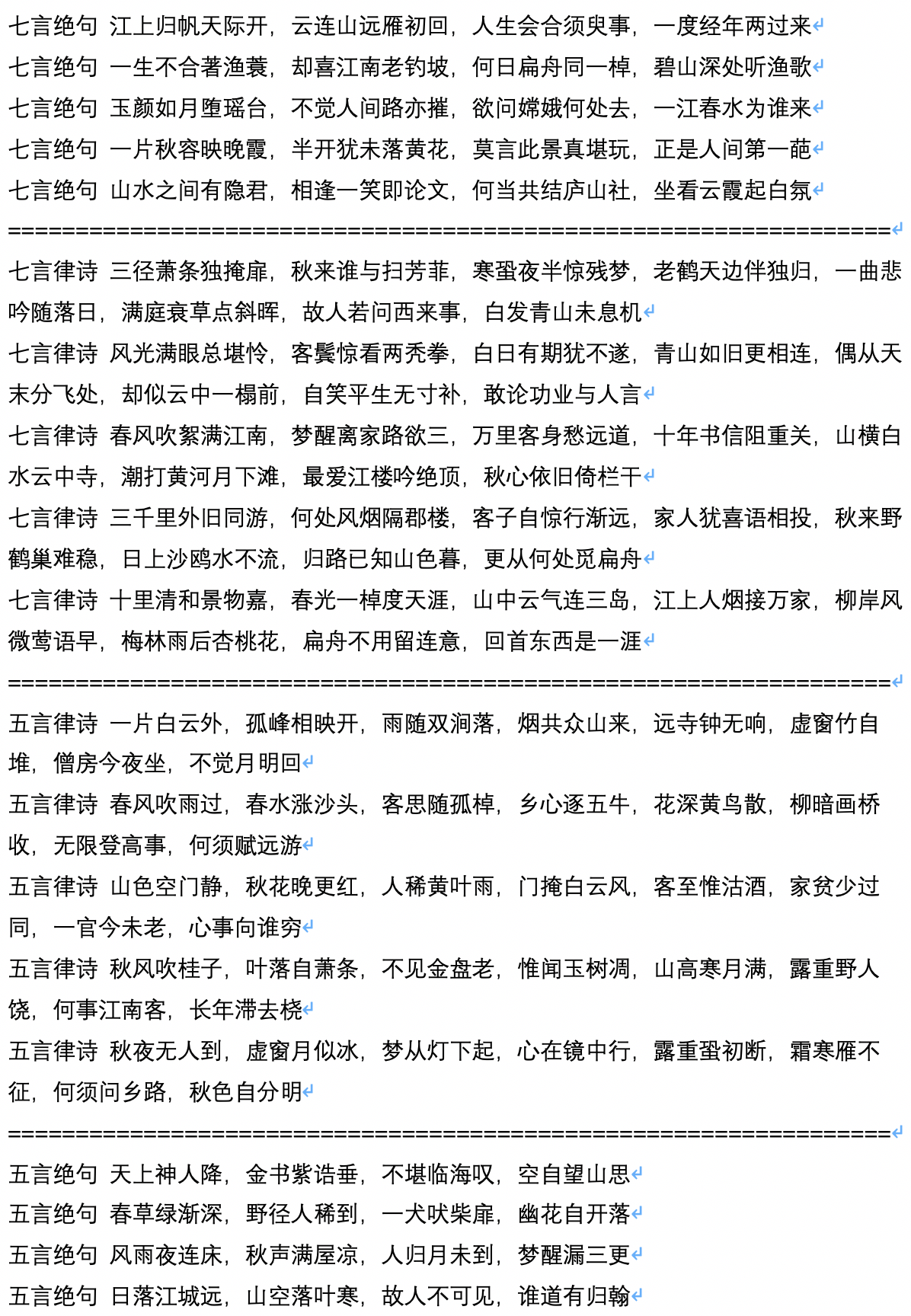

- The following are samples of ancient poem generation, computed and contributed by user JamesHujy.

- The following are samples of ancient poem generation with the genre constrained, computed and contributed by user JamesHujy.

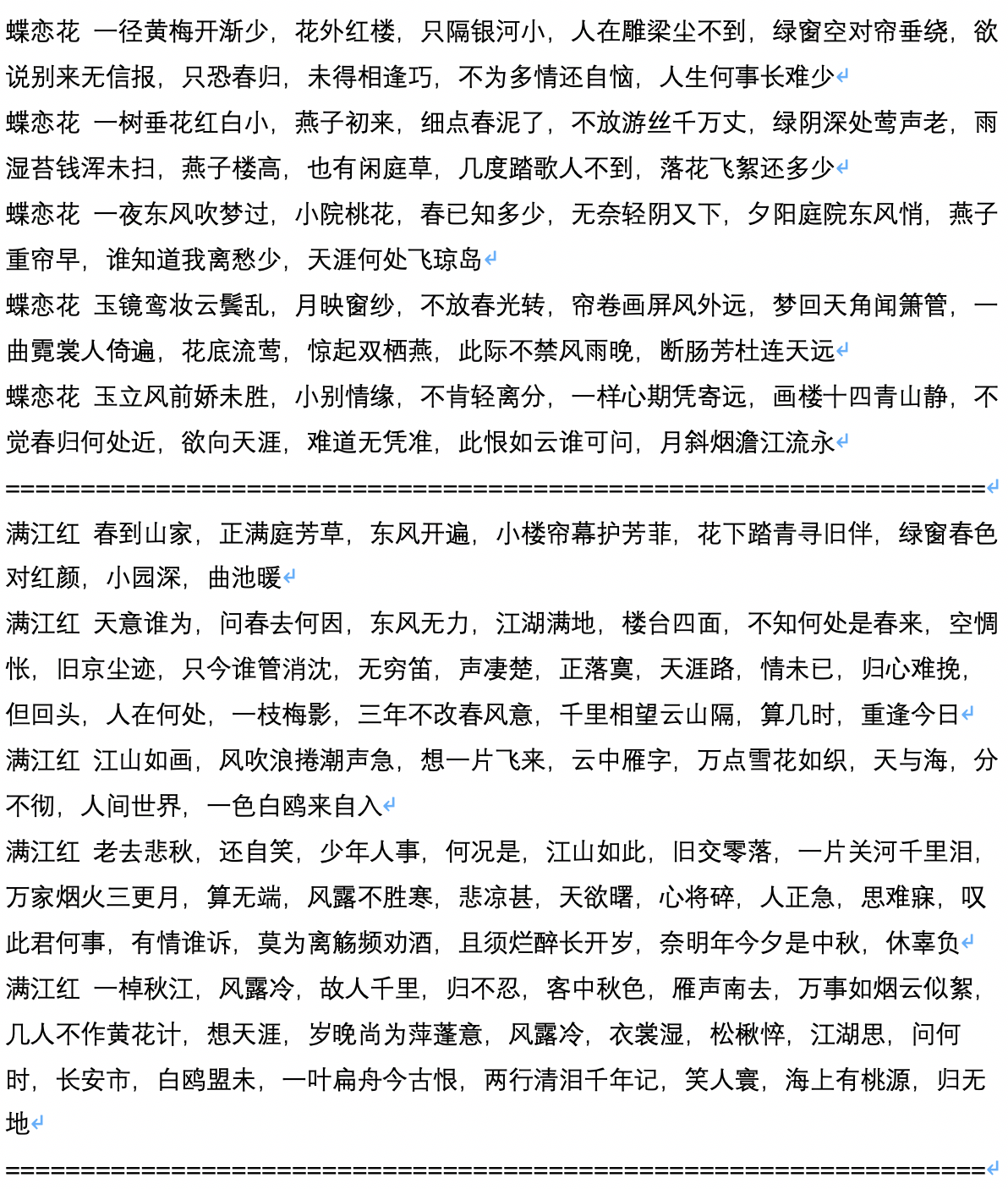

- The following is sample text from script generation, computed and contributed by user chiangandy.

[starttext]The plot of the love game tells the story of the cute love between father and daughter of piano, a audience with hard work and value for reality for life, and obtaining a series of love. The 1980s stock recording media was shared by netizens. It was the brother of the brand director of the brand of Chen Layun, the big country of Shanghai Huaihe River, a major country. Although the youth of the front-line company did not have a career, Lan Zhengshi refused to understand it. The emergence of Lan Yue's help concept has opened up a clear misunderstanding and business has become a love river. In a accidental TV series, the TV series changed its fate. The three of them were assigned to their creations in a car accident. They were asked about the misunderstanding and the low-key talent in the industry. Chen Zhao and Tang Shishiyan started a completely different "2014 relationship". The two had mutual character and had mutual cure. Although they were a small dormitory journey recorded by Beijing Huaqiao University, a post-90s generation, and outstanding young people such as Tang Ru and Sheng, how did people's lives go against their wishes and create together? And why did they have the success and concern of each other? [endtext]

[starttext] Learning Love mainly tells the story of two pairs of Xiaoman. After a ridiculous test, they finally chose three children and started a business together to create four children, and started a successful business in a big city. The two businesses joined Beijing. After a period of time, they got different things after the chaos and differences, and finally gained the true love of their dreams. The opening ceremony of the main entrepreneurial characters such as sponsor ideals, TV series, dramas, etc. was held in Beijing. The drama is based on the TV perspective of three newcomers in Hainan. It tells the story of several young people who have enhanced non-romantics in Beijing and comedy generations. With the unique dual-era young people from Beijing to Beijing, China's urbanization, China's big cities have gone out of development. With the changes in language cities, while their gradual lifestyles have staged such a simple vulgarity for their own direction. It is filmed in contemporary times. How to be in this city? So a calm city is the style of the city. Zhang Jiahe supports work creation, and this is a point that it is necessary to create an airport drama crew meeting. People who transform into chess and cultures are very unique and sensational, intertwined, funny, and come from the beautiful Northeast and the mainland, and the two girls dare to be called mute girls. The people in the interweaving made a joke, and the impressive temperament made people look very comedic. What they knew was a "Northeast" foreign family supporter, which made her look good at reading dramas. After that, Qi Fei, Qi Fei, Fan Er, Chu Yuezi and Bai Tianjie who expressed love for him. The friendship between the two generations seems to be without the combination of cheerful and wonderful expressions. [endtext]

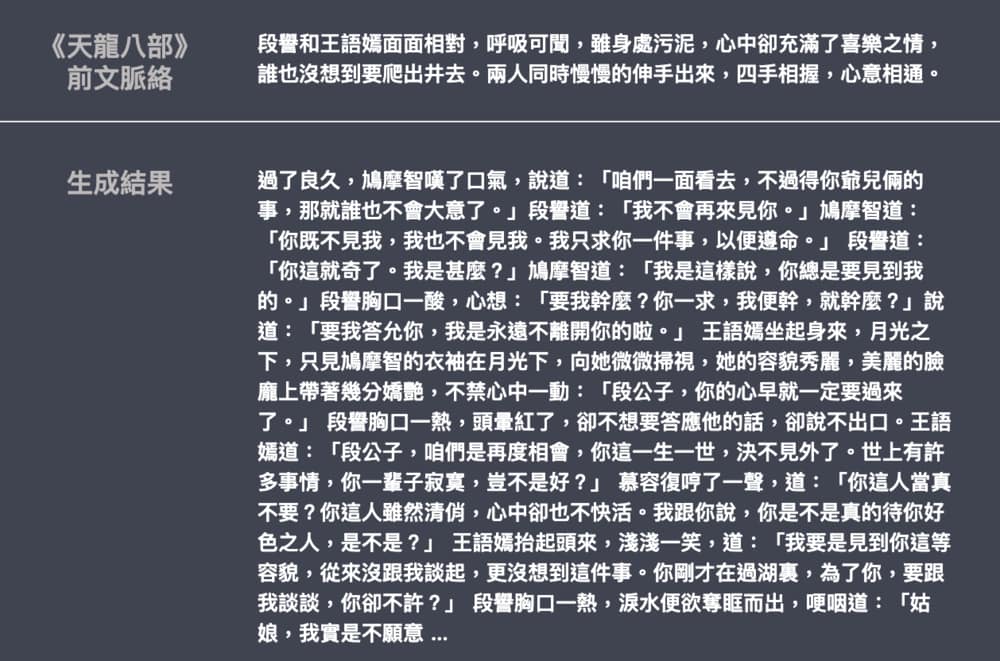

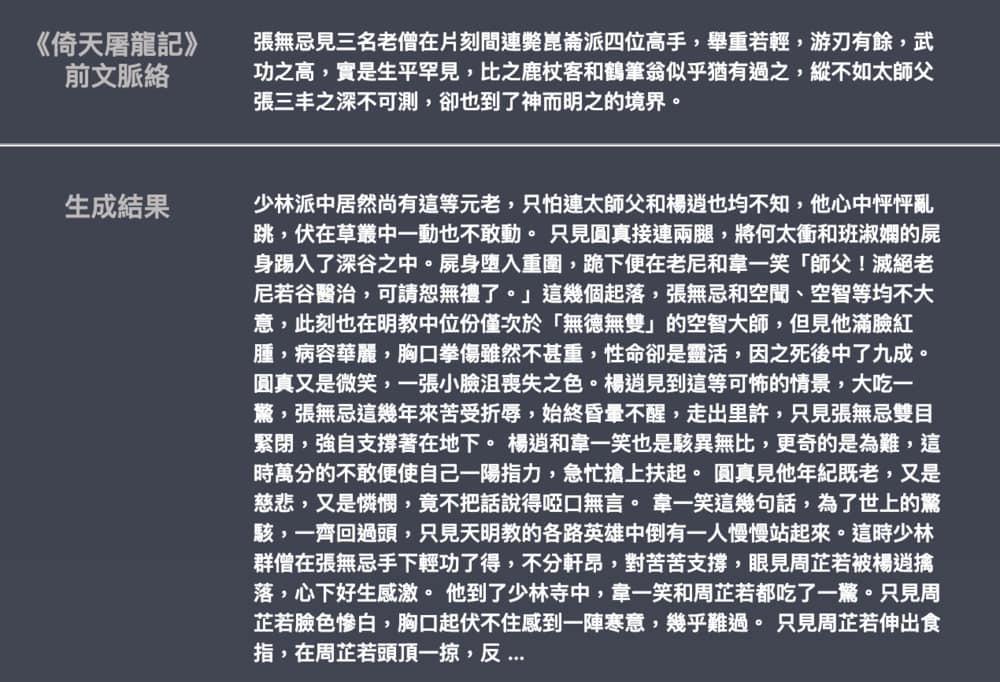

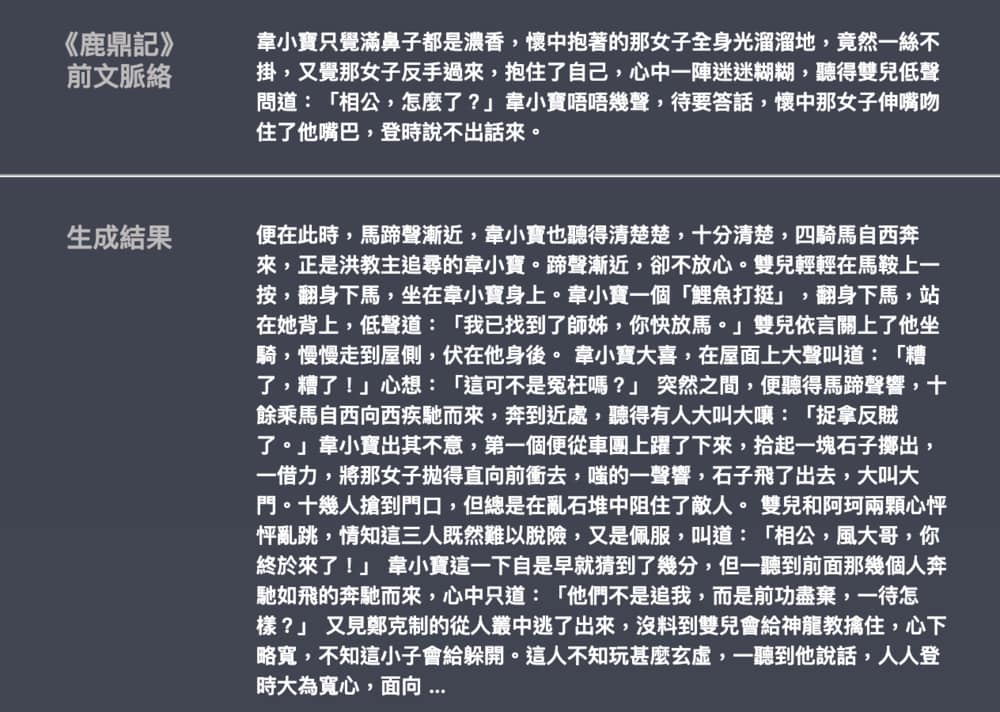

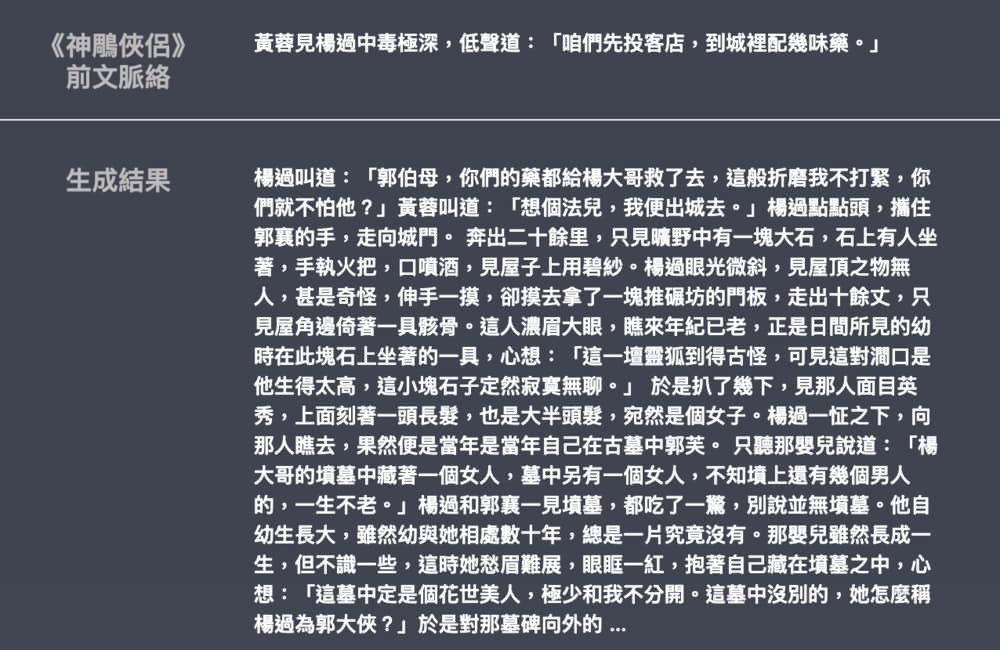

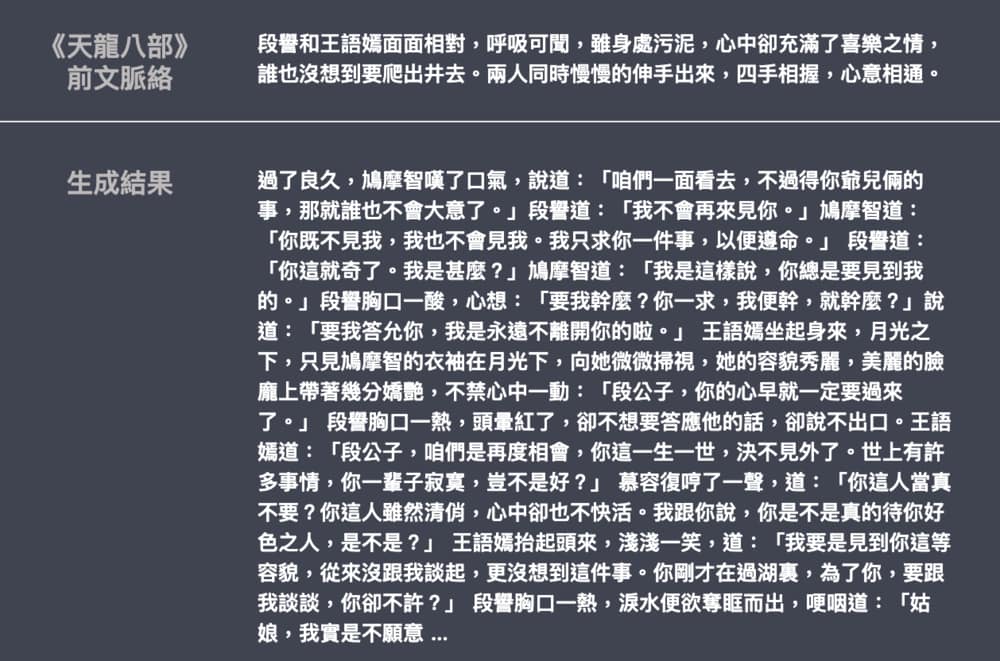

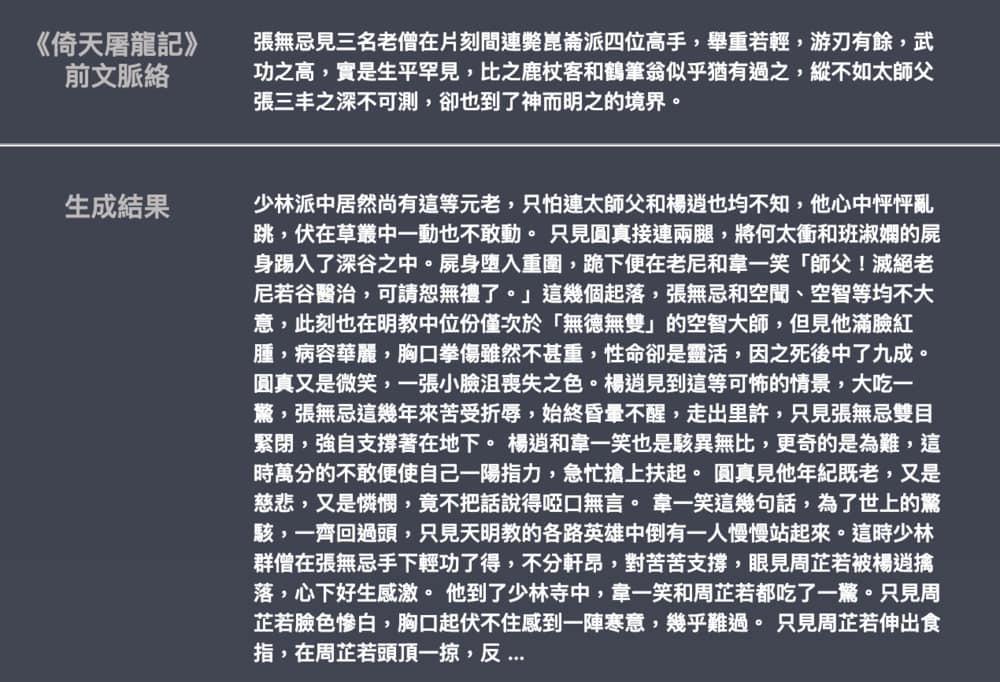

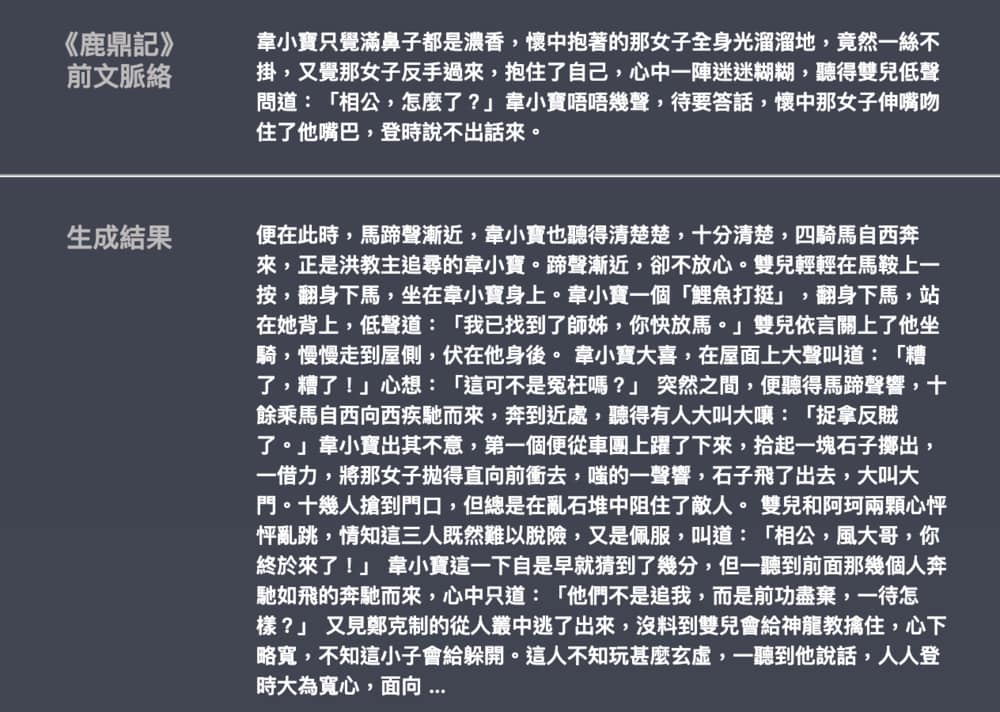

- The following are samples of Jin Yong wuxia novel generation, contributed by leemengtaiwan. The model size is about 82M, the corpus is 50MB, and the batch size is 16. An article is provided that gives an intuitive introduction to GPT-2 and shows how to visualize the self-attention mechanism. A Colab notebook and model are also provided so that anyone can generate new samples with one click.