Hugging Face • ModelScope • WeChat • Modelers

The Baixiaoying app has officially launched. It is an AI assistant that knows how to search and ask questions; look for it in the major app stores to download and try it.


[2023.12.29] We have released Baichuan2-13B-Chat v2.

The release version and download links are shown in the following table:

| | Base model | Aligned (Chat) model | Aligned model, 4-bit quantized |
|---|---|---|---|
| 7B | Baichuan2-7B-Base | Baichuan2-7B-Chat | Baichuan2-7B-Chat-4bits |
| 13B | Baichuan2-13B-Base | Baichuan2-13B-Chat | Baichuan2-13B-Chat-4bits |

We evaluated the models extensively on authoritative Chinese, English, and multilingual datasets in six areas: general, law, medicine, mathematics, code, and multilingual translation.

In the general domain, we ran 5-shot tests on the following datasets (BBH uses 3-shot, as the table notes).

| | C-Eval | MMLU | CMMLU | Gaokao | AGIEval | BBH |
|---|---|---|---|---|---|---|
| | 5-shot | 5-shot | 5-shot | 5-shot | 5-shot | 3-shot |
| GPT-4 | 68.40 | 83.93 | 70.33 | 66.15 | 63.27 | 75.12 |
| GPT-3.5 Turbo | 51.10 | 68.54 | 54.06 | 47.07 | 46.13 | 61.59 |
| LLaMA-7B | 27.10 | 35.10 | 26.75 | 27.81 | 28.17 | 32.38 |
| LLaMA2-7B | 28.90 | 45.73 | 31.38 | 25.97 | 26.53 | 39.16 |
| MPT-7B | 27.15 | 27.93 | 26.00 | 26.54 | 24.83 | 35.20 |
| Falcon-7B | 24.23 | 26.03 | 25.66 | 24.24 | 24.10 | 28.77 |
| ChatGLM2-6B | 50.20 | 45.90 | 49.00 | 49.44 | 45.28 | 31.65 |
| Baichuan-7B | 42.80 | 42.30 | 44.02 | 36.34 | 34.44 | 32.48 |
| Baichuan2-7B-Base | 54.00 | 54.16 | 57.07 | 47.47 | 42.73 | 41.56 |

| | C-Eval | MMLU | CMMLU | Gaokao | AGIEval | BBH |
|---|---|---|---|---|---|---|
| | 5-shot | 5-shot | 5-shot | 5-shot | 5-shot | 3-shot |
| GPT-4 | 68.40 | 83.93 | 70.33 | 66.15 | 63.27 | 75.12 |
| GPT-3.5 Turbo | 51.10 | 68.54 | 54.06 | 47.07 | 46.13 | 61.59 |
| LLaMA-13B | 28.50 | 46.30 | 31.15 | 28.23 | 28.22 | 37.89 |
| LLaMA2-13B | 35.80 | 55.09 | 37.99 | 30.83 | 32.29 | 46.98 |
| Vicuna-13B | 32.80 | 52.00 | 36.28 | 30.11 | 31.55 | 43.04 |
| Chinese-Alpaca-Plus-13B | 38.80 | 43.90 | 33.43 | 34.78 | 35.46 | 28.94 |
| XVERSE-13B | 53.70 | 55.21 | 58.44 | 44.69 | 42.54 | 38.06 |
| Baichuan-13B-Base | 52.40 | 51.60 | 55.30 | 49.69 | 43.20 | 43.01 |
| Baichuan2-13B-Base | 58.10 | 59.17 | 61.97 | 54.33 | 48.17 | 48.78 |

In the field of law, we used the JEC-QA dataset, which is derived from the China National Judicial Examination. We retained only the single-choice questions and adopted an evaluation scheme similar to that of C-Eval.

For the medical field, we used the medicine-related subjects from the general-domain datasets (C-Eval, MMLU, CMMLU) together with MedQA and MedMCQA, again with an evaluation scheme similar to that of C-Eval.

We ran 5-shot tests on the above datasets.

| | JEC-QA | CEval-MMLU-CMMLU | MedQA-USMLE | MedQA-MCMLE | MedMCQA |
|---|---|---|---|---|---|
| | 5-shot | 5-shot | 5-shot | 5-shot | 5-shot |
| GPT-4 | 59.32 | 77.16 | 80.28 | 74.58 | 72.51 |
| GPT-3.5 Turbo | 42.31 | 61.17 | 53.81 | 52.92 | 56.25 |
| LLaMA-7B | 27.45 | 33.34 | 24.12 | 21.72 | 27.45 |
| LLaMA2-7B | 29.20 | 36.75 | 27.49 | 24.78 | 37.93 |
| MPT-7B | 27.45 | 26.67 | 16.97 | 19.79 | 31.96 |
| Falcon-7B | 23.66 | 25.33 | 21.29 | 18.07 | 33.88 |
| ChatGLM2-6B | 40.76 | 44.54 | 26.24 | 45.53 | 30.22 |
| Baichuan-7B | 34.64 | 42.37 | 27.42 | 39.46 | 31.39 |
| Baichuan2-7B-Base | 44.46 | 56.39 | 32.68 | 54.93 | 41.73 |

| | JEC-QA | CEval-MMLU-CMMLU | MedQA-USMLE | MedQA-MCMLE | MedMCQA |
|---|---|---|---|---|---|
| | 5-shot | 5-shot | 5-shot | 5-shot | 5-shot |
| GPT-4 | 59.32 | 77.16 | 80.28 | 74.58 | 72.51 |
| GPT-3.5 Turbo | 42.31 | 61.17 | 53.81 | 52.92 | 56.25 |
| LLaMA-13B | 27.54 | 35.14 | 28.83 | 23.38 | 39.52 |
| LLaMA2-13B | 34.08 | 47.42 | 35.04 | 29.74 | 42.12 |
| Vicuna-13B | 28.38 | 40.99 | 34.80 | 27.67 | 40.66 |
| Chinese-Alpaca-Plus-13B | 35.32 | 46.31 | 27.49 | 32.66 | 35.87 |
| XVERSE-13B | 46.42 | 58.08 | 32.99 | 58.76 | 41.34 |
| Baichuan-13B-Base | 41.34 | 51.77 | 29.07 | 43.67 | 39.60 |
| Baichuan2-13B-Base | 47.40 | 59.33 | 40.38 | 61.62 | 42.86 |

In mathematics, we used the OpenCompass evaluation framework to run 4-shot tests on the GSM8K and MATH datasets.

For code, we used the HumanEval and MBPP datasets, testing HumanEval 0-shot and MBPP 3-shot with OpenCompass.

| | GSM8K | MATH | HumanEval | MBPP |
|---|---|---|---|---|
| | 4-shot | 4-shot | 0-shot | 3-shot |
| GPT-4 | 89.99 | 40.20 | 69.51 | 63.60 |
| GPT-3.5 Turbo | 57.77 | 13.96 | 52.44 | 61.40 |
| LLaMA-7B | 9.78 | 3.02 | 11.59 | 14.00 |
| LLaMA2-7B | 16.22 | 3.24 | 12.80 | 14.80 |
| MPT-7B | 8.64 | 2.90 | 14.02 | 23.40 |
| Falcon-7B | 5.46 | 1.68 | - | 10.20 |
| ChatGLM2-6B | 28.89 | 6.40 | 9.15 | 9.00 |
| Baichuan-7B | 9.17 | 2.54 | 9.20 | 6.60 |
| Baichuan2-7B-Base | 24.49 | 5.58 | 18.29 | 24.20 |

| | GSM8K | MATH | HumanEval | MBPP |
|---|---|---|---|---|
| | 4-shot | 4-shot | 0-shot | 3-shot |
| GPT-4 | 89.99 | 40.20 | 69.51 | 63.60 |
| GPT-3.5 Turbo | 57.77 | 13.96 | 52.44 | 61.40 |
| LLaMA-13B | 20.55 | 3.68 | 15.24 | 21.40 |
| LLaMA2-13B | 28.89 | 4.96 | 15.24 | 27.00 |
| Vicuna-13B | 28.13 | 4.36 | 16.46 | 15.00 |
| Chinese-Alpaca-Plus-13B | 11.98 | 2.50 | 16.46 | 20.00 |
| XVERSE-13B | 18.20 | 2.18 | 15.85 | 16.80 |
| Baichuan-13B-Base | 26.76 | 4.84 | 11.59 | 22.80 |
| Baichuan2-13B-Base | 52.77 | 10.08 | 17.07 | 30.20 |

We used the Flores-101 dataset to evaluate the multilingual capability of the models. Flores-101 covers 101 languages, and its data comes from sources such as news, travel guides, and books. We selected the official UN languages (Arabic, Chinese, English, French, Russian, and Spanish) plus German and Japanese as the test languages. Using OpenCompass, we ran 8-shot tests on seven Flores-101 subtasks: Chinese-English, Chinese-French, Chinese-Spanish, Chinese-Arabic, Chinese-Russian, Chinese-Japanese, and Chinese-German.

| | CN-EN | CN-FR | CN-ES | CN-AR | CN-RU | CN-JP | CN-DE | Average |
|---|---|---|---|---|---|---|---|---|
| GPT-4 | 29.94 | 29.56 | 20.01 | 10.76 | 18.62 | 13.26 | 20.83 | 20.43 |
| GPT-3.5 Turbo | 27.67 | 26.15 | 19.58 | 10.73 | 17.45 | 1.82 | 19.70 | 17.59 |
| LLaMA-7B | 17.27 | 12.02 | 9.54 | 0.00 | 4.47 | 1.41 | 8.73 | 7.63 |
| LLaMA2-7B | 25.76 | 15.14 | 11.92 | 0.79 | 4.99 | 2.20 | 10.15 | 10.14 |
| MPT-7B | 20.77 | 9.53 | 8.96 | 0.10 | 3.54 | 2.91 | 6.54 | 7.48 |
| Falcon-7B | 22.13 | 15.67 | 9.28 | 0.11 | 1.35 | 0.41 | 6.41 | 7.91 |
| ChatGLM2-6B | 22.28 | 9.42 | 7.77 | 0.64 | 1.78 | 0.26 | 4.61 | 6.68 |
| Baichuan-7B | 25.07 | 16.51 | 12.72 | 0.41 | 6.66 | 2.24 | 9.86 | 10.50 |
| Baichuan2-7B-Base | 27.27 | 20.87 | 16.17 | 1.39 | 11.21 | 3.11 | 12.76 | 13.25 |

| | CN-EN | CN-FR | CN-ES | CN-AR | CN-RU | CN-JP | CN-DE | Average |
|---|---|---|---|---|---|---|---|---|
| GPT-4 | 29.94 | 29.56 | 20.01 | 10.76 | 18.62 | 13.26 | 20.83 | 20.43 |
| GPT-3.5 Turbo | 27.67 | 26.15 | 19.58 | 10.73 | 17.45 | 1.82 | 19.70 | 17.59 |
| LLaMA-13B | 21.75 | 16.16 | 13.29 | 0.58 | 7.61 | 0.41 | 10.66 | 10.07 |
| LLaMA2-13B | 25.44 | 19.25 | 17.49 | 1.38 | 10.34 | 0.13 | 11.13 | 12.17 |
| Vicuna-13B | 22.63 | 18.04 | 14.67 | 0.70 | 9.27 | 3.59 | 10.25 | 11.31 |
| Chinese-Alpaca-Plus-13B | 22.53 | 13.82 | 11.29 | 0.28 | 1.52 | 0.31 | 8.13 | 8.27 |
| XVERSE-13B | 29.26 | 24.03 | 16.67 | 2.78 | 11.61 | 3.08 | 14.26 | 14.53 |
| Baichuan-13B-Base | 30.24 | 20.90 | 15.92 | 0.98 | 9.65 | 2.64 | 12.00 | 13.19 |
| Baichuan2-13B-Base | 30.61 | 22.11 | 17.27 | 2.39 | 14.17 | 11.58 | 14.53 | 16.09 |
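As a quick consistency check on the Flores-101 results, the Average column appears to be the unweighted mean of the seven translation subtasks. The sketch below recomputes it for two rows copied from the table; the variable and function names are illustrative only.

```python
# Per-subtask scores copied from the Flores-101 table
# (order: CN-EN, CN-FR, CN-ES, CN-AR, CN-RU, CN-JP, CN-DE).
flores_scores = {
    "GPT-4": [29.94, 29.56, 20.01, 10.76, 18.62, 13.26, 20.83],
    "Baichuan2-13B-Base": [30.61, 22.11, 17.27, 2.39, 14.17, 11.58, 14.53],
}


def mean_score(scores):
    """Unweighted mean of the subtask scores, rounded to two decimals."""
    return round(sum(scores) / len(scores), 2)


averages = {name: mean_score(s) for name, s in flores_scores.items()}
for name, avg in averages.items():
    print(f"{name}: {avg:.2f}")
```

Both recomputed values match the Average column reported for those rows.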

The model weights, source code, and configuration required for inference have been published on Hugging Face; see the first table of this document for the download links. We demonstrate several ways to run inference here. The program automatically downloads the required resources from Hugging Face.

    pip install -r requirements.txt

Inference with the Chat model:

    >>> import torch
    >>> from transformers import AutoModelForCausalLM, AutoTokenizer
    >>> from transformers.generation.utils import GenerationConfig
    >>> tokenizer = AutoTokenizer.from_pretrained("baichuan-inc/Baichuan2-13B-Chat", use_fast=False, trust_remote_code=True)
    >>> model = AutoModelForCausalLM.from_pretrained("baichuan-inc/Baichuan2-13B-Chat", device_map="auto", torch_dtype=torch.bfloat16, trust_remote_code=True)
    >>> model.generation_config = GenerationConfig.from_pretrained("baichuan-inc/Baichuan2-13B-Chat")
    >>> messages = []
    >>> # Prompt: 'Explain "温故而知新"' (a saying from the Analects)
    >>> messages.append({"role": "user", "content": "解释一下“温故而知新”"})
    >>> response = model.chat(tokenizer, messages)
    >>> print(response)
    "温故而知新"是一句中国古代的成语,出自《论语·为政》篇。这句话的意思是:通过回顾过去,我们可以发现新的知识和理解。换句话说,学习历史和经验可以让我们更好地理解现在和未来。

    这句话鼓励我们在学习和生活中不断地回顾和反思过去的经验,从而获得新的启示和成长。通过重温旧的知识和经历,我们可以发现新的观点和理解,从而更好地应对不断变化的世界和挑战。

The reply, in Chinese, explains that the saying comes from the Analects and means gaining new knowledge and understanding by reviewing the past.

Inference with the Base model:

    >>> from transformers import AutoModelForCausalLM, AutoTokenizer
    >>> tokenizer = AutoTokenizer.from_pretrained("baichuan-inc/Baichuan2-13B-Base", trust_remote_code=True)
    >>> model = AutoModelForCausalLM.from_pretrained("baichuan-inc/Baichuan2-13B-Base", device_map="auto", trust_remote_code=True)
    >>> # Completion prompt in "poem title->poet" form; the model fills in the second poet
    >>> inputs = tokenizer('登鹳雀楼->王之涣\n夜雨寄北->', return_tensors='pt')
    >>> inputs = inputs.to('cuda:0')
    >>> pred = model.generate(**inputs, max_new_tokens=64, repetition_penalty=1.1)
    >>> print(tokenizer.decode(pred.cpu()[0], skip_special_tokens=True))
    登鹳雀楼->王之涣
    夜雨寄北->李商隐

In both examples above, the model is loaded with `device_map='auto'`, so all available GPUs are used. To restrict which devices are used, set an environment variable such as `export CUDA_VISIBLE_DEVICES=0,1` (which uses GPUs 0 and 1).
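The same device restriction can also be applied from inside a Python script, provided the variable is set before CUDA is initialized (most simply, before importing torch). A minimal sketch; the helper name `restrict_visible_gpus` is hypothetical.

```python
import os


def restrict_visible_gpus(device_ids):
    """Expose only the given GPU indices to this process via CUDA_VISIBLE_DEVICES."""
    value = ",".join(str(i) for i in device_ids)
    os.environ["CUDA_VISIBLE_DEVICES"] = value
    return value


# Call this before importing torch/transformers so the setting takes effect.
visible = restrict_visible_gpus([0, 1])
print(visible)
```

Inside the process, the selected GPUs are then renumbered from 0, so `device_map="auto"` only sees those devices.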

    python cli_demo.py

This command-line tool is designed for chat scenarios, so it does not support Base models.
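In a chat scenario, a multi-turn conversation is typically carried by appending each reply to the same `messages` list used in the Chat model example. The sketch below shows that bookkeeping with a stand-in `fake_chat` function (hypothetical, so the snippet runs without downloading a model); with a real model the call would be `model.chat(tokenizer, messages)`. The `"assistant"` role name is an assumption based on common chat-message conventions.

```python
def fake_chat(messages):
    """Stand-in for model.chat(tokenizer, messages): echoes the last user turn."""
    return "echo: " + messages[-1]["content"]


def ask(messages, user_text, chat_fn=fake_chat):
    """Append a user turn, get a reply, and record it so later turns see the history."""
    messages.append({"role": "user", "content": user_text})
    reply = chat_fn(messages)
    messages.append({"role": "assistant", "content": reply})
    return reply


messages = []
first = ask(messages, "Hello")
second = ask(messages, "What did I just say?")
print(len(messages))
```

Keeping the full history in one list is what lets the model refer back to earlier turns.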

The web demo relies on Streamlit. Running the following command starts a local web service; open the address printed in the console in your browser. This web demo is designed for chat scenarios, so it does not support Base models.

    streamlit run web_demo.py

Example conversations with Baichuan 2 (user turns and replies were originally in Chinese):

User: Find the median of the following array [3.1,6.2,1.3,8.4,10.5,11.6,2.1]. Please implement this in Python.

Baichuan 2:

    def find_median(numbers):
        numbers.sort()
        length = len(numbers)
        if length % 2 == 0:
            return (numbers[length // 2 - 1] + numbers[length // 2]) / 2
        else:
            return numbers[length // 2]

    numbers = [3.1, 6.2, 1.3, 8.4, 10.5, 11.6, 2.1]
    print(find_median(numbers))
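The generated function can be cross-checked against Python's standard library. This sketch compares a non-mutating variant with `statistics.median` on the same input; using `sorted()` avoids the in-place `sort()` side effect of the model's version.

```python
import statistics

numbers = [3.1, 6.2, 1.3, 8.4, 10.5, 11.6, 2.1]


def find_median(values):
    """Median without mutating the caller's list (sorted() returns a new list)."""
    ordered = sorted(values)
    mid = len(ordered) // 2
    if len(ordered) % 2 == 0:
        return (ordered[mid - 1] + ordered[mid]) / 2
    return ordered[mid]


result = find_median(numbers)
reference = statistics.median(numbers)
print(result, reference)
```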

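User: Chickens and rabbits are in the same cage. There are 26 heads and 68 feet in total. How many chickens and how many rabbits are there?

Baichuan 2: We can solve this problem algebraically. Let the number of chickens be x and the number of rabbits be y. From the information given, we get the following two equations:

1. x + y = 26 (total number of heads)

2. 2x + 4y = 68 (total number of feet)

Now we need to solve this system of two linear equations. First, solve the first equation for x:

x = 26 - y

Then substitute this expression for x into the second equation:

2(26 - y) + 4y = 68

52 - 2y + 4y = 68

2y = 16

Next, solve for y:

y = 16 / 2

y = 8

So there are 8 rabbits. Substituting the value of y into the expression for x gives the number of chickens:

x = 26 - 8

x = 18

So there are 18 chickens and 8 rabbits.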
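The algebra in this answer can be verified mechanically. The sketch below solves the same two equations by elimination and checks the result; the function name is illustrative.

```python
def solve_heads_and_feet(heads, feet):
    """Solve x + y = heads, 2x + 4y = feet for (chickens x, rabbits y)."""
    # Subtracting twice the first equation from the second leaves 2y = feet - 2*heads.
    rabbits, remainder = divmod(feet - 2 * heads, 2)
    chickens = heads - rabbits
    if remainder != 0 or rabbits < 0 or chickens < 0:
        raise ValueError("no non-negative integer solution")
    return chickens, rabbits


chickens, rabbits = solve_heads_and_feet(26, 68)
print(chickens, rabbits)
```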

User: From now on, please translate every sentence I say into English. Are you ready?

Baichuan 2: I'm ready to translate every word you say next into English.

User: 你好

Baichuan 2: Hello

User: 我是Baichuan 2,很高兴认识大家

Baichuan 2: I am Baichuan 2, and I am very happy to meet everyone.

User: 希望通过大家的共同努力,早日迎接AGI时代的到来

Baichuan 2: I hope through the joint efforts of all of us, we can welcome the arrival of the AGI era as soon as possible.

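User: Which of the following statements about basic physics is wrong? A. In a natural environment, sound travels fastest in solids. B. Newton's first law: an object that is not acted on by a force remains at rest or in uniform straight-line motion. C. Newton's third law: for every action force, there is an equal and opposite reaction force. D. The speed of sound in air is 1000 m/s.

Baichuan 2: This question tests the understanding and application of basic physical concepts and principles.

Option A: Sound needs a medium to propagate. Among the three kinds of media (solids, liquids, and gases), sound travels fastest in solids, so A is correct. Option B: Newton's first law is the law of inertia. It states that an object not acted on by an external force either remains at rest or remains in uniform straight-line motion until an external force compels it to change its state of motion, so B is correct. Option C: Newton's third law states that for every action force there is a reaction force of equal magnitude and opposite direction, and that the two forces act on the two interacting objects respectively, so C is wrong [sic]. Option D: Sound propagates through air as a wave, and its speed depends on factors such as the temperature and humidity of the medium. Under typical conditions the speed of sound in air is about 340 m/s, not 1000 m/s, so D is wrong. Therefore, the correct answer to this question is D.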

User: I'm Mike. I am going to have a busy weekend. On Saturday, I am going to learn how to swim. I will go with my father. Then we are going to have lunch in the restaurant. In the afternoon, we are going to the zoo. We are going to see the pandas. On Sunday, I will finish my homework in the morning. In the afternoon, my parents and I are going to visit my grandparents. We will have dinner together.

Read the passage and judge whether each statement is true or false. Write T for true and F for false:

1. Mike is going to have a busy weekend ( )

2. Mike is going to learn how to swim with his father ( )

3. They are going to have lunch at home ( )

Baichuan 2:

1. T (true)

2. T (true)

3. F (false)

To let different users and platforms run Baichuan 2, we have quantized the Baichuan 2 models (Baichuan2-7B-Chat and Baichuan2-13B-Chat) so that users can deploy them quickly and efficiently on their own platforms.

Baichuan 2 adopts the quantization approach that is mainstream in the community: BitsAndBytes. This method loses almost no model quality, is integrated into the transformers library, and is widely used in the community. BitsAndBytes supports 8-bit and 4-bit quantization, and its 4-bit mode supports the FP4 and NF4 formats. Baichuan 2 uses NF4 as the data type for 4-bit quantization.

Based on this quantization method, Baichuan 2 supports two modes: online quantization and offline quantization.

For online quantization, we support 8-bit and 4-bit quantization. The usage is similar to that in the Baichuan-13B project: load the model into CPU memory, call the quantize() interface to quantize it, and then call cuda() to copy the quantized weights to GPU memory. The code for the whole loading process is short. Taking Baichuan2-7B-Chat as an example:

8-bit online quantization:

    model = AutoModelForCausalLM.from_pretrained("baichuan-inc/Baichuan2-7B-Chat", torch_dtype=torch.float16, trust_remote_code=True)
    model = model.quantize(8).cuda()

4-bit online quantization:

    model = AutoModelForCausalLM.from_pretrained("baichuan-inc/Baichuan2-7B-Chat", torch_dtype=torch.float16, trust_remote_code=True)
    model = model.quantize(4).cuda()

Note that users typically pass `device_map="auto"` to `from_pretrained`. With online quantization this parameter must be removed; otherwise an error is raised.

For convenience, we provide the offline-quantized Baichuan2-7B-Chat-4bits for download. Loading it takes a single call:

    model = AutoModelForCausalLM.from_pretrained("baichuan-inc/Baichuan2-7B-Chat-4bits", device_map="auto", trust_remote_code=True)

We do not provide an offline 8-bit version, because the Hugging Face transformers library already provides an API for saving and loading 8-bit quantized models. Users can save and load an 8-bit model as follows:

    # Model saving: model_id is the original model directory, and quant8_saved_dir is the directory where the 8-bit quantized model is saved.
    model = AutoModelForCausalLM.from_pretrained(model_id, load_in_8bit=True, device_map="auto", trust_remote_code=True)
    model.save_pretrained(quant8_saved_dir)
    # Model loading: reload the saved 8-bit model from quant8_saved_dir.
    model = AutoModelForCausalLM.from_pretrained(quant8_saved_dir, device_map="auto", trust_remote_code=True)

Comparison of GPU memory usage before and after quantization (GPU Mem in GB):

| Precision | Baichuan2-7B | Baichuan2-13B |
|---|---|---|
| bf16 / fp16 | 15.3 | 27.5 |
| 8bits | 8.0 | 16.1 |
| 4bits | 5.1 | 8.6 |
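To put the memory figures in relative terms, the sketch below computes how much GPU memory each quantized setting saves compared with bf16/fp16, using only the numbers listed in the table. The names are illustrative.

```python
# GPU memory figures (GB) copied from the table.
gpu_mem_gb = {
    "Baichuan2-7B": {"bf16/fp16": 15.3, "8bits": 8.0, "4bits": 5.1},
    "Baichuan2-13B": {"bf16/fp16": 27.5, "8bits": 16.1, "4bits": 8.6},
}


def savings_percent(model, precision):
    """Memory saved relative to the bf16/fp16 baseline, in percent."""
    baseline = gpu_mem_gb[model]["bf16/fp16"]
    return round((1 - gpu_mem_gb[model][precision] / baseline) * 100, 1)


for model in gpu_mem_gb:
    for precision in ("8bits", "4bits"):
        print(model, precision, savings_percent(model, precision))
```

On these figures, 4-bit quantization cuts memory use by about two thirds for both model sizes.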

The benchmark results after quantization, compared with the original versions, are shown below:

| Model (5-shot) | C-Eval | MMLU | CMMLU |
|---|---|---|---|
| Baichuan2-13B-Chat | 56.74 | 57.32 | 59.68 |
| Baichuan2-13B-Chat-4bits | 56.05 | 56.24 | 58.82 |
| Baichuan2-7B-Chat | 54.35 | 52.93 | 54.99 |
| Baichuan2-7B-Chat-4bits | 53.04 | 51.72 | 52.84 |

C-Eval results are measured on its val set.

As the table shows, 4-bit quantization costs about 1-2 percentage points of accuracy relative to bfloat16.
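The size of the accuracy loss can be read directly off the quantization results. The sketch below computes the per-benchmark drop in percentage points from the reported scores; on these numbers the drops range from roughly 0.7 to 2.2 points.

```python
# 5-shot scores copied from the table: (original, 4-bit) per benchmark.
scores = {
    "Baichuan2-13B-Chat": {"C-Eval": (56.74, 56.05), "MMLU": (57.32, 56.24), "CMMLU": (59.68, 58.82)},
    "Baichuan2-7B-Chat": {"C-Eval": (54.35, 53.04), "MMLU": (52.93, 51.72), "CMMLU": (54.99, 52.84)},
}


def drops(model):
    """Accuracy lost by the 4-bit version on each benchmark, in percentage points."""
    return {bench: round(orig - quant, 2) for bench, (orig, quant) in scores[model].items()}


all_drops = {model: drops(model) for model in scores}
for model, per_bench in all_drops.items():
    print(model, per_bench)
```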

Baichuan 2 supports CPU inference, although CPU inference is relatively slow. Modify the model loading as follows:

    # Taking Baichuan2-7B-Chat as an example
    model = AutoModelForCausalLM.from_pretrained("baichuan-inc/Baichuan2-7B-Chat", torch_dtype=torch.float32, trust_remote_code=True)

Many users have already done a great deal of optimization work on Baichuan 1 (Baichuan-7B, Baichuan-13B), such as compilation optimization and quantization. To apply this work to Baichuan 2 at zero cost, users can convert a Baichuan 2 model offline, after which it can be used as a Baichuan 1 model. Specifically, the following script normalizes the final lm_head layer of the Baichuan 2 model offline and replaces lm_head.weight. After the replacement, the converted model can be compiled and optimized like a Baichuan 1 model.
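To make the normalization step concrete before the conversion script: by default, `torch.nn.functional.normalize` divides each row of a 2-D tensor by its L2 norm (p=2, dim=1), so every row of lm_head.weight ends up with unit length. The plain-Python sketch below mirrors that behavior on a toy matrix. It is an illustration only, not the conversion script, and the function name is hypothetical.

```python
import math


def l2_normalize_rows(matrix, eps=1e-12):
    """Divide each row by its L2 norm (clamped below by eps to avoid division by zero)."""
    normalized = []
    for row in matrix:
        norm = math.sqrt(sum(v * v for v in row))
        denom = max(norm, eps)
        normalized.append([v / denom for v in row])
    return normalized


# Toy stand-in for lm_head.weight: two "vocabulary" rows of dimension 2.
toy_lm_head = [[3.0, 4.0], [0.0, 2.0]]
rows = l2_normalize_rows(toy_lm_head)
print(rows)
```

After normalization, only the direction of each row is kept; its original length is discarded.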

    import os
    import torch

    ori_model_dir = 'your Baichuan 2 model directory'
    # To avoid overwriting the original model, save the converted model to a different directory
    new_model_dir = 'your normalized lm_head weight Baichuan 2 model directory'

    # Load the checkpoint's state dict (parameter name -> tensor)
    model = torch.load(os.path.join(ori_model_dir, 'pytorch_model.bin'))
    # L2-normalize each row of lm_head.weight and write it back
    lm_head_w = model['lm_head.weight']
    lm_head_w = torch.nn.functional.normalize(lm_head_w)
    model['lm_head.weight'] = lm_head_w
    # Make sure the output directory exists before saving
    os.makedirs(new_model_dir, exist_ok=True)
    torch.save(model, os.path.join(new_model_dir, 'pytorch_model.bin'))

To fine-tune the model, first clone the repository and install the dependencies:

    git clone https://github.com/baichuan-inc/Baichuan2.git
    cd Baichuan2/fine-tune
    pip install -r requirements.txt

Below is a single-machine training example for fine-tuning Baichuan2-7B-Base.

Training data: data/belle_chat_ramdon_10k.json. This sample data was drawn from multiturn_chat_0.8M and converted in format. It mainly demonstrates how to train on multi-turn data and is not intended to guarantee model quality.

    hostfile=""
    deepspeed --hostfile=$hostfile fine-tune.py \
        --report_to "none" \
        --data_path "data/belle_chat_ramdon_10k.json" \
        --model_name_or_path "baichuan-inc/Baichuan2-7B-Base" \
        --output_dir "output" \
        --model_max_length 512 \
        --num_train_epochs 4 \
        --per_device_train_batch_size 16 \
        --gradient_accumulation_steps 1 \
        --save_strategy epoch \
        --learning_rate 2e-5 \
        --lr_scheduler_type constant \
        --adam_beta1 0.9 \
        --adam_beta2 0.98 \
        --adam_epsilon 1e-8 \
        --max_grad_norm 1.0 \
        --weight_decay 1e-4 \
        --warmup_ratio 0.0 \
        --logging_steps 1 \
        --gradient_checkpointing True \
        --deepspeed ds_config.json \
        --bf16 True \
        --tf32 True

For multi-machine training, you only need to provide a hostfile whose content looks like the following:

    ip1 slots=8
    ip2 slots=8
    ip3 slots=8
    ip4 slots=8
    ....
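A hostfile in this format can be generated programmatically. The sketch below builds the file content from a list of host names and also derives the global batch size implied by the training flags, assuming each slot corresponds to one GPU worker process. The host names are placeholders and the helper names are hypothetical.

```python
def build_hostfile(hosts, slots_per_host=8):
    """Return hostfile text with one '<host> slots=<n>' line per machine."""
    return "\n".join(f"{host} slots={slots_per_host}" for host in hosts) + "\n"


def global_batch_size(num_hosts, slots_per_host, per_device_batch, grad_accum_steps):
    """Samples per optimizer step across all workers (one worker per slot assumed)."""
    return num_hosts * slots_per_host * per_device_batch * grad_accum_steps


hosts = ["ip1", "ip2", "ip3", "ip4"]  # placeholders, as in the hostfile listing
hostfile_text = build_hostfile(hosts)
# Values from the training command:
# --per_device_train_batch_size 16, --gradient_accumulation_steps 1
batch = global_batch_size(len(hosts), 8, per_device_batch=16, grad_accum_steps=1)
print(hostfile_text)
print(batch)
```

Under that one-worker-per-slot assumption, four machines with eight slots each give 32 workers, so the command's per-device batch of 16 corresponds to 512 samples per optimizer step.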

Then specify the path to the hostfile in the training script:

    hostfile="/path/to/hostfile"
    deepspeed --hostfile=$hostfile fine-tune.py \
        --report_to "none" \
        --data_path "data/belle_chat_ramdon_10k.json" \
        --model_name_or_path "baichuan-inc/Baichuan2-7B-Base" \
        --output_dir "output" \
        --model_max_length 512 \
        --num_train_epochs 4 \
        --per_device_train_batch_size 16 \
        --gradient_accumulation_steps 1 \
        --save_strategy epoch \
        --learning_rate 2e-5 \
        --lr_scheduler_type constant \
        --adam_beta1 0.9 \
        --adam_beta2 0.98 \
        --adam_epsilon 1e-8 \
        --max_grad_norm 1.0 \
        --weight_decay 1e-4 \
        --warmup_ratio 0.0 \
        --logging_steps 1 \
        --gradient_checkpointing True \
        --deepspeed ds_config.json \
        --bf16 True \
        --tf32 True

The code also supports lightweight fine-tuning methods such as LoRA. To use it, add the following parameter to the script above:

    --use_lora True

The specific LoRA configuration can be found in the fine-tune.py script.
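To see why LoRA counts as lightweight, recall that it freezes a weight matrix of shape d_out × d_in and trains two low-rank factors of shapes d_out × r and r × d_in instead. The sketch below compares the trainable parameter counts for one layer; the layer size (4096 × 4096) and rank (8) are illustrative assumptions, not values read from fine-tune.py.

```python
def lora_param_counts(d_in, d_out, rank):
    """Return (full, lora) trainable parameter counts for one linear layer."""
    full = d_in * d_out            # updating the dense weight directly
    lora = rank * (d_in + d_out)   # factors A (rank x d_in) and B (d_out x rank)
    return full, lora


full, lora = lora_param_counts(d_in=4096, d_out=4096, rank=8)
print(full, lora, lora / full)
```

With these assumed sizes, the low-rank factors hold under half a percent of the parameters of the dense matrix they adapt.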

After fine-tuning with LoRA, you can use the following command to load the model:

from peft import AutoPeftModelForCausalLM

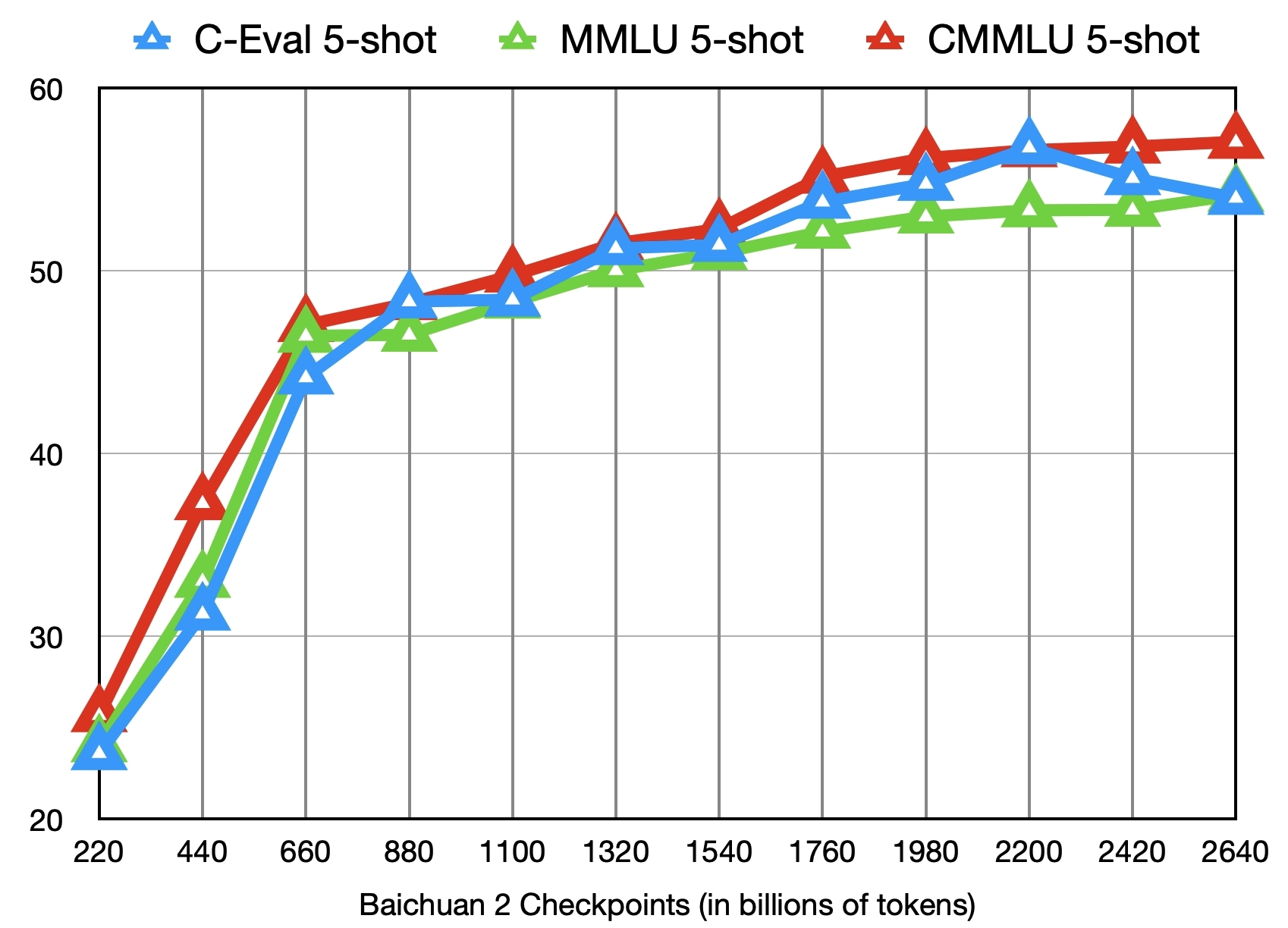

model = AutoPeftModelForCausalLM . from_pretrained ( "output" , trust_remote_code = True )In addition to training the Baichuan2-7B-Base model with 2.6 trillion tokens, we also provide another 11 intermediate checkpoints (each trained about 0.2 to 2.4 trillion tokens respectively) for community research and use (download address). The following figure shows the effect changes of these checkpoints on the three benchmarks of C-Eval, MMLU, and CMMLU:

We will continue to update community and ecosystem support for Baichuan 2 here.

Deploy Baichuan2-7B/Chat and Baichuan2-13B/Chat models on Core™/Xeon® Scalable processors or with Arc™ GPUs.

BigDL-LLM (CPU, GPU) is recommended for better inference performance.

Chinese operating manual, including notebook support

Loading, optimization, saving methods, etc.

Model fine-tuning: Baichuan 2 (7B) natively supports fine-tuning with PyTorch (2.1.0) + Transformers (4.36.0) + DeepSpeed (0.12.4) + Accelerate (0.25.0), with no additional adaptation required.

Inference deployment: Baichuan 2 (7B) natively supports Ascend NPU inference, with no additional adaptation required.

MindFormers is a full-process development kit based on the MindSpore framework that supports training, fine-tuning, evaluation, inference, and deployment of large models. Baichuan2-7B / 13B has been integrated into this kit so that users can fine-tune and deploy the models. See its README for usage details.

The MindSpore large model platform, built on the MindSpore AI framework, the MindFormers large model development kit, and Ascend hardware, makes the Baichuan2-7B model available to the public; everyone is welcome to try it online.

LLaMA-Efficient-Tuning supports fine-tuning and continued training of Baichuan 2 models.

Baichuan2 (7B/13B) supports inference on Taichu T100 accelerator cards, and a trial channel is now open to the public.

We hereby declare that our development team has not developed any application based on the Baichuan 2 models, whether for iOS, Android, the web, or any other platform. We strongly urge all users not to use the Baichuan 2 models for any activity that endangers national or social security or that is illegal. We also ask users not to use the Baichuan 2 models for Internet services that have not undergone appropriate security review and registration. We hope all users will abide by this principle so that technology develops in a regulated and lawful environment.

We have done our best to ensure the compliance of the data used in model training. Nevertheless, because of the complexity of the models and data, unforeseen problems may still exist. We therefore assume no responsibility for any problems arising from the use of the Baichuan 2 open-source models, including but not limited to data security issues, public opinion risks, or any risks and problems arising from the models being misled, abused, disseminated, or improperly used.

Community use of the Baichuan 2 models must comply with the Apache 2.0 license and the Baichuan 2 Model Community License Agreement. The Baichuan 2 models may be used commercially. If you plan to use a Baichuan 2 model or its derivatives for commercial purposes, please confirm that your entity meets the following conditions:

If the above conditions are met, submit the application materials required by the Baichuan 2 Model Community License Agreement to the following email address: [email protected]. Once the application is approved, Baichuan will grant you a non-exclusive, worldwide, non-transferable, non-sublicensable, revocable commercial copyright license.

To cite our work, please use the following reference:

    @article{baichuan2023baichuan2,
      title={Baichuan 2: Open Large-scale Language Models},
      author={Baichuan},
      journal={arXiv preprint arXiv:2309.10305},
      url={https://arxiv.org/abs/2309.10305},
      year={2023}
    }