This repository contains scripts and prompts for our paper "TopicGPT: Topic Modeling by Prompting Large Language Models" (NAACL'24). Our topicgpt_python package consists of five main functions:

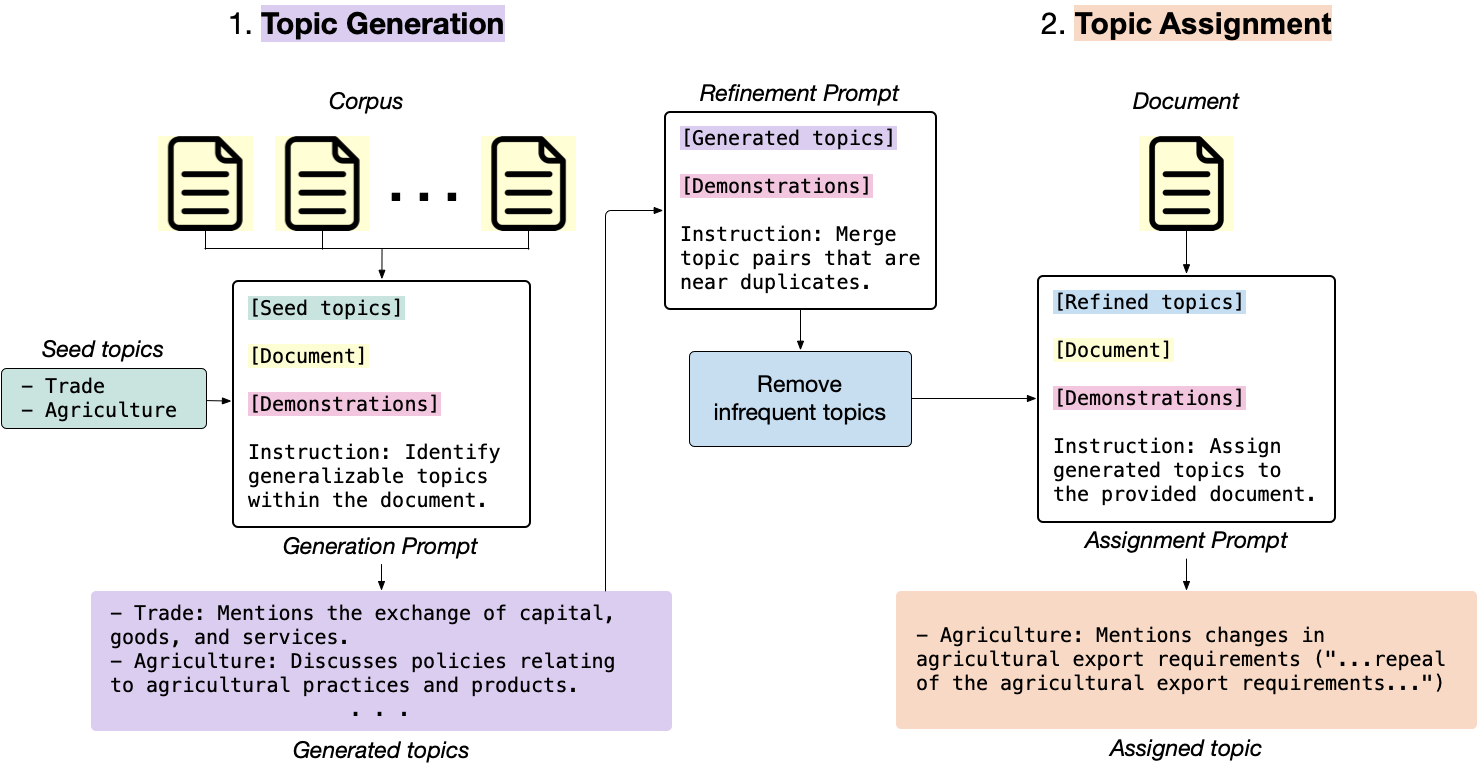

- generate_topic_lvl1 generates high-level and generalizable topics.
- generate_topic_lvl2 generates low-level topics specific to each high-level topic.
- refine_topics refines the generated topics by merging similar topics and removing irrelevant topics.
- assign_topics assigns the generated topics to the input text, along with a quote that supports the assignment.
- correct_topics corrects the generated topics by reprompting the model so that the final topic assignment is grounded in the topic list.

topicgpt_python is released! You can install it via pip install topicgpt_python. We support OpenAI API, Vertex AI, Azure API, Gemini API, and vLLM (requires GPUs for inference). See PyPI.

pip install topicgpt_python

# Run in shell

# Needed only for the OpenAI API deployment

export OPENAI_API_KEY={your_openai_api_key}

# Needed only for the Vertex AI deployment

export VERTEX_PROJECT={your_vertex_project} # e.g. my-project

export VERTEX_LOCATION={your_vertex_location} # e.g. us-central1

# Needed only for Gemini deployment

export GEMINI_API_KEY={your_gemini_api_key}

# Needed only for the Azure API deployment

export AZURE_OPENAI_API_KEY={your_azure_api_key}

export AZURE_OPENAI_ENDPOINT={your_azure_endpoint}
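Before running the pipeline, it can help to confirm that the variables for your chosen backend are actually set. The following is a minimal sketch, not part of topicgpt_python: the helper name missing_env_vars and the backend labels used as dictionary keys are illustrative assumptions, while the variable names are the ones exported above.

```python
import os

# Variable names come from the exports above; the dictionary keys are
# hypothetical labels for this sketch, not values defined by topicgpt_python.
REQUIRED_ENV = {
    "openai": ["OPENAI_API_KEY"],
    "vertex": ["VERTEX_PROJECT", "VERTEX_LOCATION"],
    "gemini": ["GEMINI_API_KEY"],
    "azure": ["AZURE_OPENAI_API_KEY", "AZURE_OPENAI_ENDPOINT"],
}


def missing_env_vars(backend, env=None):
    """Return the required variables for `backend` that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_ENV[backend] if not env.get(name)]


if __name__ == "__main__":
    # Report the status of every backend listed above.
    for backend in REQUIRED_ENV:
        missing = missing_env_vars(backend)
        status = "ok" if not missing else "missing " + ", ".join(missing)
        print(f"{backend}: {status}")
```

Passing a plain dictionary as env makes the check easy to try without touching your real environment.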

Prepare your .jsonl data file in the following format:

{

"id": "IDs (optional)",

"text": "Documents",

"label": "Ground-truth labels (optional)"

}

Put the data file in data/input. There is also a sample data file, data/input/sample.jsonl, to debug the code.

Check out demo.ipynb for a complete pipeline and more detailed instructions. We advise running on a subset with cheaper (or open-source) models before scaling up to the entire dataset.
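As a rough illustration of the data format and of the advice to start with a subset, the sketch below writes records to a .jsonl file and copies the first few records into a smaller file. The helper names (write_jsonl, take_subset) and the file names are hypothetical and not part of topicgpt_python; only the id, text, and label fields come from the format described above.

```python
import json
import os
import tempfile
from itertools import islice


def write_jsonl(records, path):
    """Write one JSON object per line, the layout a .jsonl file uses."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record, ensure_ascii=False) + "\n")


def take_subset(src_path, dst_path, n):
    """Copy the first n non-empty lines of src_path to dst_path; return the count."""
    count = 0
    with open(src_path, encoding="utf-8") as src, \
            open(dst_path, "w", encoding="utf-8") as dst:
        non_empty = (line for line in src if line.strip())
        for line in islice(non_empty, n):
            json.loads(line)  # fail early on a malformed record
            dst.write(line if line.endswith("\n") else line + "\n")
            count += 1
    return count


if __name__ == "__main__":
    # Hypothetical documents; "id" and "label" are optional in the format.
    docs = [
        {"id": 1, "text": "A bill to amend the tax code.", "label": "Taxes"},
        {"id": 2, "text": "A bill on rural broadband access."},
        {"text": "A resolution honoring public school teachers."},
    ]
    with tempfile.TemporaryDirectory() as tmp:
        full = os.path.join(tmp, "full.jsonl")
        small = os.path.join(tmp, "subset.jsonl")
        write_jsonl(docs, full)
        print(take_subset(full, small, 2), "records copied")
```

The subset file can then be passed as the data argument while you test prompts and models.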

(Optional) Define I/O paths in config.yml and load using:

import yaml

with open("config.yml", "r") as f:
    config = yaml.safe_load(f)

Load the package:

from topicgpt_python import *

Generate high-level topics:

generate_topic_lvl1(api, model, data, prompt_file, seed_file, out_file, topic_file, verbose)

Generate low-level topics (optional):

generate_topic_lvl2(api, model, seed_file, data, prompt_file, out_file, topic_file, verbose)

Refine the generated topics by merging near duplicates and removing topics with low frequency (optional):

refine_topics(api, model, prompt_file, generation_file, topic_file, out_file, updated_file, verbose, remove, mapping_file)

Assign and correct the topics. With paid APIs, a weaker model is usually used for this step to save cost:

assign_topics(
    api, model, data, prompt_file, out_file, topic_file, verbose
)

correct_topics(
    api, model, data_path, prompt_path, topic_path, output_path, verbose
)

Check out the data/output folder for sample outputs.

We also offer metric calculation functions in topicgpt_python.metrics to evaluate the alignment between the generated topics and the ground-truth labels (Adjusted Rand Index, Harmonic Purity, and Normalized Mutual Information).
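To make one of these metrics concrete, here is a minimal, self-contained sketch of harmonic purity. It assumes harmonic purity means the harmonic mean of purity and inverse purity; the function names are hypothetical, and the implementation in topicgpt_python.metrics may differ in details such as how multiple assigned topics are handled.

```python
from collections import Counter, defaultdict


def purity(clusters, labels):
    """Fraction of items whose label is the majority label of their cluster."""
    if len(clusters) != len(labels) or not clusters:
        raise ValueError("inputs must be non-empty and of equal length")
    by_cluster = defaultdict(Counter)
    for cluster, label in zip(clusters, labels):
        by_cluster[cluster][label] += 1
    majority_total = sum(max(c.values()) for c in by_cluster.values())
    return majority_total / len(clusters)


def harmonic_purity(topics, labels):
    """Harmonic mean of purity and inverse purity (assumed definition)."""
    p = purity(topics, labels)
    # Inverse purity swaps the roles of assigned topics and true labels.
    inv = purity(labels, topics)
    return 2 * p * inv / (p + inv)


if __name__ == "__main__":
    # Hypothetical assignments for four documents.
    topics = ["a", "a", "b", "b"]
    labels = ["x", "x", "x", "y"]
    print(harmonic_purity(topics, labels))
```

For the other two metrics, scikit-learn provides adjusted_rand_score and normalized_mutual_info_score in sklearn.metrics, which accept the same pair of label sequences.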

@misc{pham2023topicgpt,

title={TopicGPT: A Prompt-based Topic Modeling Framework},

author={Chau Minh Pham and Alexander Hoyle and Simeng Sun and Mohit Iyyer},

year={2023},

eprint={2311.01449},

archivePrefix={arXiv},

primaryClass={cs.CL}

}