VectorETL: A Lightweight ETL Framework for Vector Databases

VectorETL by Context Data is a modular framework that helps data and AI engineers process data for their AI applications in just a few minutes!

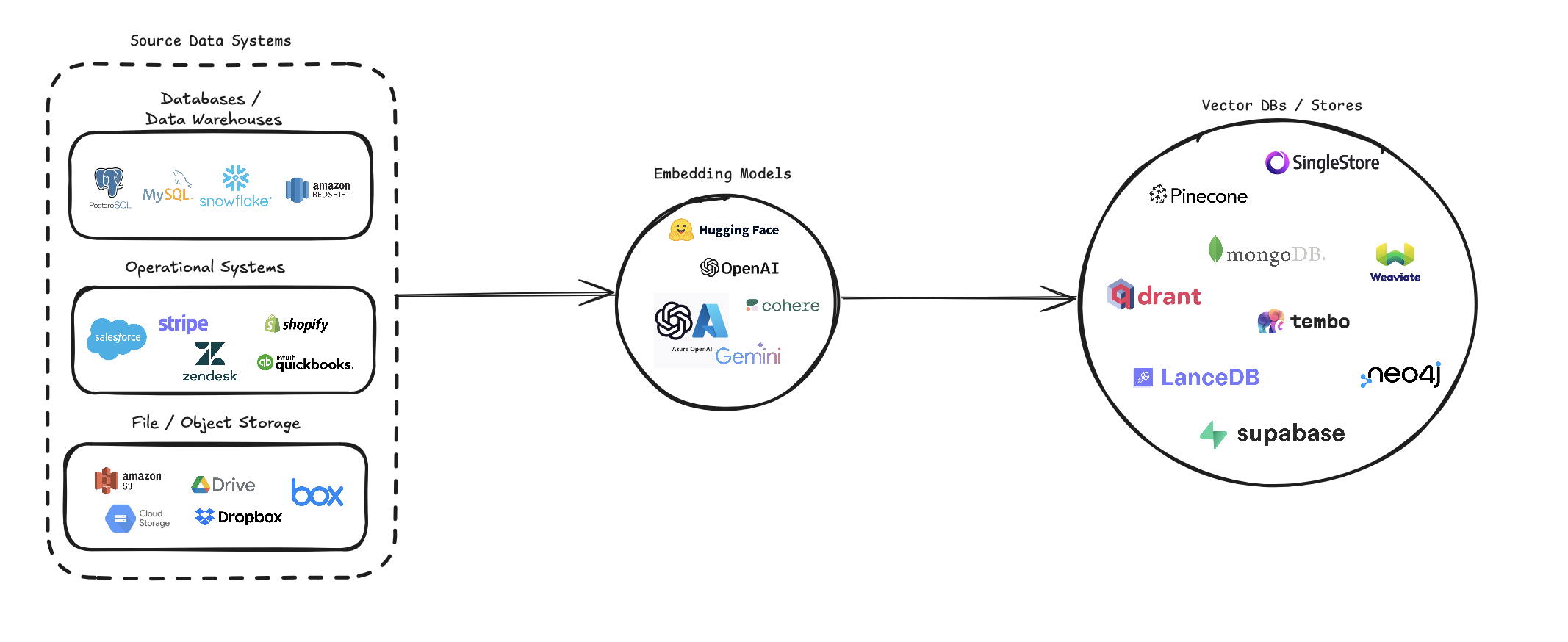

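VectorETL streamlines the process of converting various data sources into vector embeddings and storing them in various vector databases. It supports:

- multiple data sources (databases, cloud storage, and local files),
- various embedding models (including OpenAI, Cohere, and Google Gemini), and
- several vector database targets (such as Pinecone, Qdrant, and Weaviate).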

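The pipeline simplifies the creation and management of vector search systems. Developers and data scientists can use it to build and scale applications that need semantic search, recommendation systems, or other vector-based operations.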
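At its core, vector search ranks stored embeddings by how similar they are to a query embedding. The sketch below is a brute-force illustration using cosine similarity, the metric used in the Pinecone examples later in this README. It is not VectorETL code, and a production vector database uses approximate indexes rather than a linear scan. The names `cosine_similarity` and `top_k` are hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


def top_k(query: list[float], store: dict[str, list[float]], k: int = 3) -> list[tuple[str, float]]:
    """Rank every stored vector by cosine similarity to the query (linear scan)."""
    scored = [(key, cosine_similarity(query, vec)) for key, vec in store.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Toy 3-dimensional "embeddings"; text-embedding-ada-002 produces 1536 dimensions.
store = {
    "doc-a": [1.0, 0.0, 0.0],
    "doc-b": [0.7, 0.7, 0.0],
    "doc-c": [0.0, 0.0, 1.0],
}
print(top_k([1.0, 0.1, 0.0], store, k=2))
```

The query points almost exactly along `doc-a`, so `doc-a` ranks first and `doc-b` second, while the orthogonal `doc-c` is dropped.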

Install the package from PyPI:

```bash
pip install --upgrade vector-etl
```

or install it directly from GitHub:

```bash
pip install git+https://github.com/ContextData/VectorETL.git
```

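This section explains how to use the ETL framework for vector databases. It covers running the framework and validating configurations, and it gives some common usage examples.

Suppose your configuration file looks similar to the one below.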

```yaml
source:
  source_data_type: "database"
  db_type: "postgres"
  host: "localhost"
  database_name: "customer_data"
  username: "user"
  password: "password"
  port: 5432
  query: "SELECT * FROM customers WHERE updated_at > :last_updated_at"
  batch_size: 1000
  chunk_size: 1000
  chunk_overlap: 0

embedding:
  embedding_model: "OpenAI"
  api_key: ${OPENAI_API_KEY}
  model_name: "text-embedding-ada-002"

target:
  target_database: "Pinecone"
  pinecone_api_key: ${PINECONE_API_KEY}
  index_name: "customer-embeddings"
  dimension: 1536
  metric: "cosine"

embed_columns:
  - "customer_name"
  - "customer_description"
  - "purchase_history"
```

You can then import the configuration into your Python project and run it from there:

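```python
from vector_etl import create_flow

flow = create_flow()
flow.load_yaml('/path/to/your/config.yaml')
flow.execute()
```

You can also use the same YAML configuration file shown above to run the process directly from the command line, without importing it into a Python application.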

To run the ETL framework, use the following command:

```bash
vector-etl -c /path/to/your/config.yaml
```

Alternatively, you can configure the pipeline directly in Python, without a configuration file:

```python
import os

from vector_etl import create_flow

source = {
    "source_data_type": "database",
    "db_type": "postgres",
    "host": "localhost",
    "port": "5432",
    "database_name": "test",
    "username": "user",
    "password": "password",
    "query": "select * from test",
    "batch_size": 1000,
    "chunk_size": 1000,
    "chunk_overlap": 0,
}

embedding = {
    "embedding_model": "OpenAI",
    # ${OPENAI_API_KEY} is YAML placeholder syntax, not Python; read the key from the environment
    "api_key": os.environ["OPENAI_API_KEY"],
    "model_name": "text-embedding-ada-002",
}

target = {
    "target_database": "Pinecone",
    "pinecone_api_key": os.environ["PINECONE_API_KEY"],
    "index_name": "my-pinecone-index",
    "dimension": 1536,
}

embed_columns = ["customer_name", "customer_description", "purchase_history"]

flow = create_flow()
flow.set_source(source)
flow.set_embedding(embedding)
flow.set_target(target)
flow.set_embed_columns(embed_columns)

# Execute the flow
flow.execute()
```

Here are some examples of how to use the ETL framework in different scenarios.

Processing data from a PostgreSQL database into Pinecone:

```bash
vector-etl -c config/postgres_to_pinecone.yaml
```

Here, `postgres_to_pinecone.yaml` might look like:

```yaml
source:
  source_data_type: "database"
  db_type: "postgres"
  host: "localhost"
  database_name: "customer_data"
  username: "user"
  password: "password"
  port: 5432
  query: "SELECT * FROM customers WHERE updated_at > :last_updated_at"
  batch_size: 1000
  chunk_size: 1000
  chunk_overlap: 0

embedding:
  embedding_model: "OpenAI"
  api_key: ${OPENAI_API_KEY}
  model_name: "text-embedding-ada-002"

target:
  target_database: "Pinecone"
  pinecone_api_key: ${PINECONE_API_KEY}
  index_name: "customer-embeddings"
  dimension: 1536
  metric: "cosine"

embed_columns:
  - "customer_name"
  - "customer_description"
  - "purchase_history"
```

Processing CSV files from Amazon S3 into Qdrant:

```bash
vector-etl -c config/s3_to_qdrant.yaml
```

Here, `s3_to_qdrant.yaml` might look like:

```yaml
source:
  source_data_type: "Amazon S3"
  bucket_name: "my-data-bucket"
  prefix: "customer_data/"
  file_type: "csv"
  aws_access_key_id: ${AWS_ACCESS_KEY_ID}
  aws_secret_access_key: ${AWS_SECRET_ACCESS_KEY}
  chunk_size: 1000
  chunk_overlap: 200

embedding:
  embedding_model: "Cohere"
  api_key: ${COHERE_API_KEY}
  model_name: "embed-english-v2.0"

target:
  target_database: "Qdrant"
  qdrant_url: "https://your-qdrant-cluster-url.qdrant.io"
  qdrant_api_key: ${QDRANT_API_KEY}
  collection_name: "customer_embeddings"

embed_columns: []
```

The VectorETL (Extract, Transform, Load) framework is a flexible tool that streamlines three steps:

1. extracting data from various sources,
2. transforming it into vector embeddings, and
3. loading those embeddings into a range of vector databases.

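It is built for modularity, scalability, and ease of use. That makes it a good fit for organizations that want to bring vector search into their data infrastructure.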

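Versatile data extraction: The framework supports a wide range of data sources:

- traditional databases,
- cloud storage solutions (such as Amazon S3 and Google Cloud Storage), and
- popular SaaS platforms (such as Stripe and Zendesk).

This lets you consolidate data from multiple sources into a single vector database.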

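Advanced text processing: For text data, the framework splits text into overlapping chunks. This helps preserve the semantic context of the text when vector embeddings are created, which leads to more accurate search results.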

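State-of-the-art embedding models: The system integrates with leading embedding models, including OpenAI, Cohere, Google Gemini, and Azure OpenAI. You can choose the model that best fits your use case and quality requirements.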

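Multiple vector database support: The framework works with Pinecone, Qdrant, Weaviate, SingleStore, Supabase, and LanceDB. It is designed to interface cleanly with each of these popular vector databases, so you can choose the one that best fits your needs.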

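Configurable and extensible: The entire framework is configured through YAML or JSON configuration files. Its modular architecture also makes it easy to add new data sources, embedding models, or vector databases as your needs grow.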
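The overlapping-chunk technique described under "Advanced text processing" is controlled by the `chunk_size` and `chunk_overlap` settings in the configuration examples. It can be illustrated with a simple sliding window. This is a minimal sketch that assumes sizes are measured in characters; the README does not document VectorETL's actual splitter, including whether it counts characters or tokens. `chunk_text` is a hypothetical helper.

```python
def chunk_text(text: str, chunk_size: int, chunk_overlap: int) -> list[str]:
    """Split text into windows of chunk_size characters that overlap by chunk_overlap characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap  # how far each window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


print(chunk_text("abcdefghij", chunk_size=4, chunk_overlap=1))  # ['abcd', 'defg', 'ghij']
```

With `chunk_overlap: 0`, as in the PostgreSQL examples, the windows simply tile the text. With a positive overlap, as in the S3 example, each chunk repeats the tail of the previous one, so a sentence cut at a boundary still appears whole in at least one chunk when it is shorter than the overlap.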

This ETL framework is well suited to organizations that want to implement or upgrade their vector search capabilities.

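The framework automates three steps: extracting data, creating vector embeddings, and storing them in a vector database. This greatly reduces the time and complexity of setting up a vector search system. Data scientists and engineers can then focus on deriving insights and building applications rather than on the intricacies of data processing and vector storage.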
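To make the extract, embed, and load stages concrete, here is a toy, dependency-free sketch of the same flow. Everything in it is hypothetical and unrelated to VectorETL's internals:

- `extract`, `fake_embed`, and `run_pipeline` are illustrative names.
- The "embedding" is a hash, not a model call.
- A Python dict stands in for the vector database.

It also shows one plausible way to turn `embed_columns` into the text that gets embedded: joining the column values with spaces. VectorETL's own formatting may differ.

```python
import hashlib
import math


def extract() -> list[dict]:
    """Stand-in for a source connector: returns rows as dictionaries."""
    return [
        {"id": 1, "customer_name": "Ada", "customer_description": "Buys sci-fi novels"},
        {"id": 2, "customer_name": "Lin", "customer_description": "Buys cookbooks"},
    ]


def fake_embed(text: str, dim: int = 8) -> list[float]:
    """Deterministic placeholder for an embedding API call (NOT a real model)."""
    digest = hashlib.sha256(text.encode("utf-8")).digest()
    vec = [b / 255.0 for b in digest[:dim]]
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]  # unit length, like many embedding models return


def run_pipeline(embed_columns: list[str]) -> dict[str, list[float]]:
    """Extract rows, build the text to embed, embed it, and 'load' it into an in-memory store."""
    store: dict[str, list[float]] = {}
    for row in extract():
        text = " ".join(str(row[col]) for col in embed_columns)  # transform
        store[str(row["id"])] = fake_embed(text)                  # embed + load
    return store


vectors = run_pipeline(["customer_name", "customer_description"])
print(len(vectors), len(vectors["1"]))  # 2 8
```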

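The ETL framework uses a configuration file to specify the source, the embedding model, the target database, and other parameters. The configuration file can be in YAML or JSON format.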

The configuration file is divided into the following sections:

- `source`: specifies the data source details
- `embedding`: defines the embedding model to use
- `target`: describes the target vector database
- `embed_columns`: lists the columns to embed (mainly for structured data sources)

In Python, the configuration can be written as dictionaries:

```python
import os

from vector_etl import create_flow

source = {
    "source_data_type": "database",
    "db_type": "postgres",
    "host": "localhost",
    "port": "5432",
    "database_name": "test",
    "username": "user",
    "password": "password",
    "query": "select * from test",
    "batch_size": 1000,
    "chunk_size": 1000,
    "chunk_overlap": 0,
}

embedding = {
    "embedding_model": "OpenAI",
    # Read secrets from the environment; ${...} placeholders are not valid Python
    "api_key": os.environ["OPENAI_API_KEY"],
    "model_name": "text-embedding-ada-002",
}

target = {
    "target_database": "Pinecone",
    "pinecone_api_key": os.environ["PINECONE_API_KEY"],
    "index_name": "my-pinecone-index",
    "dimension": 1536,
}

embed_columns = ["customer_name", "customer_description", "purchase_history"]
```

An example YAML configuration file:

```yaml
source:
  source_data_type: "database"
  db_type: "postgres"
  host: "localhost"
  database_name: "mydb"
  username: "user"
  password: "password"
  port: 5432
  query: "SELECT * FROM mytable WHERE updated_at > :last_updated_at"
  batch_size: 1000
  chunk_size: 1000
  chunk_overlap: 0

embedding:
  embedding_model: "OpenAI"
  api_key: "your-openai-api-key"
  model_name: "text-embedding-ada-002"

target:
  target_database: "Pinecone"
  pinecone_api_key: "your-pinecone-api-key"
  index_name: "my-index"
  dimension: 1536
  metric: "cosine"
  cloud: "aws"
  region: "us-west-2"

embed_columns:
  - "column1"
  - "column2"
  - "column3"
```

The equivalent JSON configuration file:

```json
{
  "source": {
    "source_data_type": "database",
    "db_type": "postgres",
    "host": "localhost",
    "database_name": "mydb",
    "username": "user",
    "password": "password",
    "port": 5432,
    "query": "SELECT * FROM mytable WHERE updated_at > :last_updated_at",
    "batch_size": 1000,
    "chunk_size": 1000,
    "chunk_overlap": 0
  },
  "embedding": {
    "embedding_model": "OpenAI",
    "api_key": "your-openai-api-key",
    "model_name": "text-embedding-ada-002"
  },
  "target": {
    "target_database": "Pinecone",
    "pinecone_api_key": "your-pinecone-api-key",
    "index_name": "my-index",
    "dimension": 1536,
    "metric": "cosine",
    "cloud": "aws",
    "region": "us-west-2"
  },
  "embed_columns": ["column1", "column2", "column3"]
}
```

The contents of the `source` section depend on the `source_data_type`. Here are examples for different source types.

Database source, in JSON. The `db_type` can be `"postgres"`, `"mysql"`, `"snowflake"`, or `"salesforce"`.

```json
{
  "source_data_type": "database",
  "db_type": "postgres",
  "host": "localhost",
  "database_name": "mydb",
  "username": "user",
  "password": "password",
  "port": 5432,
  "query": "SELECT * FROM mytable WHERE updated_at > :last_updated_at",
  "batch_size": 1000,
  "chunk_size": 1000,
  "chunk_overlap": 0
}
```

The same database source in YAML:

```yaml
source:
  source_data_type: "database"
  db_type: "postgres" # or "mysql", "snowflake", "salesforce"
  host: "localhost"
  database_name: "mydb"
  username: "user"
  password: "password"
  port: 5432
  query: "SELECT * FROM mytable WHERE updated_at > :last_updated_at"
  batch_size: 1000
  chunk_size: 1000
  chunk_overlap: 0
```

Amazon S3 source, in JSON:

```json
{
  "source_data_type": "Amazon S3",
  "bucket_name": "my-bucket",
  "key": "path/to/files/",
  "file_type": ".csv",
  "aws_access_key_id": "your-access-key",
  "aws_secret_access_key": "your-secret-key"
}
```

The same Amazon S3 source in YAML:

```yaml
source:
  source_data_type: "Amazon S3"
  bucket_name: "my-bucket"
  key: "path/to/files/"
  file_type: ".csv"
  aws_access_key_id: "your-access-key"
  aws_secret_access_key: "your-secret-key"
```

Google Cloud Storage source, in JSON:

```json
{
  "source_data_type": "Google Cloud Storage",
  "credentials_path": "/path/to/your/credentials.json",
  "bucket_name": "myBucket",
  "prefix": "prefix/",
  "file_type": "csv",
  "chunk_size": 1000,
  "chunk_overlap": 0
}
```

The same Google Cloud Storage source in YAML:

```yaml
source:
  source_data_type: "Google Cloud Storage"
  credentials_path: "/path/to/your/credentials.json"
  bucket_name: "myBucket"
  prefix: "prefix/"
  file_type: "csv"
  chunk_size: 1000
  chunk_overlap: 0
```

Starting with version 0.1.6.3, you can use the Unstructured Serverless API to extract data from many file-based sources.

Note: This support is limited to the Unstructured Serverless API. It does not cover the Unstructured open-source framework.

It is also limited to `pdf`, `docx`, `doc`, and `txt` files.
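If a bucket or directory mixes file types, it can help to check eligibility before enabling `use_unstructured`. The helper below is a hypothetical pre-flight check written for this README, not part of VectorETL. It compares a path's extension, case-insensitively, against the four supported types listed above.

```python
from pathlib import PurePosixPath

# File types the README lists as supported by the Unstructured integration.
UNSTRUCTURED_FILE_TYPES = {"pdf", "docx", "doc", "txt"}


def can_use_unstructured(path: str) -> bool:
    """Return True if the path's extension is one of the supported file types."""
    suffix = PurePosixPath(path).suffix.lower().lstrip(".")
    return suffix in UNSTRUCTURED_FILE_TYPES


keys = ["Dir/Subdir/report.PDF", "Dir/Subdir/data.csv", "notes.txt", "README"]
print([k for k in keys if can_use_unstructured(k)])  # ['Dir/Subdir/report.PDF', 'notes.txt']
```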

To use Unstructured, you need three additional parameters:

- `use_unstructured`: (true/false) tells the framework to use the Unstructured API
- `unstructured_api_key`: your Unstructured API key
- `unstructured_url`: the API URL from your Unstructured dashboard

Example using a local file:

```yaml
source:
  source_data_type: "Local File"
  file_path: "/path/to/file.docx"
  file_type: "docx"
  use_unstructured: True
  unstructured_api_key: 'my-unstructured-key'
  unstructured_url: 'https://my-domain.api.unstructuredapp.io'
```

Example using Amazon S3:

```yaml
source:
  source_data_type: "Amazon S3"
  bucket_name: "myBucket"
  prefix: "Dir/Subdir/"
  file_type: "pdf"
  aws_access_key_id: "your-access-key"
  aws_secret_access_key: "your-secret-access-key"
  use_unstructured: True
  unstructured_api_key: 'my-unstructured-key'
  unstructured_url: 'https://my-domain.api.unstructuredapp.io'
```

The `embedding` section specifies the embedding model to use:

```yaml
embedding:
  embedding_model: "OpenAI" # or "Cohere", "Google Gemini", "Azure OpenAI", "Hugging Face"
  api_key: "your-api-key"
  model_name: "text-embedding-ada-002" # model name varies by provider
```

The contents of the `target` section depend on the chosen vector database. Here is an example for Pinecone:

```yaml
target:
  target_database: "Pinecone"
  pinecone_api_key: "your-pinecone-api-key"
  index_name: "my-index"
  dimension: 1536
  metric: "cosine"
  cloud: "aws"
  region: "us-west-2"
```

The `embed_columns` list specifies which columns from the source data are used to generate embeddings. It applies only to database sources:

```yaml
embed_columns:
  - "column1"
  - "column2"
  - "column3"
```

The `embed_columns` list is used only for structured data sources (for example PostgreSQL, MySQL, or Snowflake). For all other sources, use an empty list:

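```yaml
embed_columns: []
```

To protect sensitive information such as API keys and passwords, consider using environment variables or a secure secrets management system. You can then reference these values in your configuration file: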

```yaml
embedding:
  api_key: ${OPENAI_API_KEY}
```

This lets you keep your configuration files in version control without exposing sensitive data.
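The README does not say exactly how `${...}` placeholders are resolved. If you want to resolve them yourself before passing a file to the framework, one option is to substitute them from the process environment using the standard library. The sketch below does this; `expand_env` is a hypothetical helper. Unlike `os.path.expandvars`, which leaves references to unset variables unchanged, it raises an error, so a missing secret fails loudly instead of being sent as the literal text `${OPENAI_API_KEY}`.

```python
import os
import re

# Matches ${VAR_NAME} placeholders like those used in the configuration examples.
_PLACEHOLDER = re.compile(r"\$\{([A-Za-z_][A-Za-z0-9_]*)\}")


def expand_env(text: str) -> str:
    """Replace every ${VAR} with os.environ["VAR"], raising KeyError if VAR is unset."""
    def _substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in os.environ:
            raise KeyError(f"environment variable {name} is not set")
        return os.environ[name]

    return _PLACEHOLDER.sub(_substitute, text)


os.environ["OPENAI_API_KEY"] = "sk-example"  # demonstration value only
raw = "embedding:\n  api_key: ${OPENAI_API_KEY}\n"
print(expand_env(raw))
```

The expanded text can then be written to a temporary file or parsed by your own YAML loader. Avoid committing the expanded result, since it contains the secret.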

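Remember to adapt the configuration to your specific data source, embedding model, and target database. Check each service's documentation to make sure you provide all required parameters.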
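Some of the rules in this section can be checked mechanically before a run. The following is a minimal sketch of such a check; it is not VectorETL's own validator, and `validate_config` and `KNOWN_DIMENSIONS` are hypothetical names. It verifies three things:

- all four sections are present;
- `embed_columns` is non-empty for `database` sources and empty otherwise;
- the target `dimension` matches the model's output size where that size is known. For example, text-embedding-ada-002 produces 1536-dimensional vectors.

```python
# Output sizes for models we know about; only ada-002 is listed here (extend as needed).
KNOWN_DIMENSIONS = {"text-embedding-ada-002": 1536}
REQUIRED_SECTIONS = ("source", "embedding", "target", "embed_columns")


def validate_config(config: dict) -> list[str]:
    """Return human-readable problems; an empty list means none were found."""
    problems = [f"missing section: {name}" for name in REQUIRED_SECTIONS if name not in config]
    if problems:
        return problems

    # embed_columns only makes sense for structured (database) sources.
    is_database = config["source"].get("source_data_type") == "database"
    columns = config["embed_columns"]
    if is_database and not columns:
        problems.append("embed_columns should list columns for a database source")
    if not is_database and columns:
        problems.append("embed_columns should be [] for non-database sources")

    # The index dimension must equal the embedding model's output size.
    model = config["embedding"].get("model_name")
    dimension = config["target"].get("dimension")
    expected = KNOWN_DIMENSIONS.get(model)
    if expected is not None and dimension is not None and dimension != expected:
        problems.append(f"target dimension {dimension} does not match {model} ({expected})")
    return problems


config = {
    "source": {"source_data_type": "database", "db_type": "postgres"},
    "embedding": {"embedding_model": "OpenAI", "model_name": "text-embedding-ada-002"},
    "target": {"target_database": "Pinecone", "dimension": 768},
    "embed_columns": ["column1"],
}
print(validate_config(config))
# ['target dimension 768 does not match text-embedding-ada-002 (1536)']
```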

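We welcome contributions to the ETL framework for vector databases! We appreciate every contribution, whether it fixes a bug, improves the documentation, or proposes a new feature. Here's how you can contribute.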

If you run into a bug or have a suggestion for improving the ETL framework:

We're always looking for ways to make the ETL framework better. If you have an idea:

We actively welcome your pull requests:

Create your branch from `main`.

To keep the code consistent across the project, please follow these coding standards:

Improvements to the documentation are always appreciated:

If you're considering adding a new feature:

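- New data source: add a new file in the `source_mods` directory and update the `get_source_class` function in `source_mods/__init__.py`.
- New embedding model: add a new file in the `embedding_mods` directory and update the `get_embedding_model` function in `embedding_mods/__init__.py`.
- New vector database: add a new file in the `target_mods` directory and update the `get_target_database` function in `target_mods/__init__.py`.

We encourage all users to join our Discord server. There you can work with the Context Data development team and other contributors on suggested upgrades, new integrations, and issues.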