We performed a series of ablation experiments on YOLOv5 to make it lighter (fewer FLOPs, lower memory use, and fewer parameters), faster (adding channel shuffle and reducing the channels of the YOLOv5 head; it runs at 10+ FPS on a Raspberry Pi 4B with 320×320 input frames), and easier to deploy (removing the Focus layer and its four slice operations, and keeping the accuracy loss from model quantization within an acceptable range).
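As a rough illustration of the channel shuffle mentioned above, the sketch below shows only the channel permutation that a ShuffleNet-style shuffle produces. It is pure Python for readability and is not the repository's implementation; the function names are hypothetical, and `groups=2` is assumed because that is the ShuffleNetV2 setting.

```python
from typing import List


def channel_shuffle_order(num_channels: int, groups: int = 2) -> List[int]:
    """Return the channel order produced by a ShuffleNet-style channel shuffle.

    Conceptually: reshape the channel axis to (groups, channels_per_group),
    transpose it to (channels_per_group, groups), then flatten it again.
    """
    if num_channels % groups != 0:
        raise ValueError("num_channels must be divisible by groups")
    per_group = num_channels // groups
    return [g * per_group + i for i in range(per_group) for g in range(groups)]


def channel_shuffle(channels: list, groups: int = 2) -> list:
    """Reorder a list of per-channel feature maps using the shuffle order."""
    order = channel_shuffle_order(len(channels), groups)
    return [channels[index] for index in order]


# Six channels in two groups: channels from both groups end up interleaved.
print(channel_shuffle_order(6, groups=2))  # [0, 3, 1, 4, 2, 5]
```

The interleaving lets information flow between the two channel groups without adding any parameters or multiply-accumulate operations.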

| ID | Model | Input size | FLOPs | Params | Size (MB) | mAP@0.5 | mAP@.5:0.95 |
|---|---|---|---|---|---|---|---|
| 001 | YOLO-Fastest | 320×320 | 0.25G | 0.35M | 1.4 | 24.4 | - |
| 002 | YOLOv5-Lite e (ours) | 320×320 | 0.73G | 0.78M | 1.7 | 35.1 | - |
| 003 | NanoDet-m | 320×320 | 0.72G | 0.95M | 1.8 | - | 20.6 |
| 004 | YOLO-Fastest-XL | 320×320 | 0.72G | 0.92M | 3.5 | 34.3 | - |
| 005 | YOLOX-Nano | 416×416 | 1.08G | 0.91M | 7.3 (fp32) | - | 25.8 |
| 006 | YOLOv3-tiny | 416×416 | 6.96G | 6.06M | 23.0 | 33.1 | 16.6 |
| 007 | YOLOv4-tiny | 416×416 | 5.62G | 8.86M | 33.7 | 40.2 | 21.7 |
| 008 | YOLOv5-Lite s (ours) | 416×416 | 1.66G | 1.64M | 3.4 | 42.0 | 25.2 |
| 009 | YOLOv5-Lite c (ours) | 512×512 | 5.92G | 4.57M | 9.2 | 50.9 | 32.5 |
| 010 | NanoDet-EfficientLite2 | 512×512 | 7.12G | 4.71M | 18.3 | - | 32.6 |
| 011 | YOLOv5s (6.0) | 640×640 | 16.5G | 7.23M | 14.0 | 56.0 | 37.2 |
| 012 | YOLOv5-Lite g (ours) | 640×640 | 15.6G | 5.39M | 10.9 | 57.6 | 39.1 |

See the wiki: https://github.com/ppogg/yolov5-lite/wiki/test-the-map-of-models-about-coco
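To make the comparison concrete, here is a small sketch that puts rows 011 (YOLOv5s 6.0) and 012 (YOLOv5-Lite g) of the table side by side. The numbers are copied from the table; the dictionary keys and the `relative_change` helper are names chosen for this illustration only.

```python
# Values copied from rows 011 and 012 of the comparison table (640x640 input).
yolov5s = {"flops_g": 16.5, "size_mb": 14.0, "map50": 56.0, "map50_95": 37.2}
v5lite_g = {"flops_g": 15.6, "size_mb": 10.9, "map50": 57.6, "map50_95": 39.1}


def relative_change(new: float, old: float) -> float:
    """Relative change from old to new, in percent (negative means smaller)."""
    return (new - old) / old * 100.0


# Cost metrics are compared as percentages, accuracy as absolute mAP points.
for key in ("flops_g", "size_mb"):
    print(f"{key}: {relative_change(v5lite_g[key], yolov5s[key]):+.1f}%")
for key in ("map50", "map50_95"):
    print(f"{key}: {v5lite_g[key] - yolov5s[key]:+.1f} points")
```

With the table's figures this works out to roughly 5% fewer FLOPs and a model file about 22% smaller, alongside a gain of 1.6 points in mAP@0.5.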

| Equipment | Computing backend | System | Input | Framework | v5lite-e | v5lite-s | v5lite-c | v5lite-g | YOLOv5s |
|---|---|---|---|---|---|---|---|---|---|
| Intel | @i5-10210U | Windows (x86) | 640×640 | OpenVINO | - | - | 46ms | - | 131ms |
| Nvidia | @RTX 2080Ti | Linux (x86) | 640×640 | torch | - | - | - | 15ms | 14ms |
| Redmi K30 | @Snapdragon 730G | Android (ARMv8) | 320×320 | ncnn | 27ms | 38ms | - | - | 163ms |
| Xiaomi 10 | @Snapdragon 865 | Android (ARMv8) | 320×320 | ncnn | 10ms | 14ms | - | - | 163ms |
| Raspberry Pi 4B | @ARM Cortex-A72 | Linux (arm64) | 320×320 | ncnn | - | 84ms | - | - | 371ms |
| Raspberry Pi 4B | @ARM Cortex-A72 | Linux (arm64) | 320×320 | MNN | - | 71ms | - | - | 356ms |
| AXera-Pi | Cortex-A7 @CPU, 3.6 TOPS @NPU | Linux (arm64) | 640×640 | axpi | - | - | - | 22ms | 22ms |
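The latencies in the table can be turned into frame rates with FPS = 1000 / latency in ms, which is one way to sanity-check the "10+ FPS on a Raspberry Pi 4B" claim. The sketch below uses the Raspberry Pi 4B rows only; it assumes the listed times are per-frame inference latencies and ignores any capture, pre-processing, or post-processing cost not included in them.

```python
# Per-frame latencies in milliseconds, copied from the Raspberry Pi 4B rows
# of the table (320x320 input).
latency_ms = {
    ("ncnn", "v5lite-s"): 84,
    ("MNN", "v5lite-s"): 71,
    ("ncnn", "YOLOv5s"): 371,
    ("MNN", "YOLOv5s"): 356,
}


def to_fps(ms: float) -> float:
    """Convert a per-frame latency in milliseconds to frames per second."""
    return 1000.0 / ms


for (framework, model), ms in latency_ms.items():
    print(f"{model} on {framework}: {ms} ms -> {to_fps(ms):.1f} FPS")
```

Under these assumptions v5lite-s lands at about 11.9 FPS with ncnn and 14.1 FPS with MNN, while YOLOv5s stays below 3 FPS on the same board.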

https://zhuanlan.zhihu.com/p/672633849

Answer for joining the group: pruning, distillation, quantization, or low-rank decomposition (any one of them is accepted).

| Model | Size | Backbone | Head | Framework | Design for |
|---|---|---|---|---|---|
| v5lite-e.pt | 1.7 MB | ShuffleNetV2 (Megvii) | v5Litee-head | PyTorch | ARM CPU |
| v5lite-e.bin v5lite-e.param | 1.7 MB | ShuffleNetV2 | v5Litee-head | ncnn | ARM CPU |
| v5lite-e-int8.bin v5lite-e-int8.param | 0.9 MB | ShuffleNetV2 | v5Litee-head | ncnn | ARM CPU |
| v5lite-e-fp32.mnn | 3.0 MB | ShuffleNetV2 | v5Litee-head | MNN | ARM CPU |
| v5lite-e-fp32.tnnmodel v5lite-e-fp32.tnnproto | 2.9 MB | ShuffleNetV2 | v5Litee-head | TNN | ARM CPU |
| v5lite-e-320.onnx | 3.1 MB | ShuffleNetV2 | v5Litee-head | ONNX Runtime | x86 CPU |

| Model | Size | Backbone | Head | Framework | Design for |
|---|---|---|---|---|---|
| v5lite-s.pt | 3.4 MB | ShuffleNetV2 (Megvii) | v5Lites-head | PyTorch | ARM CPU |
| v5lite-s.bin v5lite-s.param | 3.3 MB | ShuffleNetV2 | v5Lites-head | ncnn | ARM CPU |
| v5lite-s-int8.bin v5lite-s-int8.param | 1.7 MB | ShuffleNetV2 | v5Lites-head | ncnn | ARM CPU |
| v5lite-s.mnn | 3.3 MB | ShuffleNetV2 | v5Lites-head | MNN | ARM CPU |
| v5lite-s-int4.mnn | 987 KB | ShuffleNetV2 | v5Lites-head | MNN | ARM CPU |
| v5lite-s-fp16.bin v5lite-s-fp16.xml | 3.4 MB | ShuffleNetV2 | v5Lites-head | OpenVINO | x86 CPU |
| v5lite-s-fp32.bin v5lite-s-fp32.xml | 6.8 MB | ShuffleNetV2 | v5Lites-head | OpenVINO | x86 CPU |
| v5lite-s-fp16.tflite | 3.3 MB | ShuffleNetV2 | v5Lites-head | TFLite | ARM CPU |
| v5lite-s-fp32.tflite | 6.7 MB | ShuffleNetV2 | v5Lites-head | TFLite | ARM CPU |
| v5lite-s-int8.tflite | 1.8 MB | ShuffleNetV2 | v5Lites-head | TFLite | ARM CPU |
| v5lite-s-416.onnx | 6.4 MB | ShuffleNetV2 | v5Lites-head | ONNX Runtime | x86 CPU |

| Model | Size | Backbone | Head | Framework | Design for |
|---|---|---|---|---|---|
| v5lite-c.pt | 9 MB | PP-LCNet (Baidu) | v5s-head | PyTorch | x86 CPU / x86 VPU |
| v5lite-c.bin v5lite-c.xml | 8.7 MB | PP-LCNet | v5s-head | OpenVINO | x86 CPU / x86 VPU |
| v5lite-c-512.onnx | 18 MB | PP-LCNet | v5s-head | ONNX Runtime | x86 CPU |

| Model | Size | Backbone | Head | Framework | Design for |
|---|---|---|---|---|---|
| v5lite-g.pt | 10.9 MB | RepVGG (Tsinghua) | v5Liteg-head | PyTorch | x86 GPU / ARM GPU / ARM NPU |
| v5lite-g-int8.engine | 8.5 MB | RepVGG-yolov5 | v5Liteg-head | TensorRT | x86 GPU / ARM GPU / ARM NPU |
| v5lite-g-int8.tmfile | 8.7 MB | RepVGG-yolov5 | v5Liteg-head | Tengine | ARM NPU |
| v5lite-g-640.onnx | 21 MB | RepVGG-yolov5 | yolov5-head | ONNX Runtime | x86 CPU |
| v5lite-g-640.joint | 7.1 MB | RepVGG-yolov5 | yolov5-head | axpi | ARM NPU |

    v5lite-e.pt: | Baidu Drive | Google Drive |
     ├── ncnn-fp16: | Baidu Drive | Google Drive |
     ├── ncnn-int8: | Baidu Drive | Google Drive |
     ├── mnn-e_bf16: | Google Drive |
     ├── mnn-d_bf16: | Google Drive |
     └── onnx-fp32: | Baidu Drive | Google Drive |

    v5lite-s.pt: | Baidu Drive | Google Drive |
     ├── ncnn-fp16: | Baidu Drive | Google Drive |
     ├── ncnn-int8: | Baidu Drive | Google Drive |
     └── tengine-fp32: | Baidu Drive | Google Drive |

    v5lite-c.pt: | Baidu Drive | Google Drive |
     └── openvino-fp16: | Baidu Drive | Google Drive |

    v5lite-g.pt: | Baidu Drive | Google Drive |
     └── axpi-int8: | Google Drive |

Baidu Drive password: pogg

https://github.com/pinto0309/pinto_model_zoo/tree/main/main/180_yolov5-lite

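Python>=3.6.0 is required, with all dependencies in requirements.txt installed, including PyTorch>=1.7:

    $ git clone https://github.com/ppogg/YOLOv5-Lite
    $ cd YOLOv5-Lite
    $ pip install -r requirements.txt

detect.py runs inference on a variety of sources, automatically downloading models from the latest YOLOv5-Lite release and saving results to runs/detect.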

    $ python detect.py --source 0  # webcam
                                file.jpg  # image
                                file.mp4  # video
                                path/  # directory
                                path/*.jpg  # glob
                                'https://youtu.be/NUsoVlDFqZg'  # YouTube
                                'rtsp://example.com/media.mp4'  # RTSP, RTMP, HTTP stream

Train with train.py, using the configuration file, weights, and batch size that match the chosen model:

    $ python train.py --data coco.yaml --cfg v5lite-e.yaml --weights v5lite-e.pt --batch-size 128
                                             v5lite-s.yaml           v5lite-s.pt              128
                                             v5lite-c.yaml           v5lite-c.pt              96
                                             v5lite-g.yaml           v5lite-g.pt              64

If you use multiple GPUs, training is several times faster:

    $ python -m torch.distributed.launch --nproc_per_node 2 train.py

Paths of the training and validation sets (the directories that contain the xx.jpg images):

train: ../coco/images/train2017/

val: ../coco/images/val2017/├── images # xx.jpg example

│ ├── train2017

│ │ ├── 000001.jpg

│ │ ├── 000002.jpg

│ │ └── 000003.jpg

│ └── val2017

│ ├── 100001.jpg

│ ├── 100002.jpg

│ └── 100003.jpg

└── labels # xx.txt example

├── train2017

│ ├── 000001.txt

│ ├── 000002.txt

│ └── 000003.txt

└── val2017

├── 100001.txt

├── 100002.txt

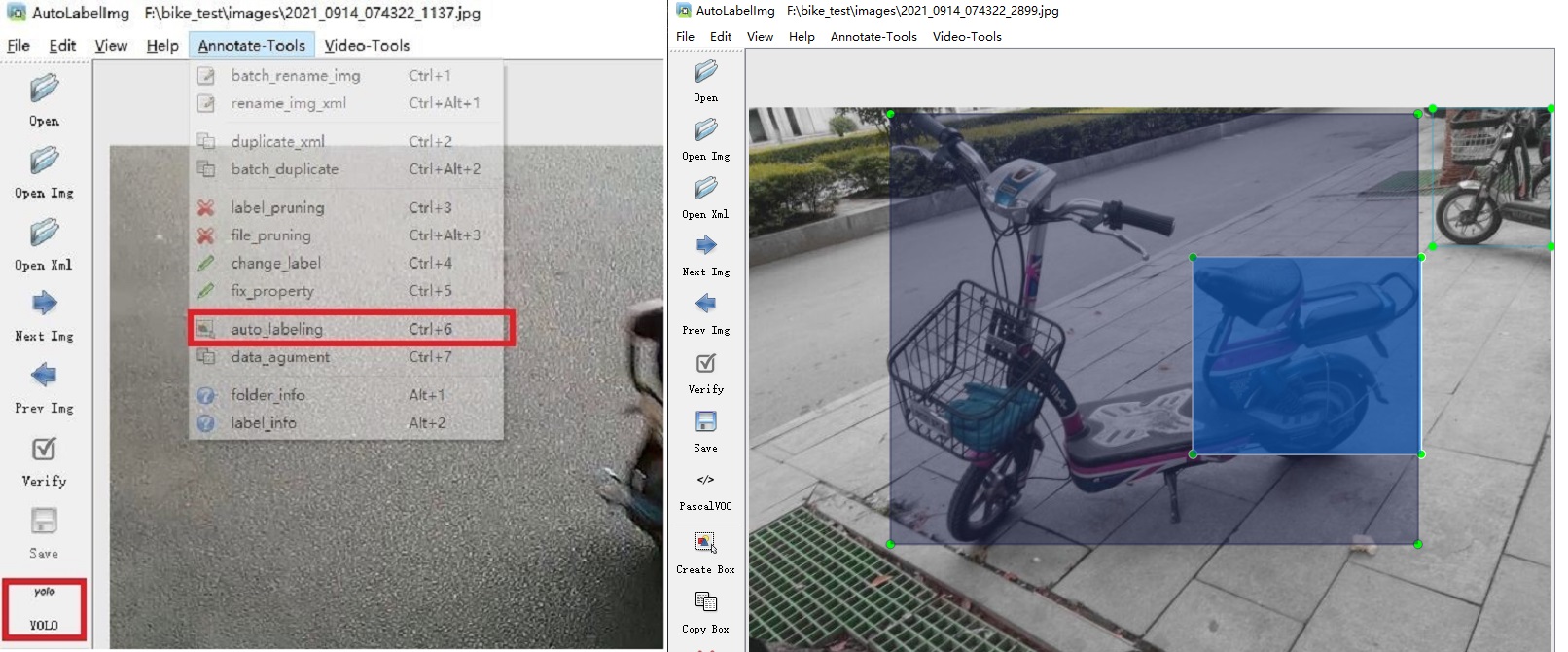

└── 100003.txt链接:https://github.com/ppogg/autolabelimg

You can use the LabelImg-based tool with YOLOv5-5.0 and YOLOv5-Lite to annotate images automatically.

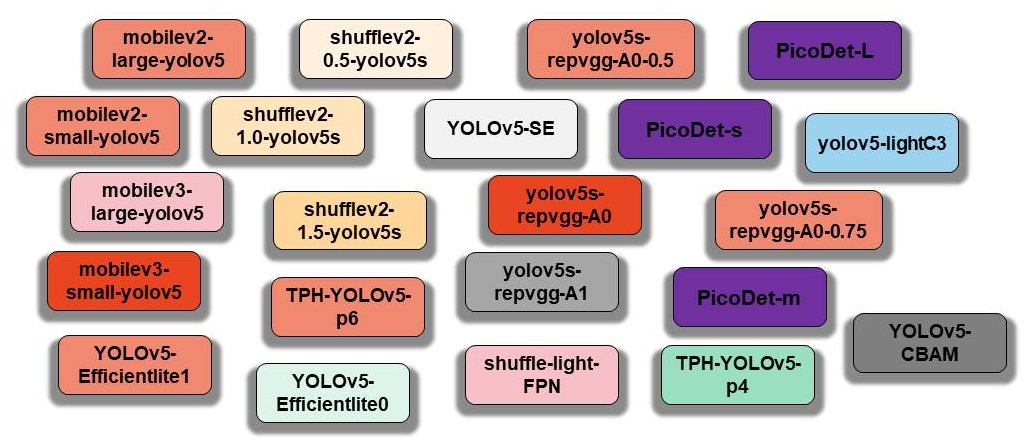
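Before training on a labeled dataset, it can help to check that each image under images/<split> has a matching label file under labels/<split>, following the directory layout described earlier. The sketch below is a hypothetical helper, not part of the repository; it assumes .jpg images and .txt labels that share a file stem. A missing label is not necessarily an error, since YOLOv5-style datasets allow images without objects to have no label file.

```python
from pathlib import Path
from typing import List, Union


def find_unlabeled_images(root: Union[str, Path], split: str) -> List[str]:
    """List image files in images/<split> with no labels/<split>/<stem>.txt."""
    image_dir = Path(root) / "images" / split
    label_dir = Path(root) / "labels" / split
    missing = []
    for image_path in sorted(image_dir.glob("*.jpg")):
        # A label file shares the image's stem, e.g. 000001.jpg -> 000001.txt.
        if not (label_dir / f"{image_path.stem}.txt").is_file():
            missing.append(image_path.name)
    return missing


def report(root: Union[str, Path], splits=("train2017", "val2017")) -> None:
    """Print how many images in each split have no label file."""
    for split in splits:
        missing = find_unlabeled_images(root, split)
        print(f"{split}: {len(missing)} image(s) without a label file")
```

Calling `report("../coco")` would check both splits of the dataset root used in the paths above.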

Here, the original components of YOLOv5 and the reproduced components of YOLOv5-Lite are organized and stored in the model hub:

    $ python main.py --type all

Updating ...

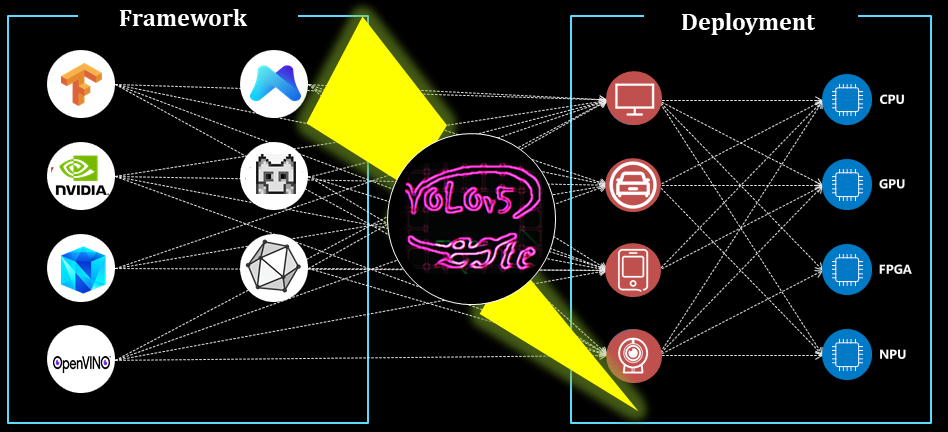

ncnn for ARM CPU

MNN for ARM CPU

OpenVINO for x86 CPU or x86 VPU

TensorRT (C++) for ARM GPU, ARM NPU, or x86 GPU

TensorRT (Python) for ARM GPU, ARM NPU, or x86 GPU

Android for ARM CPU

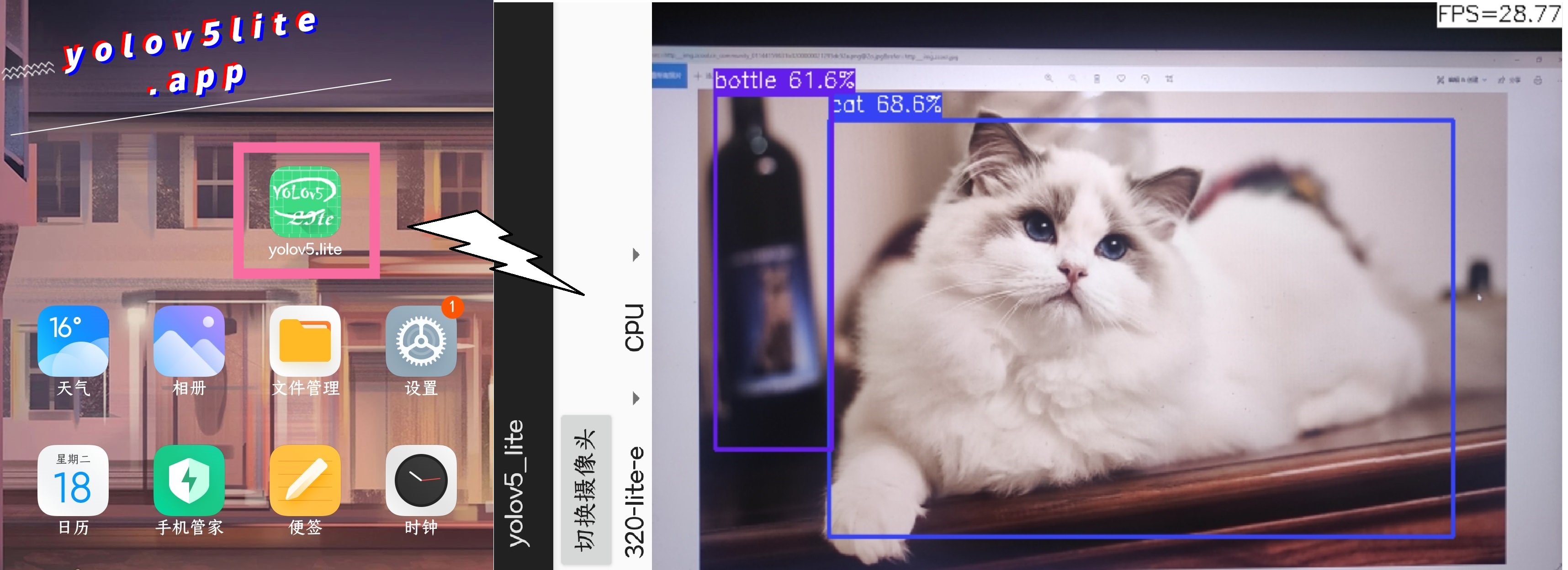

This is a Redmi phone with a Snapdragon 730G processor, running YOLOv5-Lite for detection. The performance is as follows:

Link: https://github.com/ppogg/yolov5-lite/tree/master/android_demo/ncnn-android-v5lite

android_v5Lite-s: https://drive.google.com/file/d/1ctohy68n2b9xyuqflitp-nd2kufwgaur/view?usp=sharing

android_v5Lite-g: https://drive.google.com/file/d/1fnvkwxxp_azwhi000xjiuhj_ohqoujcj/view?usp=sharing

New Android app: [link] https://pan.baidu.com/s/1prhw4fi1jq8vbopyishciq [password] pogg

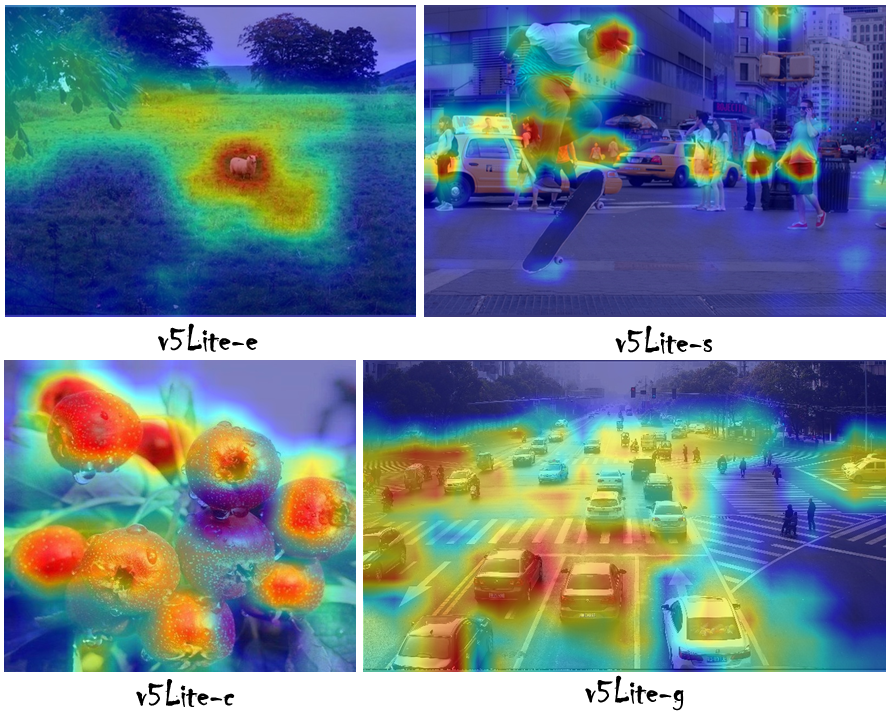

What is the YOLOv5-Lite s/e model: Zhihu link (Chinese): https://zhuanlan.zhihu.com/p/400545131

What is the YOLOv5-Lite c model: Zhihu link (Chinese): https://zhuanlan.zhihu.com/p/420737659

What is the YOLOv5-Lite g model: Zhihu link (Chinese): https://zhuanlan.zhihu.com/p/4108744403

How to deploy on ncnn with fp16 or int8: CSDN link (Chinese): https://blog.csdn.net/weixin_45829462/article/deticle/details/1197878840

How to deploy on MNN with fp16 or int8: Zhihu link (Chinese): https://zhuanlan.zhihu.com/p/672633849

How to deploy on ONNX Runtime: Zhihu link (Chinese): https://zhuanlan.zhihu.com/p/476533259 (old version)

How to deploy on TensorRT: Zhihu link (Chinese): https://zhuanlan.zhihu.com/p/478630138

How to optimize on TensorRT: Zhihu link (Chinese): https://zhuanlan.zhihu.com/p/463074494

https://github.com/ultralytics/yolov5

https://github.com/megvii-model/shufflenet-series

https://github.com/tencent/ncnn

If you use YOLOv5-Lite in your research, please cite our work and give the repository a star:

@misc{yolov5lite2021,
  title = {YOLOv5-Lite: Lighter, faster and easier to deploy},
  author = {Xiangrong Chen and Ziman Gong},
  doi = {10.5281/zenodo.5241425},
  year = {2021}
}