semantic ai

v0.0.6.1

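An open-source framework for Retrieval-Augmented Generation (RAG) that uses semantic search to retrieve the expected results and, with the help of an LLM (Large Language Model), generates human-readable conversational responses.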

The Semantic AI library documentation is available in the project docs.

Requires Python 3.10+ with asyncio.

# Using pip

$ python -m pip install semantic-ai

# Manual install

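$ python -m pip install .

Set the credentials in a .env file. Provide credentials for only one connector, one indexer, and one LLM model configuration, and leave the other fields empty.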

# Default

FILE_DOWNLOAD_DIR_PATH= # default directory name 'download_file_dir'

EXTRACTED_DIR_PATH= # default directory name 'extracted_dir'

# Connector (SharePoint, S3, GCP Bucket, GDrive, Confluence, etc.)

CONNECTOR_TYPE="connector_name" # sharepoint

SHAREPOINT_CLIENT_ID="client_id"

SHAREPOINT_CLIENT_SECRET="client_secret"

SHAREPOINT_TENANT_ID="tenant_id"

SHAREPOINT_HOST_NAME='<tenant_name>.sharepoint.com'

SHAREPOINT_SCOPE='https://graph.microsoft.com/.default'

SHAREPOINT_SITE_ID="site_id"

SHAREPOINT_DRIVE_ID="drive_id"

SHAREPOINT_FOLDER_URL="folder_url" # /My_folder/child_folder/

# Indexer

INDEXER_TYPE="<vector_db_name>" # elasticsearch, qdrant, opensearch

ELASTICSEARCH_URL="<elasticsearch_url>" # give a valid URL

ELASTICSEARCH_USER="<elasticsearch_user>" # give a valid user

ELASTICSEARCH_PASSWORD="<elasticsearch_password>" # give a valid password

ELASTICSEARCH_INDEX_NAME="<index_name>"

ELASTICSEARCH_SSL_VERIFY="<ssl_verify>" # True or False

# Qdrant

QDRANT_URL="<qdrant_url>"

QDRANT_INDEX_NAME="<index_name>"

QDRANT_API_KEY="<apikey>"

# Opensearch

OPENSEARCH_URL="<opensearch_url>"

OPENSEARCH_USER="<opensearch_user>"

OPENSEARCH_PASSWORD="<opensearch_password>"

OPENSEARCH_INDEX_NAME="<index_name>"

# LLM

LLM_MODEL="<llm_model>" # llama, openai

LLM_MODEL_NAME_OR_PATH="" # model name

OPENAI_API_KEY="<openai_api_key>" # if using openai

# SQL

SQLITE_SQL_PATH="<database_path>" # SQLite db path

# MYSQL

MYSQL_HOST="<host_name>" # localhost or IP address

MYSQL_USER="<user_name>"

MYSQL_PASSWORD="<password>"

MYSQL_DATABASE="<database_name>"

MYSQL_PORT="<port>" # default port is 3306
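Because only one indexer should be configured, it can help to check the .env file before starting a run. The following is a minimal sketch, not part of the library: `parse_env`, `missing_indexer_keys`, and the `REQUIRED_KEYS` mapping are hypothetical helpers, and the choice of which keys count as required for each indexer is an assumption based on the listing above (for example, `QDRANT_API_KEY` is treated as optional here).

```python
from typing import Dict, List

# Assumption: keys each indexer needs, inferred from the .env listing above.
REQUIRED_KEYS = {
    "elasticsearch": [
        "ELASTICSEARCH_URL",
        "ELASTICSEARCH_USER",
        "ELASTICSEARCH_PASSWORD",
        "ELASTICSEARCH_INDEX_NAME",
    ],
    "qdrant": ["QDRANT_URL", "QDRANT_INDEX_NAME"],
    "opensearch": [
        "OPENSEARCH_URL",
        "OPENSEARCH_USER",
        "OPENSEARCH_PASSWORD",
        "OPENSEARCH_INDEX_NAME",
    ],
}


def parse_env(text: str) -> Dict[str, str]:
    """Parse KEY=value lines; skips blank lines and full-line comments.

    Simplification: anything after ' #' is treated as a trailing comment,
    so values that contain ' #' inside quotes are not supported.
    """
    values: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        value = value.split(" #", 1)[0].strip()
        # Drop one pair of matching surrounding quotes, if present.
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "'\"":
            value = value[1:-1]
        values[key.strip()] = value.strip()
    return values


def missing_indexer_keys(env: Dict[str, str]) -> List[str]:
    """Return the keys that are empty or absent for the selected indexer."""
    indexer = env.get("INDEXER_TYPE", "").lower()
    if indexer not in REQUIRED_KEYS:
        return ["INDEXER_TYPE"]
    return [key for key in REQUIRED_KEYS[indexer] if not env.get(key)]
```

Calling `missing_indexer_keys(parse_env(open(".env").read()))` returns an empty list when the selected indexer looks fully configured under these assumptions.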

Method 1: Load the .env file. The .env file should contain the credentials.

%load_ext dotenv

%dotenv

%dotenv relative/or/absolute/path/to/.env

(or)

dotenv -f .env run -- python

Method 2:

from semantic_ai.config import Settings

settings = Settings()

import asyncio

import semantic_ai

await semantic_ai.download()

await semantic_ai.extract()

await semantic_ai.index()

Once the download, extraction, and indexing are complete, we can generate answers from the indexed vector database. The code is given below.

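search_obj = await semantic_ai.search()

query = ""

search = await search_obj.generate(query)

If the job runs for a long time, we can check the number of files processed, the number of files that failed, and the names of those files, which are stored in text files in the 'extract_dir_path/meta' directory.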
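To keep an eye on a long-running job, the text files in the meta directory can be tallied with a few lines of Python. This is a minimal sketch under stated assumptions: the files are assumed to have a `.txt` extension and to list one file name per line, and `count_entries` is a hypothetical helper, not a library function. The actual file names and layout are not specified here.

```python
from pathlib import Path
from typing import Dict


def count_entries(meta_dir: str) -> Dict[str, int]:
    """Return {text file name: number of non-empty lines} for meta_dir.

    Assumption: each non-empty line in a text file is one file name.
    A missing directory simply yields an empty result.
    """
    counts: Dict[str, int] = {}
    for path in sorted(Path(meta_dir).glob("*.txt")):
        with path.open(encoding="utf-8") as handle:
            counts[path.name] = sum(1 for line in handle if line.strip())
    return counts


# Example usage (the path is a placeholder for your extracted directory):
# for name, total in count_entries("extracted_dir/meta").items():
#     print(f"{name}: {total}")
```

Running the usage lines periodically while the job is active gives a rough view of progress without touching the job itself.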

Connect to the source and get the connection object. We can see this in the examples folder. Example: SharePoint connector

from semantic_ai.connectors import Sharepoint

CLIENT_ID = '<client_id>' # sharepoint client id

CLIENT_SECRET = '<client_secret>' # sharepoint client secret

TENANT_ID = '<tenant_id>' # sharepoint tenant id

SCOPE = 'https://graph.microsoft.com/.default' # scope

HOST_NAME = "<tenant_name>.sharepoint.com" # for example 'contoso.sharepoint.com'

# Sharepoint object creation

connection = Sharepoint(

    client_id=CLIENT_ID,

    client_secret=CLIENT_SECRET,

    tenant_id=TENANT_ID,

    host_name=HOST_NAME,

    scope=SCOPE

)

import asyncio

import semantic_ai

from semantic_ai.connectors import Sqlite

file_path = "<database_file_path>"

sql = Sqlite(sql_path=file_path)

from semantic_ai.connectors import Mysql

sql = Mysql(

    host="<host_name>",

    user="<user_name>",

    password="<password>",

    database="<database>",

    port=<port_number> # 3306 is the default port

)

query = ""

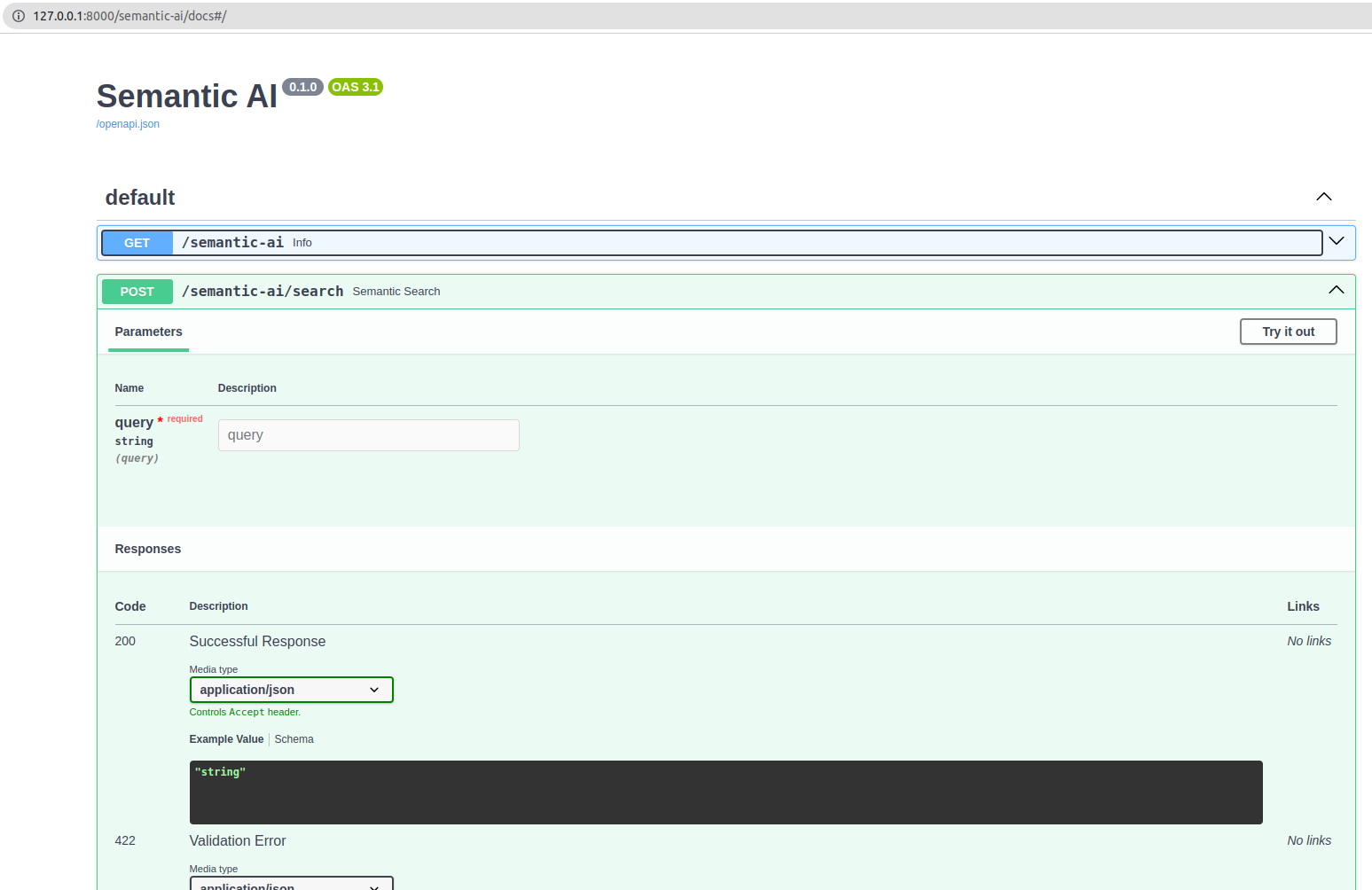

search_obj = await semantic_ai.db_search(query=query)

$ semantic_ai serve -f .env

INFO: Loading environment from '.env'

INFO: Started server process [43973]

INFO: Waiting for application startup.

INFO: Application startup complete.

INFO: Uvicorn running on http://127.0.0.1:8000 (Press CTRL+C to quit)

Open your browser at http://127.0.0.1:8000/semantic-ai

Now go to http://127.0.0.1:8000/docs. You will see the automatic interactive API documentation (provided by Swagger UI).
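The same API description can also be inspected from a script. The sketch below assumes the server publishes an OpenAPI schema at `/openapi.json`, which is common for Swagger UI setups but is not confirmed by this README; `list_operations` and `fetch_schema` are hypothetical helper names.

```python
import json
from typing import Dict, List
from urllib.request import urlopen

HTTP_METHODS = {"get", "post", "put", "patch", "delete", "head", "options"}


def list_operations(schema: Dict) -> List[str]:
    """Return 'METHOD /path' strings from an OpenAPI schema dictionary."""
    operations: List[str] = []
    for path, item in sorted(schema.get("paths", {}).items()):
        for method in sorted(item):
            # Path items can also hold non-method keys such as 'parameters'.
            if method in HTTP_METHODS:
                operations.append(f"{method.upper()} {path}")
    return operations


def fetch_schema(base_url: str = "http://127.0.0.1:8000") -> Dict:
    """Download the schema from a running server.

    Assumption: the schema lives at <base_url>/openapi.json.
    """
    with urlopen(f"{base_url}/openapi.json", timeout=10) as response:
        return json.load(response)


# Example usage, with the server from the previous step running:
# for operation in list_operations(fetch_schema()):
#     print(operation)
```

Keeping the parsing in `list_operations` separate from the network call makes it easy to check against a saved schema file when the server is not running.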