Kerangka kerja open-source untuk Sistem Pengambilan-Agung (RAG) menggunakan pencarian semantik untuk mengambil hasil yang diharapkan dan menghasilkan respons percakapan yang dapat dibaca manusia dengan bantuan LLM (model bahasa besar).

Dokumentasi dokumentasi perpustakaan AI semantik di sini

Python 3.10+ Asyncio

# Using pip

$ python -m pip install semantic-ai

# Manual install

$ python -m pip install .Atur kredensial dalam file .env. Hanya berikan kredensial untuk konektor satu, pengindeks satu dan konfigurasi model satu LLM. Bidang lain ditempatkan sebagai kosong

# Default

FILE_DOWNLOAD_DIR_PATH= # default directory name 'download_file_dir'

EXTRACTED_DIR_PATH= # default directory name 'extracted_dir'

# Connector (SharePoint, S3, GCP Bucket, GDrive, Confluence etc.,)

CONNECTOR_TYPE= " connector_name " # sharepoint

SHAREPOINT_CLIENT_ID= " client_id "

SHAREPOINT_CLIENT_SECRET= " client_secret "

SHAREPOINT_TENANT_ID= " tenant_id "

SHAREPOINT_HOST_NAME= ' <tenant_name>.sharepoint.com '

SHAREPOINT_SCOPE= ' https://graph.microsoft.com/.default '

SHAREPOINT_SITE_ID= " site_id "

SHAREPOINT_DRIVE_ID= " drive_id "

SHAREPOINT_FOLDER_URL= " folder_url " # /My_folder/child_folder/

# Indexer

INDEXER_TYPE= " <vector_db_name> " # elasticsearch, qdrant, opensearch

ELASTICSEARCH_URL= " <elasticsearch_url> " # give valid url

ELASTICSEARCH_USER= " <elasticsearch_user> " # give valid user

ELASTICSEARCH_PASSWORD= " <elasticsearch_password> " # give valid password

ELASTICSEARCH_INDEX_NAME= " <index_name> "

ELASTICSEARCH_SSL_VERIFY= " <ssl_verify> " # True or False

# Qdrant

QDRANT_URL= " <qdrant_url> "

QDRANT_INDEX_NAME= " <index_name> "

QDRANT_API_KEY= " <apikey> "

# Opensearch

OPENSEARCH_URL= " <opensearch_url> "

OPENSEARCH_USER= " <opensearch_user> "

OPENSEARCH_PASSWORD= " <opensearch_password> "

OPENSEARCH_INDEX_NAME= " <index_name> "

# LLM

LLM_MODEL= " <llm_model> " # llama, openai

LLM_MODEL_NAME_OR_PATH= " " # model name

OPENAI_API_KEY= " <openai_api_key> " # if using openai

# SQL

SQLITE_SQL_PATH= " <database_path> " # sqlit db path

# MYSQL

MYSQL_HOST= " <host_name> " # localhost or Ip Address

MYSQL_USER= " <user_name> "

MYSQL_PASSWORD= " <password> "

MYSQL_DATABASE= " <database_name> "

MYSQL_PORT= " <port> " # default port is 3306

Metode 1: Untuk memuat file .env. File Env harus memiliki kredensial

%load_ext dotenv

%dotenv

%dotenv relative/or/absolute/path/to/.env

(or)

dotenv -f .env run -- pythonMetode 2:

from semantic_ai . config import Settings

settings = Settings () import asyncio

import semantic_ai await semantic_ai . download ()

await semantic_ai . extract ()

await semantic_ai . index ()Setelah menyelesaikan unduhan, ekstrak dan indeks, kami dapat menghasilkan jawaban dari vektor db yang diindeks. Kode yang diberikan di bawah ini.

search_obj = await semantic_ai . search ()

query = ""

search = await search_obj . generate ( query )Misalkan pekerjaan berjalan untuk waktu yang lama, kita dapat menonton jumlah file yang diproses, jumlah file gagal, dan nama file yang disimpan dalam file teks yang diproses dan gagal dalam direktori 'Extracted_dir_path/Meta'.

Untuk menghubungkan sumber dan mendapatkan objek koneksi. Kita bisa melihatnya di folder Contoh. Contoh: Konektor SharePoint

from semantic_ai . connectors import Sharepoint

CLIENT_ID = '<client_id>' # sharepoint client id

CLIENT_SECRET = '<client_secret>' # sharepoint client seceret

TENANT_ID = '<tenant_id>' # sharepoint tenant id

SCOPE = 'https://graph.microsoft.com/.default' # scope

HOST_NAME = "<tenant_name>.sharepoint.com" # for example 'contoso.sharepoint.com'

# Sharepoint object creation

connection = Sharepoint (

client_id = CLIENT_ID ,

client_secret = CLIENT_SECRET ,

tenant_id = TENANT_ID ,

host_name = HOST_NAME ,

scope = SCOPE

) import asyncio

import semantic_ai from semantic_ai . connectors import Sqlite

file_path = < database_file_path >

sql = Sqlite ( sql_path = file_path ) from semantic_ai . connectors import Mysql

sql = Mysql (

host = < host_name > ,

user = < user_name > ,

password = < password > ,

database = < database > ,

port = < port_number > # 3306 is default port

) query = ""

search_obj = await semantic_ai . db_search ( query = query )$ semantic_ai serve -f .env

INFO: Loading environment from ' .env '

INFO: Started server process [43973]

INFO: Waiting for application startup.

INFO: Application startup complete.

INFO: Uvicorn running on http://127.0.0.1:8000 (Press CTRL+C to quit)Buka browser Anda di http://127.0.0.1:8000/semantic-ai

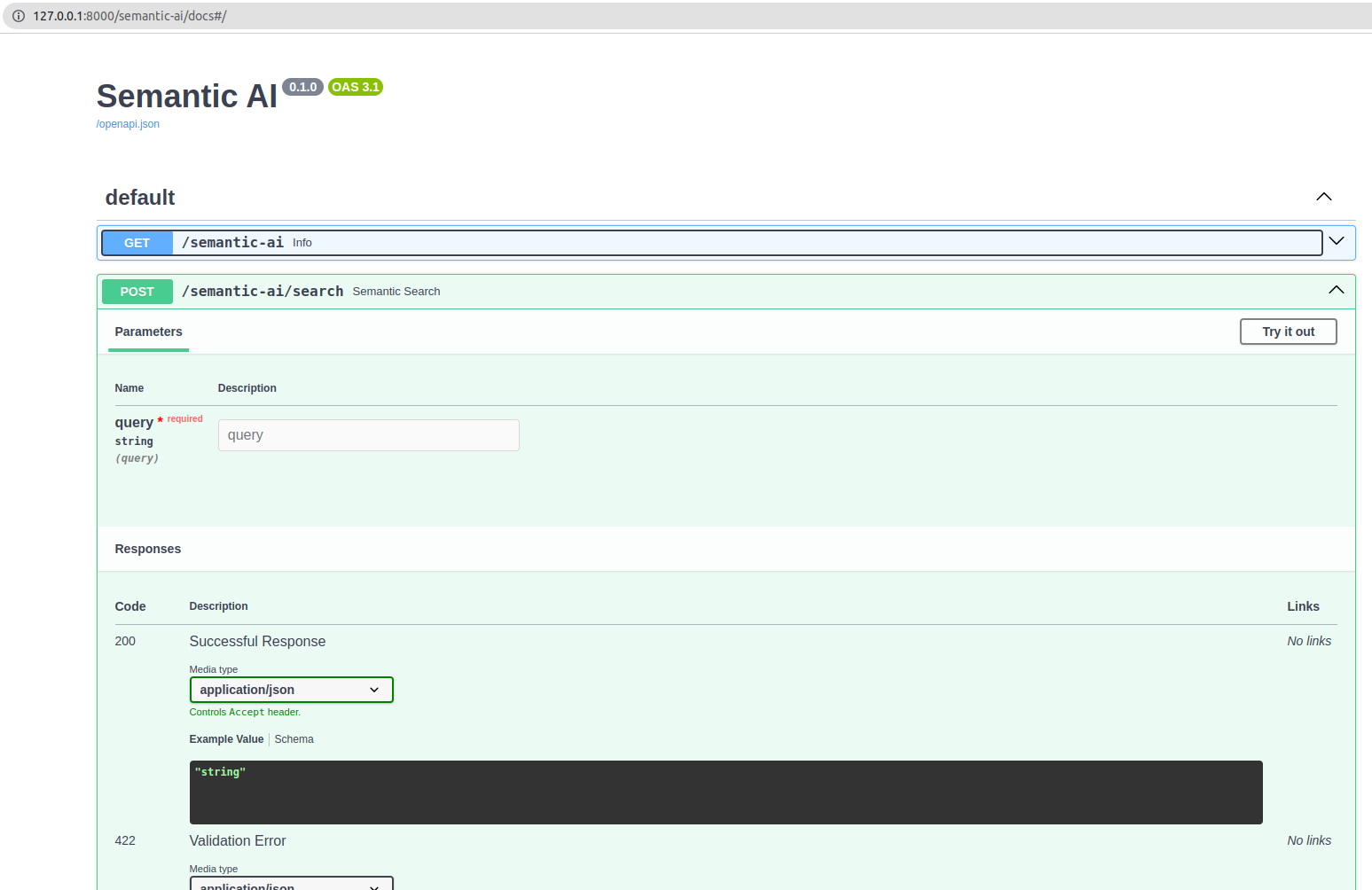

Sekarang buka http://127.0.0.1:8000/docs. Anda akan melihat dokumentasi API interaktif otomatis (disediakan oleh Swagger UI):