Kunlun Wanwei announced today that it has open-sourced Skywork R1V, its multimodal reasoning model. The company describes it as China's first open-source multimodal reasoning model from industry, and as a milestone for Chinese AI in multimodal understanding and reasoning. The model weights and the technical report are now fully public.

Imagine an AI model that can not only understand pictures but also reason step by step like a human to solve complex visual problems. This is no longer a scene from a science fiction movie; it is the capability Skywork R1V is built to deliver. The model is something like a "Sherlock Holmes of the AI world": it teases out clues from large amounts of visual information through multi-step logical analysis and then arrives at an answer. The company says Skywork R1V performs strongly on visual logic puzzles, difficult visual math problems, the analysis of scientific phenomena in images, and even assisted diagnostic inference on medical images.

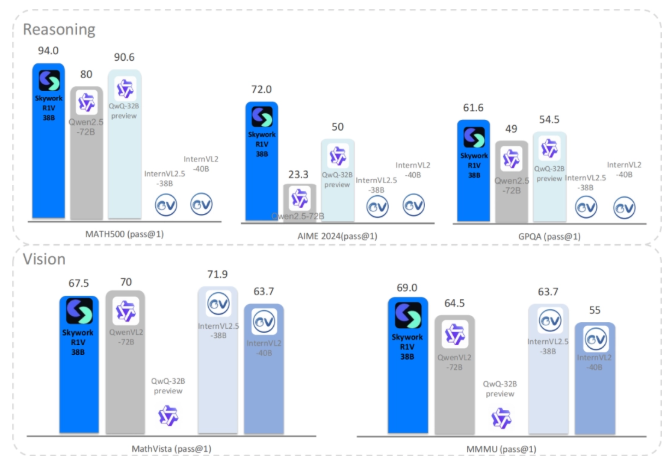

When it comes to measuring the "IQ" of an AI model, benchmark numbers are the most convincing evidence. On text reasoning, Skywork R1V scored 94.0 on MATH500 and 72.0 on AIME, two widely used benchmarks, which indicates that it handles both complex mathematical problems and rigorous logical reasoning well. More notably, it has carried that reasoning ability over into the visual domain, scoring 69 on MMMU and 67.5 on MathVista, two visual reasoning benchmarks. Kunlun Wanwei cites these results as evidence of the model's strong logical reasoning and mathematical analysis capabilities.

According to Kunlun Wanwei, three key technical innovations underpin the Skywork R1V model:

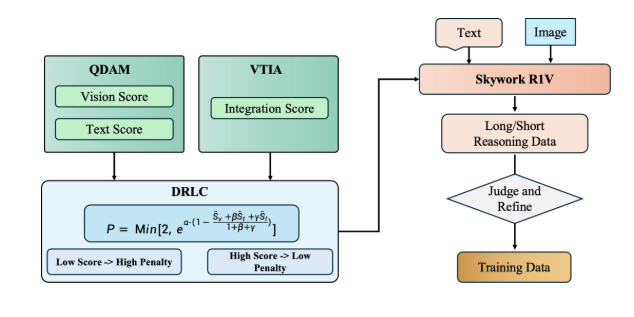

The first is the efficient multimodal transfer of text reasoning capabilities. Rather than paying the heavy cost of retraining the language model and the visual encoder, the Kunlun Wanwei team relies on Skywork-VL's visual projector to connect the two. In what the company likens to a "great shift", the model's existing text reasoning ability is carried over to visual tasks, and the company says its original text reasoning performance is not affected.
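To make the role of a visual projector more concrete, here is a minimal sketch in plain Python. It is not Skywork-VL's actual projector: the two-layer MLP shape, the ReLU nonlinearity, the toy dimensions, and all function names are assumptions chosen for illustration. The point it shows is that only the projector weights (`w1`, `w2`) sit between a frozen visual encoder and a frozen language model, mapping image patch features into the language model's embedding space.

```python
import random

def make_linear(d_in, d_out, rng):
    """Create a d_in x d_out weight matrix (hypothetical projector weights)."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(d_out)] for _ in range(d_in)]

def matmul(rows, weight):
    """Multiply a list of row vectors by a d_in x d_out weight matrix."""
    columns = list(zip(*weight))
    return [[sum(x * w for x, w in zip(row, col)) for col in columns] for row in rows]

def relu(rows):
    """Element-wise ReLU; the choice of nonlinearity is an assumption."""
    return [[max(0.0, x) for x in row] for row in rows]

def project_visual_tokens(patch_features, w1, w2):
    """Map frozen-encoder patch features into the language model's embedding space."""
    return matmul(relu(matmul(patch_features, w1)), w2)

def build_input_sequence(patch_features, text_embeddings, w1, w2):
    """Prepend projected visual tokens to the (unchanged) text embeddings."""
    return project_visual_tokens(patch_features, w1, w2) + text_embeddings

# Toy sizes (assumptions): vision features are 8-dim, LM embeddings are 4-dim.
rng = random.Random(0)
D_VISION, D_HIDDEN, D_LM = 8, 6, 4
w1 = make_linear(D_VISION, D_HIDDEN, rng)
w2 = make_linear(D_HIDDEN, D_LM, rng)

patches = [[rng.random() for _ in range(D_VISION)] for _ in range(3)]  # 3 image patches
text = [[rng.random() for _ in range(D_LM)] for _ in range(2)]         # 2 text tokens
sequence = build_input_sequence(patches, text, w1, w2)
```

In this sketch the text embeddings pass through untouched, which is one way to picture why the language model's text reasoning need not be disturbed when only the projector is trained.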

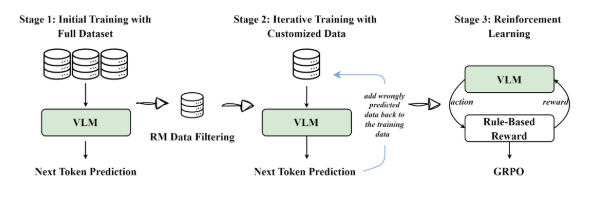

The second is multimodal hybrid training (Iterative SFT + GRPO). This training method is like feeding the model a "mixed nutritious meal". By combining iterative supervised fine-tuning (SFT) with GRPO (Group Relative Policy Optimization) reinforcement learning, the team aligns visual and text representations in stages, achieving an efficient fusion of cross-modal tasks and a marked improvement in the model's cross-modal capabilities. On the MMMU and MathVista benchmarks, the company says, Skywork R1V performs comparably to larger closed-source models.
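The core idea behind GRPO can be illustrated with a short sketch: several answers are sampled for the same prompt, each is scored, and every answer's advantage is its reward normalized against the group's mean and standard deviation. The code below covers only this scoring step, not the full Skywork R1V training recipe; the function name, the `eps` term, and the use of the population standard deviation are assumptions made for illustration.

```python
from statistics import mean, pstdev

def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each sampled answer's reward against its own group.

    rewards: scores for several answers sampled from the same prompt.
    eps: small constant (assumption) that avoids division by zero
         when every answer in the group receives the same reward.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; a sample std would also be plausible
    return [(r - mu) / (sigma + eps) for r in rewards]

# Four sampled answers to one visual math question: two correct (1.0), two wrong (0.0).
advantages = group_relative_advantages([1.0, 0.0, 0.0, 1.0])
```

Answers that beat the group average receive a positive advantage and are reinforced, while below-average answers are pushed down, so no separate value network is needed to provide a baseline.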

The third is adaptive-length chain-of-thought distillation. The Kunlun Wanwei team proposes an "intelligent brake" mechanism: the model adaptively adjusts the length of its reasoning chain according to the complexity of the visual-text input, avoiding "overthinking" and thereby improving inference efficiency while preserving reasoning accuracy. In addition, a multi-stage self-distillation strategy raises the quality of the model's generated data and of its reasoning, making it more capable on complex multimodal tasks.
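One way to picture such an adaptive-length mechanism is as a token budget that grows with input complexity, combined with a preference for the shortest correct reasoning chain when building distillation data. The sketch below is purely hypothetical: the complexity score in [0, 1], the linear budget rule, the token limits, and both function names are assumptions, and the announcement does not describe how Skywork R1V actually implements this.

```python
def reasoning_budget(complexity, min_tokens=256, max_tokens=4096):
    """Map a complexity score in [0, 1] to a reasoning-chain token budget.

    The linear rule and the default limits are illustrative assumptions.
    """
    c = min(1.0, max(0.0, complexity))  # clamp out-of-range scores
    return int(round(min_tokens + c * (max_tokens - min_tokens)))

def pick_distillation_target(candidates, budget):
    """Keep the shortest correct chain that fits the budget, or None."""
    eligible = [c for c in candidates if c["correct"] and c["num_tokens"] <= budget]
    return min(eligible, key=lambda c: c["num_tokens"]) if eligible else None

# Hypothetical sampled chains for one moderately complex question.
candidates = [
    {"num_tokens": 3000, "correct": True},   # correct but long ("overthinking")
    {"num_tokens": 900, "correct": True},    # correct and concise
    {"num_tokens": 400, "correct": False},   # short but wrong
]
budget = reasoning_budget(0.5)
target = pick_distillation_target(candidates, budget)
```

With these toy inputs the concise correct chain is selected: the long chain exceeds the budget for this complexity level and the wrong chain is filtered out regardless of its length.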

The open-sourcing of Skywork R1V gives AI researchers and developers in China and around the world a powerful new tool for multimodal reasoning. Kunlun Wanwei expects it to accelerate innovation in and adoption of multimodal AI technology, and to promote the deeper integration of AI across industries.