Recently, Xiaomi's large-model team made a breakthrough in audio reasoning by applying reinforcement learning to multimodal audio understanding tasks. Its model reached 64.5% accuracy and took first place on MMAU, a widely recognized international benchmark for audio understanding. The team credits DeepSeek-R1 as a key source of inspiration for this work.

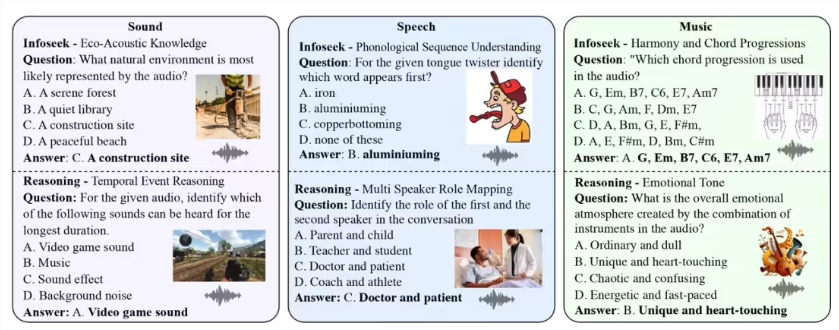

The MMAU (Massive Multi-Task Audio Understanding and Reasoning) benchmark is a key yardstick for audio reasoning. It tests how well models handle complex reasoning over diverse audio, including speech, environmental sounds and music. Human experts reach 82.23% accuracy, while the best model on the leaderboard, OpenAI's GPT-4o, reaches 57.3%. Against this backdrop, the Xiaomi team's result stands out.
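MMAU questions are multiple-choice, so the headline numbers are plain accuracy: the share of questions for which the model picks the correct option. The sketch below shows that computation under our own assumptions (the `Item` record layout and the case/whitespace normalization are hypothetical, not MMAU's official scorer) and uses the article's figures to compute the remaining gap to human experts.

```python
from dataclasses import dataclass


@dataclass
class Item:
    # Hypothetical record layout for one multiple-choice audio question.
    question: str
    choices: list[str]
    answer: str  # text of the correct option


def normalize(text: str) -> str:
    # Assumed matching rule: case-insensitive, whitespace-collapsed exact match.
    return " ".join(text.lower().split())


def accuracy(items: list[Item], predictions: list[str]) -> float:
    """Percentage of predictions that match the gold option after normalization."""
    if not items or len(items) != len(predictions):
        raise ValueError("need one prediction per item")
    correct = sum(normalize(p) == normalize(it.answer) for it, p in zip(items, predictions))
    return 100.0 * correct / len(items)


items = [
    Item("What animal is heard?", ["dog", "cat", "bird"], "dog"),
    Item("Which instrument plays the melody?", ["piano", "violin"], "violin"),
]
print(accuracy(items, ["Dog", "piano"]))  # 50.0

# Figures reported in the article, in percent.
HUMAN_EXPERTS, XIAOMI_MODEL = 82.23, 64.5
print(f"gap to human experts: {HUMAN_EXPERTS - XIAOMI_MODEL:.2f} points")  # 17.73
```

Real scorers often also accept an option letter or a substring match; the exact-match rule here is simply the strictest option.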

In its experiments, the team adopted Group Relative Policy Optimization (GRPO), the reinforcement learning method used in DeepSeek-R1. Through a trial-and-error and reward loop, GRPO lets the model improve on its own and develop human-like reflection and reasoning skills. Notably, with reinforcement learning and only 38,000 training samples, the Xiaomi team's model still reached 64.5% accuracy on MMAU, roughly 7 percentage points above GPT-4o's 57.3%.
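GRPO's core idea fits in a few lines. For each question, the policy samples a group of answers, each answer receives a reward, and its advantage is that reward standardized against the group's mean and standard deviation, so no separate value network is needed. The sketch below assumes a simple rule-based reward (1 for the correct option, 0 otherwise) and a sequence-level simplification of the clipped objective with a KL penalty toward a reference model; the function names and the `clip_eps`/`beta` defaults are illustrative, not taken from the Xiaomi code.

```python
import math
import statistics


def correctness_reward(response: str, gold: str) -> float:
    # Hypothetical rule-based reward: 1.0 for the correct option, else 0.0.
    return 1.0 if response.strip().lower() == gold.strip().lower() else 0.0


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """Standardize each reward against its own group (all answers to one question)."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std; eps avoids 0/0 when all rewards match
    return [(r - mean) / (std + eps) for r in rewards]


def grpo_objective(logp_new: list[float], logp_old: list[float], logp_ref: list[float],
                   advantages: list[float], clip_eps: float = 0.2, beta: float = 0.04) -> float:
    """Sequence-level simplification of the GRPO surrogate (to be maximized)."""
    total = 0.0
    for lp_new, lp_old, lp_ref, adv in zip(logp_new, logp_old, logp_ref, advantages):
        ratio = math.exp(lp_new - lp_old)  # pi_theta / pi_old
        clipped = min(max(ratio, 1 - clip_eps), 1 + clip_eps)
        surrogate = min(ratio * adv, clipped * adv)  # PPO-style pessimistic bound
        # KL estimator used by GRPO: pi_ref/pi - log(pi_ref/pi) - 1, always >= 0.
        log_ref_ratio = lp_ref - lp_new
        kl = math.exp(log_ref_ratio) - log_ref_ratio - 1
        total += surrogate - beta * kl
    return total / len(advantages)


# One question, a group of four sampled answers.
responses = ["Dog barking", "car horn", "dog barking", "rain"]
rewards = [correctness_reward(r, "dog barking") for r in responses]
advantages = group_relative_advantages(rewards)
print(rewards)                             # [1.0, 0.0, 1.0, 0.0]
print([round(a, 3) for a in advantages])   # [1.0, -1.0, 1.0, -1.0]
```

With identical log-probabilities the ratio is 1 and the KL term is 0, so the objective reduces to the mean advantage, which is 0 by construction. Standardizing within the group is what removes the critic: a correct answer is rewarded only relative to its siblings for the same audio clip.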

The experiments also found that the conventional approach of having the model output an explicit chain of thought actually lowered accuracy, suggesting an advantage for implicit reasoning during training. Despite these results, the team acknowledges that its model still falls short of human experts (82.23%) and says it will keep refining its reinforcement learning strategy to further improve reasoning.
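The explicit-versus-implicit comparison comes down to what the model is asked to emit before its answer. The sketch below shows two hypothetical prompt templates, one requesting a visible thinking section and one requesting only the final answer, plus an answer extractor a rule-based reward could use in either setting. The `<think>`/`<answer>` tag convention follows the DeepSeek-R1 style and is an assumption here; the exact prompts the Xiaomi team used should be checked in their repository.

```python
import re
from typing import Optional

# Hypothetical templates; placeholders are filled with str.format.
IMPLICIT_TEMPLATE = (
    "{question} Choose one option from: {options}. "
    "Output only the final answer in <answer> </answer>."
)
EXPLICIT_TEMPLATE = (
    "{question} Choose one option from: {options}. "
    "First reason step by step in <think> </think>, "
    "then output the final answer in <answer> </answer>."
)

# Non-greedy match so only the first <answer> block is captured; DOTALL spans newlines.
ANSWER_RE = re.compile(r"<answer>\s*(.*?)\s*</answer>", re.DOTALL)


def build_prompt(question: str, options: list[str], explicit: bool) -> str:
    """Fill the explicit (visible reasoning) or implicit (answer-only) template."""
    template = EXPLICIT_TEMPLATE if explicit else IMPLICIT_TEMPLATE
    return template.format(question=question, options="; ".join(options))


def extract_answer(response: str) -> Optional[str]:
    """Return the text inside the first <answer> tag, or None if it is missing."""
    match = ANSWER_RE.search(response)
    return match.group(1) if match else None


print(build_prompt("What is heard?", ["dog", "cat"], explicit=False))
print(extract_answer("<think>a bark, so a dog</think><answer> dog </answer>"))  # dog
```

Routing both settings through the same `extract_answer` keeps scoring identical, so any accuracy difference reflects the output format rather than the grader.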

Beyond demonstrating the potential of reinforcement learning for audio understanding, this research paves the way for a future era of intelligent hearing. As machines learn not only to "hear" sounds but also to "understand" the causal logic behind them, intelligent audio technology will see new development opportunities. The Xiaomi team is also open-sourcing its training code and model parameters to support further research and exchange across academia and industry.

Training code: https://github.com/xiaomi-research/r1-aqa

Model parameters: https://huggingface.co/mispeech/r1-aqa

Technical report: https://arxiv.org/abs/2503.11197

Interactive demo: https://120.48.108.147:7860/