ByteDance recently announced that it has open-sourced AIBrix, its latest inference system, marking another step forward for the company in artificial intelligence. AIBrix is designed for the vLLM inference engine and gives enterprises a scalable, cost-effective inference control plane to meet growing AI demand. The release reflects ByteDance's accumulated experience in AI technology and offers enterprises a more efficient way to serve AI models.

The launch of AIBrix marks a new stage in the development of AI inference infrastructure. The project team hopes the open source project will lay a solid foundation for building scalable inference infrastructure. The system provides a complete set of cloud-native solutions for optimizing the deployment, management, and scaling of large language models. In particular, it is tailored to enterprise-level requirements so that users get more efficient service. Beyond improving the operating efficiency of AI models, it also gives enterprises more flexibility in how they apply AI.

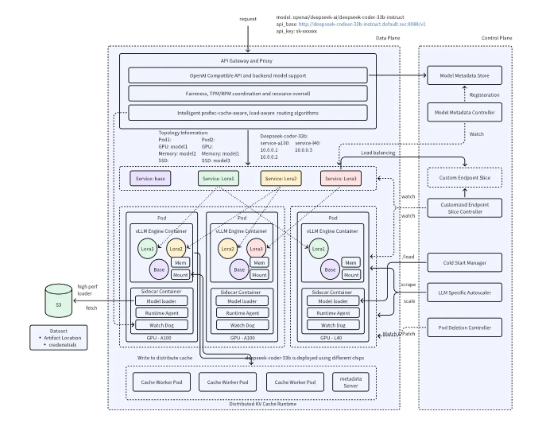

In terms of functionality, the first version of AIBrix focuses on several core features. The first is high-density LoRA (Low-Rank Adaptation) management, which simplifies support for lightweight model adapters and makes models easier for users to manage. Second, AIBrix provides LLM gateway and routing capabilities that manage and distribute traffic across multiple models and replicas, so that requests reach the target model quickly and accurately. In addition, an autoscaler tailored to LLM applications dynamically adjusts inference resources according to real-time demand, improving system flexibility and response speed. Together, these features give AIBrix significant advantages in AI inference.
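To make the routing and automatic scaling ideas above concrete, here is a minimal Python sketch. It is not AIBrix's actual API or algorithm: the `Replica` class, the `pick_replica` and `desired_replicas` functions, the model names, and the least-loaded and target-tracking policies are all illustrative assumptions chosen for simplicity.

```python
import math
from dataclasses import dataclass


@dataclass
class Replica:
    """Hypothetical record for one model replica behind a gateway."""
    name: str
    model: str
    in_flight: int = 0  # requests currently being served


def pick_replica(replicas, model):
    """Route to the least-loaded replica that serves the requested model."""
    candidates = [r for r in replicas if r.model == model]
    if not candidates:
        raise LookupError(f"no replica serves model {model!r}")
    return min(candidates, key=lambda r: r.in_flight)


def desired_replicas(total_in_flight, target_per_replica,
                     min_replicas=1, max_replicas=8):
    """Target tracking: enough replicas to keep per-replica load at the target,
    clamped to the allowed range."""
    needed = math.ceil(total_in_flight / target_per_replica)
    return max(min_replicas, min(max_replicas, needed))


# Example usage with made-up replicas and load figures.
replicas = [
    Replica("r1", "model-a", in_flight=3),
    Replica("r2", "model-a", in_flight=1),
    Replica("r3", "model-b", in_flight=0),
]
chosen = pick_replica(replicas, "model-a")
scale_to = desired_replicas(total_in_flight=25, target_per_replica=10)
```

In this sketch the gateway only considers replicas that host the requested model, and the scaling function reacts to current load alone. A production system would likely weigh additional signals, such as cache state or latency, which are omitted here.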

ByteDance's AIBrix team said it plans to keep evolving and optimizing the system by extending the distributed KV cache, introducing traditional resource management principles, and improving computing efficiency based on performance analysis. These changes are intended to further improve AIBrix's performance and open up more possibilities for enterprise AI applications. Going forward, AIBrix is expected to become an important tool for AI inference and to help drive wider adoption of AI technology.