A recent study led by Yann LeCun, Meta's chief AI scientist, sheds light on how artificial intelligence can develop a basic understanding of physics by watching videos. The study, conducted by researchers from Meta FAIR, the University of Paris and EHESS, shows that AI systems can acquire intuitive physics knowledge through self-supervised learning, without any preset rules.

The research team used the Video Joint Embedding Predictive Architecture (V-JEPA), an approach that, according to the researchers, processes information in a way closer to the human brain than generative AI models such as OpenAI's Sora do. Rather than trying to generate perfect pixel-level predictions, V-JEPA makes its predictions in an abstract representation space. Learning this way allows the system to pick up basic physical concepts.
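To make the pixel-versus-representation distinction concrete, here is a toy Python sketch. It is not V-JEPA's code: the average-pooling `encode` function, the 8-pixel "frames" and the L1 distance are illustrative assumptions, and the real model's learned encoder, masking and predictor network are left out. It only shows why a loss measured on embeddings can ignore unpredictable pixel-level detail that a pixel loss would penalize.

```python
from typing import List

Frame = List[float]  # toy assumption: a flattened grayscale frame


def encode(frame: Frame, patch: int = 4) -> List[float]:
    """Hypothetical toy encoder: average-pool non-overlapping patches."""
    return [sum(frame[i:i + patch]) / patch for i in range(0, len(frame), patch)]


def l1(a: List[float], b: List[float]) -> float:
    """Mean absolute difference between two equal-length vectors."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def pixel_loss(predicted_frame: Frame, target_frame: Frame) -> float:
    """Generative-style objective: compare raw pixel values."""
    return l1(predicted_frame, target_frame)


def latent_loss(predicted_embedding: List[float], target_frame: Frame) -> float:
    """JEPA-style objective: compare against the target frame's embedding."""
    return l1(predicted_embedding, encode(target_frame))


base = [1.0] * 8
# Target = base plus zero-mean "flicker" inside each 4-pixel patch.
target = [1.5, 0.5, 1.5, 0.5, 1.5, 0.5, 1.5, 0.5]

print(pixel_loss(base, target))           # 0.5
print(latent_loss(encode(base), target))  # 0.0
```

The target differs from the prediction only by flicker that averages out within each patch, so the pixel loss is 0.5 while the representation-space loss is 0.0: the embedding-level objective does not waste effort on detail that cannot be predicted.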

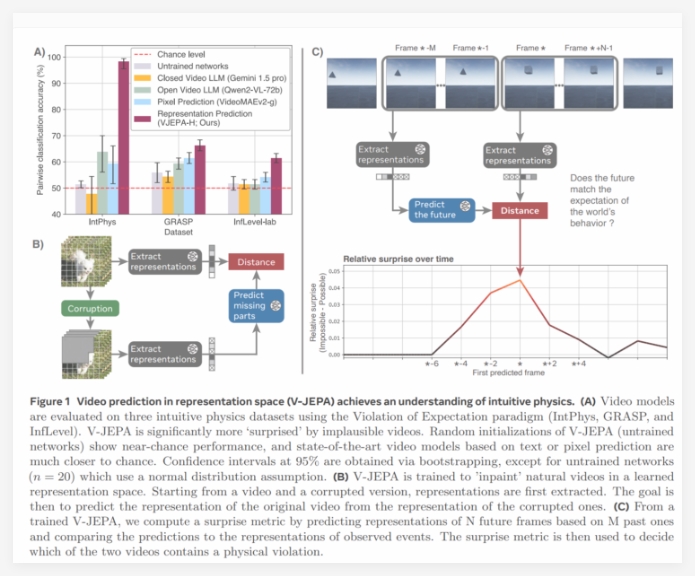

In the study, the team borrowed the “violation-of-expectation” paradigm from developmental psychology, which was originally used to test infants' understanding of the physical world. The researchers show the AI two nearly identical scenarios, one physically possible and the other physically impossible (for example, a ball passing through a wall), and evaluate its physical understanding by measuring how it responds to the violation.
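The violation-of-expectation idea can be sketched as comparing the model's "surprise", meaning its prediction error, on the two videos of a pair. In the minimal Python sketch below, each frame is reduced to a single number (a ball's position) and the hypothetical `predict_next` function extrapolates at constant velocity; both stand in for V-JEPA's learned encoder and predictor, and scoring a video by its peak surprise is one assumed choice among several.

```python
from typing import List, Sequence


def predict_next(history: Sequence[float]) -> float:
    """Hypothetical stand-in predictor: constant-velocity extrapolation."""
    return 2 * history[-1] - history[-2]


def surprise_per_frame(embeddings: Sequence[float]) -> List[float]:
    """Prediction error for every frame that has two frames of context."""
    return [
        abs(predict_next(embeddings[:t]) - embeddings[t])
        for t in range(2, len(embeddings))
    ]


def video_surprise(embeddings: Sequence[float]) -> float:
    """Assumed scoring rule: the peak surprise over the whole clip."""
    return max(surprise_per_frame(embeddings))


def passes_voe_test(possible: Sequence[float], impossible: Sequence[float]) -> bool:
    """The model 'passes' a pair if the impossible clip surprises it more."""
    return video_surprise(impossible) > video_surprise(possible)


possible = [0.0, 1.0, 2.0, 3.0, 4.0]    # ball moves smoothly
impossible = [0.0, 1.0, 2.0, 7.0, 8.0]  # ball jumps ahead (continuity violation)

print(video_surprise(possible))               # 0.0
print(video_surprise(impossible))             # 4.0
print(passes_voe_test(possible, impossible))  # True
```

Because the toy predictor expects smooth motion, the sudden jump in `impossible` produces a large error, which is the kind of signal the evaluation treats as evidence that the model "noticed" the violation.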

V-JEPA was tested on three datasets: IntPhys (basic physical concepts), GRASP (complex interactions), and InfLevel (realistic environments). It performed particularly well on object permanence, continuity, and shape constancy, whereas multimodal large language models such as Gemini 1.5 Pro and Qwen2-VL-72B performed close to random chance.
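One common way to read "close to random chance" in a paired setting like this is pairwise accuracy: the fraction of pairs in which the impossible video receives the higher surprise score, where random guessing lands near 50%. The sketch below assumes each pair has already been reduced to two scores; the function name and the tie-handling rule (ties count as wrong) are illustrative choices, not the study's exact protocol.

```python
import random
from typing import Iterable, Tuple


def pairwise_accuracy(pairs: Iterable[Tuple[float, float]]) -> float:
    """Fraction of (possible, impossible) score pairs ranked correctly.

    A pair counts as correct only if the impossible clip scores strictly
    higher; ties count as incorrect (an assumed convention).
    """
    pairs = list(pairs)
    correct = sum(1 for s_possible, s_impossible in pairs if s_impossible > s_possible)
    return correct / len(pairs)


scores = [(0.1, 0.9), (0.2, 0.1), (0.3, 0.8), (0.5, 0.5)]
print(pairwise_accuracy(scores))  # 0.5

# A model whose scores carry no information hovers around chance (about 0.5).
rng = random.Random(0)
random_pairs = [(rng.random(), rng.random()) for _ in range(10_000)]
print(pairwise_accuracy(random_pairs))
```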

V-JEPA's learning efficiency is also striking. The system can master basic physical concepts after watching just 128 hours of video, and even a small model with 115 million parameters produced strong results. The study shows that V-JEPA can pick up motion patterns and flag physically implausible events with high accuracy, laying groundwork for AI systems that genuinely understand how the world works.

The study challenges a fundamental assumption in much of AI research: that systems need built-in “core knowledge” to understand the laws of physics. The V-JEPA results suggest that AI can acquire this knowledge through observation, much as infants, primates and even young birds come to understand physics. The work is part of Meta's long-term research on JEPA architectures, which aims to build comprehensive world models that give autonomous AI systems a deeper understanding of their environment.

In short, the research shows that AI can learn physics from video without preset rules. V-JEPA outperforms multimodal large language models at understanding physics and learns more efficiently, and Meta is pushing AI development toward models with a more comprehensive understanding of their environment.