AI visual grounding, the task of locating a described target in an image, has long faced an accuracy bottleneck. Traditional algorithms are "nearsighted": they can only roughly box in a target and struggle to capture its details. Researchers at Illinois Institute of Technology, Cisco Research, and the University of Central Florida developed the SegVG framework to address this problem and give AI "high-definition vision." The core of SegVG is pixel-level detail: it converts bounding box information into segmentation signals, like fitting the AI with "high-definition glasses" so that it can clearly make out every pixel of the target.

In AI vision, target localization has always been a hard problem. Traditional algorithms are "nearsighted": they can only draw a rough "box" around the target and cannot see the details inside it. It is like describing a person to a friend by giving only their approximate height and build. It would be a surprise if your friend managed to find the right person!

To solve this problem, a team of researchers from Illinois Institute of Technology, Cisco Research, and the University of Central Florida has developed a new visual grounding framework called SegVG, which aims to cure AI of its "nearsightedness" for good!

The core secret of SegVG is "pixel-level" detail! Traditional algorithms train the AI using only bounding box information, which is like showing it nothing but a blurry silhouette. SegVG converts the bounding box information into segmentation signals, which is like putting "high-definition glasses" on the AI so that it can see every pixel of the target clearly!
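To make the idea of turning a box into a segmentation signal concrete, here is a minimal sketch in plain Python. It is not taken from the SegVG code: the function name `box_to_mask`, the `(x_min, y_min, x_max, y_max)` box layout, and the exclusive treatment of the max edges are all assumptions made for illustration. It simply marks every pixel inside the box as 1 and every pixel outside as 0, so that each pixel gets its own training label.

```python
def box_to_mask(box, height, width):
    """Build a height x width binary mask: 1 inside the box, 0 outside.

    `box` is (x_min, y_min, x_max, y_max) in pixel coordinates.
    Assumption for this sketch: the max edges are exclusive.
    """
    x_min, y_min, x_max, y_max = box
    # Clamp the box to the image so out-of-range boxes do not fail.
    x_min, x_max = max(0, x_min), min(width, x_max)
    y_min, y_max = max(0, y_min), min(height, y_max)
    return [
        [1 if (x_min <= x < x_max and y_min <= y < y_max) else 0
         for x in range(width)]
        for y in range(height)
    ]


# A 6x4 image with a box covering columns 1-3 and rows 1-2.
mask = box_to_mask((1, 1, 4, 3), height=4, width=6)
for row in mask:
    print(row)
```

A single box yields only four numbers to regress, while the mask above yields one label per pixel, which is the denser supervision the paragraph describes.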

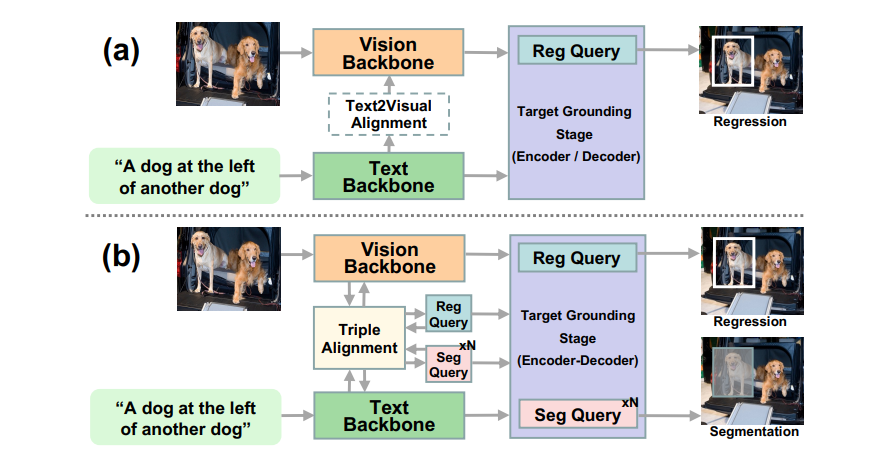

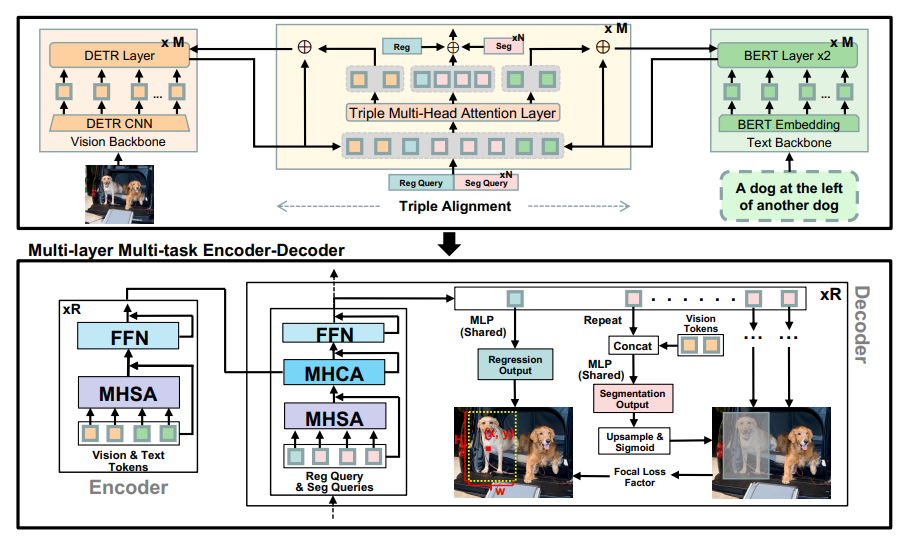

Specifically, SegVG adopts a "Multi-layer Multi-task Encoder-Decoder". The name sounds complicated, but you can think of it as an ultra-precise "microscope" that contains a query for regression and multiple queries for segmentation. Put simply, it uses different "lenses" to perform the bounding box regression and segmentation tasks, examines the target repeatedly across its layers, and extracts more refined information each time.
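One way to picture "several lenses, applied layer after layer" is as a training loss that sums a regression term and a segmentation term over every decoder layer. The sketch below is a simplified illustration, not the paper's actual objective: the L1 box loss, the per-pixel binary cross-entropy, the `seg_weight` parameter, and the dictionary layout of `layer_outputs` are all assumptions chosen to keep the example short.

```python
import math


def l1_box_loss(pred_box, gt_box):
    """Mean absolute error over the four box coordinates."""
    return sum(abs(p - g) for p, g in zip(pred_box, gt_box)) / 4


def bce_mask_loss(pred_probs, gt_mask, eps=1e-7):
    """Mean per-pixel binary cross-entropy between probabilities and a 0/1 mask."""
    total, count = 0.0, 0
    for prob_row, gt_row in zip(pred_probs, gt_mask):
        for p, g in zip(prob_row, gt_row):
            p = min(max(p, eps), 1 - eps)  # avoid log(0)
            total += -(g * math.log(p) + (1 - g) * math.log(1 - p))
            count += 1
    return total / count


def multitask_loss(layer_outputs, gt_box, gt_mask, seg_weight=1.0):
    """Sum regression and segmentation losses over all decoder layers.

    Each entry of `layer_outputs` is assumed to hold one predicted box (from
    the regression query) and a list of masks (one per segmentation query).
    """
    total = 0.0
    for out in layer_outputs:
        reg = l1_box_loss(out["box"], gt_box)
        seg = sum(bce_mask_loss(m, gt_mask) for m in out["masks"]) / len(out["masks"])
        total += reg + seg_weight * seg
    return total


gt_box = (0.25, 0.25, 0.75, 0.75)
gt_mask = [[0, 1], [0, 1]]
# Two decoder layers, one segmentation query each (toy numbers).
good = [{"box": gt_box, "masks": [[[0.0, 1.0], [0.0, 1.0]]]}] * 2
bad = [{"box": (0.0, 0.0, 1.0, 1.0), "masks": [[[0.5, 0.5], [0.5, 0.5]]]}] * 2
loss_good = multitask_loss(good, gt_box, gt_mask)
loss_bad = multitask_loss(bad, gt_box, gt_mask)
print(loss_good < loss_bad)
```

Because every layer contributes both terms, each pass over the target is pushed to get the box and the pixels right, not only the final one.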

Even more impressive, SegVG also introduces a "Triple Alignment module", which works like a "translator" for the AI, built specifically to resolve the "language barrier" between the model's pre-trained parameters and the query embeddings. Through a triple attention mechanism, this "translator" brings the query, text, and visual features onto the same channel, so that the AI can better understand the target information.
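As a rough picture of what "bringing three kinds of features onto the same channel" can look like, the sketch below runs plain scaled dot-product self-attention over the concatenation of query, text, and visual tokens, so every token is updated using all three groups. This is an assumption-heavy illustration, not the module from the SegVG repository: the function name `triple_attention` is hypothetical, there is a single head, and the learned projection matrices a real model would use are omitted.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def triple_attention(query_tokens, text_tokens, visual_tokens):
    """Update all three token groups with one joint self-attention pass.

    Simplification for this sketch: no learned projections, single head.
    """
    tokens = query_tokens + text_tokens + visual_tokens
    dim = len(tokens[0])
    scale = 1.0 / math.sqrt(dim)
    updated = []
    for token in tokens:
        # Each token attends to every token from all three groups.
        weights = softmax([dot(token, other) * scale for other in tokens])
        updated.append(
            [sum(w * other[d] for w, other in zip(weights, tokens)) for d in range(dim)]
        )
    n_q, n_t = len(query_tokens), len(text_tokens)
    return updated[:n_q], updated[n_q:n_q + n_t], updated[n_q + n_t:]


queries = [[1.0, 0.0]]
text = [[0.0, 1.0], [1.0, 1.0]]
visual = [[0.5, 0.5], [1.0, 0.0], [0.0, 0.0]]
q_out, t_out, v_out = triple_attention(queries, text, visual)
print(len(q_out), len(t_out), len(v_out))
```

After the pass, each query, text, and visual token is a weighted mix of tokens from all three groups, which is the sense in which they end up sharing one space.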

How well does SegVG work? The researchers ran experiments on five commonly used datasets and found that SegVG outperforms a number of previous algorithms! On the two notoriously difficult datasets, RefCOCO+ and RefCOCOg, SegVG achieved breakthrough results!

Beyond precise localization, SegVG can also output a confidence score for the model's prediction. Put simply, the AI tells you how sure it is of its own judgment. This matters a great deal in practical applications. For example, if you use AI to analyze medical images and its confidence is low, the case needs manual review to avoid a misdiagnosis.
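The manual-review idea can be sketched as a simple routing rule. Everything here is hypothetical: the `route_predictions` helper, the dictionary fields, the example scores, and the 0.8 threshold are illustrative values, not anything prescribed by SegVG, and a real threshold would have to be chosen for the application at hand.

```python
def route_predictions(predictions, threshold=0.8):
    """Split predictions into auto-accepted and needs-manual-review lists.

    `threshold` is an illustrative value, not one recommended by SegVG.
    """
    accepted, needs_review = [], []
    for pred in predictions:
        if pred["confidence"] >= threshold:
            accepted.append(pred)
        else:
            needs_review.append(pred)
    return accepted, needs_review


# Made-up predictions for illustration only.
predictions = [
    {"image_id": "scan_001", "confidence": 0.93},
    {"image_id": "scan_002", "confidence": 0.41},
    {"image_id": "scan_003", "confidence": 0.80},
]
accepted, needs_review = route_predictions(predictions)
print([p["image_id"] for p in accepted])
print([p["image_id"] for p in needs_review])
```

Only the low-confidence case is sent to a human, so reviewers spend their time where the model itself is least sure.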

The open-sourcing of SegVG is a major boon for the entire field of AI vision! I believe more and more developers and researchers will join the SegVG camp and work together to advance AI vision technology.

Paper address: https://arxiv.org/pdf/2407.03200

Code link: https://github.com/WeitaiKang/SegVG/tree/main

The arrival of SegVG marks significant progress in AI visual grounding. Its high-precision localization, confidence scores, and open-source availability should bring innovation to many fields, and its future applications and development are worth watching.