The rapid development of large language models has brought many conveniences, but it has also raised a challenge: response speed. In scenarios that require frequent iteration, such as document editing and code refactoring, latency can seriously degrade the user experience. To address this, OpenAI introduced the "Predicted Outputs" feature, which uses speculative decoding to significantly speed up responses from GPT-4o and GPT-4o-mini, improving the user experience and reducing infrastructure costs.

The emergence of large language models such as GPT-4o and GPT-4o-mini has driven major advances in natural language processing. These models can generate high-quality responses, rewrite documents, and increase productivity across many applications. However, one of their main challenges is response latency. When users update a blog post or optimize code, and especially in tasks that require multiple iterations such as document revision or code refactoring, slow responses can seriously hurt the experience and often leave users frustrated.

To address this challenge, OpenAI introduced the "Predicted Outputs" feature, which significantly reduces the latency of GPT-4o and GPT-4o-mini by letting developers supply a reference string: a prediction of what the output is likely to contain. The model uses this prediction as a starting point and can skip generating the parts that are already known, such as the unchanged portions of a file being edited.
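A minimal sketch of how a reference string might be supplied, assuming the official `openai` Python SDK in a version recent enough to accept the `prediction` argument of Chat Completions, and using `gpt-4o-mini` only as an example model. The helper names `build_predicted_request`, `send_request`, and `summarize_prediction_usage` are hypothetical. The `prediction={"type": "content", "content": ...}` shape and the `accepted_prediction_tokens` / `rejected_prediction_tokens` usage fields follow OpenAI's documentation, which should be checked before relying on this sketch.

```python
from typing import Any, Dict


# Hypothetical helper names; only the `prediction` parameter and the usage
# fields read below come from the Chat Completions API.
def build_predicted_request(instruction: str, original_text: str,
                            model: str = "gpt-4o-mini") -> Dict[str, Any]:
    """Build Chat Completions arguments that pass the current text as a prediction.

    Most of the expected output repeats `original_text`, so it is sent both
    in the prompt and as the `prediction` reference string.
    """
    return {
        "model": model,
        "messages": [
            {"role": "user", "content": instruction},
            {"role": "user", "content": original_text},
        ],
        # Reference string the model can reuse instead of generating it token by token.
        "prediction": {"type": "content", "content": original_text},
    }


def send_request(kwargs: Dict[str, Any]) -> Any:
    """Send the request with the `openai` SDK (reads OPENAI_API_KEY from the environment)."""
    from openai import OpenAI  # imported lazily so the helpers above work without the SDK
    client = OpenAI()
    return client.chat.completions.create(**kwargs)


def summarize_prediction_usage(usage: Any) -> Dict[str, int]:
    """Report how many predicted tokens the model accepted vs. rejected."""
    details = usage.completion_tokens_details
    return {
        "accepted": details.accepted_prediction_tokens or 0,
        "rejected": details.rejected_prediction_tokens or 0,
    }
```

For example, `send_request(build_predicted_request("Rename the variable x to count.", source_code))` would send a refactoring request, and passing the response's `usage` to `summarize_prediction_usage` shows how much of the prediction the model reused. A low accepted count means the prediction matched the final output poorly; OpenAI's guide notes that rejected prediction tokens are still billed as completion tokens.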

By cutting the number of sequential generation steps, this speculative decoding approach can make responses up to five times faster, making GPT-4o better suited to near-real-time tasks such as document updates, code editing, and other work that requires generating text repeatedly. The improvement particularly benefits developers, content creators, and other professionals who need rapid updates and less waiting.

The mechanism behind Predicted Outputs is speculative decoding, a clever approach that lets the model skip over text that is already known or can be anticipated.

Imagine you are updating a document that needs only a few small edits. Without a prediction, a GPT model generates the text token by token, running a separate computation step for each token, which can be very time-consuming. With speculative decoding, when part of the output can be predicted from the supplied reference string, the model can check those predicted tokens in bulk, accept the ones that match, and spend generation time only on the parts that actually change.
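The idea above can be illustrated with a toy simulation. This is not OpenAI's implementation, which the article does not describe; it is a minimal sketch assuming whitespace-separated words as "tokens", a deterministic model represented by a hypothetical `next_token` function, and a draft that stays aligned position by position with the output (true for same-length substitutions). Each loop iteration stands for one model pass that checks several predicted tokens at once: matching tokens are accepted, and the first mismatch is replaced by the model's own token.

```python
from typing import Callable, List, Sequence, Tuple

Token = str
# Hypothetical "model": maps the tokens produced so far to the next token.
NextToken = Callable[[Sequence[Token]], Token]


def greedy_decode(next_token: NextToken, length: int) -> Tuple[List[Token], int]:
    """Baseline: one sequential model pass per output token."""
    out: List[Token] = []
    for _ in range(length):
        out.append(next_token(out))
    return out, length


def speculative_decode(next_token: NextToken, prediction: Sequence[Token],
                       length: int, window: int = 4) -> Tuple[List[Token], int]:
    """Toy speculative decoding that uses a predicted text as the draft.

    Each while-loop iteration stands for ONE model pass that checks up to
    `window` draft tokens (a real model scores them in parallel).
    Returns the output tokens and the number of passes used.
    """
    out: List[Token] = []
    passes = 0
    while len(out) < length:
        passes += 1
        # Simplifying assumption: the draft stays position-aligned with the output.
        draft = prediction[len(out):len(out) + window]
        for tok in draft:
            if len(out) >= length:
                break
            expected = next_token(out)
            if tok == expected:
                out.append(tok)  # draft token accepted
            else:
                out.append(expected)  # first mismatch: keep the model's token, end this pass
                break
        else:
            # Every draft token matched (or no draft was left),
            # so the same pass also yields one fresh token.
            if len(out) < length:
                out.append(next_token(out))
    return out, passes
```

With `target = "def add ( a , b ) : return a + b".split()`, the old version `"def add ( x , y ) : return x + y".split()` as the prediction, and `oracle = lambda prefix: target[len(prefix)]`, `greedy_decode(oracle, 12)` needs 12 passes while `speculative_decode(oracle, old, 12)` produces the same 12 tokens in 4 passes. The output is always identical to plain greedy decoding; only the number of sequential passes changes. Real systems verify drafts against the model's probability distribution and must realign after insertions or deletions, which this sketch ignores.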

This mechanism significantly reduces latency, making it possible to iterate quickly on previous responses. Predicted Outputs is especially effective in fast-turnaround scenarios such as real-time document collaboration, quick code refactoring, or instant article updates. The feature not only makes interactions with GPT-4o more efficient but also reduces the load on infrastructure, thereby lowering costs.

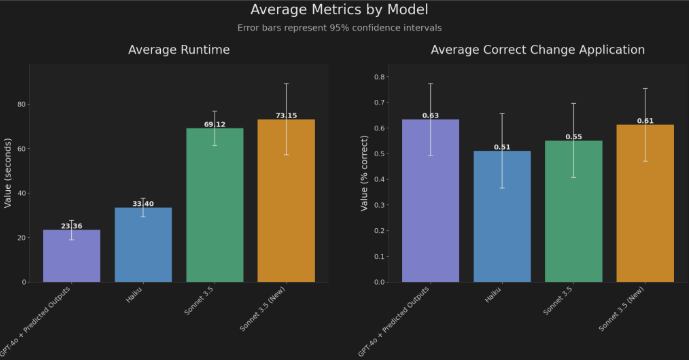

OpenAI's test results show that GPT-4o performs significantly better on latency-sensitive tasks, with responses up to five times faster in common scenarios. By reducing latency, Predicted Outputs not only saves time but also makes GPT-4o and GPT-4o-mini more accessible to a wider range of users, including professional developers, writers, and educators.

OpenAI's Predicted Outputs feature marks an important step toward overcoming latency, a major limitation of language models. By adopting speculative decoding, it significantly speeds up tasks such as document editing, content iteration, and code refactoring. The shorter response times noticeably change the user experience and help keep GPT-4o a leader in practical applications.

Official documentation: https://platform.openai.com/docs/guides/latency-optimization#use-predicted-outputs

Key points:

Predicted Outputs significantly reduces response latency and speeds up processing by letting users provide reference strings.

The feature can make responses up to five times faster in tasks such as document editing and code refactoring.

Predicted Outputs gives developers and content creators more efficient workflows while reducing the load on infrastructure.

In short, the launch of Predicted Outputs effectively addresses the response latency of large language models, greatly improving user experience and productivity and laying a solid foundation for the widespread use of GPT-4o and GPT-4o-mini in practical applications. This innovation from OpenAI will undoubtedly drive further progress in natural language processing.