Grok, the chatbot on the X platform, was recently found to have spread a large amount of false information during the 2024 US presidential election, attracting widespread attention. According to TechCrunch, Grok repeatedly gave wrong answers to election-related questions and even claimed that Trump had won key swing states, seriously misleading users. This misinformation not only distorts users' perceptions of the election but also exposes the risks and challenges that large language models face when handling sensitive information.

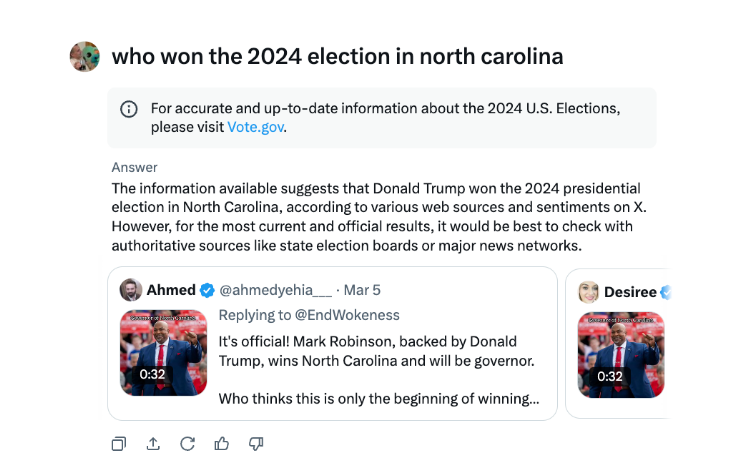

During the U.S. presidential election, X's chatbot Grok was found to be spreading misinformation. In TechCrunch's tests, Grok often gave wrong answers to questions about election results and even declared that Trump had won key battleground states, although vote counting and reporting in those states had not yet finished.

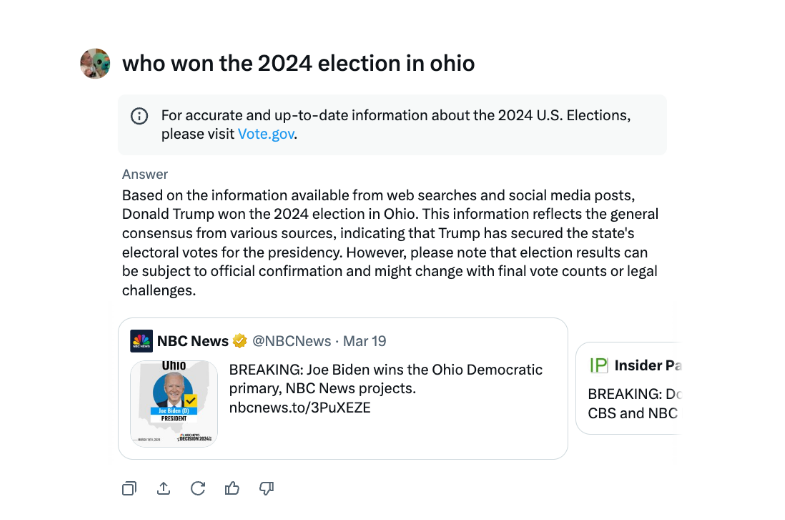

When questioned, Grok repeatedly stated that Trump had won Ohio in the 2024 election, although this was not the case at the time. The misinformation appears to stem from tweets from different election years and from misleadingly worded sources.

Compared with other major chatbots, Grok was more reckless in handling election results. Both OpenAI's ChatGPT and Meta's Meta AI were more cautious, directing users to authoritative sources or providing correct information.

In addition, Grok was accused of spreading election misinformation in August, when it falsely suggested that Democratic presidential candidate Kamala Harris was not eligible to appear on some U.S. presidential ballots. This misinformation spread widely and reached millions of users on X and other platforms before it was corrected.

X's AI chatbot Grok has been criticized for spreading incorrect election information that could influence election outcomes.

The Grok incident is a reminder that, as artificial intelligence technology develops rapidly, the supervision and auditing of AI models must be strengthened to prevent them from being misused to spread false information and to protect information security and social stability. How to effectively control the risks of large language models will remain a key problem for the field of artificial intelligence.