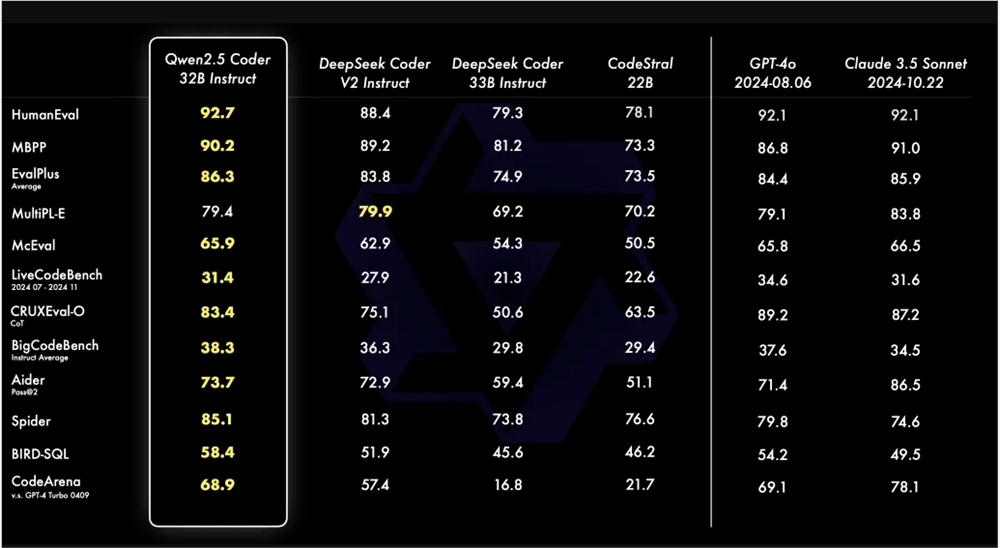

The Tongyi Qianwen (Qwen) team has open-sourced its latest code models, the Qwen2.5-Coder series, adding four new model sizes (0.5B, 3B, 14B, and 32B), each available in Base and Instruct versions. The release aims to advance open-source code models and give developers more options. Qwen2.5-Coder-32B-Instruct performs strongly in code generation, code repair, and code reasoning, reaching state-of-the-art results among open-source models on multiple benchmarks and performing comparably to GPT-4o; it does especially well in programming languages such as Haskell and Racket. The model was also evaluated on Code Arena, the team's internal code-preference benchmark, where the results indicate strong alignment with human preferences.

Qwen2.5-Coder supports more than 40 programming languages, scoring 65.9 on McEval and 75.2 on the MdEval multilingual code-repair benchmark, where it ranks first. Its data cleaning and data-mixing ratios in the pre-training stage are among the key factors behind this performance. The 0.5B, 1.5B, 7B, 14B, and 32B models are released under the Apache 2.0 license, while the 3B model is released under a research-only license. By evaluating models of different sizes, the team also confirmed that scaling is effective for code LLMs. The release of Qwen2.5-Coder should help advance both the development and the application of code language models.

This open-source release gives developers a powerful, easy-to-use family of code models to choose from. The range of sizes covers different needs, while the Base versions serve as starting points for fine-tuning and the Instruct versions are ready for direct use. Developers are welcome to download the models from the link below, use them, and contribute to the open-source community.

Qwen2.5-Coder model link: https://modelscope.cn/collections/Qwen25-Coder-9d375446e8f5814a
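As a rough illustration of how the size, variant, and license information above fits together, here is a minimal Python sketch of a checkpoint picker. It is a hypothetical helper, not part of any Qwen or ModelScope tooling: `choose_model` and `ModelChoice` are illustrative names, the license table is taken from the licensing sentence above, and the repo ID pattern (`Qwen/Qwen2.5-Coder-<size>` for Base, `Qwen/Qwen2.5-Coder-<size>-Instruct` for Instruct) is an assumption that should be checked against the collection page.

```python
from dataclasses import dataclass

# All six sizes in the series and their licenses, as stated in the announcement:
# every size is Apache 2.0 except 3B, which is research-only.
SIZES = ("0.5B", "1.5B", "3B", "7B", "14B", "32B")
RESEARCH_ONLY_SIZES = {"3B"}


@dataclass(frozen=True)
class ModelChoice:
    repo_id: str   # assumed repo ID pattern, see choose_model
    variant: str   # "Base" or "Instruct"
    license: str   # license stated for this size


def choose_model(size: str, fine_tune: bool) -> ModelChoice:
    """Hypothetical helper: pick a Qwen2.5-Coder checkpoint by size and use case.

    Assumption: Base repos are named "Qwen/Qwen2.5-Coder-<size>" and Instruct
    repos "Qwen/Qwen2.5-Coder-<size>-Instruct".
    """
    if size not in SIZES:
        raise ValueError(f"unknown size {size!r}; expected one of {SIZES}")
    # Base checkpoints are the starting point for fine-tuning;
    # Instruct checkpoints are intended for direct use.
    variant = "Base" if fine_tune else "Instruct"
    suffix = "" if fine_tune else "-Instruct"
    license_name = "Research Only" if size in RESEARCH_ONLY_SIZES else "Apache 2.0"
    return ModelChoice(f"Qwen/Qwen2.5-Coder-{size}{suffix}", variant, license_name)
```

For example, `choose_model("32B", fine_tune=False)` yields the Instruct checkpoint under Apache 2.0, while `choose_model("3B", fine_tune=True)` flags the research-only license before any fine-tuning work begins. The resulting ID could then be passed to a downloader such as ModelScope's `snapshot_download`; confirm the exact repository names on the collection page first.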