The Downcodes editor has learned that Molmo, an open-source multimodal AI model, has recently attracted wide attention. Built on Qwen2-72B with OpenAI's CLIP as its vision encoder, it pairs efficient performance with an innovative pointing capability. This makes it highly competitive in multimodal AI, to the point of challenging the lead of proprietary commercial models. Its compact design improves efficiency and makes deployment more flexible, opening up more possibilities for AI applications.

Recently, Molmo, an open-source multimodal AI model, has drawn broad attention across the industry. Built on Qwen2-72B and using OpenAI's CLIP as its vision encoder, the system is challenging the dominance of proprietary commercial models with its strong performance and novel features.

Molmo's standout feature is its efficiency. Despite its relatively small size, it rivals models ten times larger. This compact design makes the model more efficient and also gives it more flexibility to be deployed across a range of application scenarios.

Unlike conventional multimodal models, Molmo introduces a pointing capability: it can answer by marking specific locations in an image. This lets the model interact more directly with real and virtual environments, opening up new possibilities for applications such as human-computer interaction and augmented reality. The design makes the model more practical and lays the groundwork for closer integration between AI and the physical world.

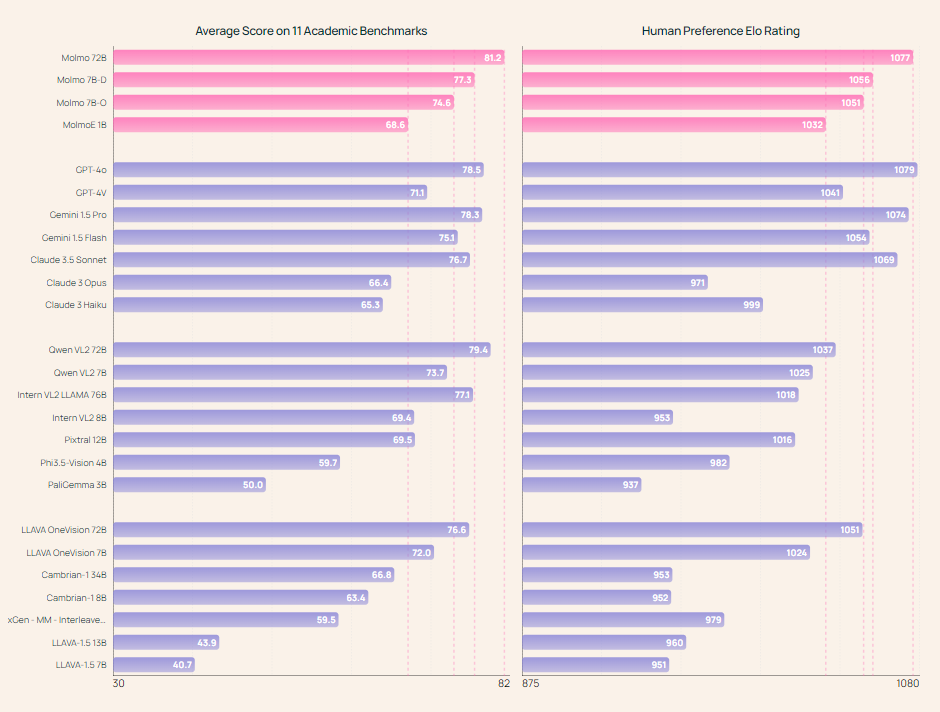

In performance evaluations, Molmo-72B stood out. It set new records on multiple academic benchmarks and ranked second, behind only GPT-4o, in human evaluation. These results suggest that its strength carries over to practical use.

Another highlight is Molmo's openness. Its weights, code, data, and evaluation methods are all public. This reflects the open-source spirit and makes a significant contribution to the wider AI community, which should help accelerate iteration and innovation in AI technology.

Functionally, Molmo is comprehensive. It generates high-quality image descriptions, understands image content accurately, and answers questions about it. For multimodal interaction, it accepts text and images together and can point to locations in a 2D image, making interaction with visual content richer. These capabilities considerably widen the practical uses of AI.

Much of Molmo's success comes from its high-quality training data. The research team used a novel collection method: annotators described images aloud instead of typing, which produced more detailed descriptions. This avoids the overly brief captions that typed descriptions tend to yield, and it gathered a large volume of high-quality, diverse training data.

The datasets are also diverse, covering a wide range of scenes and content and supporting multiple modes of user interaction. This helps Molmo excel at specific tasks such as answering questions about images and OCR.

Molmo also compares favorably with other models, particularly on academic benchmarks and in human evaluations. Beyond demonstrating Molmo's strength, these results offer a new reference point for how AI models are evaluated.

Molmo's success is further evidence that, in AI development, data quality matters more than quantity. Trained on fewer than one million image-text pairs, Molmo achieved remarkable training efficiency and performance, pointing to new approaches for future AI models.

Project address: https://molmo.allenai.org/blog

All in all, with its efficient performance, innovative pointing capability, and open-source release, Molmo shows great potential in multimodal AI and offers new directions for future AI development. The Downcodes editor looks forward to seeing it applied and developed further in more fields.