beyond_vector_search

1.0.0

We used Python 3.9.18 and recommend installing the required packages in a virtual environment.

pip install -r requirements.txt

- notebooks/parsing_json.ipynb: filters the arXiv data

- notebooks/parsing_cnn_news.ipynb: filters the CNN news data

- notebooks/parsing_wiki_movies.ipynb: filters the wiki movies data

We used a subset of the arXiv dataset from Kaggle containing 12,926 samples; the pickle file is provided at "beyond_vector_search/data/filtered_data.pickle".
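To inspect the provided pickle before building anything on top of it, a minimal loading sketch may help. The helper name `load_filtered_data` is hypothetical, and the structure of the stored object is not documented here, so the sketch only reports its type.

```python
import pickle
from pathlib import Path


def load_filtered_data(path):
    """Load a pickled object from `path` and return it unchanged.

    Hypothetical helper, not part of the repository. Only unpickle files
    you trust, since pickle can execute code while loading.
    """
    with Path(path).open("rb") as f:
        return pickle.load(f)


if __name__ == "__main__":
    # Assumed location, relative to the repository root.
    pickle_path = Path("data/filtered_data.pickle")
    if pickle_path.exists():
        data = load_filtered_data(pickle_path)
        print(type(data))
    else:
        print(f"{pickle_path} not found; run this from the repository root")
```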

make_vectordb.py: a script to build a vector database from "data/filtered_data.pickle"

utils/

- build_graph.py: a script containing helper functions for building the knowledge graph

- parse_arxiv.py: a script containing helper functions for parsing the arxiv dataset

vector_graph/

- bipartite_graph_dict.py: A custom implementation of the bipartite graph

- bipartite_graph_networkx.py: An experimental implementation of the bipartite graph using networkx

- embedding_models.py: A custom implementation of the embedding models for generating the text embeddings
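For readers unfamiliar with the idea behind bipartite_graph_dict.py, the following is a rough sketch of how a bipartite graph can be stored in plain dictionaries. It is not the repository's implementation: the class name, method names, and the choice of documents and keywords as the two sides are all assumptions made for illustration.

```python
from collections import defaultdict


class BipartiteGraph:
    """Illustrative dict-based bipartite graph (hypothetical, not the repo's class).

    One side holds document ids, the other holds keywords (an assumption).
    Edges are stored in both directions so lookups from either side are fast.
    """

    def __init__(self):
        self.doc_to_keywords = defaultdict(set)
        self.keyword_to_docs = defaultdict(set)

    def add_edge(self, doc_id, keyword):
        """Connect a document to a keyword."""
        self.doc_to_keywords[doc_id].add(keyword)
        self.keyword_to_docs[keyword].add(doc_id)

    def keywords_of(self, doc_id):
        """Return a copy of the keywords attached to a document."""
        return set(self.doc_to_keywords.get(doc_id, ()))

    def docs_sharing_keywords(self, doc_id):
        """Return the other documents that share at least one keyword."""
        related = set()
        for keyword in self.doc_to_keywords.get(doc_id, ()):
            related |= self.keyword_to_docs[keyword]
        related.discard(doc_id)
        return related


graph = BipartiteGraph()
graph.add_edge("paper_a", "graph")
graph.add_edge("paper_b", "graph")
graph.add_edge("paper_c", "retrieval")
```

Storing both directions doubles the memory used for edges, but it turns "which documents share a keyword with this one" into two dictionary hops instead of a full scan.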

workloads/

- keyword_extractor.py

- query_gen.py: A script for generating the text queries given paper data points

- workload_gen.sh: A script for generating the workloads described in the report

testing/

- inference.py: A script for executing our various search query engines on the generated workloads

zy_testing/

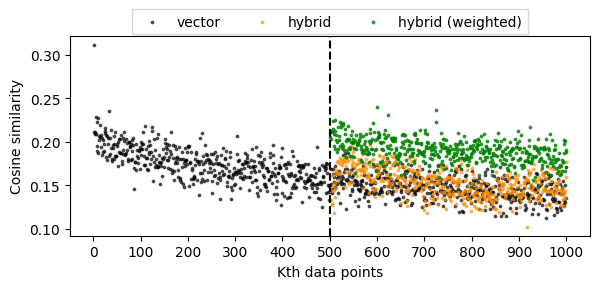

- compute_metrics_cos.py: A script for computing the accuracy of our results using various performance metrics
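The file name suggests a cosine-based measure, so here is a small sketch of one such metric: the mean cosine similarity between a query embedding and the embeddings of the returned results. The function names are hypothetical, and the metrics the actual script computes may differ.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def mean_cosine_similarity(query_vec, result_vecs):
    """Average similarity between a query and its results (illustrative metric)."""
    if not result_vecs:
        return 0.0
    total = sum(cosine_similarity(query_vec, vec) for vec in result_vecs)
    return total / len(result_vecs)
```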