This project is an E-commerce Chatbot built using a Retrieval-Augmented Generation (RAG) approach. RAG combines the power of information retrieval and generative language models, enabling the chatbot to provide accurate and context-aware responses based on extensive product-related information stored in a vector database. We used LangChain as the framework to manage the chatbot’s components and orchestrate the retrieval-generation flow efficiently.

The chatbot employs LLaMA 3.1-8B, a large language model known for its ability to understand nuanced context and generate coherent responses. To enhance retrieval performance, the project uses embeddings generated by HuggingFace's sentence-transformers/all-mpnet-base-v2 model. These embeddings encode semantic meaning, enabling the chatbot to retrieve relevant product data quickly based on user queries. The embeddings are stored and managed in AstraDB, which serves as the vector database.
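The retrieval step can be illustrated with a minimal sketch. It does not use the project's actual stack: a toy bag-of-words vector stands in for the all-mpnet-base-v2 embeddings, and an in-memory list stands in for AstraDB. The function names (`embed`, `cosine`, `retrieve`) and the sample reviews are hypothetical.

```python
import math
from collections import Counter

def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def retrieve(query, reviews, k=2):
    """Return the k reviews most similar to the query."""
    q = embed(query)
    ranked = sorted(reviews, key=lambda r: cosine(q, embed(r)), reverse=True)
    return ranked[:k]

# Hypothetical sample reviews, not taken from the real dataset.
REVIEWS = [
    "great bass and comfortable earbuds",
    "battery life is poor on these headphones",
    "fast delivery and good packaging",
]

top = retrieve("earbuds with great bass", REVIEWS, k=1)
print(top)
```

A real embedding model would also match paraphrases that share no words with the query, which this word-count stand-in cannot do.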

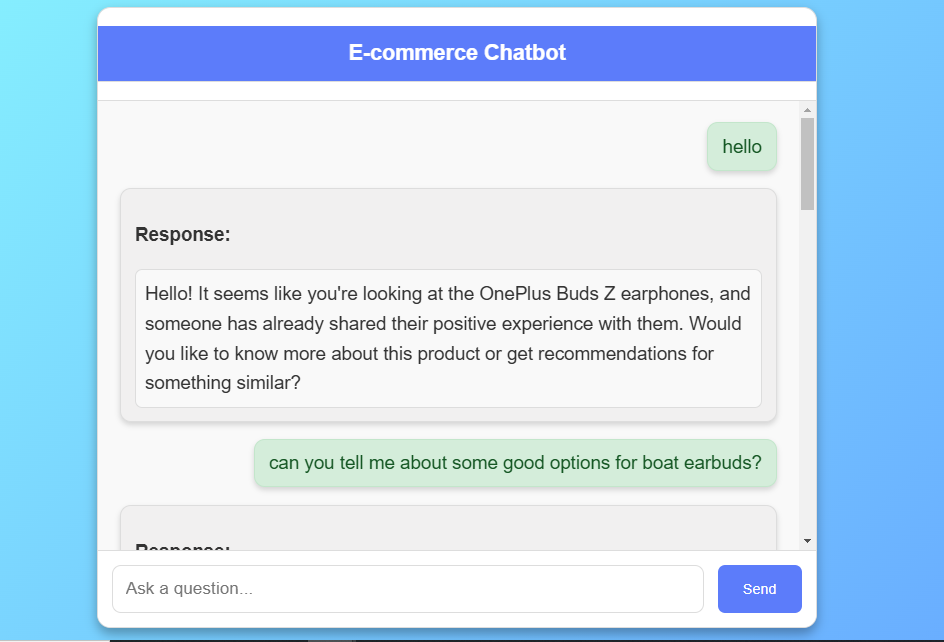

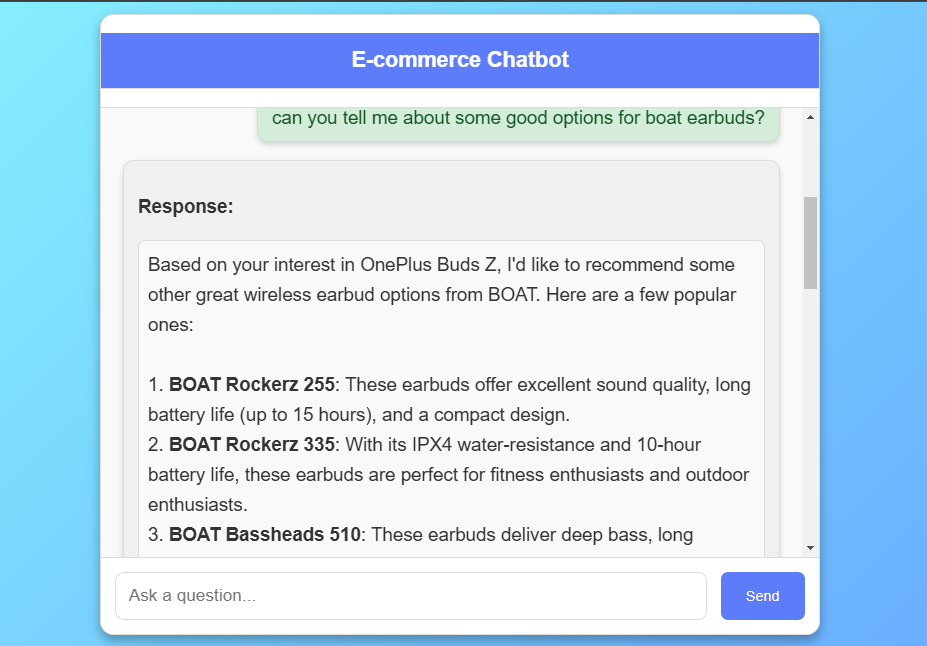

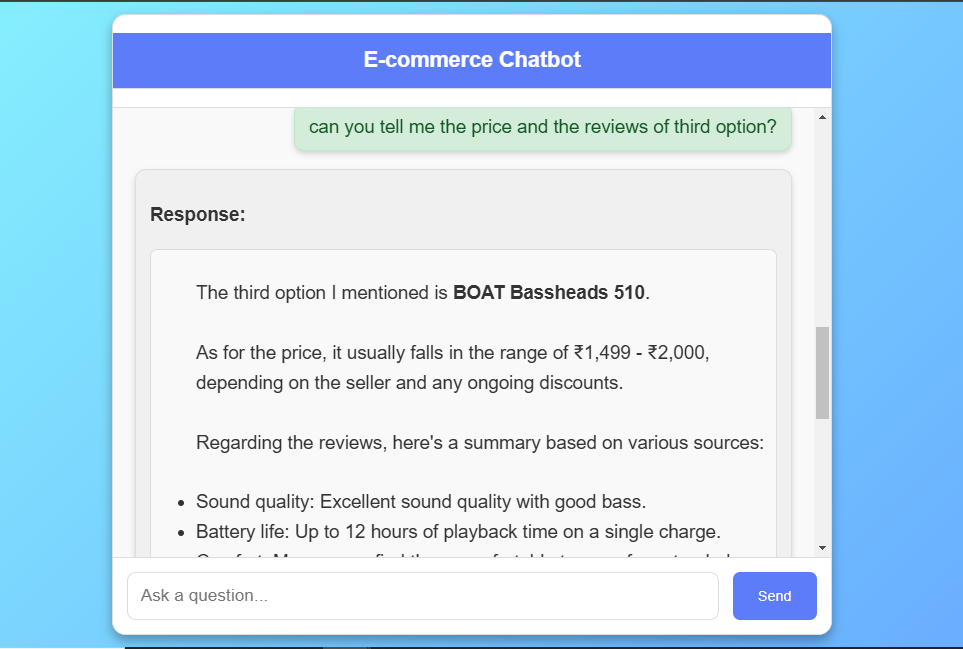

To provide coherent, context-aware responses, the chatbot uses a history-aware RAG approach. By incorporating chat history into each interaction, the model can understand references to previous messages and maintain continuity across multiple turns. LangChain’s history management features make this process seamless by allowing stateful management of chat history for each user session.
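The idea of per-session history can be sketched in plain Python. This is only an illustration under assumptions: in the project LangChain manages the history, whereas here a dictionary keyed by session id does the job, and the names `add_turn` and `build_prompt`, the `max_turns` limit, and the sample messages are all hypothetical.

```python
from collections import defaultdict

# Hypothetical in-memory store: session id -> list of (role, text) turns.
_histories = defaultdict(list)

def add_turn(session_id, role, text):
    """Record one message in the history of the given session."""
    _histories[session_id].append((role, text))

def build_prompt(session_id, question, max_turns=4):
    """Prefix the new question with the most recent turns of this session."""
    recent = _histories[session_id][-max_turns:]
    lines = [f"{role}: {text}" for role, text in recent]
    lines.append(f"user: {question}")
    return "\n".join(lines)

add_turn("s1", "user", "Which earbuds have good bass?")
add_turn("s1", "assistant", "Reviewers praise the bass on BrandX earbuds.")
prompt = build_prompt("s1", "How is their battery life?")
print(prompt)
```

Because the earlier turns are included, the model has what it needs to resolve "their" in the follow-up question, and a different session id starts from an empty history.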

The dataset used in this project comprises product reviews sourced from Flipkart, an e-commerce platform. The dataset includes product titles, ratings, and detailed reviews, offering a comprehensive view of customer feedback across various products. The primary purpose of this dataset is to power the chatbot's retrieval capabilities, enabling it to reference real-world product sentiments, features, and customer experiences. Each review is stored as a Document object within LangChain, containing the review as content and the product name as metadata. The dataset is ingested into AstraDB as a vector store, enabling similarity searches that match user queries with relevant reviews, enhancing the chatbot's recommendations and responses.
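The mapping from review rows to documents might look like the sketch below. The small `Document` dataclass only mirrors the `page_content` and `metadata` fields of LangChain's `Document` so that the example runs without dependencies. The column names (`product_title`, `rating`, `review`) and the sample rows are assumptions, not the dataset's real schema.

```python
import csv
import io
from dataclasses import dataclass, field

@dataclass
class Document:
    """Stand-in for LangChain's Document: text content plus metadata."""
    page_content: str
    metadata: dict = field(default_factory=dict)

# Hypothetical sample rows; the real column names may differ.
SAMPLE_CSV = """product_title,rating,review
BrandX Earbuds,5,Great bass and a snug fit
BrandY Headphones,2,Battery drains within a few hours
"""

def rows_to_documents(csv_text):
    """Turn each review row into a Document with the product name as metadata."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [
        Document(
            page_content=row["review"],
            metadata={"product_name": row["product_title"]},
        )
        for row in reader
    ]

docs = rows_to_documents(SAMPLE_CSV)
print(docs[0])
```

Keeping the product name in the metadata rather than in the content means the similarity search runs on the review text alone, while the product name is still available when the answer is assembled.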

The frontend is a responsive web interface created using HTML, CSS, and JavaScript, designed to provide an intuitive chat experience. Users can interact with the chatbot to ask about product details and receive personalized recommendations. The interface is styled with CSS, featuring a gradient background and a structured chat box where user messages and bot responses are displayed in real time.

Given the model’s large size, generating responses with LLaMA 3.1-8B can occasionally exceed the default 1-minute server timeout. To manage this, we use Redis as a message broker and Celery for background task management. When a user submits a query, the chatbot triggers a Celery task that processes the response asynchronously, and the frontend periodically polls for the task status until the response is ready. This prevents server timeout errors, so users still receive a response when generation is slow.

The chatbot is deployed on AWS EC2, providing a scalable and robust environment for running the model, handling user interactions, and managing retrievals from the database.

The dataset for this project is taken from Kaggle. It contains 450 product reviews of headphones, earbuds, and other products from different brands, collected from Flipkart. Its key properties are:

- Dataset name: flipkart_dataset
- Number of columns: 5
- Number of records: 450

The code is written in Python 3.10.15. If you don't have Python installed, you can download it from the official Python website. If you are using an older version of Python, upgrade it first, and make sure you have the latest version of pip.

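Clone the repository:

    git clone https://github.com/jatin-12-2002/E-Commerce_ChatBot
    cd E-Commerce_ChatBot

Create and activate a conda environment:

    conda create -p env python=3.10 -y
    source activate ./env

Install the requirements:

    pip install -r requirements.txt

Set the following environment variables:

    ASTRA_DB_API_ENDPOINT=""
    ASTRA_DB_APPLICATION_TOKEN=""
    ASTRA_DB_KEYSPACE=""
    HF_TOKEN=""

Install Ollama, start the server, and pull the model:

    curl -fsSL https://ollama.com/install.sh | sh
    ollama serve
    ollama pull llama3.1:8b

Install and start Redis, then check that it responds:

    sudo apt-get update
    sudo apt-get install redis-server
    sudo service redis-server start
    redis-cli ping

Start the Celery worker:

    celery -A app.celery worker --loglevel=info

Run the application with Gunicorn:

    gunicorn -w 2 -b 0.0.0.0:8000 app:app

Open the application in a browser:

    http://localhost:8000/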
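Before starting the app, it can help to confirm that the four variables from the setup steps above are actually set. The sketch below is a hypothetical helper, not part of the repository: `missing_vars` and the example values are assumptions made for illustration.

```python
import os

REQUIRED_VARS = (
    "ASTRA_DB_API_ENDPOINT",
    "ASTRA_DB_APPLICATION_TOKEN",
    "ASTRA_DB_KEYSPACE",
    "HF_TOKEN",
)

def missing_vars(env=None):
    """Return the required variable names that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name)]

# Example with a hypothetical, partly filled environment.
example_env = {"ASTRA_DB_KEYSPACE": "default_keyspace", "HF_TOKEN": ""}
missing = missing_vars(example_env)
print(missing)
```

Calling `missing_vars()` with no argument checks the real process environment instead of the example dictionary.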

Use a t2.large or larger instance, since this project runs an LLM.

Update the system and install the required packages:

    sudo apt-get update
    sudo apt update -y
    sudo apt install git nginx -y
    sudo apt install git curl unzip tar make sudo vim wget -y

Clone the repository:

    git clone https://github.com/jatin-12-2002/E-Commerce_ChatBot
    cd E-Commerce_ChatBot

Create a `.env` file and open it for editing:

    touch .env
    vi .env

Add the following variables to it:

    ASTRA_DB_API_ENDPOINT=""
    ASTRA_DB_APPLICATION_TOKEN=""
    ASTRA_DB_KEYSPACE=""
    HF_TOKEN=""

Check the file contents:

    cat .env

Install pip and the requirements:

    sudo apt install python3-pip
    pip3 install -r requirements.txt

OR

    pip3 install -r requirements.txt --break-system-packages

Test the application with Gunicorn:

    gunicorn -w 2 -b 0.0.0.0:8000 app:app

Open the Nginx site configuration:

    sudo nano /etc/nginx/sites-available/default

Add the following server block:

    server {
        listen 80;
        server_name your-ec2-public-ip;

        location / {
            proxy_pass http://127.0.0.1:8000;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Proto $scheme;
        }
    }

Restart Nginx:

    sudo systemctl restart nginx

Create a systemd service file for Gunicorn:

    sudo nano /etc/systemd/system/gunicorn.service

Add the following unit definition, adjusting `WorkingDirectory` to the path of the cloned repository:

    [Unit]
    Description=Gunicorn instance to serve my project
    After=network.target

    [Service]
    User=ubuntu
    Group=www-data
    WorkingDirectory=/home/ubuntu/E-Commerce_ChatBot_Project
    ExecStart=/usr/bin/gunicorn --workers 4 --bind 127.0.0.1:8000 app:app

    [Install]
    WantedBy=multi-user.target

Start the service and enable it at boot:

    sudo systemctl start gunicorn
    sudo systemctl enable gunicorn

Run the application:

    gunicorn -w 2 -b 0.0.0.0:8000 app:app

Open the application in a browser:

    Public_Address:8080

This E-commerce chatbot provides an intelligent, interactive shopping experience through a RAG approach that combines retrieval and generation, offering relevant product recommendations based on actual customer reviews.

LLaMA 3.1's large language model and HuggingFace embeddings enable nuanced responses, enhancing user engagement with contextually aware conversations.

Efficient response handling with Redis and Celery addresses the demands of a high-performing application, ensuring stable, responsive user experiences even with large LLMs.

A fully scalable AWS EC2 deployment allows seamless integration into e-commerce platforms, offering robust infrastructure for high-traffic environments.

This project showcases a powerful application of Large Language Models, pushing the boundaries of chatbot capabilities in the e-commerce domain.