By training the text with existing tags, the classification of new text is realized.

2019.3.25: The project was originally a public opinion analysis business of the company, but later it participated in some competitions and added some small functions. At that time, I just wanted to integrate some simple models of machine learning and deep learning to exercise my engineering skills. After communicating with some netizens, I felt that there was no need to build a general module (no one uses it anyway, haha~). I happened to be quite leisurely recently, so I deleted all the useless fancy parameters and functions for the purpose of being simpler, and only preprocessing and convolutional networks were retained.

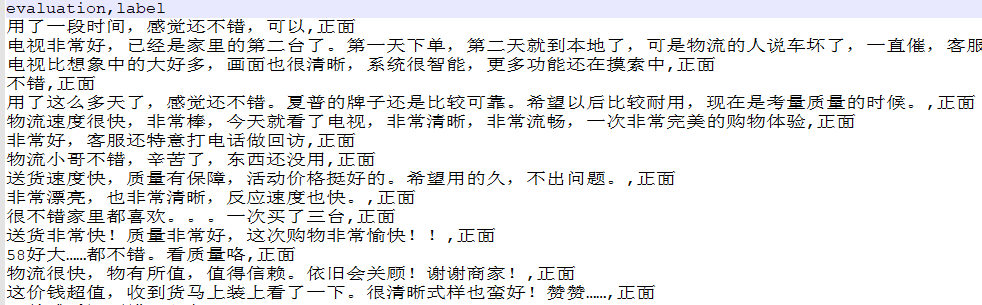

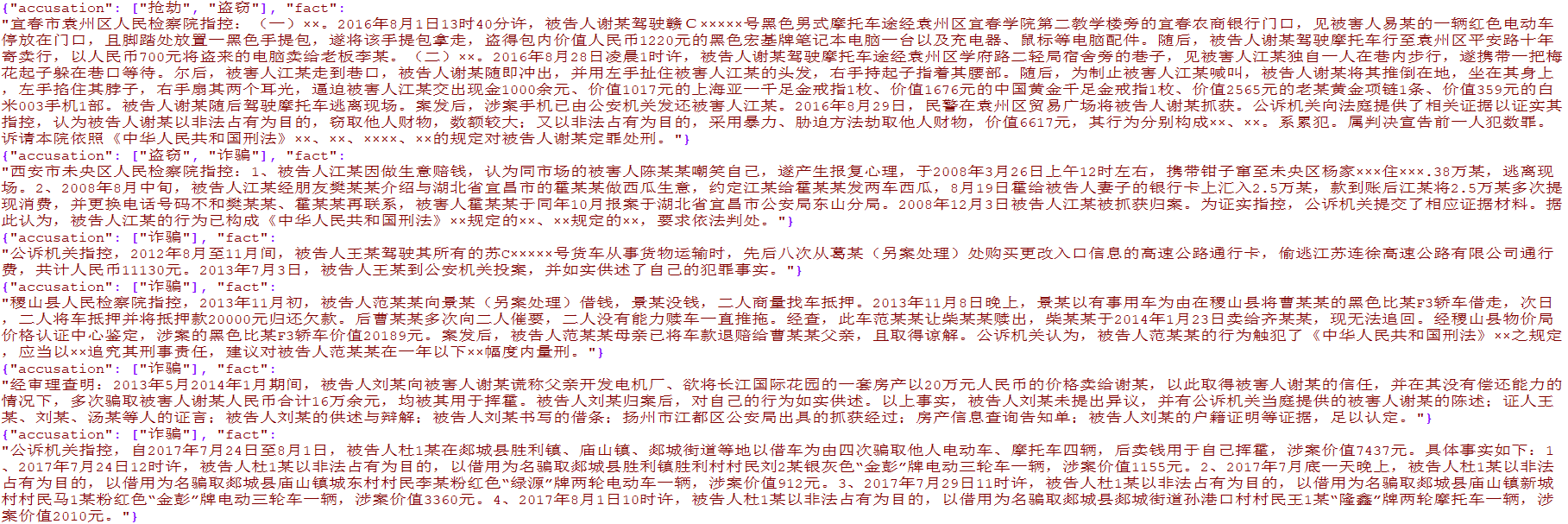

More than 4,000 single-label e-commerce data and more than 15,000 multi-label judicial crime data have been prepared. The data are for academic research only and commercial dissemination is prohibited.

from TextClassification . load_data import load_data

# 单标签

data = load_data ( 'single' )

x = data [ 'evaluation' ]

y = [[ i ] for i in data [ 'label' ]]

# 多标签

data = load_data ( 'multiple' )

x = [ i [ 'fact' ] for i in data ]

y = [ i [ 'accusation' ] for i in data ]

Used to preprocess the original text data, including word segmentation, conversion encoding, length uniformity and other methods, which have been encapsulated into TextClassification.py

preprocess = DataPreprocess ()

# 处理文本

texts_cut = preprocess . cut_texts ( texts , word_len )

preprocess . train_tokenizer ( texts_cut , num_words )

texts_seq = preprocess . text2seq ( texts_cut , sentence_len )

# 得到标签

preprocess . creat_label_set ( labels )

labels = preprocess . creat_labels ( labels ) Integrate preprocessing, network training, and network prediction. Please refer to two demo scripts for demo

The method is as follows:

from TextClassification import TextClassification

clf = TextClassification ()

texts_seq , texts_labels = clf . get_preprocess ( x_train , y_train ,

word_len = 1 ,

num_words = 2000 ,

sentence_len = 50 )

clf . fit ( texts_seq = texts_seq ,

texts_labels = texts_labels ,

output_type = data_type ,

epochs = 10 ,

batch_size = 64 ,

model = None )

# 保存整个模块,包括预处理和神经网络

with open ( './%s.pkl' % data_type , 'wb' ) as f :

pickle . dump ( clf , f )

# 导入刚才保存的模型

with open ( './%s.pkl' % data_type , 'rb' ) as f :

clf = pickle . load ( f )

y_predict = clf . predict ( x_test )

y_predict = [[ clf . preprocess . label_set [ i . argmax ()]] for i in y_predict ]

score = sum ( y_predict == np . array ( y_test )) / len ( y_test )

print ( score ) # 0.9288