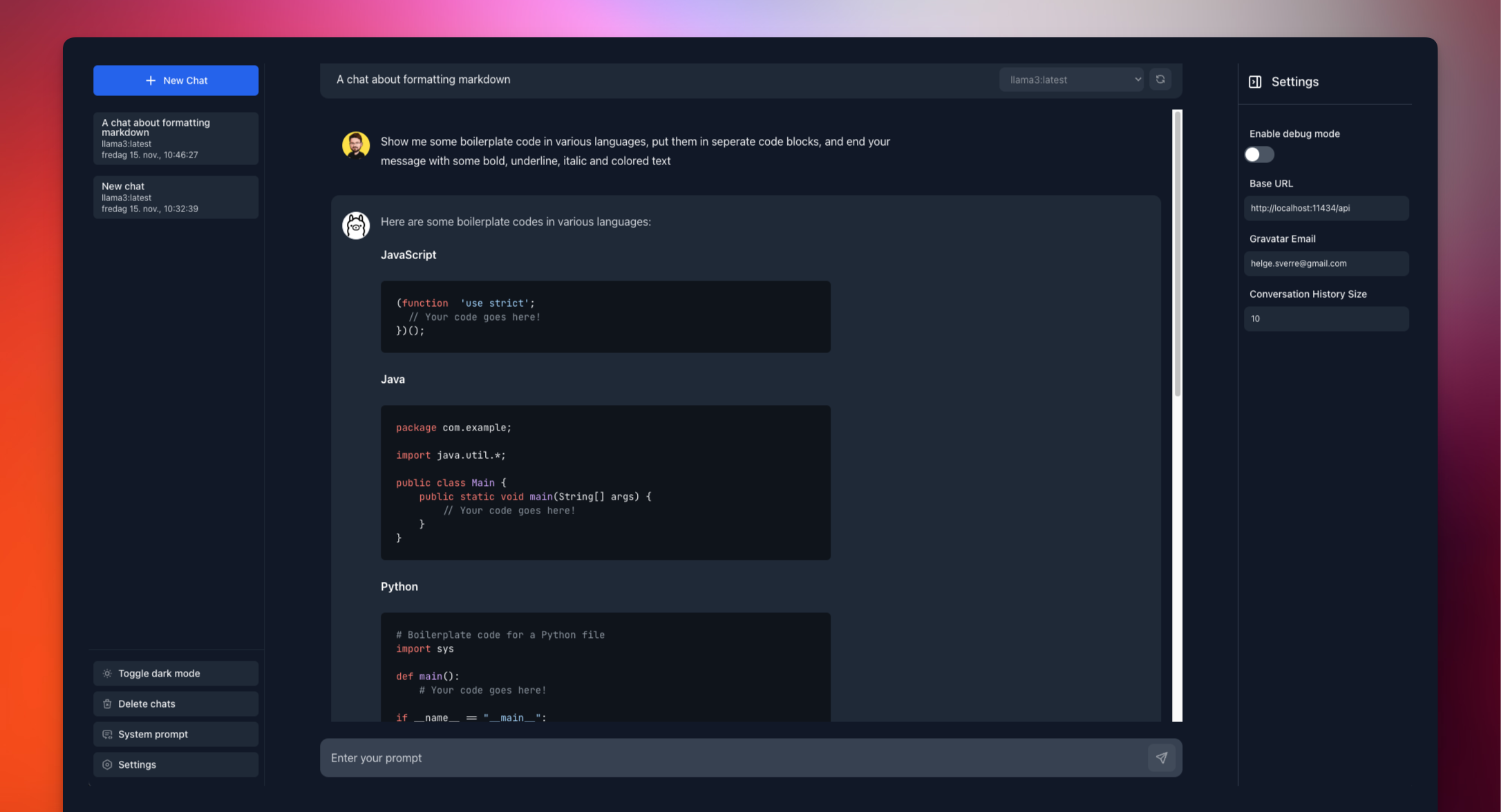

ollama gui

1.0.0

A modern web interface for chatting with your local LLMs through Ollama

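```bash
# Start the Ollama server with your preferred model
ollama pull mistral # or any other model
ollama serve

# Clone and run the GUI (in a second terminal, since `ollama serve` stays in the foreground)
git clone https://github.com/HelgeSverre/ollama-gui.git
cd ollama-gui
yarn install
yarn dev
```

To use the hosted version at https://ollama-gui.vercel.app instead, allow its origin when starting Ollama (`OLLAMA_ORIGINS` lists the browser origins permitted to call the Ollama API):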

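```bash
OLLAMA_ORIGINS=https://ollama-gui.vercel.app ollama serve
```

Alternatively, build and run the GUI as a Docker container:

```bash
# Build the image (from the cloned ollama-gui directory)
docker build -t ollama-gui .

# Run the container, mapping port 8080 on the host to port 80 in the container
docker run -p 8080:80 ollama-gui
```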
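Because `ollama serve` and `docker run` both stay in the foreground, a script that starts them in the background may need to wait until each service answers before continuing. The sketch below is a hypothetical helper, not part of ollama-gui; it assumes `curl` is installed and that Ollama listens on its default port 11434, where `GET /api/tags` lists the locally pulled models.

```bash
#!/usr/bin/env bash
# Hypothetical helper, not part of ollama-gui: poll a URL until it responds,
# giving up after roughly `timeout` one-second retries (default 30).
wait_for_http() {
  local url="$1"
  local timeout="${2:-30}"
  local waited=0
  # -s silences progress output, -f fails on HTTP status 400 or above,
  # --max-time caps each attempt so a hung connection cannot block forever.
  until curl -sf --max-time 2 -o /dev/null "$url"; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "no response from $url after ${timeout}s" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  echo "$url is up"
}
```

For example, `ollama serve & wait_for_http http://localhost:11434/api/tags && yarn dev` starts Ollama in the background and launches the dev server only once the API responds; `wait_for_http http://localhost:8080` does the same check for the container.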

Once the container is running, the GUI is available at http://localhost:8080.

Released under the MIT License.