PaperBrain

Version 1.0.0

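PaperBrain is an intelligent question-answering system for research papers. It combines vector search with large language models to give context-aware answers to research questions. It processes academic papers, understands their content, and generates structured, informative responses with appropriate citations and context.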
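The idea of combining vector search with an LLM can be sketched in a few lines: score each stored paper chunk against the question embedding, keep the best matches, and hand them to the LLM as numbered, citable context. This is a minimal illustration under stated assumptions, not PaperBrain's code. The toy 3-dimensional vectors stand in for real embeddings, and `top_k_chunks` and `build_prompt` are hypothetical helpers. A vector database such as Qdrant replaces the brute-force loop with an approximate nearest-neighbour index.

```python
import math
from typing import List, Sequence, Tuple

# (paper title, chunk text, embedding vector); toy 3-dimensional vectors here
Chunk = Tuple[str, str, Sequence[float]]
Hit = Tuple[float, str, str]  # (similarity score, paper title, chunk text)


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k_chunks(query_vec: Sequence[float], chunks: List[Chunk], k: int = 2) -> List[Hit]:
    """Brute-force nearest-neighbour search: score every chunk, keep the best k."""
    scored = [(cosine(query_vec, vec), title, text) for title, text, vec in chunks]
    scored.sort(key=lambda hit: hit[0], reverse=True)
    return scored[:k]


def build_prompt(question: str, hits: List[Hit]) -> str:
    """Put the retrieved chunks into the prompt as numbered, citable sources."""
    sources = "\n".join(f"[{i}] {title}: {text}" for i, (_, title, text) in enumerate(hits, 1))
    return (
        "Answer using only the sources below and cite them by number.\n"
        f"{sources}\n\nQuestion: {question}"
    )


chunks: List[Chunk] = [
    ("Paper A", "Sparse regression identifies governing equations.", [0.9, 0.1, 0.0]),
    ("Paper B", "Convolutional networks classify images.", [0.0, 0.2, 0.9]),
    ("Paper C", "Symbolic regression searches the space of equations.", [0.8, 0.3, 0.1]),
]
hits = top_k_chunks([1.0, 0.2, 0.0], chunks, k=2)
print([title for _, title, _ in hits])  # ['Paper A', 'Paper C']
print(build_prompt("How can governing equations be discovered from data?", hits))
```

Keeping retrieval and prompt construction as separate functions makes it easy to swap the brute-force search for a database query without touching the prompt format.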

# System requirements

- Python 3.9+

- Docker

- 4GB+ RAM for LLM operations

- Disk space for paper storagegit clone https://github.com/ansh-info/PaperBrain.git

cd PaperBrain # Using conda

conda create --name PaperBrain python=3.11

conda activate PaperBrain

# Using venv

python -m venv env

source env/bin/activate # On Windows: .envScriptsactivatepip install -r requirements.txtdocker-compose up -d # If you want other models

docker exec ollama ollama pull llama3.2:1b

docker exec -it ollama ollama pull mistral

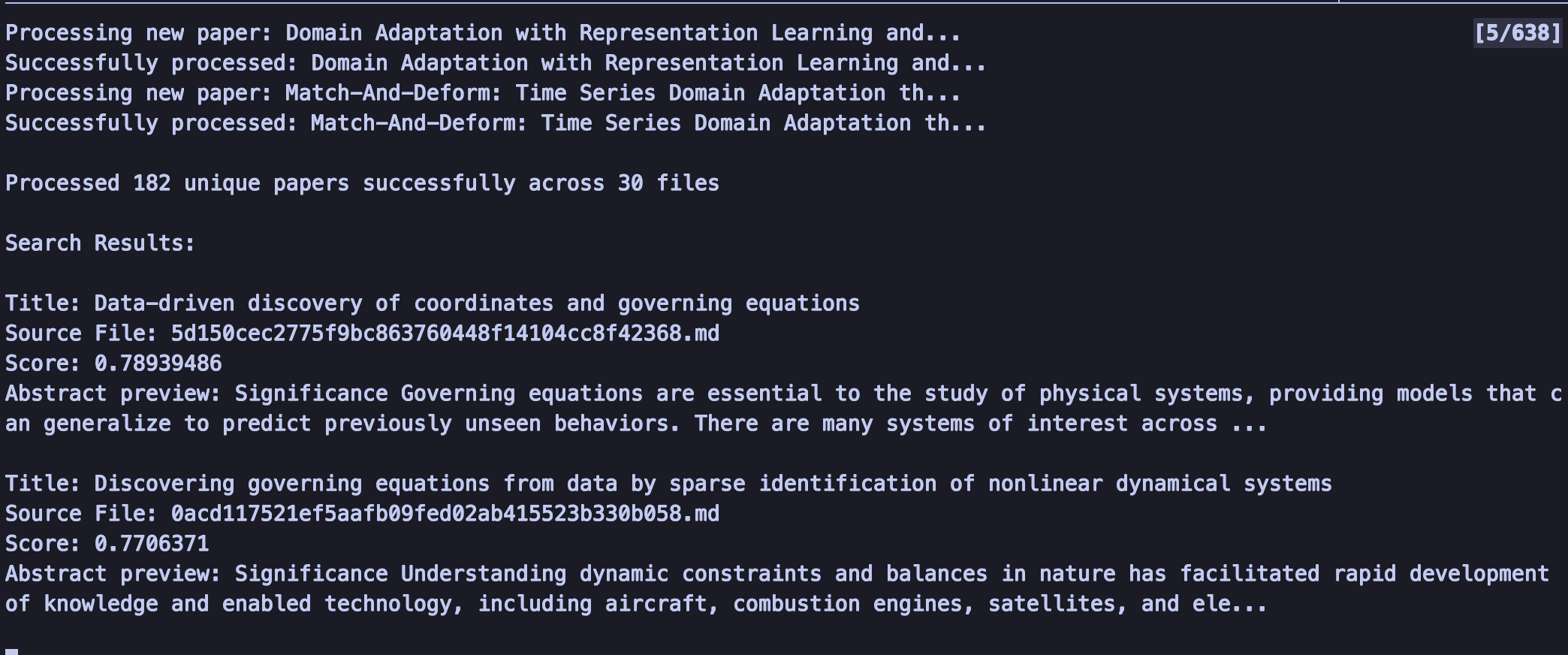

docker exec -it ollama ollama pull nomic-embed-textpython src/vector.pymarkdowns/ Directory中python src/llmquery.py # Run src/query.py to query qdrant database(without llm)quit或q :退出程序analytics :顯示系統使用統計信息clear :重置紙歷史history :查看最近的問題和回答 > What are the main approaches for discovering governing equations from data?
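The interactive commands (`quit`/`q`, `analytics`, `clear`, `history`) suggest a simple read-and-dispatch loop in which any other input is treated as a question. The following is a hypothetical sketch, not the code in `src/llmquery.py`: `SessionState`, `handle_command`, `repl`, and the answer-function signature are illustrative names, and the answer function is a stub where the real system would query the LLM. In this sketch `clear` resets only the paper history and keeps the question history, which is an assumption.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

# Hypothetical answer function: question -> (answer text, cited paper titles)
AnswerFn = Callable[[str], Tuple[str, List[str]]]


@dataclass
class SessionState:
    """State that the interactive commands read or reset (illustrative)."""
    history: List[Tuple[str, str]] = field(default_factory=list)  # (question, answer)
    papers_seen: List[str] = field(default_factory=list)          # paper history
    queries: int = 0


def handle_command(line: str, state: SessionState, answer_fn: AnswerFn) -> Optional[str]:
    """Handle one line of input; return text to print, or None to exit."""
    cmd = line.strip()
    key = cmd.lower()
    if key in ("quit", "q"):
        return None
    if key == "analytics":
        return f"queries={state.queries} distinct_papers={len(set(state.papers_seen))}"
    if key == "clear":
        state.papers_seen.clear()  # resets only the paper history in this sketch
        return "Paper history cleared."
    if key == "history":
        recent = state.history[-5:]  # five most recent exchanges
        return "\n".join(f"Q: {q}\nA: {a}" for q, a in recent) or "No history yet."
    if not cmd:
        return ""  # ignore blank input
    # Any other input is treated as a research question.
    answer, papers = answer_fn(cmd)
    state.queries += 1
    state.history.append((cmd, answer))
    state.papers_seen.extend(papers)
    return answer


def repl(answer_fn: AnswerFn) -> None:
    """Prompt until the user types quit/q or input ends."""
    state = SessionState()
    while True:
        try:
            line = input("> ")
        except EOFError:
            break
        reply = handle_command(line, state, answer_fn)
        if reply is None:
            break
        print(reply)
```

Keeping the dispatch in `handle_command` rather than inside the input loop means each command can be exercised without a terminal.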

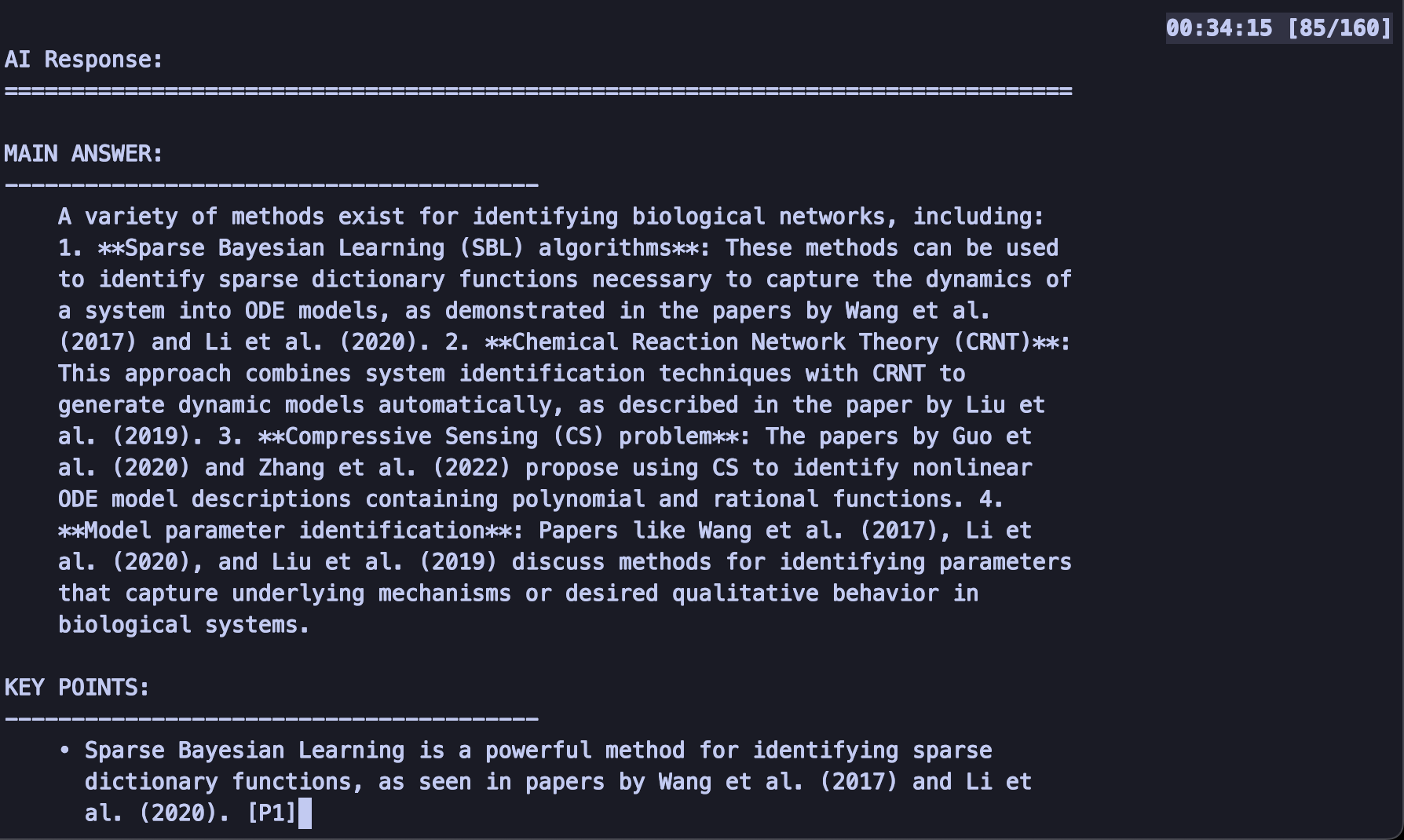

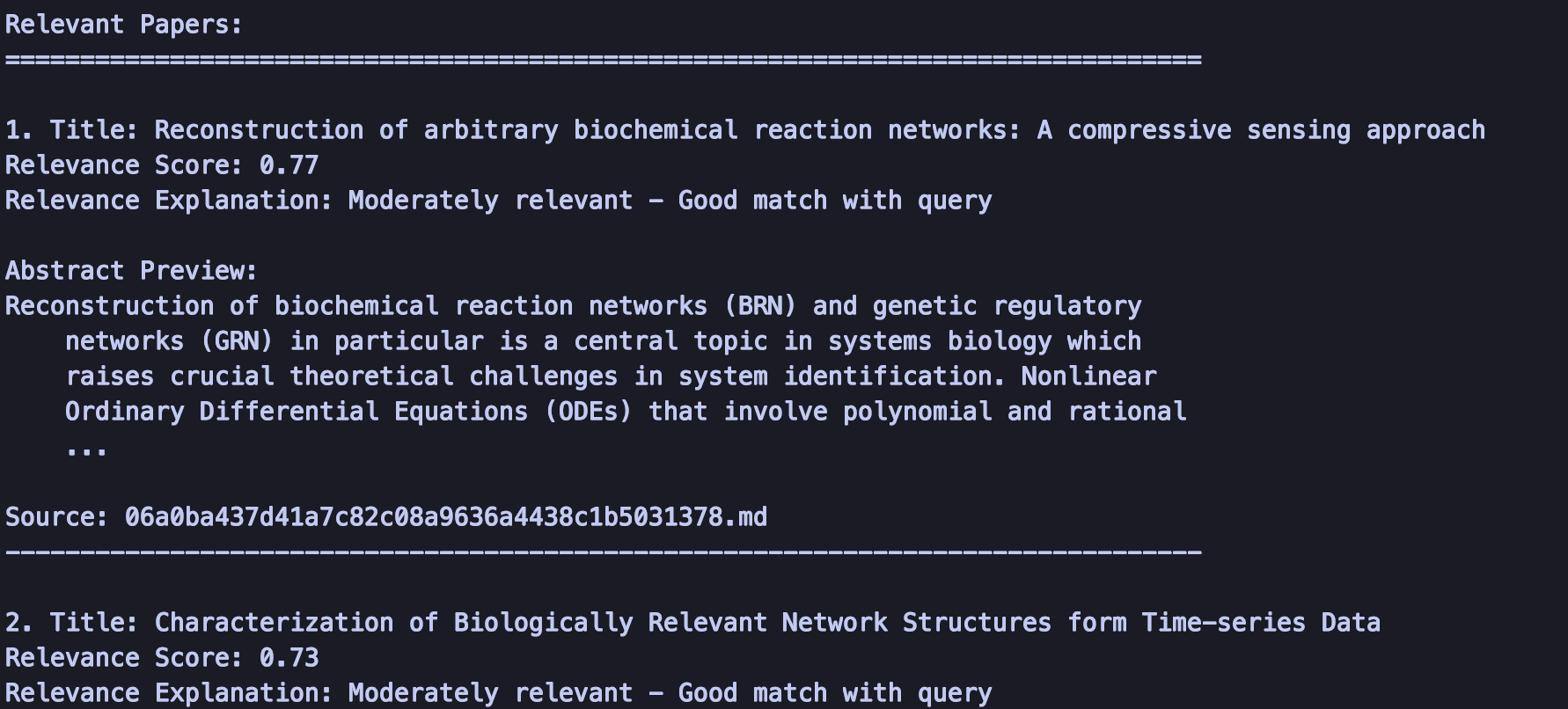

The system will provide:

1. Main Answer: Comprehensive summary

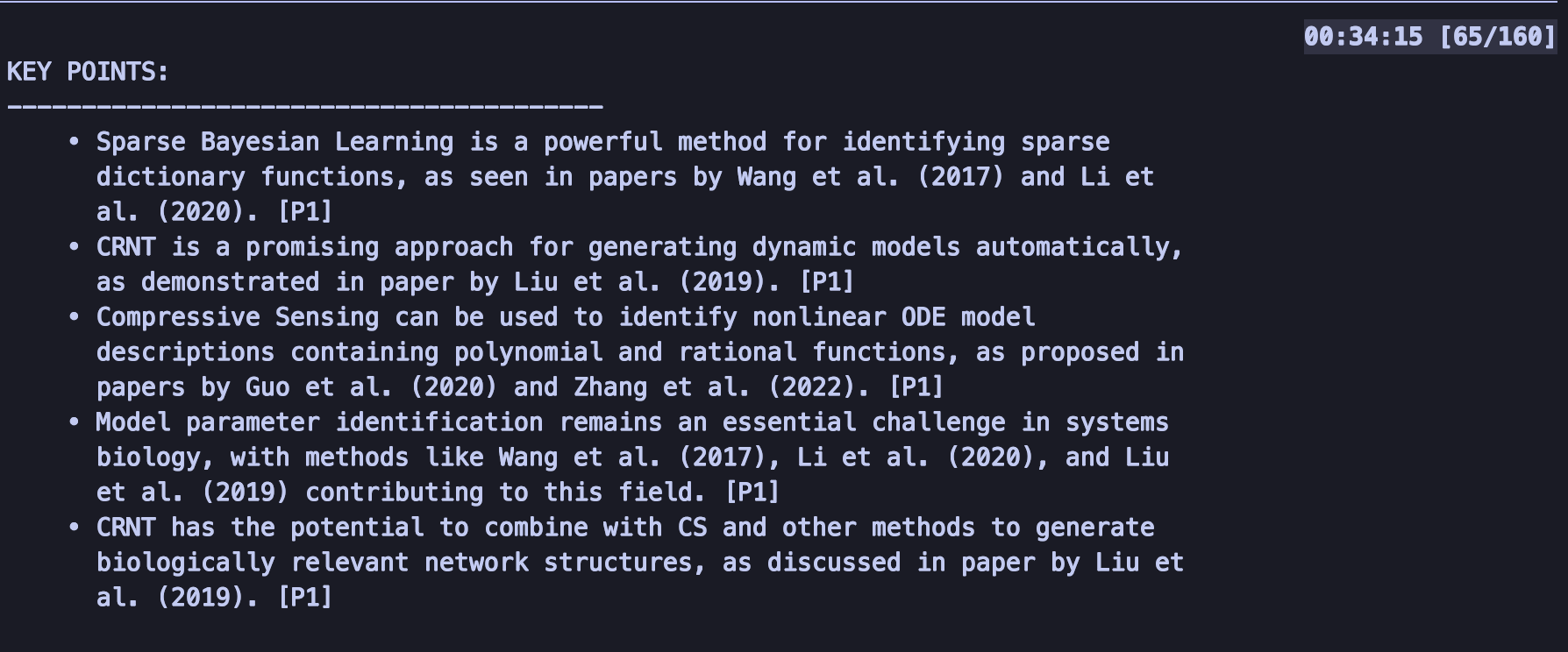

2. Key Points: Important findings

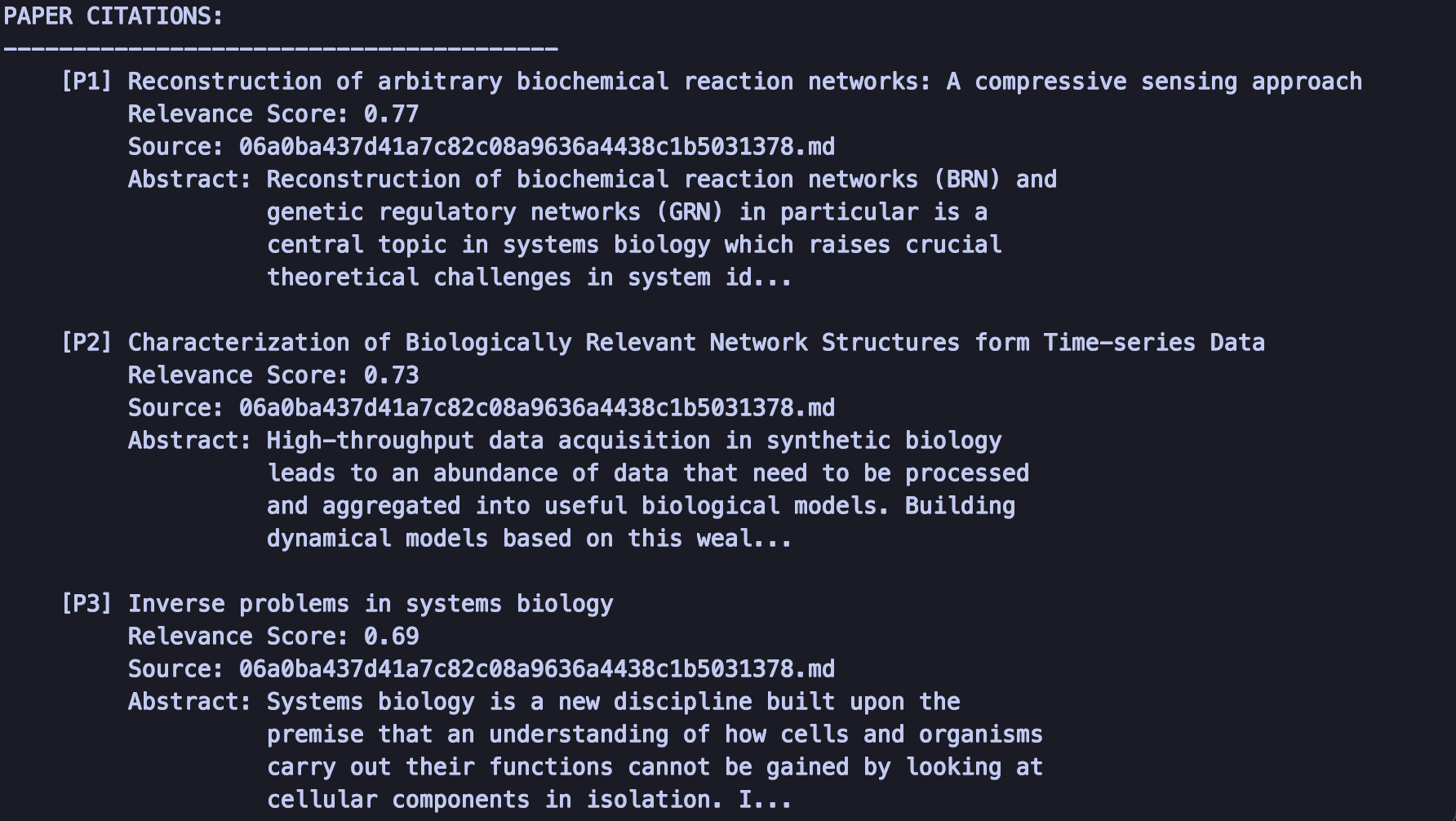

3. Paper Citations: Relevant sources

4. Limitations: Gaps in current knowledge

5. Relevance Scores: Why papers were selected
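One way to hold the five parts of a response is a small data structure with a plain-text renderer. This is an illustrative assumption, not PaperBrain's actual output format: `StructuredAnswer`, its field names, and the layout produced by `render` are hypothetical. Relevance entries carry both a score and a reason, matching the idea that the scores explain why each paper was selected.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class StructuredAnswer:
    """The five parts of a response (field names are illustrative)."""
    main_answer: str
    key_points: List[str] = field(default_factory=list)
    citations: List[str] = field(default_factory=list)
    limitations: List[str] = field(default_factory=list)
    # (paper title, relevance score, reason the paper was selected)
    relevance: List[Tuple[str, float, str]] = field(default_factory=list)

    def render(self) -> str:
        """Format the answer as plain text, most relevant papers first."""
        lines = ["Main Answer:", self.main_answer, "", "Key Points:"]
        lines += [f"- {point}" for point in self.key_points]
        lines += ["", "Paper Citations:"]
        lines += [f"[{i}] {title}" for i, title in enumerate(self.citations, 1)]
        lines += ["", "Limitations:"]
        lines += [f"- {gap}" for gap in self.limitations]
        lines += ["", "Relevance Scores:"]
        ranked = sorted(self.relevance, key=lambda r: r[1], reverse=True)
        lines += [f"- {title} ({score:.2f}): {reason}" for title, score, reason in ranked]
        return "\n".join(lines)
```

Asking the LLM to fill a fixed set of fields, then rendering them in code, keeps the layout consistent even when the model's wording varies.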

research-lens/

├── docker-compose.yml

├── requirements.txt

├── README.md

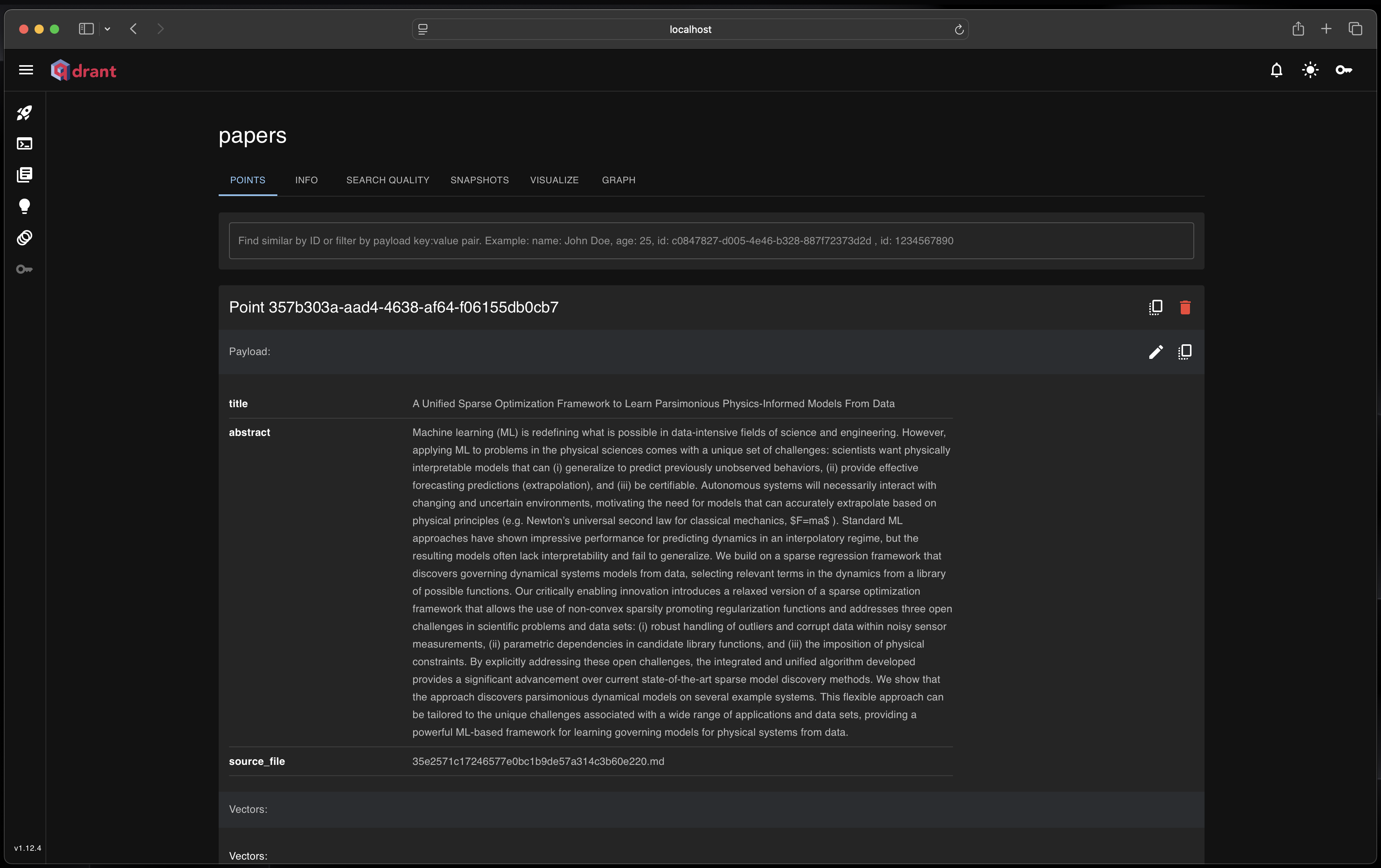

├── vector.py # Paper ingestion and processing

├── llmquery.py # Main Q&A interface

├── query.py # To query qdrant databse without llm

├── markdowns/ # Paper storage directory

└── processed_papers.json # Paper tracking database
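`processed_papers.json` is described as a paper tracking database. A common way to implement such tracking is to store a content hash per file, so that unchanged papers are skipped on the next ingestion run and edited ones are picked up again. The sketch below assumes that design; the JSON layout (filename mapped to a SHA-256 digest), the `*.md` file pattern, and the helper names are hypothetical and not taken from `vector.py`.

```python
import hashlib
import json
from pathlib import Path
from typing import Dict, List


def file_digest(path: Path) -> str:
    """SHA-256 of the file's bytes, used to detect new or changed papers."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def load_tracking(db_path: Path) -> Dict[str, str]:
    """Load {filename: digest}; a missing file means nothing has been processed."""
    if db_path.exists():
        return json.loads(db_path.read_text(encoding="utf-8"))
    return {}


def papers_to_process(papers_dir: Path, db_path: Path) -> List[Path]:
    """Return Markdown files that are new or changed since the last run."""
    seen = load_tracking(db_path)
    return [p for p in sorted(papers_dir.glob("*.md")) if seen.get(p.name) != file_digest(p)]


def mark_processed(paths: List[Path], db_path: Path) -> None:
    """Record the current digest of each processed paper."""
    seen = load_tracking(db_path)
    for p in paths:
        seen[p.name] = file_digest(p)
    db_path.write_text(json.dumps(seen, indent=2), encoding="utf-8")
```

Hashing the content rather than checking only filenames means a revised paper is re-ingested even if its name stays the same.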

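# Configuration

Environment variables for system configuration:

```bash
QDRANT_HOST=localhost   # Qdrant server host
QDRANT_PORT=6333        # Qdrant server port
OLLAMA_HOST=localhost   # Ollama server host
OLLAMA_PORT=11434       # Ollama server port
```

# How it works

1. Paper ingestion
2. Query processing
3. Response generation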
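The four variables `QDRANT_HOST`, `QDRANT_PORT`, `OLLAMA_HOST`, and `OLLAMA_PORT` could be read with the default values listed in the configuration block. The sketch below is an assumption about how that might look, not PaperBrain's code: `ServiceConfig` and `load_config` are hypothetical names, and the plain-HTTP base URLs assume a local Docker setup.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class ServiceConfig:
    """Connection settings for the Qdrant and Ollama services (illustrative)."""
    qdrant_host: str
    qdrant_port: int
    ollama_host: str
    ollama_port: int

    @property
    def qdrant_url(self) -> str:
        return f"http://{self.qdrant_host}:{self.qdrant_port}"

    @property
    def ollama_url(self) -> str:
        return f"http://{self.ollama_host}:{self.ollama_port}"


def load_config(env: Optional[Mapping[str, str]] = None) -> ServiceConfig:
    """Read settings from the environment, falling back to the documented defaults."""
    env = os.environ if env is None else env
    return ServiceConfig(
        qdrant_host=env.get("QDRANT_HOST", "localhost"),
        qdrant_port=int(env.get("QDRANT_PORT", "6333")),
        ollama_host=env.get("OLLAMA_HOST", "localhost"),
        ollama_port=int(env.get("OLLAMA_PORT", "11434")),
    )
```

Accepting an explicit mapping keeps `load_config` testable without modifying the real process environment; calling it with no argument reads `os.environ`.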

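# Contributing

Contributions are welcome! Please:

1. Create a feature branch (`git checkout -b feature/amazing-feature`)
2. Commit your changes (`git commit -m 'Add amazing feature'`)
3. Push the branch (`git push origin feature/amazing-feature`)

# License

This project is licensed under the MIT License. See the LICENSE file for details.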

# Citation

If you use this project in your research, please cite:

```bibtex
@software{PaperBrain_2024,
  author = {Ansh Kumar and Apoorva Gupta},
  title  = {PaperBrain: Intelligent Research Paper Q\&A System},
  year   = {2024},
  url    = {https://github.com/ansh-info/PaperBrain}
}
```