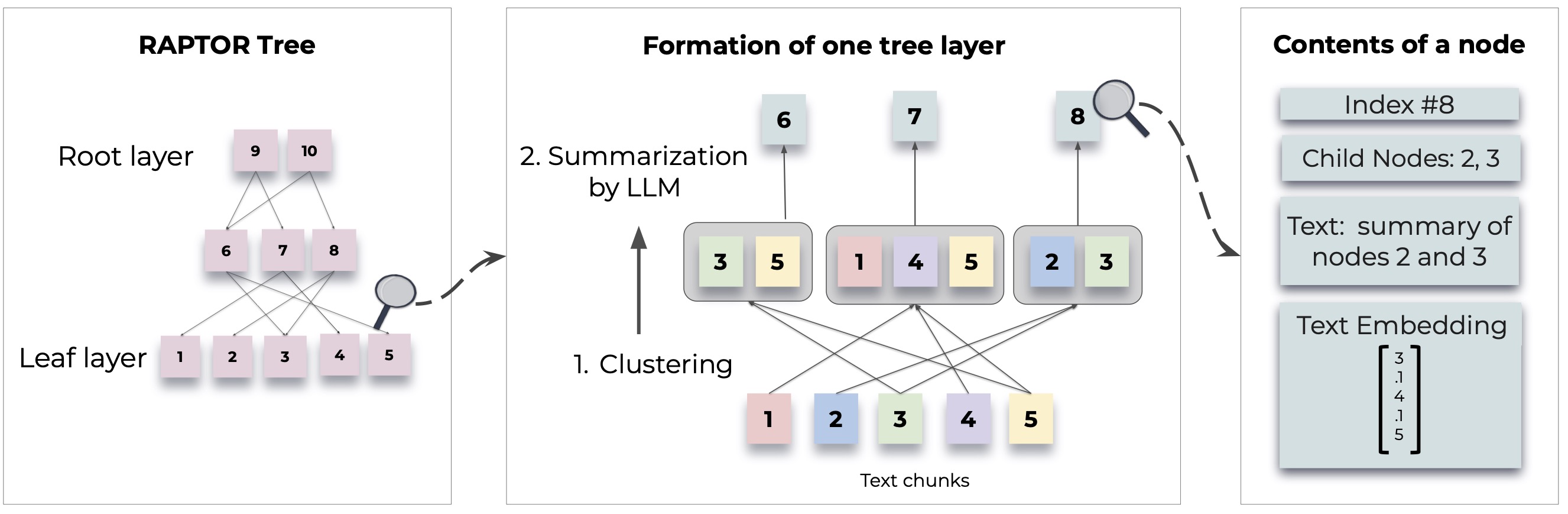

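RAPTOR introduces a novel approach to retrieval-augmented language models by constructing a recursive tree structure from documents. This allows for more efficient and context-aware information retrieval across large texts, addressing common limitations of traditional language models.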
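To make the idea of a recursive tree concrete, here is a minimal, self-contained sketch. It is not RAPTOR's implementation: the names `Node`, `split_into_chunks`, `toy_summarize`, `build_tree`, and `collect_nodes` are hypothetical, chunks are grouped by position rather than by semantic similarity, and the "summary" is a plain truncation standing in for a language-model call. It only illustrates the shape of the structure, with leaf chunks at the bottom and progressively more condensed parent nodes above them.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    """One tree node: leaf nodes hold raw chunks, parents hold summaries."""
    text: str
    level: int
    children: List["Node"] = field(default_factory=list)


def split_into_chunks(text: str, max_words: int = 20) -> List[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def toy_summarize(texts: List[str], max_words: int = 20) -> str:
    """Placeholder summarizer (assumption): keep the first few words of each child."""
    per_child = max(1, max_words // len(texts))
    return " ".join(" ".join(t.split()[:per_child]) for t in texts)


def build_tree(text: str, group_size: int = 3) -> Node:
    """Group nodes layer by layer and summarize each group until one root remains."""
    if group_size < 2:
        raise ValueError("group_size must be at least 2 so each layer shrinks")
    layer = [Node(chunk, 0) for chunk in split_into_chunks(text)]
    if not layer:
        raise ValueError("text must contain at least one word")
    level = 0
    while len(layer) > 1:
        level += 1
        groups = [layer[i:i + group_size] for i in range(0, len(layer), group_size)]
        layer = [Node(toy_summarize([n.text for n in g]), level, g) for g in groups]
    return layer[0]


def collect_nodes(root: Node) -> List[Node]:
    """Return every node in the tree (all levels), root first."""
    nodes = [root]
    for child in root.children:
        nodes.extend(collect_nodes(child))
    return nodes
```

Because every level is kept, a retriever built on such a tree can match a question against both fine-grained leaf chunks and broader summaries.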

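For the detailed methodology and implementation, refer to the original paper (see the citation at the end of this document).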

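Before using RAPTOR, ensure that Python 3.8+ is installed. Clone the RAPTOR repository and install the necessary dependencies:

```bash
git clone https://github.com/parthsarthi03/raptor.git
cd raptor
pip install -r requirements.txt
```

To get started with RAPTOR, follow these steps: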

First, set your OpenAI API key and initialize the RAPTOR configuration:

```python
import os
os.environ["OPENAI_API_KEY"] = "your-openai-api-key"

from raptor import RetrievalAugmentation

# Initialize with default configuration. For advanced configurations, check the documentation. [WIP]
RA = RetrievalAugmentation()
```

Add your text documents to RAPTOR for indexing:

```python
with open('sample.txt', 'r') as file:
    text = file.read()
RA.add_documents(text)
```

You can now use RAPTOR to answer questions based on the indexed documents:

```python
question = "How did Cinderella reach her happy ending?"
answer = RA.answer_question(question=question)
print("Answer: ", answer)
```

Save the constructed tree to a specified path:

```python
SAVE_PATH = "demo/cinderella"
RA.save(SAVE_PATH)
```

Load the saved tree back into RAPTOR:

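```python
RA = RetrievalAugmentation(tree=SAVE_PATH)
answer = RA.answer_question(question=question)
```

RAPTOR is designed to be flexible and allows you to integrate any models for summarization, question answering (QA), and embedding generation. Here is how to extend RAPTOR with your own models: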

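If you wish to use a different language model for summarization, you can do so by extending the `BaseSummarizationModel` class. Implement the `summarize` method to integrate your custom summarization logic:

```python
from raptor import BaseSummarizationModel

class CustomSummarizationModel(BaseSummarizationModel):
    def __init__(self):
        # Initialize your model here
        pass

    def summarize(self, context, max_tokens=150):
        # Implement your summarization logic here
        # Return the summary as a string
        summary = "Your summary here"
        return summary
```

For custom QA models, extend the `BaseQAModel` class and implement the `answer_question` method. This method should return the best answer your model finds, given a context and a question: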

```python
from raptor import BaseQAModel

class CustomQAModel(BaseQAModel):
    def __init__(self):
        # Initialize your model here
        pass

    def answer_question(self, context, question):
        # Implement your QA logic here
        # Return the answer as a string
        answer = "Your answer here"
        return answer
```

To use a different embedding model, extend the `BaseEmbeddingModel` class. Implement the `create_embedding` method, which should return a vector representation of the input text:

```python
from raptor import BaseEmbeddingModel

class CustomEmbeddingModel(BaseEmbeddingModel):
    def __init__(self, embedding_dim=768):
        # Initialize your model here.
        # embedding_dim is a placeholder value; set it to your model's output size.
        self.embedding_dim = embedding_dim

    def create_embedding(self, text):
        # Implement your embedding logic here
        # Return the embedding as a numpy array or a list of floats
        embedding = [0.0] * self.embedding_dim  # Replace with actual embedding logic
        return embedding
```

After implementing your custom models, integrate them with RAPTOR as follows:

```python
from raptor import RetrievalAugmentation, RetrievalAugmentationConfig

# Initialize your custom models
custom_summarizer = CustomSummarizationModel()
custom_qa = CustomQAModel()
custom_embedding = CustomEmbeddingModel()

# Create a config with your custom models
custom_config = RetrievalAugmentationConfig(
    summarization_model=custom_summarizer,
    qa_model=custom_qa,
    embedding_model=custom_embedding
)

# Initialize RAPTOR with your custom config
RA = RetrievalAugmentation(config=custom_config)
```

Check out `demo.ipynb` for examples of how to specify your own summarization and QA models, such as Llama, Mistral, or Gemma, and embedding models, such as SBERT, for use with RAPTOR.
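Before wiring in a heavyweight model, it can help to check the plumbing with a dependency-free stand-in. The sketch below is a hypothetical `HashingEmbeddingModel` (not part of RAPTOR) that follows the `create_embedding` contract described above by hashing tokens into a fixed-size, L2-normalized vector. It is meant only for smoke tests without API keys or model downloads; its vectors carry no real semantics, so retrieval quality will be poor. The fallback stub for `BaseEmbeddingModel` is an assumption added so the snippet also runs where RAPTOR is not installed.

```python
import hashlib
import math
from typing import List

try:
    from raptor import BaseEmbeddingModel
except ImportError:
    # Fallback stub (assumption) so this sketch runs without RAPTOR installed.
    class BaseEmbeddingModel:
        def create_embedding(self, text):
            raise NotImplementedError


class HashingEmbeddingModel(BaseEmbeddingModel):
    """Toy embedding: bag of hashed tokens, normalized to unit length."""

    def __init__(self, dim: int = 64):
        if dim <= 0:
            raise ValueError("dim must be positive")
        self.dim = dim

    def create_embedding(self, text: str) -> List[float]:
        vec = [0.0] * self.dim
        for token in text.lower().split():
            # A stable hash keeps embeddings identical across runs and machines.
            digest = hashlib.sha256(token.encode("utf-8")).digest()
            index = int.from_bytes(digest[:4], "big") % self.dim
            vec[index] += 1.0
        norm = math.sqrt(sum(v * v for v in vec))
        # Empty input has no tokens, so return the zero vector unchanged.
        return [v / norm for v in vec] if norm else vec
```

An instance can be passed as `embedding_model` in `RetrievalAugmentationConfig` in the same way as `custom_embedding` above, then swapped for a real model once the pipeline runs end to end.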

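Note: More examples and ways to configure RAPTOR are forthcoming. Advanced usage and additional features will be provided in the documentation and repository updates.

RAPTOR is an open-source project, and contributions are welcome. Whether you are fixing bugs, adding new features, or improving documentation, your help is appreciated.

RAPTOR is released under the MIT License. See the LICENSE file in the repository for full details.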

If RAPTOR assists in your research, please cite it as follows:

```bibtex
@inproceedings{sarthi2024raptor,
    title={RAPTOR: Recursive Abstractive Processing for Tree-Organized Retrieval},
    author={Sarthi, Parth and Abdullah, Salman and Tuli, Aditi and Khanna, Shubh and Goldie, Anna and Manning, Christopher D.},
    booktitle={International Conference on Learning Representations (ICLR)},
    year={2024}
}
```

Stay tuned for more examples, configuration guides, and updates.