講師是最受歡迎的Python庫,用於與大型語言模型(LLMS)的結構化輸出合作,每月下載超過60萬。它建立在Pydantic的頂部,提供了一種簡單,透明且用戶友好的API,可以管理驗證,重試和流響應。準備好通過社區的最佳選擇來增強LLM工作流程!

如果您的公司經常使用教練,我們很樂意在我們的網站上擁有您的徽標!請填寫此表格

使用單個命令安裝講師:

pip install -U instructor現在,讓我們以一個簡單的示例來看講師:

import instructor

from pydantic import BaseModel

from openai import OpenAI

# Define your desired output structure

class UserInfo ( BaseModel ):

name : str

age : int

# Patch the OpenAI client

client = instructor . from_openai ( OpenAI ())

# Extract structured data from natural language

user_info = client . chat . completions . create (

model = "gpt-4o-mini" ,

response_model = UserInfo ,

messages = [{ "role" : "user" , "content" : "John Doe is 30 years old." }],

)

print ( user_info . name )

#> John Doe

print ( user_info . age )

#> 30講師提供了一個強大的掛鉤系統,使您可以攔截和記錄LLM交互過程的各個階段。這是一個簡單的示例,演示瞭如何使用鉤子:

import instructor

from openai import OpenAI

from pydantic import BaseModel

class UserInfo ( BaseModel ):

name : str

age : int

# Initialize the OpenAI client with Instructor

client = instructor . from_openai ( OpenAI ())

# Define hook functions

def log_kwargs ( ** kwargs ):

print ( f"Function called with kwargs: { kwargs } " )

def log_exception ( exception : Exception ):

print ( f"An exception occurred: { str ( exception ) } " )

client . on ( "completion:kwargs" , log_kwargs )

client . on ( "completion:error" , log_exception )

user_info = client . chat . completions . create (

model = "gpt-4o-mini" ,

response_model = UserInfo ,

messages = [

{ "role" : "user" , "content" : "Extract the user name: 'John is 20 years old'" }

],

)

"""

{

'args': (),

'kwargs': {

'messages': [

{

'role': 'user',

'content': "Extract the user name: 'John is 20 years old'",

}

],

'model': 'gpt-4o-mini',

'tools': [

{

'type': 'function',

'function': {

'name': 'UserInfo',

'description': 'Correctly extracted `UserInfo` with all the required parameters with correct types',

'parameters': {

'properties': {

'name': {'title': 'Name', 'type': 'string'},

'age': {'title': 'Age', 'type': 'integer'},

},

'required': ['age', 'name'],

'type': 'object',

},

},

}

],

'tool_choice': {'type': 'function', 'function': {'name': 'UserInfo'}},

},

}

"""

print ( f"Name: { user_info . name } , Age: { user_info . age } " )

#> Name: John, Age: 20此示例證明:

鉤子為函數的輸入和任何錯誤提供了寶貴的見解,從而增強了調試和監視功能。

import instructor

from anthropic import Anthropic

from pydantic import BaseModel

class User ( BaseModel ):

name : str

age : int

client = instructor . from_anthropic ( Anthropic ())

# note that client.chat.completions.create will also work

resp = client . messages . create (

model = "claude-3-opus-20240229" ,

max_tokens = 1024 ,

system = "You are a world class AI that excels at extracting user data from a sentence" ,

messages = [

{

"role" : "user" ,

"content" : "Extract Jason is 25 years old." ,

}

],

response_model = User ,

)

assert isinstance ( resp , User )

assert resp . name == "Jason"

assert resp . age == 25確保安裝cohere並使用export CO_API_KEY=<YOUR_COHERE_API_KEY>設置系統環境變量。

pip install cohere

import instructor

import cohere

from pydantic import BaseModel

class User ( BaseModel ):

name : str

age : int

client = instructor . from_cohere ( cohere . Client ())

# note that client.chat.completions.create will also work

resp = client . chat . completions . create (

model = "command-r-plus" ,

max_tokens = 1024 ,

messages = [

{

"role" : "user" ,

"content" : "Extract Jason is 25 years old." ,

}

],

response_model = User ,

)

assert isinstance ( resp , User )

assert resp . name == "Jason"

assert resp . age == 25確保安裝Google AI Python SDK。您應該使用API密鑰設置GOOGLE_API_KEY環境變量。雙子座工具調用還需要安裝jsonref 。

pip install google-generativeai jsonref

import instructor

import google . generativeai as genai

from pydantic import BaseModel

class User ( BaseModel ):

name : str

age : int

# genai.configure(api_key=os.environ["API_KEY"]) # alternative API key configuration

client = instructor . from_gemini (

client = genai . GenerativeModel (

model_name = "models/gemini-1.5-flash-latest" , # model defaults to "gemini-pro"

),

mode = instructor . Mode . GEMINI_JSON ,

)另外,您可以從OpenAI客戶端致電Gemini。您必須設置gcloud ,在頂點AI上進行設置,然後安裝Google Auth庫。

pip install google-auth import google . auth

import google . auth . transport . requests

import instructor

from openai import OpenAI

from pydantic import BaseModel

creds , project = google . auth . default ()

auth_req = google . auth . transport . requests . Request ()

creds . refresh ( auth_req )

# Pass the Vertex endpoint and authentication to the OpenAI SDK

PROJECT = 'PROJECT_ID'

LOCATION = (

'LOCATION' # https://cloud.google.com/vertex-ai/generative-ai/docs/learn/locations

)

base_url = f'https:// { LOCATION } -aiplatform.googleapis.com/v1beta1/projects/ { PROJECT } /locations/ { LOCATION } /endpoints/openapi'

client = instructor . from_openai (

OpenAI ( base_url = base_url , api_key = creds . token ), mode = instructor . Mode . JSON

)

# JSON mode is req'd

class User ( BaseModel ):

name : str

age : int

resp = client . chat . completions . create (

model = "google/gemini-1.5-flash-001" ,

max_tokens = 1024 ,

messages = [

{

"role" : "user" ,

"content" : "Extract Jason is 25 years old." ,

}

],

response_model = User ,

)

assert isinstance ( resp , User )

assert resp . name == "Jason"

assert resp . age == 25 import instructor

from litellm import completion

from pydantic import BaseModel

class User ( BaseModel ):

name : str

age : int

client = instructor . from_litellm ( completion )

resp = client . chat . completions . create (

model = "claude-3-opus-20240229" ,

max_tokens = 1024 ,

messages = [

{

"role" : "user" ,

"content" : "Extract Jason is 25 years old." ,

}

],

response_model = User ,

)

assert isinstance ( resp , User )

assert resp . name == "Jason"

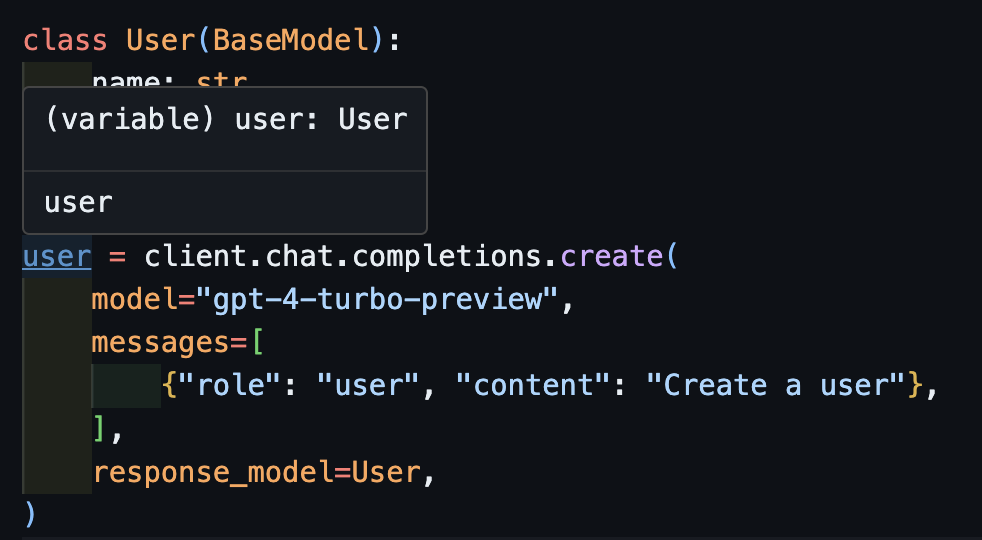

assert resp . age == 25 這是教練的夢想,但是由於Openai的修補,我不可能打字得很好。現在,有了新客戶,我們可以打字可以運行良好!我們還添加了一些create_*方法,以使創建迭代和部分的創建和訪問原始完成。

create import openai

import instructor

from pydantic import BaseModel

class User ( BaseModel ):

name : str

age : int

client = instructor . from_openai ( openai . OpenAI ())

user = client . chat . completions . create (

model = "gpt-4-turbo-preview" ,

messages = [

{ "role" : "user" , "content" : "Create a user" },

],

response_model = User ,

)現在,如果使用IDE,可以看到該類型已正確推斷。

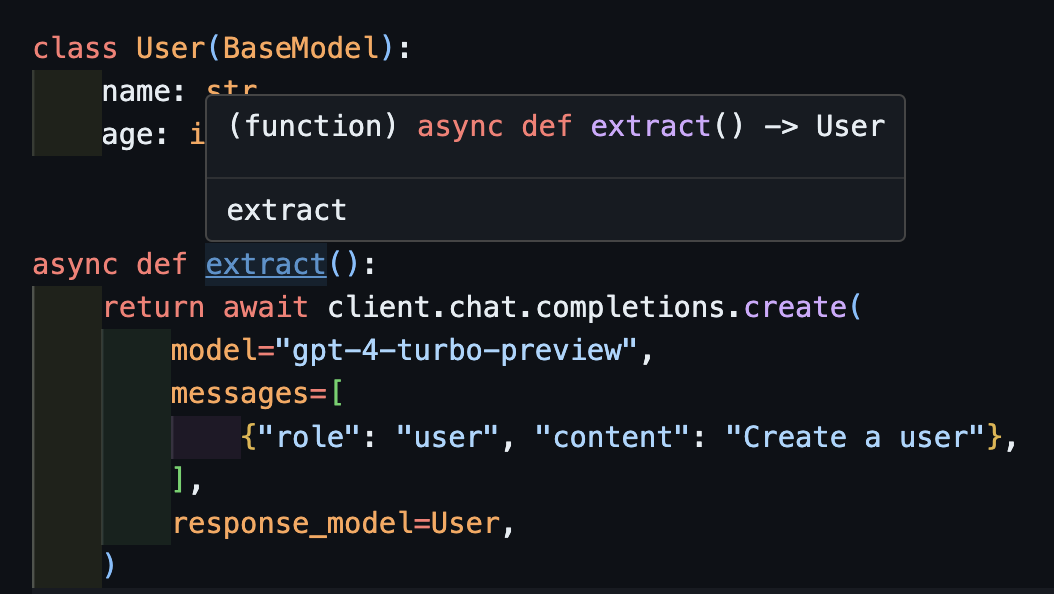

await create這也將與異步客戶端正確使用。

import openai

import instructor

from pydantic import BaseModel

client = instructor . from_openai ( openai . AsyncOpenAI ())

class User ( BaseModel ):

name : str

age : int

async def extract ():

return await client . chat . completions . create (

model = "gpt-4-turbo-preview" ,

messages = [

{ "role" : "user" , "content" : "Create a user" },

],

response_model = User ,

)請注意,僅僅因為我們返回create方法, extract()函數將返回正確的用戶類型。

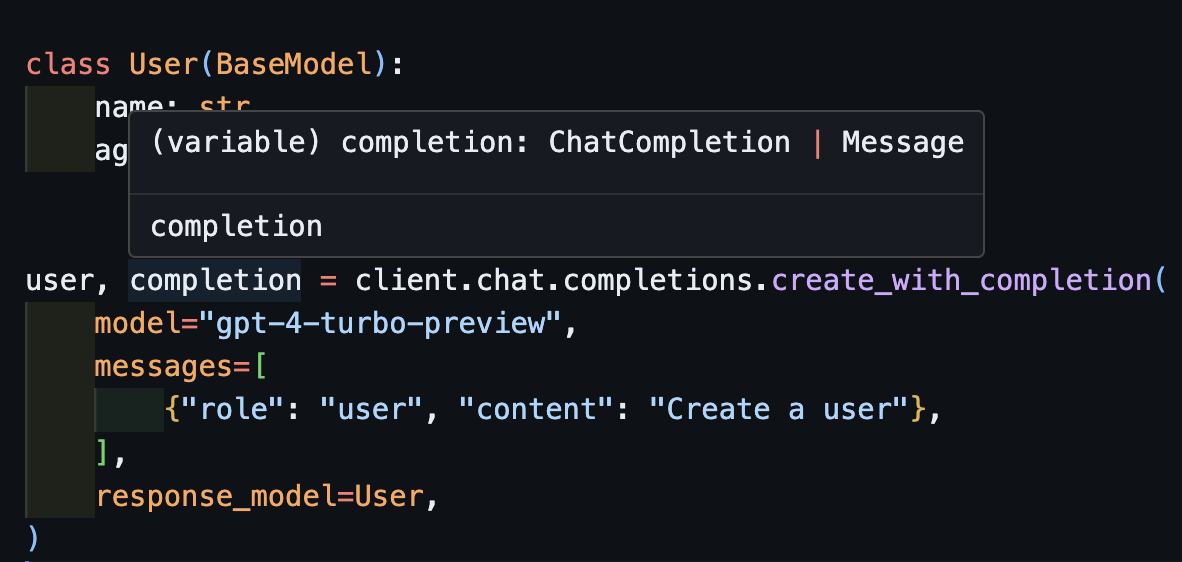

create_with_completion您也可以返回原始的完成對象

import openai

import instructor

from pydantic import BaseModel

client = instructor . from_openai ( openai . OpenAI ())

class User ( BaseModel ):

name : str

age : int

user , completion = client . chat . completions . create_with_completion (

model = "gpt-4-turbo-preview" ,

messages = [

{ "role" : "user" , "content" : "Create a user" },

],

response_model = User ,

)

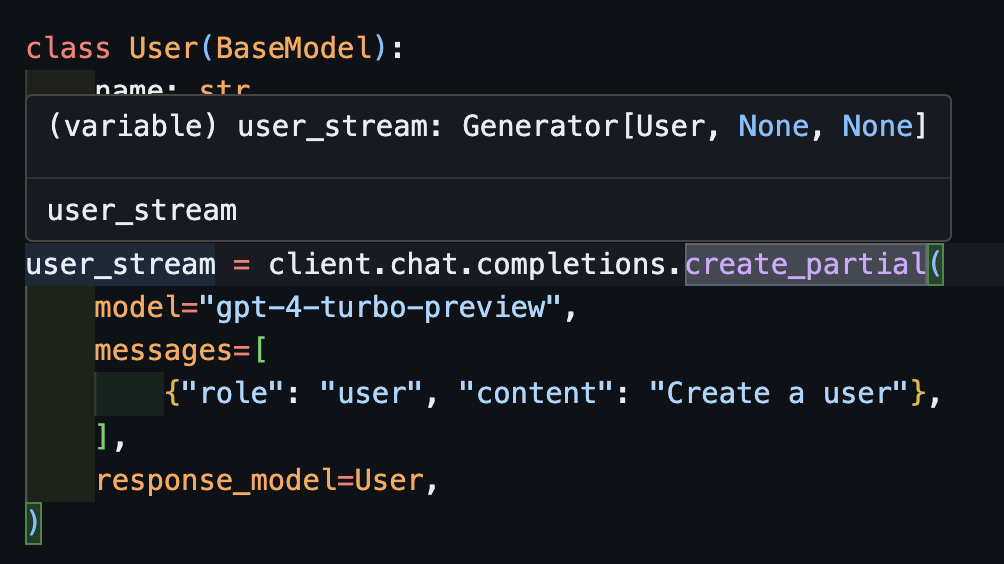

create_partial為了處理流,我們仍然支持Iterable[T]和Partial[T]但是為了簡化類型推理,我們還添加了create_iterable和create_partial方法!

import openai

import instructor

from pydantic import BaseModel

client = instructor . from_openai ( openai . OpenAI ())

class User ( BaseModel ):

name : str

age : int

user_stream = client . chat . completions . create_partial (

model = "gpt-4-turbo-preview" ,

messages = [

{ "role" : "user" , "content" : "Create a user" },

],

response_model = User ,

)

for user in user_stream :

print ( user )

#> name=None age=None

#> name=None age=None

#> name=None age=None

#> name=None age=None

#> name=None age=None

#> name=None age=None

#> name='John Doe' age=None

#> name='John Doe' age=None

#> name='John Doe' age=None

#> name='John Doe' age=30

#> name='John Doe' age=30

# name=None age=None

# name='' age=None

# name='John' age=None

# name='John Doe' age=None

# name='John Doe' age=30現在請注意,推斷的類型是Generator[User, None]

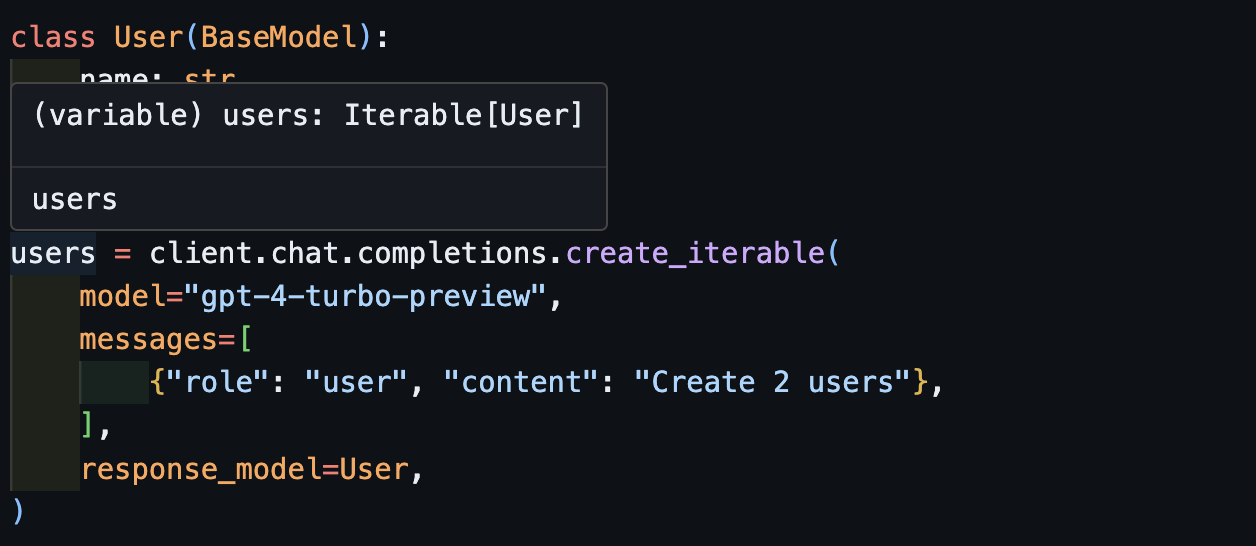

create_iterable當我們要提取多個對象時,我們會得到一個對象的功能。

import openai

import instructor

from pydantic import BaseModel

client = instructor . from_openai ( openai . OpenAI ())

class User ( BaseModel ):

name : str

age : int

users = client . chat . completions . create_iterable (

model = "gpt-4-turbo-preview" ,

messages = [

{ "role" : "user" , "content" : "Create 2 users" },

],

response_model = User ,

)

for user in users :

print ( user )

#> name='John Doe' age=30

#> name='Jane Doe' age=28

# User(name='John Doe', age=30)

# User(name='Jane Smith', age=25)

我們邀請您為pytest中的Evals做出貢獻,以監視OpenAI模型和instructor庫的質量。首先,請查看Evals的人類和Openai,並以Pytest測試的形式貢獻您自己的Evals。這些EVALS將每週運行一次,結果將發布。

如果您想提供幫助,請查看一些標記為good-first-issue或在這裡help-wanted問題。它們可以是改進代碼,來賓博客文章或新食譜的任何內容。

我們還提供一些添加的CLI功能,以便於方便:

instructor jobs :這有助於使用OpenAI創建微調工作。簡單使用instructor jobs create-from-file --help - 螺旋

instructor files :輕鬆管理上傳的文件。您將能夠從命令行創建,刪除和上傳文件

instructor usage :您可以每次前往OpenAI站點,而是可以監視CLI的使用情況,並按日期和時間段過濾。請注意,用法通常需要約5-10分鐘才能從Openai的一邊更新

該項目是根據MIT許可證的條款獲得許可的。