代码,数据集,文章“大语模型对归因的自动评估”的模型

2023年6月26日:1)包括GPT-4在内的更多模型的评估结果。 2)彻底重新检查Attreval-Gensearch数据集并纠正某些注释问题。更新了数据集。 3)培训和评估代码以及发布的模型检查点。

我们在以下位置发布数据集(包括培训和两个评估集:Attreval-Simulation和Attreval-Gensearch):HuggingFace数据集(更多详细信息可以在数据集页面上找到)

#loading dataset

from datasets import load_dataset

# training

attr_train = load_dataset ( "osunlp/AttrScore" , "combined_train" )

# test

# attr_eval_simulation = load_dataset("osunlp/AttrScore", "attreval_simulation")

attr_eval_gensearch = load_dataset ( "osunlp/AttrScore" , "attreval_gensearch" )我们展示了促使LLM和相关任务的重新调整数据的微调LLM的结果。

| 模拟 | Gensearch | ||||||||

|---|---|---|---|---|---|---|---|---|---|

| 环境 | 型号(尺寸) | attr。 | 反对。 | 额外的。 | 全面的 | attr。 | 反对。 | 额外的。 | 全面的 |

| 零射 | 羊驼(7b) | 50.0 | 4.0 | 1.4 | 33.6 | 50.7 | 8.6 | 3.6 | 34.3 |

| 羊驼(13b) | 48.3 | 5.6 | 2.2 | 33.5 | 50.6 | 6.1 | 19.3 | 34.7 | |

| 维库纳(13b) | 46.3 | 8.3 | 21.6 | 34.6 | 54.4 | 13.3 | 26.1 | 41.4 | |

| chatgpt | 45.7 | 17.9 | 52.7 | 43.2 | 61.2 | 20.6 | 53.3 | 55.0 | |

| GPT-4 | 58.7 | 23.2 | 61.5 | 55.6 | 87.3 | 45.0 | 89.6 | 85.1 | |

| 几次 | 羊驼(7b) | 45.4 | 8.2 | 9.6 | 31.9 | 49.6 | 5.2 | 13.5 | 37.2 |

| 羊驼(13b) | 38.9 | 20.1 | 2.2 | 33.1 | 50.5 | 10.3 | 5.6 | 34.8 | |

| 维库纳(13b) | 35.4 | 37.2 | 0.3 | 32.6 | 50.6 | 9.1 | 8.4 | 34.1 | |

| chatgpt | 46.6 | 27.6 | 35.8 | 39.2 | 62.6 | 26.8 | 49.5 | 53.3 | |

| GPT-4 | 61.1 | 31.3 | 68.8 | 60.0 | 85.2 | 53.3 | 88.9 | 84.3 | |

| 微调 | 罗伯塔(330m) | 62.5 | 54.6 | 74.7 | 65.0 | 47.2 | 25.2 | 62.3 | 49.8 |

| GPT2(1.5B) | 63.6 | 54.6 | 71.9 | 63.5 | 51.1 | 18.6 | 60.7 | 47.4 | |

| T5(770m) | 45.9 | 57.1 | 71.6 | 59.1 | 58.5 | 24.3 | 72.5 | 61.6 | |

| Flan-T5(770m) | 57.3 | 50.1 | 70.5 | 59.3 | 64.3 | 27.6 | 72.9 | 64.5 | |

| Flan-T5(3b) | 48.1 | 48.7 | 67.1 | 55.7 | 77.7 | 44.4 | 80.0 | 75.2 | |

| Flan-T5(11b) | 48.4 | 49.9 | 66.5 | 55.4 | 81.6 | 38.9 | 76.9 | 72.7 | |

| 骆驼(7b) | 62.2 | 50.7 | 74.6 | 62.8 | 77.9 | 41.1 | 78.3 | 72.5 | |

| 羊驼(7b) | 66.8 | 41.1 | 76.8 | 64.5 | 73.0 | 30.2 | 80.0 | 72.5 | |

| 羊驼(13b) | 63.6 | 48.9 | 75.8 | 63.6 | 77.5 | 34.5 | 79.4 | 73.3 | |

| 维库纳(13b) | 66.2 | 49.1 | 78.6 | 66.0 | 69.4 | 37.7 | 79.9 | 72.1 |

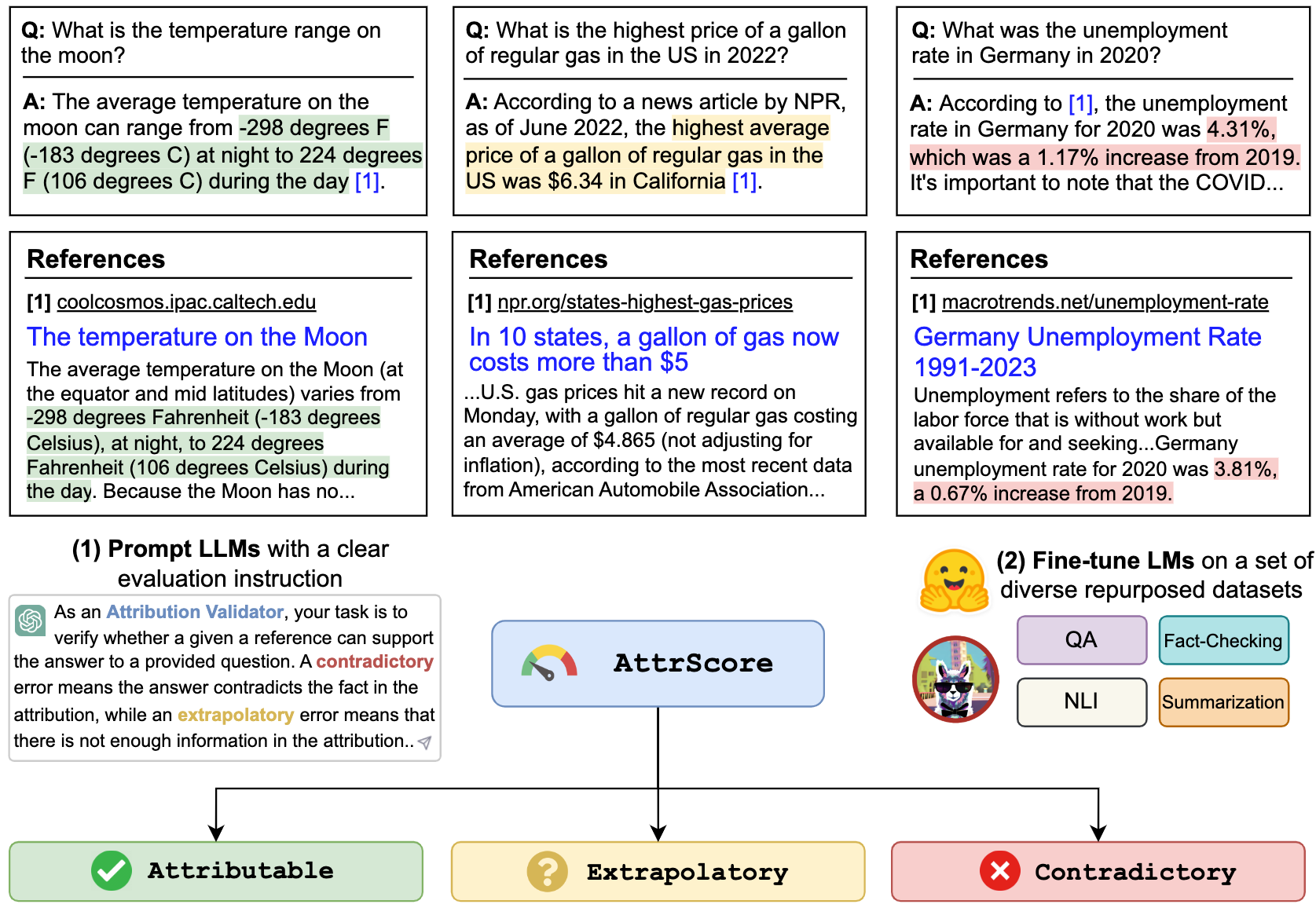

我们可以提示LLMS(例如ChatGpt和GPT-4)评估归因。输入是评估任务提示,索赔(查询 +答案的串联)和参考。例如,

验证给定的参考是否可以支持索赔。选项:归因,外推或矛盾。归因于该参考文献完全支持该索赔,外推是指参考缺乏足够的信息来验证索赔,而矛盾的是,该索赔与参考文献中提供的信息相矛盾。

主张:谁是Twitter的现任首席执行官? Twitter的现任首席执行官是Elon Musk

参考:Elon Musk是Twitter的首席执行官。马斯克(Musk)于2022年10月接任首席执行官,此前亿万富翁提议以440亿美元的价格购买社交媒体公司,试图退出,然后最终通过收购进行了收购。在成为首席执行官,前首席执行官Parag Agrawal之后,CFO NED SEGAL以及法律事务和政策主管Vijaya Gadde都被驳回了公司。

要复制Chatgpt/GPT4表中的编号,请在"./api_key.txt"中复制OpenAI API键,然后运行笔记本示例prompt_chatgpt_gpt4.ipynb

为了提示美洲驼/羊驼/维库纳,请参见下面有关这些模型的推断。

您可以在我们重新使用的数据集中微调所有LMS来评估归因。

在这里,我们举例说明了骆驼/羊驼/维库纳(Vicuna)的示例。您可以与任何Llama家庭模型一起使用--model_name_or_path 。我们对具有4个A100 80GB GPU的Llama/羊驼/Vicuna 7b/13b型号进行全面微调。

torchrun --nproc_per_node 4 train_alpaca.py

--model_name_or_path chavinlo/alpaca-13b

--data_path osunlp/AttrScore

--train_subset ' combined_train '

--input_has_query True

--num_train_samples -1

--bf16 True

--output_dir tmp/alpaca_13b_combined_train/

--evaluation_strategy steps

--eval_steps 500

--num_train_epochs 1

--model_max_length 512

--per_device_train_batch_size 2

--per_device_eval_batch_size 2

--gradient_accumulation_steps 8

--save_strategy steps

--save_steps 5000

--save_total_limit 1

--learning_rate 2e-5

--weight_decay 0.

--warmup_ratio 0.03

--lr_scheduler_type cosine

--logging_steps 1

--fsdp ' full_shard auto_wrap '

--fsdp_transformer_layer_cls_to_wrap ' LlamaDecoderLayer '

--tf32 True

您还可以加载我们的微调模型进行评估。我们提供以下在HuggingFace模型中combined_train的检查点:

例如,

from transformers import AutoTokenizer , AutoModelForSeq2SeqLM

tokenizer = AutoTokenizer . from_pretrained ( "osunlp/attrscore-flan-t5-xl" )

model = AutoModelForSeq2SeqLM . from_pretrained ( "osunlp/attrscore-flan-t5-xl" )

input = "As an Attribution Validator, your task is to verify whether a given reference can support the given claim. A claim can be either a plain sentence or a question followed by its answer. Specifically, your response should clearly indicate the relationship: Attributable, Contradictory or Extrapolatory. A contradictory error occurs when you can infer that the answer contradicts the fact presented in the context, while an extrapolatory error means that you cannot infer the correctness of the answer based on the information provided in the context. n n Claim: Who is the current CEO of Twitter? The current CEO of Twitter is Elon Musk n Reference: Elon Musk is the CEO of Twitter. Musk took over as CEO in October 2022 following a back-and-forth affair in which the billionaire proposed to purchase the social media company for $44 billion, tried to back out, and then ultimately went through with the acquisition. After becoming CEO, former CEO Parag Agrawal, CFO Ned Segal, and legal affairs and policy chief Vijaya Gadde were all dismissed from the company."

input_ids = tokenizer . encode ( input , return_tensors = "pt" )

outputs = model . generate ( input_ids )

output = tokenizer . decode ( outputs [ 0 ], skip_special_tokens = True )

print ( output ) #'Attributable'或简单地使用pipeline

from transformers import pipeline

model = pipeline ( "text2text-generation" , "osunlp/attrscore-flan-t5-xl" )

input = "As an Attribution Validator, your task is to verify whether a given reference can support the given claim. A claim can be either a plain sentence or a question followed by its answer. Specifically, your response should clearly indicate the relationship: Attributable, Contradictory or Extrapolatory. A contradictory error occurs when you can infer that the answer contradicts the fact presented in the context, while an extrapolatory error means that you cannot infer the correctness of the answer based on the information provided in the context. n n Claim: Who is the current CEO of Twitter? The current CEO of Twitter is Elon Musk n Reference: Elon Musk is the CEO of Twitter. Musk took over as CEO in October 2022 following a back-and-forth affair in which the billionaire proposed to purchase the social media company for $44 billion, tried to back out, and then ultimately went through with the acquisition. After becoming CEO, former CEO Parag Agrawal, CFO Ned Segal, and legal affairs and policy chief Vijaya Gadde were all dismissed from the company."

output = model ( input )[ 0 ][ 'generated_text' ]

print ( output ) #'Attributable'我们显示了基于骆驼的模型的推论和评估脚本:

python inference_alpaca.py

--model_name_or_path osunlp/attrscore-vicuna-13b

--test_data_path osunlp/AttrScore

--subset_name attreval_simulation

--model_max_length 512该项目中的所有数据集均仅用于研究目的。我们收集并注释数据,以在新的bing生成搜索引擎的帮助下,使用网络上的公开信息进行评估。我们承认,LLM有可能复制和扩大数据中存在的有害信息。我们通过仔细选择我们的评估数据并进行分析以识别和减轻在此过程中的潜在风险来减轻这种风险。

我们注释的评估集Attreval-Gensearch源自新的Bing,该新的Bing使用GPT-4作为骨干。至关重要的是,我们还使用GPT-4来评估Attreval-Gensearch的归因,该搜索的总体准确性约为85%。一些偏见可能来自GPT-4生成测试示例和评估归因,这可能会使我们对模型的真实性能的理解有可能偏斜。因此,我们警告不要过度优势。我们还承认,Attreval-Gensearch的大小是中等的,这可能不能完全代表属性LLMS的真正使用设置。

此外,Attreval-Simulation数据集仍然具有实际情况的差距。此模拟数据集中的错误模式可能过于简单,并且缺乏多样性,这可能会限制模型有效处理更复杂和多样化的现实世界错误的能力。还值得注意的是,该模拟数据集可能包含噪声和错误标签,这可能进一步阻碍了模型的学习和随后的性能。如何获得高质量的培训数据以进行大规模归因评估可能是未来发展的主要重点。

如果您发现此代码或数据集有用,请考虑引用我们的论文:

@article { yue2023automatic ,

title = { Automatic Evaluation of Attribution by Large Language Models } ,

author = { Yue, Xiang and Wang, Boshi and Zhang, Kai and Chen, Ziru and Su, Yu and Sun, Huan } ,

journal = { arXiv preprint arXiv:2305.06311 } ,

year = { 2023 }

}如果您有任何疑问,请随时接触。 Xiang Yue,Yu Su,Huan Sun