ByteDance, together with research teams from universities in China and Singapore, has released PhotoDoodle, a new AI image editing system. Built on the Flux.1 model, PhotoDoodle learns an artistic style from a small number of samples and carries out specific editing instructions accurately, opening up new possibilities for creative expression.

PhotoDoodle's core technology is OmniEditor, a system developed by the research team that uses LoRA (low-rank adaptation) to adapt the Flux.1 image generation model from German startup Black Forest Labs. Rather than retraining all of the original model's weights, this approach adds small dedicated matrices, which is enough to support adjustments ranging from minor concept tweaks to full style conversion.
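The low-rank idea can be illustrated with a minimal sketch. This is not PhotoDoodle's or Flux.1's actual code: the layer size, rank, scaling factor `alpha`, and the helper names `matmul` and `lora_weight` are all assumptions chosen for illustration, and plain Python lists are used to keep the example dependency-free.

```python
import random

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def lora_weight(w, a, b, alpha, rank):
    """Return W + (alpha / rank) * (B @ A) without modifying W."""
    scale = alpha / rank
    delta = matmul(b, a)
    return [[w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]

random.seed(0)
d_out, d_in, rank, alpha = 64, 64, 4, 4.0  # illustrative sizes, not Flux.1's

# Frozen base weight: stands in for one layer of the pretrained model.
w = [[random.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]

# Trainable low-rank factors. A starts small and random, B starts at zero,
# so the adapted layer initially behaves exactly like the base layer.
a = [[random.gauss(0, 0.01) for _ in range(d_in)] for _ in range(rank)]
b = [[0.0] * rank for _ in range(d_out)]

adapted = lora_weight(w, a, b, alpha, rank)

base_params = d_out * d_in           # parameters in the frozen layer
lora_params = rank * (d_in + d_out)  # parameters actually trained
print(f"base: {base_params}, LoRA: {lora_params}")
```

Only `a` and `b` would be updated during training, so the number of trained values grows with the rank rather than with the full size of the layer.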

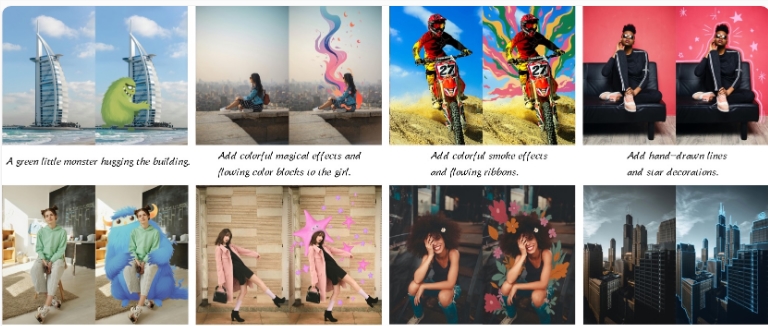

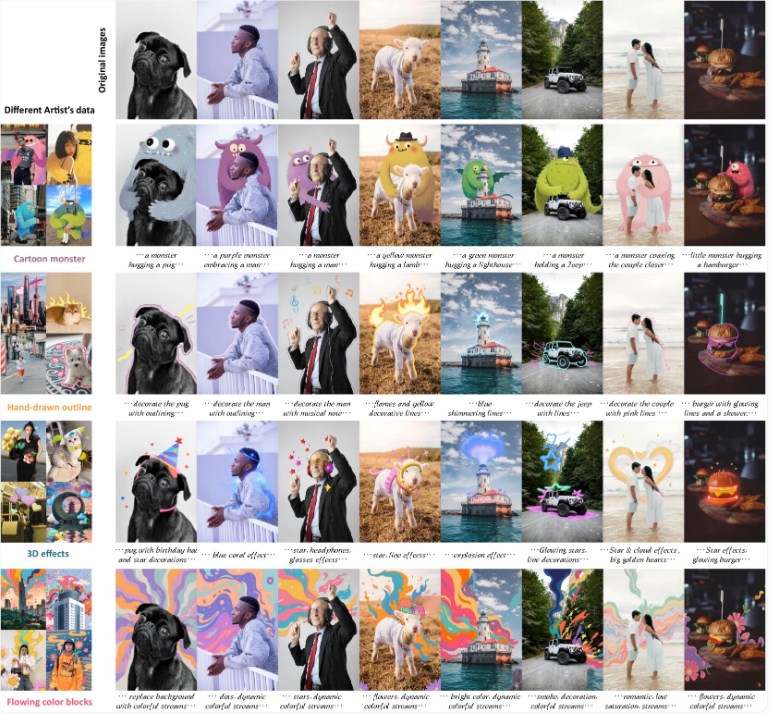

The researchers then used a variant called EditLoRA to train OmniEditor to reproduce a specific artistic style. Using selected image pairs created in collaboration with artists, the system learns the subtleties of each style.

PhotoDoodle's most eye-catching innovation is its "positional encoding cloning" technique. It lets the model keep track of the exact location of each pixel in the original image, which preserves the composition when new elements are added and helps those elements blend naturally into the background.
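One way to picture cloning position information is the sketch below. It assumes image tokens sit on a 2D grid with `(row, col)` position IDs and that the source-image tokens are appended after the target tokens; the function names and the token ordering are hypothetical and not taken from the PhotoDoodle code.

```python
def grid_position_ids(height, width):
    """Return one (row, col) position ID per token, in row-major order."""
    return [(r, c) for r in range(height) for c in range(width)]

def cloned_sequence_position_ids(height, width):
    """Position IDs for a [target tokens | source tokens] sequence.

    The source tokens reuse the target tokens' IDs instead of being
    shifted to a separate region, so token k of the source image and
    token k of the edited image refer to the same spatial location.
    """
    target_ids = grid_position_ids(height, width)
    source_ids = list(target_ids)  # the "clone": identical IDs
    return target_ids + source_ids

height, width = 2, 3  # tiny grid for illustration
ids = cloned_sequence_position_ids(height, width)
n = height * width

for k in range(n):
    print(f"token {k}: target {ids[k]}, source {ids[n + k]}")
```

Because both halves of the sequence carry the same IDs, the model can line up each region of the edited image with the matching region of the original without any extra learned parameters.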

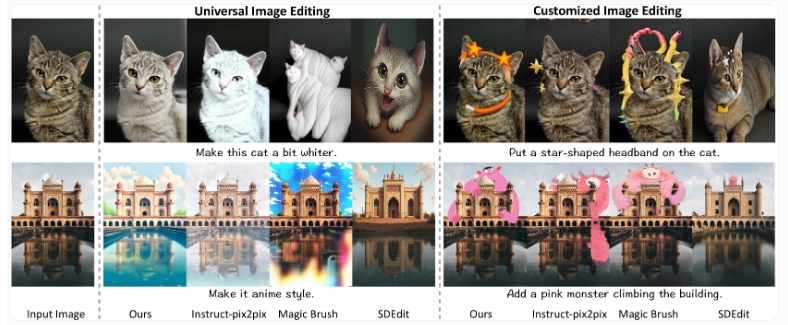

This addresses a key weakness of earlier image editing AI, which either changes the style of the entire image or edits only local areas, and therefore struggles to add new decorative elements while preserving the original perspective and background. PhotoDoodle achieves this without training additional parameters, which greatly improves processing efficiency.

In testing, PhotoDoodle handles instructions ranging from "make the cat whiter" to "add a pink monster climbing up a building." Compared with prior methods, it performs well on benchmarks such as image-text similarity, outperforming its peers in both targeted edits and global image changes.
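Image-text similarity metrics of this kind are commonly computed as the cosine similarity between an image embedding and a text embedding produced by a joint encoder. The sketch below assumes that setup; the three-dimensional vectors are made-up toy values, not real model outputs or results from the PhotoDoodle evaluation.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    return dot / (norm_u * norm_v)

# Toy embeddings (hypothetical values for illustration only).
instruction_embedding = [0.2, 0.9, 0.1]   # text describing the desired result
edited_image_a = [0.25, 0.85, 0.05]       # edit that follows the instruction
edited_image_b = [0.9, 0.1, 0.3]          # edit that drifts away from it

score_a = cosine_similarity(instruction_embedding, edited_image_a)
score_b = cosine_similarity(instruction_embedding, edited_image_b)
print(f"edit A: {score_a:.3f}, edit B: {score_b:.3f}")
```

A higher score means the edited image sits closer to the text description in the shared embedding space.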

Currently, PhotoDoodle needs dozens of image pairs and thousands of training steps to master a new style. The research team is now turning its attention to more efficient single-image training methods, and has released a dataset covering six art styles with more than 300 image pairs. The code has also been open-sourced on GitHub, providing a foundation for future research.

GitHub: https://github.com/showlab/PhotoDoodle