Mistral AI recently launched Saba, a new language model designed to better understand the languages and cultural nuances of the Middle East and South Asia. The launch marks a notable step toward regionally tailored AI, particularly in multilingual processing and cultural adaptability.

Saba has 24 billion parameters. Although it is smaller than many competing models, Mistral AI claims it delivers faster responses and lower costs without sacrificing accuracy. Its architecture may be similar to that of Mistral Small 3. Saba runs efficiently on less powerful hardware and can exceed 150 tokens per second even on a single-GPU setup. This efficiency lets Saba perform well in resource-constrained environments, making it accessible to more users.

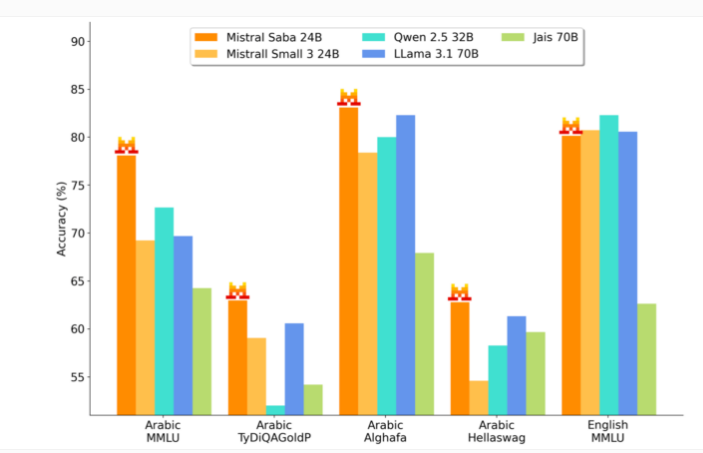

The model is particularly strong in Arabic and in many languages of Indian origin, especially South Indian languages such as Tamil and Malayalam. Mistral AI's benchmarks show that Saba excels in Arabic while remaining comparably capable in English. This multilingual capability gives Saba broad potential for cross-cultural communication and multilingual settings.

Saba is already used in real-world applications, including Arabic-language virtual assistants and specialized tools for the energy, financial markets, and healthcare sectors. Its grasp of local idioms and cultural references lets it generate region-specific content effectively. This cultural understanding helps Saba deliver personalized services and support that meet the diverse needs of users in different regions.

Users can access Saba through a paid API or through local deployment. Like some of Mistral AI's other models, Saba is not open source. This business model allows Mistral AI to keep funding research and development and to offer users higher-quality products and services.

Mistral's benchmarks show that Saba performs well in Arabic while offering comparable English skills | Source: Mistral AI

The launch of Saba reflects growing attention in the AI field to language models built for specific regions. Other organizations are pursuing similar work, including the OpenGPT-X project (which released the Teuken-7B model), OpenAI (which is developing a GPT-4 model tailored to Japanese), and the EuroLingua project (which focuses on European languages). This trend shows that AI developers around the world are actively addressing the challenges of linguistic and cultural diversity and helping bring AI technologies to more of the world.

Traditional large language models are trained mainly on large English-language datasets and can easily miss the nuances of other languages. Saba aims to fill this gap by offering more accurate language processing that fits the local cultural context. This targeted design lets Saba perform well in specific linguistic and cultural environments and provide users with more accurate, personalized services.