A research team from the University of Hong Kong and Tencent recently introduced DiffMM, a multimodal recommendation system designed to improve the accuracy of short video recommendations. DiffMM builds a graph containing user and video information, then applies graph diffusion and contrastive learning to model the interactions between users and videos, producing more personalized and accurate content recommendations.

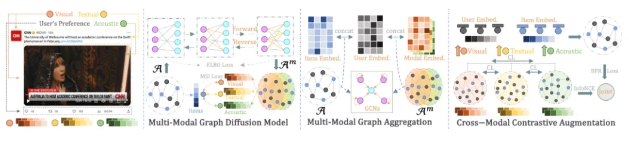

The core technical framework of DiffMM consists of three parts: a multimodal graph diffusion model, multimodal graph aggregation, and cross-modal contrastive augmentation. The multimodal graph diffusion model uses a modality-aware denoising diffusion probabilistic model to integrate the collaborative signals between users and items with multimodal information, mitigating the negative effects observed in traditional multimodal recommendation systems. In addition, by combining a probabilistic graph diffusion paradigm with modality-aware graph diffusion optimization, DiffMM generates and refines modality-aware user-item graphs, further improving recommendation quality.
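To make the diffusion idea concrete, the following is a minimal sketch of the forward (noising) step of a standard denoising diffusion probabilistic model, applied to a single user's row of the user-item interaction matrix. It is not the DiffMM implementation: the linear noise schedule, the function names, and the toy interaction row are all assumptions chosen for illustration, and the modality-aware denoising network that would learn to reverse this process is omitted.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Hypothetical linear noise schedule; the values are illustrative only."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """Compute alpha_bar_t, the running product of (1 - beta_s) for s <= t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def forward_diffuse(x0, t, alpha_bars, rng):
    """Sample x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * noise."""
    a = alpha_bars[t]
    return [
        math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0)
        for x in x0
    ]


rng = random.Random(0)
betas = linear_beta_schedule(50)
alpha_bars = cumulative_alphas(betas)

# Toy interaction row: 1.0 means the user interacted with that video.
user_row = [1.0, 0.0, 0.0, 1.0, 0.0]
noisy_row = forward_diffuse(user_row, t=49, alpha_bars=alpha_bars, rng=rng)
```

In a diffusion-based recommender, a denoising model would be trained to recover the original row from `noisy_row`, and its output would be used to build the refined user-item graph.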

For cross-modal contrastive augmentation, DiffMM introduces modality-aware contrastive views and a contrastive augmentation method that capture the consistency of user interaction patterns across different item modalities, improving the overall performance of the recommendation system. This approach strengthens the system's understanding of user preferences and improves both the diversity and the accuracy of its recommendations.
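As a rough illustration of contrastive learning between two views, the sketch below computes an InfoNCE-style loss, a common contrastive objective. The source does not specify the exact loss DiffMM uses, so the choice of InfoNCE, the temperature value, the function names, and the toy embeddings are all assumptions. Each user's embedding in one view is pulled toward the same user's embedding in the other view and pushed away from other users' embeddings.

```python
import math


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def normalize(v):
    """Scale a vector to unit length (zero vectors are left unchanged)."""
    norm = math.sqrt(dot(v, v)) or 1.0
    return [x / norm for x in v]


def info_nce(view_a, view_b, temperature=0.2):
    """Average InfoNCE loss where row i of view_a is positive with row i of view_b."""
    a = [normalize(v) for v in view_a]
    b = [normalize(v) for v in view_b]
    total = 0.0
    for i, anchor in enumerate(a):
        logits = [dot(anchor, other) / temperature for other in b]
        # Numerically stable log-sum-exp over all candidates.
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_denominator - logits[i]
    return total / len(a)


# Toy user embeddings from two hypothetical modality-aware views.
visual_view = [[1.0, 0.0], [0.0, 1.0]]
text_view_aligned = [[1.0, 0.0], [0.0, 1.0]]
text_view_swapped = [[0.0, 1.0], [1.0, 0.0]]

aligned_loss = info_nce(visual_view, text_view_aligned)
swapped_loss = info_nce(visual_view, text_view_swapped)
```

The loss is small when the two views agree about each user and large when they disagree, which is the property that lets a contrastive objective encourage consistent user representations across modalities.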

The team describes DiffMM's design principles and experimental results in a paper published on arXiv. The paper link is: https://arxiv.org/abs/2406.1178. According to the team, the research offers a new technical paradigm for multimodal recommendation systems, along with theoretical support and practical guidance for content recommendation on short video platforms.

Key Highlights:

⭐ DiffMM, a new paradigm proposed by the University of Hong Kong and Tencent, improves the performance of multimodal recommendation systems.

⭐ DiffMM models the complex relationships between users and videos through graph diffusion and contrastive learning.

⭐ Cross-modal contrastive augmentation improves the accuracy and overall performance of the recommendation system.