With digital media developing rapidly, improving and restoring video quality has become a closely watched research field. As video content production becomes more widespread, users expect ever greater clarity and detail. However, video often becomes blurred and loses detail during generation, transmission, or storage because of compression, noise, or other factors. To address this problem, a research team from Nanyang Technological University and ByteDance jointly developed SeedVR, a video restoration technology that offers a new approach to video processing.

SeedVR's core is a Diffusion Transformer model optimized for the challenges of real-world video restoration. Unlike traditional video restoration methods, SeedVR introduces a shifted window attention mechanism, which significantly improves its ability to process long video sequences. By using variable-sized windows along the spatial and temporal dimensions, SeedVR overcomes the limitations that traditional methods face with high-resolution video. SeedVR can also fix the flickering common in AI-generated videos, and it handles videos of any length.
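To make the windowing idea concrete, here is a minimal Python sketch of how a video latent grid could be split into attention windows that simply become smaller at the boundaries instead of requiring padding. The function names, the grid shape, the temporal window of 4, and the half-window shift are illustrative assumptions, not details taken from SeedVR itself.

```python
from itertools import product


def axis_windows(length, window, shift=0):
    """Split range(length) into consecutive (start, end) windows.

    With a nonzero shift the window grid is offset, so the first window is
    shorter. The last window is shorter whenever the grid does not divide
    the axis evenly. No padding is required in either case.
    """
    if window <= 0:
        raise ValueError("window must be positive")
    shift %= window
    # Cut points: 0, then the (possibly shifted) grid lines, then the end.
    first = shift if shift else window
    cuts = [0] + list(range(first, length, window)) + [length]
    return [(a, b) for a, b in zip(cuts, cuts[1:]) if b > a]


def video_windows(shape, window, shift=(0, 0, 0)):
    """Enumerate 3D windows over a (frames, height, width) grid.

    Each window is a tuple of three (start, end) ranges, one per axis.
    """
    per_axis = [axis_windows(n, w, s) for n, w, s in zip(shape, window, shift)]
    return list(product(*per_axis))


# Hypothetical latent grid: 5 frames, 90 x 160 tokens, 4 x 64 x 64 windows.
regular = video_windows((5, 90, 160), (4, 64, 64))
# The same grid with the windows shifted by half a window along each axis.
shifted = video_windows((5, 90, 160), (4, 64, 64), shift=(2, 32, 32))
```

In this sketch both layouts cover every token exactly once, and alternating between them lets information flow across window borders. Because edge windows are allowed to be smaller, the grid does not have to be a multiple of the window size, which is what makes arbitrary lengths and resolutions workable.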

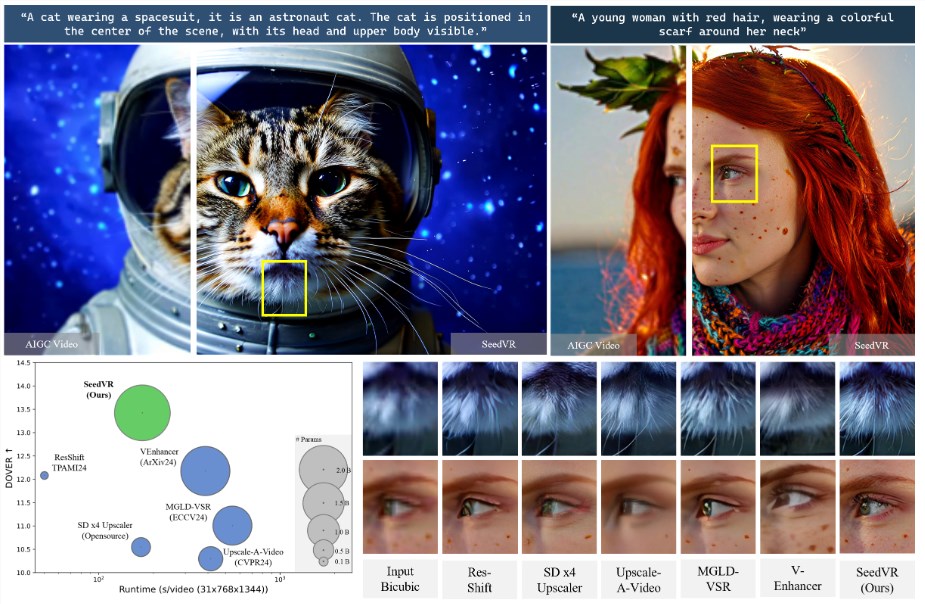

In terms of implementation, SeedVR builds on the MM-DiT base model and modifies it substantially. The research team replaced full self-attention with window attention and expanded the window size from the conventional 8x8 to 64x64. This design lets SeedVR produce clearer and more detailed results on high-resolution video, significantly improving visual quality.
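The trade-off between full self-attention and window attention can be illustrated with a rough count of query-key pairs. The Python sketch below is only back-of-the-envelope arithmetic: the grid shape of 20 x 128 x 128 tokens and the temporal window of 5 are hypothetical values chosen for illustration, and only the 8x8 and 64x64 spatial window sizes come from the text above.

```python
def axis_sizes(length, window):
    """Lengths of the non-overlapping windows along one axis."""
    full, rest = divmod(length, window)
    return [window] * full + ([rest] if rest else [])


def full_attention_pairs(shape):
    """Query-key pairs when every token attends to every other token."""
    tokens = 1
    for n in shape:
        tokens *= n
    return tokens * tokens


def window_attention_pairs(shape, window):
    """Query-key pairs when tokens attend only inside their own window.

    The sum over all 3D windows of (window volume)^2 factorizes into a
    product of per-axis sums of squared window lengths.
    """
    total = 1
    for n, w in zip(shape, window):
        total *= sum(s * s for s in axis_sizes(n, w))
    return total


# Hypothetical latent grid: 20 frames of 128 x 128 tokens.
grid = (20, 128, 128)
full = full_attention_pairs(grid)
small = window_attention_pairs(grid, (5, 8, 8))    # conventional 8x8 windows
large = window_attention_pairs(grid, (5, 64, 64))  # enlarged 64x64 windows
```

Under these assumptions, the 64x64 windows cost more than the 8x8 ones but still need 16 times fewer pairs than full attention on this grid. For a fixed window size the count grows linearly with the number of tokens rather than quadratically, which is why a large window remains affordable on long, high-resolution video.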

Beyond window attention, SeedVR integrates several other techniques to further improve performance. For example, a causal video autoencoder enables the model to understand and generate video content more accurately. In addition, mixed image and video training, combined with a progressive training strategy, gives SeedVR strong learning ability, so that it performs well on both synthetic and real-world videos.
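The article does not describe how the causal video autoencoder is built. As a hedged illustration of what "causal" means along the time axis, the Python sketch below assumes a causal temporal convolution, a common building block in such autoencoders, in which each output frame depends only on the current and earlier frames. The function name, the per-frame scalar values, and the kernel are hypothetical.

```python
def causal_conv1d(frames, kernel):
    """Filter a sequence of per-frame values along time, causally.

    The input is zero-padded on the left only, so output[t] is a weighted
    sum of frames[t - k + 1 .. t] and never looks at future frames.
    """
    k = len(kernel)
    padded = [0.0] * (k - 1) + list(frames)
    return [
        sum(w * padded[t + i] for i, w in enumerate(kernel))
        for t in range(len(frames))
    ]


# One scalar per frame stands in for a full feature map (an assumption
# made to keep the sketch small).
clip = [1.0, 2.0, 3.0, 4.0]
kernel = [0.25, 0.25, 0.5]  # the last weight applies to the current frame

out = causal_conv1d(clip, kernel)

# Changing a later frame must leave all earlier outputs untouched.
edited = causal_conv1d([1.0, 2.0, 3.0, 100.0], kernel)
unchanged_prefix = out[:3] == edited[:3]
```

One practical consequence of this kind of causality is that a single image can be treated as a one-frame video, which fits naturally with training on a mix of images and videos.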

SeedVR performs strongly on several benchmarks, especially on AI-generated videos. Experimental results show that it not only restores detail in the video but also maintains the overall consistency of the picture, giving viewers a more realistic and immersive visual experience.

The launch of SeedVR marks a notable step forward for video restoration technology. It not only offers video creators and consumers higher quality, but also opens up new applications in related industries such as film and television production and security monitoring. It is worth noting that, impressive as SeedVR's technical results are, its code has not yet been publicly released, so follow-up research and applications remain open.

Project introduction: https://iceclear.github.io/projects/seedvr/

Key points:

SeedVR uses a shifted window attention mechanism to improve the processing of long video sequences.

The technology adopts a larger window size, significantly improving restoration quality for high-resolution video.

By combining several modern techniques, SeedVR performs strongly on multiple benchmarks, especially for AI-generated videos.