In recent years, image relighting has advanced rapidly thanks to large-scale datasets and the widespread use of pre-trained diffusion models, making consistent lighting increasingly achievable in image processing. Video relighting, however, has lagged behind because of high training costs and the lack of diverse, high-quality video relighting datasets.

Simply applying an image relighting model to a video frame by frame tends to produce an inconsistent light source and fluctuations in the relit appearance. These show up as flicker in the output video and seriously degrade the viewing experience.

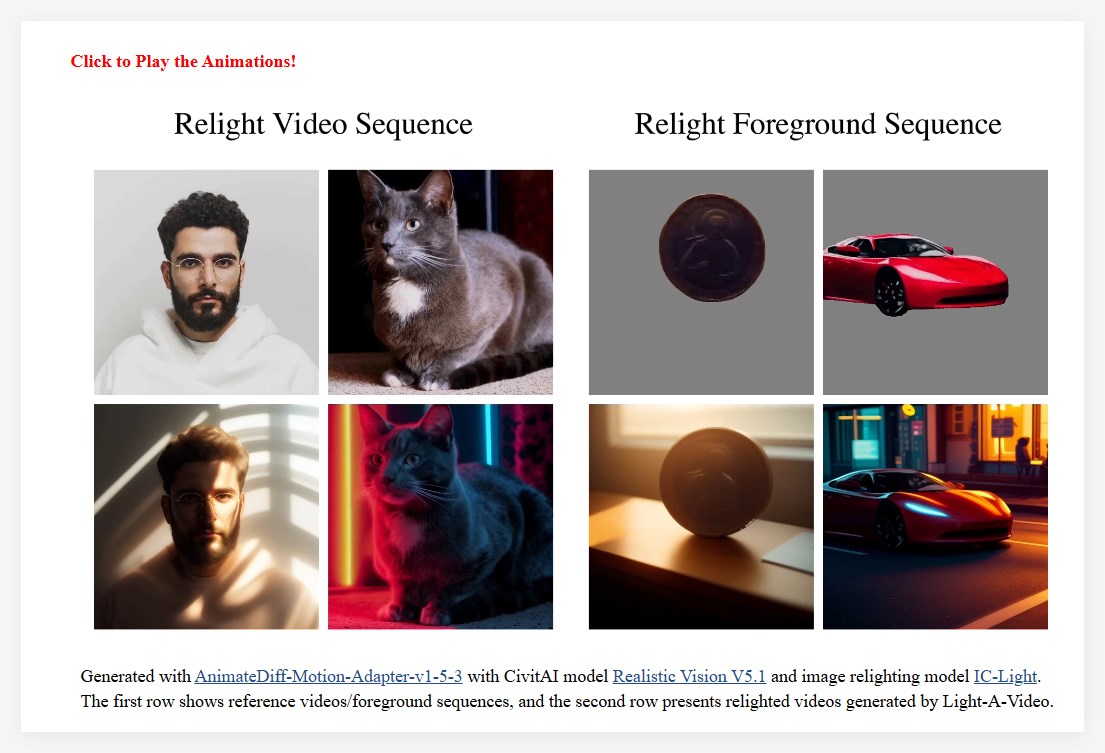

To address these challenges, the research team proposed Light-A-Video, a training-free method for temporally smooth video relighting. Light-A-Video builds on the strengths of an image relighting model and introduces two key modules that significantly improve lighting consistency across frames.

First, the researchers developed the Consistent Light Attention (CLA) module. It strengthens cross-frame interaction within the self-attention layers, which stabilizes the generation of the background light source and avoids abrupt jumps and inconsistencies in lighting between frames.
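To make the idea of cross-frame interaction inside self-attention more concrete, here is a minimal toy sketch in plain Python. It is not the authors' implementation: the function names, the use of frame-averaged keys and values as the shared context, and the `blend` parameter are all assumptions made for illustration, and the tokens are used directly as queries, keys, and values without learned projections.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    dim = len(keys[0])
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, values))
                    for j in range(len(values[0]))])
    return out


def mean_over_frames(frames):
    """Average token features across frames: frames[f][t][d] -> [t][d]."""
    num_frames = len(frames)
    num_tokens = len(frames[0])
    dim = len(frames[0][0])
    return [[sum(frame[t][d] for frame in frames) / num_frames
             for d in range(dim)]
            for t in range(num_tokens)]


def cross_frame_attention(frames, blend=0.5):
    """Blend per-frame self-attention with attention to a shared context.

    ASSUMPTION: the shared context is the frame-averaged token features,
    and `blend` (hypothetical) controls how much every frame is pulled
    toward that shared context.
    """
    shared = mean_over_frames(frames)
    outputs = []
    for tokens in frames:
        own = attention(tokens, tokens, tokens)    # ordinary self-attention
        cross = attention(tokens, shared, shared)  # attends to all frames at once
        outputs.append([[(1 - blend) * o + blend * c for o, c in zip(o_row, c_row)]
                        for o_row, c_row in zip(own, cross)])
    return outputs
```

With `blend=0.0` the function reduces to ordinary per-frame self-attention. Larger values make every frame draw on the same averaged context, which is the intuition behind keeping the generated light source stable from frame to frame.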

Second, based on the physical principle of light transport independence, the research team adopted a linear blending strategy that mixes the appearance of the source video with the relit appearance. This Progressive Light Fusion (PLF) strategy ensures that lighting transitions smoothly over time, further improving the visual coherence of the video.
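The linear blending can be illustrated with a short sketch. The article does not give the exact weighting, so the linearly decreasing schedule below, along with the names `fusion_weight` and `fuse`, is an assumption for illustration only; appearances are represented as flat lists of pixel values to keep the example self-contained.

```python
def fusion_weight(step, num_steps, start=1.0, end=0.0):
    """Hypothetical schedule: weight of the relit appearance at a given step.

    ASSUMPTION: the weight moves linearly from `start` to `end` as
    denoising progresses; the actual schedule is not given in the article.
    """
    if num_steps <= 1:
        return start
    return start + (end - start) * step / (num_steps - 1)


def fuse(source_appearance, relit_appearance, weight):
    """Linear blend of two appearances given as flat lists of pixel values."""
    return [(1 - weight) * s + weight * r
            for s, r in zip(source_appearance, relit_appearance)]


# Example: blend a two-pixel source with its relit version at each of 5 steps.
source = [0.2, 0.8]
relit = [0.6, 0.4]
blended_per_step = [fuse(source, relit, fusion_weight(step, 5)) for step in range(5)]
```

Because the blend is linear and the weight changes gradually, consecutive steps never jump between two very different lighting targets, which is what keeps the transition smooth.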

In experiments, Light-A-Video significantly improved the temporal consistency of relit videos while maintaining high image quality. Its framework first adds noise to the source video and then progressively denoises it with a video diffusion model (VDM). At each step, the predicted noise-free component serves as the consistent target, while the Consistent Light Attention module injects lighting information to turn it into the relit target. Finally, the Progressive Light Fusion strategy combines the two targets into a fused target, which provides a more refined direction for the current denoising step.

The success of Light-A-Video demonstrates the potential of video relighting and offers new ideas and directions for future research. The technique is expected to bring significant changes to fields such as film and television production and virtual reality.

Key points:

Light-A-Video is a training-free technique designed to achieve temporal consistency in video relighting.

It uses the Consistent Light Attention (CLA) module and the Progressive Light Fusion (PLF) strategy to address light source inconsistency in video relighting.

Experiments show that Light-A-Video significantly improves the temporal consistency of relit videos while maintaining high image quality.