Fish Audio has released Fish Agent V0.13B, a new speech-to-speech model that generates and processes speech quickly and accurately and is particularly good at imitating and cloning different voices. The model is built on Qwen-2.5-3B-Instruct and trained on a massive dataset of 200 billion speech and text tokens. Its main innovation is a "semantic-token-free" architecture that processes speech directly at the audio level. This improves speed and efficiency and enables near-"instant" voice cloning and text-to-speech, with a time to first audio of only about 200 milliseconds. The model supports multiple languages and is open source, opening up new possibilities for AI voice technology.

Fish Audio recently released Fish Agent V0.13B, a speech-to-speech model that generates and processes speech efficiently and accurately and is especially good at imitating or cloning different voices. This brings us one step closer to natural, responsive AI voice assistants.

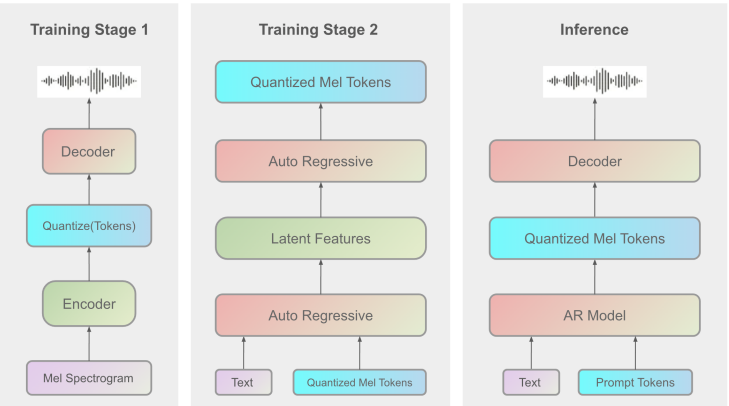

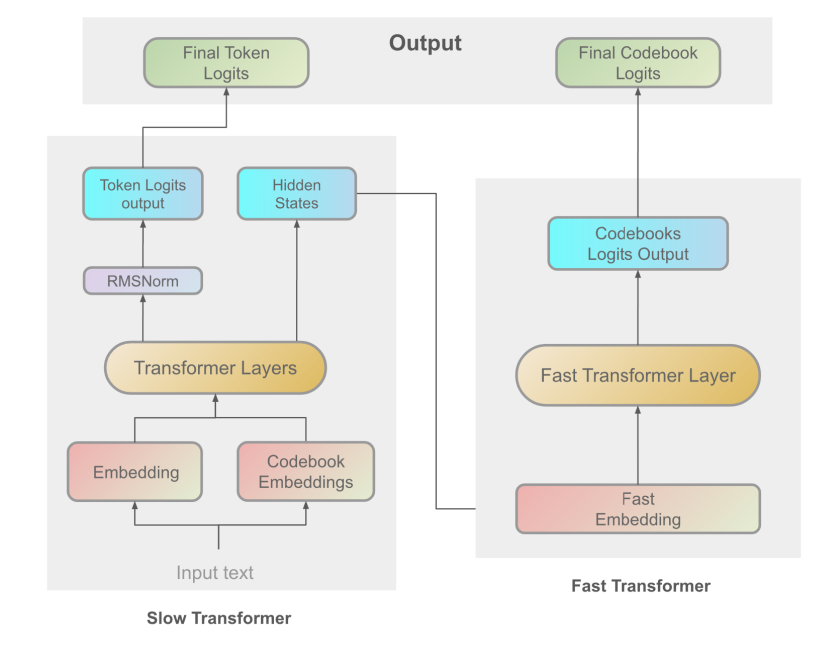

Fish Agent V0.13B is built on Qwen-2.5-3B-Instruct and trained on a massive dataset of 200 billion speech and text tokens. Traditional models first convert speech into semantic tokens; Fish Agent V0.13B instead uses a "semantic-token-free" architecture that processes and generates speech directly at the audio level. Working directly on audio both simplifies the model structure and improves its response speed and efficiency.
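To make "processing speech directly at the audio level" more concrete, here is a toy Python sketch of one common way speech language models handle audio: discrete audio codec codes are mapped into the same token vocabulary as text, so a single model can read and emit both in one sequence. The vocabulary sizes, function names, and offset scheme are assumptions chosen for illustration; the article does not describe Fish Agent's actual tokenizer.

```python
from typing import List, Optional, Tuple

# Hypothetical sizes for illustration only; not Fish Agent's real values.
TEXT_VOCAB_SIZE = 1000
CODEBOOK_SIZE = 256


def audio_to_token_id(code: int) -> int:
    """Map a discrete audio codec index into the shared vocabulary."""
    if not 0 <= code < CODEBOOK_SIZE:
        raise ValueError(f"audio code {code} out of range")
    # Audio tokens live right after the text tokens in the vocabulary.
    return TEXT_VOCAB_SIZE + code


def token_id_to_audio(token_id: int) -> Optional[int]:
    """Return the audio code for an audio token, or None for a text token."""
    if TEXT_VOCAB_SIZE <= token_id < TEXT_VOCAB_SIZE + CODEBOOK_SIZE:
        return token_id - TEXT_VOCAB_SIZE
    return None


def build_sequence(text_ids: List[int], audio_codes: List[int]) -> List[int]:
    """Concatenate a text prompt with audio codes into one token stream."""
    return list(text_ids) + [audio_to_token_id(c) for c in audio_codes]


def split_sequence(seq: List[int]) -> Tuple[List[int], List[int]]:
    """Separate a mixed token stream back into text ids and audio codes."""
    text_ids: List[int] = []
    audio_codes: List[int] = []
    for token_id in seq:
        code = token_id_to_audio(token_id)
        if code is None:
            text_ids.append(token_id)
        else:
            audio_codes.append(code)
    return text_ids, audio_codes


if __name__ == "__main__":
    seq = build_sequence([5, 17, 42], [0, 3, 255])
    print(seq)
    print(split_sequence(seq))
```

Because text and audio share one token space, no separate semantic-token stage is needed between them in this toy setup; a real system would also need a neural codec to turn the audio codes back into a waveform.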

Thanks to this architecture, Fish Agent V0.13B can quickly generate high-quality, natural-sounding speech, enabling near-"instant" voice cloning and text-to-speech with a time to first audio (TTFA) of just 200 milliseconds. This makes it well suited to real-time applications that need fast spoken feedback, such as voice assistants and automated customer service.
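TTFA measures how long a listener waits before the first chunk of audio arrives, not how long the whole utterance takes to synthesize. Below is a minimal Python sketch of that measurement, assuming a streaming model that yields audio chunks; `fake_tts_stream` is a hypothetical stand-in that simulates model delays with `time.sleep` and is not part of any Fish Audio API.

```python
import time
from typing import Iterator, Optional, Tuple


def fake_tts_stream(num_chunks: int, first_delay: float, chunk_delay: float) -> Iterator[bytes]:
    """Hypothetical streaming TTS stand-in: yields silent 20 ms PCM chunks."""
    chunk = b"\x00" * 640  # 320 samples of 16-bit silence (20 ms at 16 kHz)
    time.sleep(first_delay)  # simulated latency before the first audio
    yield chunk
    for _ in range(num_chunks - 1):
        time.sleep(chunk_delay)  # simulated time to produce each later chunk
        yield chunk


def measure_latency(stream: Iterator[bytes]) -> Tuple[float, float, int]:
    """Return (time to first audio, total time, chunk count); times in seconds."""
    start = time.perf_counter()
    ttfa: Optional[float] = None
    count = 0
    for _chunk in stream:
        if ttfa is None:
            # The first chunk has arrived: this is the TTFA.
            ttfa = time.perf_counter() - start
        count += 1
    total = time.perf_counter() - start
    if ttfa is None:
        raise ValueError("stream produced no audio")
    return ttfa, total, count


if __name__ == "__main__":
    ttfa, total, n = measure_latency(fake_tts_stream(5, 0.2, 0.05))
    print(f"TTFA: {ttfa * 1000:.0f} ms, total: {total * 1000:.0f} ms, chunks: {n}")
```

With streaming output, playback can begin after the TTFA while the rest of the audio is still being generated, which is why TTFA rather than total synthesis time determines how responsive a voice assistant feels.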

Fish Agent V0.13B supports multiple languages, including English, Chinese, German, Japanese, French, Spanish, Korean, and Arabic, and was trained on about 700,000 hours of multilingual audio. As a result, it can handle a range of languages and contexts and produce natural pronunciation close to that of a real speaker.

Beyond speech-to-speech generation and text-to-speech conversion, Fish Agent V0.13B offers the following key features:

Zero-shot voice cloning: clones a voice without any additional training.

Compact 3B parameters: at 3 billion parameters, the model is easier to develop with.

Text and audio input: accepts either text or audio as input, giving flexible input options.

Fish Audio has open-sourced Fish Agent V0.13B and provides an early demo that users can try. The release should further advance AI voice technology and open up more possibilities for applications such as voice assistants and virtual humans.

GitHub: https://github.com/fishaudio/fish-speech

Fish Agent Demo: https://huggingface.co/spaces/fishaudio/fish-agent

Model download: https://huggingface.co/fishaudio/fish-agent-v0.1-3b

Technical report: https://arxiv.org/abs/2411.01156

The open-source release of Fish Agent V0.13B is a notable step for AI voice research and applications, and its role in the future development of voice technology is worth watching. I hope more developers will get involved and help advance AI voice technology together.